.NET Rocks! is the longest-running podcast about the .NET programming platform. It has been around so long that the word "podcast" hadn't even been coined when it started. In each show, Carl and Richard (the hosts) talk with an expert for an hour. These shows contain a lot of valuable knowledge and insights, but because they're audio, that knowledge is not easy to extract.

In this tutorial, you'll develop an application that can answer questions about specific .NET Rocks! episodes using AI.

To build this application you'll use a bunch of technologies:

- Semantic Kernel is a polyglot LLM framework by Microsoft. You'll use Semantic Kernel's abstractions to interact with an LLM and store the transcripts in a vector database.

- You'll use AssemblyAI to transcribe the podcasts using the AssemblyAI.SemanticKernel integration.

- The transcripts will be stored in an open-source vector database, Chroma.

- You'll use OpenAI's GPT models to generate responses to your prompts, and their embeddings model to generate embeddings for the transcripts so they can be stored in the vector database.

If you're new to developing with AI, the overview above may be overwhelming. Don't fret; we'll walk you through the process step by step.

What you'll need

- .NET 8 SDK (newer and older versions will work too with small adjustments)

- An OpenAI account and API key

- Git to clone the Chroma DB repository.

- Docker and Docker Compose to run the Chroma DB docker-compose file. Alternatively, you can use a different vector database supported by Semantic Kernel.

- An AssemblyAI account and API key

Sign up for an AssemblyAI account to get an API key for free, or sign into your AssemblyAI account, then grab your API key from the dashboard.

You can find the source code for this project on GitHub.

Set up your Semantic Kernel .NET project

Open a terminal and run the following commands:

```shell
dotnet new console -o DotNetRocksQna
cd DotNetRocksQna
```

Next, add the following NuGet packages:

```shell
dotnet add package AssemblyAI.SemanticKernel
dotnet add package Microsoft.SemanticKernel
dotnet add package Microsoft.SemanticKernel.Connectors.Chroma --version 1.4.0-alpha
dotnet add package Microsoft.Extensions.Configuration
dotnet add package Microsoft.Extensions.Configuration.UserSecrets
dotnet add package Microsoft.Extensions.Logging
```

Here's what the packages are for:

- AssemblyAI.SemanticKernel: The AssemblyAI integration for Semantic Kernel, which contains the TranscriptPlugin. The TranscriptPlugin is a Semantic Kernel plugin that can transcribe audio files.

- Microsoft.SemanticKernel: The Semantic Kernel framework for programming against AI services.

- Microsoft.SemanticKernel.Connectors.Chroma: The connector to configure Chroma DB as the memory for Semantic Kernel.

- Microsoft.Extensions.Configuration: The library for loading and building configuration in .NET.

- Microsoft.Extensions.Configuration.UserSecrets: The extension to add support for user-secrets in .NET configuration.

- Microsoft.Extensions.Logging: A logging library that integrates with Semantic Kernel.

Semantic Kernel has a couple of APIs that are still experimental, which is why Microsoft added compiler warnings to make you aware of them. You won't see these warnings until you use the experimental APIs, but once you do, you'll need to suppress them to be able to build your project.

Edit your DotNetRocksQna.csproj file and add the NoWarn node you see below.

```xml
<PropertyGroup>
  <OutputType>Exe</OutputType>
  <TargetFramework>net8.0</TargetFramework>
  ...
  <NoWarn>SKEXP0003,SKEXP0011,SKEXP0013,SKEXP0022,SKEXP0055,SKEXP0060</NoWarn>
</PropertyGroup>
```

Info

You can find more details about each experimental diagnostic code in Semantic Kernel's EXPERIMENTS.md file.

Save the file and continue.

Next, you'll need to configure some API keys using the .NET secrets manager.

Run the following commands to configure the secrets, replacing <YOUR_OPENAI_API_KEY> with your OpenAI API key and <YOUR_ASSEMBLYAI_API_KEY> with your AssemblyAI API key.

```shell
dotnet user-secrets init
dotnet user-secrets set OpenAI:ApiKey "<YOUR_OPENAI_API_KEY>"
dotnet user-secrets set AssemblyAI:Plugin:ApiKey "<YOUR_ASSEMBLYAI_API_KEY>"
```

Open your project using your preferred IDE or editor and update Program.cs with the following code.

```csharp
using System.Text;
using System.Text.Json;
using AssemblyAI.SemanticKernel;
using Microsoft.Extensions.Configuration;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.Connectors.Chroma;
using Microsoft.SemanticKernel.Connectors.OpenAI;
using Microsoft.SemanticKernel.Memory;
using Microsoft.SemanticKernel.Text;

namespace DotNetRocksQna;

internal class Program
{
    private const string CompletionModel = "gpt-3.5-turbo";
    private const string EmbeddingsModel = "text-embedding-ada-002";
    private const string ChromaDbEndpoint = "http://localhost:8000";

    private static IConfiguration config;
    private static Kernel kernel;
    private static ISemanticTextMemory memory;

    public static async Task Main(string[] args)
    {
        config = CreateConfig();
        kernel = CreateKernel();
        memory = CreateMemory();
    }

    // Load configuration (including the API keys) from the .NET user-secrets store.
    private static IConfiguration CreateConfig() => new ConfigurationBuilder()
        .AddUserSecrets<Program>()
        .Build();

    private static string GetOpenAiApiKey() => config["OpenAI:ApiKey"] ??
        throw new Exception("OpenAI:ApiKey configuration required.");

    // Same key as the AssemblyAI:Plugin:ApiKey secret you set with dotnet user-secrets.
    private static string GetAssemblyAiApiKey() => config["AssemblyAI:Plugin:ApiKey"] ??
        throw new Exception("AssemblyAI:Plugin:ApiKey configuration required.");

    // Kernel with an OpenAI chat completion service.
    private static Kernel CreateKernel()
    {
        var builder = Kernel.CreateBuilder()
            .AddOpenAIChatCompletion(CompletionModel, GetOpenAiApiKey());
        return builder.Build();
    }

    // Semantic memory backed by Chroma DB, using OpenAI to generate the embeddings.
    private static ISemanticTextMemory CreateMemory()
    {
        return new MemoryBuilder()
            .WithChromaMemoryStore(ChromaDbEndpoint)
            .WithOpenAITextEmbeddingGeneration(EmbeddingsModel, GetOpenAiApiKey())
            .Build();
    }
}
```

Here is what the code does:

- CreateConfig builds the configuration from your user-secrets.

- CreateKernel builds a Kernel with an OpenAI chat completion service. You can change the CompletionModel constant to use a different OpenAI GPT model like gpt-4 if you have access to it.

- CreateMemory builds a semantic memory using Chroma DB and OpenAI's embeddings generation model.

The code expects Chroma DB to be running locally at http://localhost:8000.

Make sure the Docker daemon is running and then follow the instructions from the Semantic Kernel Chroma Connector to quickly get a Chroma DB running locally using Git and Docker. Alternatively, you can use any of the supported vector databases listed in the Semantic Kernel docs.

Let's verify that the chat completion service works. Update the Main method with the following code.

```csharp
config = CreateConfig();
kernel = CreateKernel();
memory = CreateMemory();

// verify the chat completion service works
var questionFunction = kernel.CreateFunctionFromPrompt(
    "When did {{$artist}} release '{{$song}}'?"
);

var result = await questionFunction.InvokeAsync(kernel, new KernelArguments
{
    ["song"] = "Blank Space",
    ["artist"] = "Taylor Swift",
});

Console.WriteLine("Answer: {0}", result.GetValue<string>());
```

The code creates a semantic function from your prompt template. The {{$artist}} and {{$song}} placeholders are variables that are filled in from the arguments when you invoke the function.

Once you have a function, you can reuse it and invoke it multiple times. To pass arguments to the function, create KernelArguments and pass them to .InvokeAsync.
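To demystify the {{$variable}} placeholders, here is a deliberately naive sketch of what filling in a prompt template amounts to. RenderTemplate is a hypothetical helper, not Semantic Kernel's template engine (which does more, such as calling functions from a template); it only shows the idea of substituting arguments by name.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical helper: replaces every {{$name}} placeholder with the matching argument.
// Placeholders without a matching argument render as an empty string.
static string RenderTemplate(string template, IReadOnlyDictionary<string, string> values) =>
    Regex.Replace(
        template,
        @"\{\{\s*\$(\w+)\s*\}\}",
        match => values.TryGetValue(match.Groups[1].Value, out var value) ? value : string.Empty);

var songArguments = new Dictionary<string, string>
{
    ["song"] = "Blank Space",
    ["artist"] = "Taylor Swift",
};

var prompt = RenderTemplate("When did {{$artist}} release '{{$song}}'?", songArguments);
Console.WriteLine(prompt); // When did Taylor Swift release 'Blank Space'?
```

The rendered string is what actually gets sent to the chat completion model, which is why the same function can be reused with different arguments.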

Run your application and verify the output.

```shell
dotnet run
```

The output should look similar to this.

```text
Answer: Taylor Swift released the song "Blank Space" on November 10, 2014.
```

Warning

If the application throws an exception at this point, the chat completion service isn't configured correctly. Verify that you configured it with the AddOpenAIChatCompletion method and that your OpenAI API key is set in the user secrets.

But what about one of her more recent songs? Replace Blank Space with Anti-Hero and run it again. The output should look something like this.

```text
Answer: Taylor Swift has not released a song called 'Anti-Hero' as of my knowledge up until September 2021. It's possible that the song you're referring to is by another artist or it may be an unreleased or upcoming track by Taylor Swift.
```

This means the chat completion service works, but as the output explains, the model's training data only goes up to September 2021, so the song "Anti-Hero" by Taylor Swift did not exist yet.

To solve this, let's add some Taylor Swift songs to the Chroma DB, and query the database based on the question.

Update the Main method with the following code.

```csharp
config = CreateConfig();
kernel = CreateKernel();
memory = CreateMemory();

const string question = "When did Taylor Swift release 'Anti-Hero'?";

// verify that embeddings generation and the Chroma DB connector work
const string collectionName = "Songs";

await memory.SaveInformationAsync(
    collection: collectionName,
    text: """
        Song name: Never Gonna Give You Up
        Artist: Rick Astley
        Release date: 27 July 1987
        """,
    id: "nevergonnagiveyouup_ricksastley"
);

await memory.SaveInformationAsync(
    collection: collectionName,
    text: """
        Song name: Anti-Hero
        Artist: Taylor Swift
        Release date: October 24, 2022
        """,
    id: "antihero_taylorswift"
);

// find the single most relevant song for the question
var docs = memory.SearchAsync(collectionName, query: question, limit: 1);
var doc = docs.ToBlockingEnumerable().SingleOrDefault();

Console.WriteLine("Chroma DB result: {0}", doc?.Metadata.Text);

// answer the question with the retrieved song as context
var questionFunction = kernel.CreateFunctionFromPrompt(
    """
    Here is relevant context: {{$context}}
    ---
    {{$question}}
    """);

var result = await questionFunction.InvokeAsync(kernel, new KernelArguments
{
    ["context"] = doc?.Metadata.Text,
    ["question"] = question
});

Console.WriteLine("Answer: {0}", result.GetValue<string>());
```

Run your application and verify the output.

```shell
dotnet run
```

Warning

If the application throws an exception at this point, there's an issue with your Chroma DB or embedding generation service. Verify that Chroma DB is running locally and that the embedding generation service is configured correctly using WithOpenAITextEmbeddingGeneration.

The output should look something like this.

```text
Chroma DB result: Song name: Anti-Hero
Artist: Taylor Swift
Release date: October 24, 2022

Answer: Taylor Swift released the song "Anti-Hero" on October 24, 2022.
```

If the output looks like the above, your embeddings generation service and Chroma DB connector are all working. In the next section, let's explain some of the patterns and concepts you've used above.

RAG, embeddings, vector databases, and semantic functions

RAG

Without realizing it, you just used the Retrieval Augmented Generation pattern, or RAG for short. Put simply, RAG means retrieving relevant information and adding it to your prompt as extra context. RAG solves two common problems with generative AI.

- The models are trained only on public data and up to a certain date. They lack context for recent events.

- The models can only accept a limited number of tokens. If you have large bodies of text you want to use with these models, you will exceed the token limit.

RAG also improves transparency by letting the LLM reference and cite source documents.

However, RAG doesn't specify how the relevant information is queried, but the most common way to retrieve relevant information is by generating embeddings and querying the embeddings using a vector database.

Embeddings

An embedding turns text into a vector of floating point numbers. To figure out how related two pieces of text are, you generate embeddings for both using an embeddings model and calculate the cosine similarity between the two vectors. Using this technique, you can determine that even though the words "dog" and "fog" look similar letter by letter, their meanings aren't similar at all. Meanwhile, "dog" and "cat" are very different words, but their meanings are much closer. Luckily, you don't need to understand the math to take advantage of embeddings.
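The math itself is small. The sketch below computes cosine similarity (the dot product of two vectors divided by the product of their lengths) for tiny three-dimensional vectors. The numbers are invented for illustration; real embeddings come from the embeddings model, and text-embedding-ada-002 returns 1,536 dimensions.

```csharp
using System;

// Cosine similarity: dot(a, b) / (|a| * |b|). Close to 1 means "same direction".
static double CosineSimilarity(double[] a, double[] b)
{
    if (a.Length != b.Length) throw new ArgumentException("Vectors must have the same length.");

    double dot = 0, normA = 0, normB = 0;
    for (var i = 0; i < a.Length; i++)
    {
        dot += a[i] * b[i];
        normA += a[i] * a[i];
        normB += b[i] * b[i];
    }
    return dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
}

// Made-up vectors: "dog" and "cat" point in a similar direction, "fog" does not.
double[] dog = { 0.9, 0.8, 0.1 };
double[] cat = { 0.8, 0.9, 0.2 };
double[] fog = { 0.1, 0.0, 0.9 };

var dogCat = CosineSimilarity(dog, cat);
var dogFog = CosineSimilarity(dog, fog);
Console.WriteLine($"dog vs cat: {dogCat:F2}");
Console.WriteLine($"dog vs fog: {dogFog:F2}");
```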

In this tutorial, you're using OpenAI's embeddings model to generate the embeddings.

Here's a more in-depth explanation of embeddings on our YouTube channel.

Vector databases

Once you have generated the embeddings for your text, you can store them in a vector database. Vector databases are optimized to store and query embeddings. You can query the database with a string and ask for the N documents that are most similar to it.
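Conceptually, a similarity query boils down to scoring every stored vector against the query vector and keeping the top N. Here's a brute-force sketch of that over an in-memory list, with made-up vectors and IDs. Treat it as a mental model only: Chroma uses an approximate nearest-neighbor index (HNSW) rather than scanning every document.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

static double Cosine(float[] a, float[] b)
{
    double dot = 0, na = 0, nb = 0;
    for (var i = 0; i < a.Length; i++)
    {
        dot += a[i] * b[i];
        na += a[i] * a[i];
        nb += b[i] * b[i];
    }
    return dot / (Math.Sqrt(na) * Math.Sqrt(nb));
}

// Brute-force top-N search: score every stored document and keep the best ones.
static List<(string Id, double Score)> Search(
    IReadOnlyList<(string Id, float[] Vector)> documents, float[] queryVector, int limit) =>
    documents
        .Select(d => (d.Id, Score: Cosine(d.Vector, queryVector)))
        .OrderByDescending(hit => hit.Score)
        .Take(limit)
        .ToList();

// Made-up 3-dimensional "embeddings" for three stored documents.
var store = new List<(string Id, float[] Vector)>
{
    ("antihero_taylorswift", new[] { 0.9f, 0.1f, 0.0f }),
    ("nevergonnagiveyouup_ricksastley", new[] { 0.1f, 0.9f, 0.1f }),
    ("blankspace_taylorswift", new[] { 0.7f, 0.2f, 0.3f }),
};

// Pretend this is the embedding of "When did Taylor Swift release 'Anti-Hero'?"
var questionVector = new[] { 0.85f, 0.15f, 0.05f };

var hits = Search(store, questionVector, limit: 2);
foreach (var (id, score) in hits)
    Console.WriteLine($"{id}: {score:F3}");
```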

In this tutorial, you're using the open-source vector database, Chroma DB.

Here's a more in-depth explanation of vector databases on our YouTube channel.

Using RAG, embeddings, and vector databases in Semantic Kernel

In Semantic Kernel you don't need to generate embeddings and interact with the vector databases directly. The ISemanticTextMemory abstraction will automatically generate the embeddings and interact with the vector database for you.

To generate embeddings, Semantic Kernel will use the service you configured using .WithOpenAITextEmbeddingGeneration. To store and query the embeddings, Semantic Kernel will use the vector database (or other types of storage) that you configured using the MemoryBuilder.

In the application you just wrote, you store information about two songs in the vector database using memory.SaveInformationAsync, query the most relevant document using memory.SearchAsync, and add the resulting document to your prompt. Because the document is now part of the prompt, you can ask questions about it even though the model previously didn't know it existed.
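Here's the same save, search, and augment flow as a self-contained sketch with no external services. The Embed function is a hypothetical stand-in for the embeddings model: it counts words from a small fixed vocabulary, so it only captures word overlap, not meaning, and a plain list takes the place of Chroma DB. The point is the shape of the flow, not the quality of the retrieval.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

// Stand-in for an embeddings model: word counts over a hypothetical fixed vocabulary.
string[] vocabulary = { "taylor", "swift", "anti", "hero", "rick", "astley", "never", "gonna" };

double[] Embed(string text)
{
    var words = Regex.Split(text.ToLowerInvariant(), @"[^a-z]+");
    return vocabulary.Select(term => (double)words.Count(w => w == term)).ToArray();
}

static double Cosine(double[] a, double[] b)
{
    var dot = a.Zip(b, (x, y) => x * y).Sum();
    var norm = Math.Sqrt(a.Sum(x => x * x)) * Math.Sqrt(b.Sum(x => x * x));
    return norm == 0 ? 0 : dot / norm;
}

// Like SaveInformationAsync: store each text next to its embedding.
var memory = new List<(string Text, double[] Vector)>();
void Save(string text) => memory.Add((text, Embed(text)));

Save("Song name: Never Gonna Give You Up\nArtist: Rick Astley\nRelease date: 27 July 1987");
Save("Song name: Anti-Hero\nArtist: Taylor Swift\nRelease date: October 24, 2022");

// Like SearchAsync with limit 1: embed the question and take the most similar text.
const string question = "When did Taylor Swift release 'Anti-Hero'?";
var questionVector = Embed(question);
var best = memory.OrderByDescending(m => Cosine(m.Vector, questionVector)).First().Text;

// Augment: put the retrieved text into the prompt as context.
var prompt = $"Here is relevant context: {best}\n---\n{question}";
Console.WriteLine(prompt);
```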

Implement show picker

Now that you verified that everything is working, let's work on the task at hand. Let's add functionality that can load .NET Rocks! shows and allow the user to choose a show to ask questions about.

Create a .NET Rocks! plugin to load the shows

To load the show details, you're going to create a plugin with native functions. Native functions return a result just like semantic functions, but instead of sending prompts to a completion model, they run your own code, such as C#.

Create a file DotNetRocksPlugin.cs with the following code.

```csharp
using System.ComponentModel;
using System.Text.Json;
using System.Text.Json.Serialization;
using System.Web;
using System.Xml;
using Microsoft.SemanticKernel;

namespace DotNetRocksQna;

public class DotNetRocksPlugin
{
    private const string RssFeedUrl = "https://pwop.com/feed.aspx?show=dotnetrocks";

    public const string GetShowsFunctionName = nameof(GetShows);

    [KernelFunction, Description("Get shows of the .NET Rocks! podcast.")]
    public async Task<string> GetShows()
    {
        // download and parse the RSS feed
        using var httpClient = new HttpClient();
        var rssFeedStream = await httpClient.GetStreamAsync(RssFeedUrl);
        var doc = new XmlDocument();
        doc.Load(rssFeedStream);

        // every <item> in the feed is one show
        var nodes = doc.DocumentElement.SelectNodes("//item");
        var shows = new List<Show>();
        for (var showIndex = 0; showIndex < nodes.Count; showIndex++)
        {
            var node = nodes[showIndex];
            // the show number is the ShowNum query string parameter of the show's link
            var link = node["link"].InnerText;
            var queryString = HttpUtility.ParseQueryString(new Uri(link).Query);
            var showNumber = int.Parse(queryString["ShowNum"]);
            var title = node["title"].InnerText;
            var audioUrl = node["enclosure"].Attributes["url"].Value;

            shows.Add(new Show
            {
                Index = showIndex + 1,
                Number = showNumber,
                Title = title,
                AudioUrl = audioUrl
            });
        }

        return JsonSerializer.Serialize(shows);
    }
}

public class Show
{
    [JsonPropertyName("index")] public int Index { get; set; }
    [JsonPropertyName("show_number")] public int Number { get; set; }
    [JsonPropertyName("title")] public string Title { get; set; }
    [JsonPropertyName("audio_url")] public string AudioUrl { get; set; }
}
```

The plugin retrieves the RSS feed of the .NET Rocks! show, extracts the show number, the title, and the audio URL for each show, and converts it to a JSON array.
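If you'd like to check the parsing logic without downloading the feed, the sketch below runs the same steps against a tiny inline RSS document. The XML, the example.com URLs, and show number 1234 are made up. To keep the snippet self-contained, it serializes an anonymous type whose property names mirror the JsonPropertyName attributes on Show.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;
using System.Web;
using System.Xml;

// Made-up RSS snippet shaped like the feed the plugin reads (one <item>).
const string sampleRss = """
    <rss version="2.0">
      <channel>
        <item>
          <title>Sample Show with Sample Guest</title>
          <link>https://www.example.com/default.aspx?ShowNum=1234</link>
          <enclosure url="https://www.example.com/audio/show1234.mp3" type="audio/mpeg" />
        </item>
      </channel>
    </rss>
    """;

var doc = new XmlDocument();
doc.LoadXml(sampleRss);

var shows = new List<object>();
var items = doc.SelectNodes("//item")!;
for (var i = 0; i < items.Count; i++)
{
    var item = items[i]!;
    // The show number lives in the ShowNum query string parameter of <link>.
    var query = HttpUtility.ParseQueryString(new Uri(item["link"]!.InnerText).Query);
    shows.Add(new
    {
        index = i + 1,
        show_number = int.Parse(query["ShowNum"]!),
        title = item["title"]!.InnerText,
        audio_url = item["enclosure"]!.Attributes!["url"]!.Value,
    });
}

var json = JsonSerializer.Serialize(shows);
Console.WriteLine(json);
```

This JSON array is exactly the kind of text the later prompts hand to the model, which is why the property names are short and descriptive.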

In your Program class, update the CreateKernel method with the following code:

```csharp
private static Kernel CreateKernel()
{
    var builder = Kernel.CreateBuilder()
        .AddOpenAIChatCompletion(CompletionModel, GetOpenAiApiKey());
    builder.Plugins.AddFromType<DotNetRocksPlugin>();
    return builder.Build();
}
```

Now CreateKernel also adds the DotNetRocksPlugin to the kernel. The native functions in the plugin can now be invoked directly or from within another semantic function.

Example of calling the native function directly:

```csharp
var result = await kernel.InvokeAsync<string>(
    nameof(DotNetRocksPlugin),
    DotNetRocksPlugin.GetShowsFunctionName
);
Console.WriteLine(result);
```

The output would be the JSON array of shows.

Example of calling the native function from within a semantic function:

```csharp
var function = kernel.CreateFunctionFromPrompt(
    """
    Here are the latest .NET Rocks! shows in JSON:
    {{DotNetRocksPlugin.GetShows}}
    ---
    What is the latest show title?
    """);
var result = await function.InvokeAsync<string>(kernel);
Console.WriteLine(result);
```

The output would be something like: The latest show title is "Azure and GitHub with April Edwards".

Use semantic functions to pick the show

In your Program class, update the Main method with the following code:

```csharp
public static async Task Main(string[] args)
{
    config = CreateConfig();
    kernel = CreateKernel();
    memory = CreateMemory();

    var show = await PickShow();
}
```

Then add the PickShow method with the following code:

```csharp
private static async Task<Show> PickShow()
{
    Console.WriteLine("Getting .NET Rocks! shows\n");

    // call the native function to get the shows as a JSON array
    var shows = await kernel.InvokeAsync<string>(
        nameof(DotNetRocksPlugin),
        DotNetRocksPlugin.GetShowsFunctionName
    );

    // let the model turn the JSON into a readable, numbered list
    var showsList = await kernel.InvokePromptAsync<string>(
        """
        Be succinct.
        ---
        Here is a list of .NET Rocks! shows:
        {{$shows}}
        ---
        Show titles as ordered list and ask me to pick one.
        """,
        new KernelArguments
        {
            ["shows"] = shows
        }
    );

    Console.WriteLine(showsList);
    Console.WriteLine();
    Console.Write("Pick a show: ");
    var query = Console.ReadLine() ?? throw new Exception("You have to pick a show.");

    Console.WriteLine("\nQuerying show\n");

    // let the model select the JSON object that matches the user's free-form query
    var pickShowFunction = kernel.CreateFunctionFromPrompt(
        """
        Select the JSON object from the array below using the given query.
        If the query is a number, it is likely the "index" property of the JSON objects.
        ---
        JSON array: {{$shows}}
        Query: {{$query}}
        """,
        executionSettings: new OpenAIPromptExecutionSettings
        {
            ResponseFormat = "json_object"
        }
    );

    var jsonShow = await pickShowFunction.InvokeAsync<string>(
        kernel,
        new KernelArguments
        {
            ["shows"] = shows,
            ["query"] = query
        }
    );

    var show = JsonSerializer.Deserialize<Show>(jsonShow) ??
               throw new Exception("Failed to deserialize show.");

    Console.WriteLine($"You picked show: \"{show.Title}\"\n");
    return show;
}
```

The PickShow method invokes the native DotNetRocksPlugin.GetShows function and stores the resulting JSON in the shows variable. JSON isn't very user-friendly, so the next semantic function receives that JSON as the shows argument and tells the model to turn the array into an ordered list. The program then prints the ordered list to the console and asks the user to pick a show.

Note that there are no instructions on how to pick the show; the user can enter any string into the console.

For example, all of these answers would work fine as long as the chat completion model can make sense of them:

- show 5

- 5

- the one with Jimmy

- the one about MediatR

- shows.Single(s => s.Title.Contains("MediatR"))

- SELECT * FROM shows WHERE 'title' LIKE "%MediatR%"

The pickShowFunction will figure out which show you meant by your query and return the correct JSON object. That JSON object is then converted back into a Show object.

Warning

The code assumes that the pickShowFunction will return valid JSON, but depending on the model and service, that may not always be the case. Completion models often add an explanatory sentence before or after the JSON, which makes the result invalid JSON. When using OpenAI LLMs, you can pass the ResponseFormat = "json_object" parameter to force the response to be JSON.
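If you work with a model or service that has no JSON mode, one common fallback is to cut the response down to the outermost {...} and check that it parses before deserializing it. The sketch below shows that idea with a hypothetical ExtractJsonObject helper and a made-up model response. It assumes the response contains a single top-level JSON object and is not a robust parser.

```csharp
#nullable enable
using System;
using System.Text.Json;

// Hypothetical helper: keeps the text between the first '{' and the last '}' and
// checks that it parses. Returns null when no valid JSON object is found.
static string? ExtractJsonObject(string modelOutput)
{
    var start = modelOutput.IndexOf('{');
    var end = modelOutput.LastIndexOf('}');
    if (start < 0 || end <= start) return null;

    var candidate = modelOutput[start..(end + 1)];
    try
    {
        // parse only to validate, then release the document's pooled buffers
        JsonDocument.Parse(candidate).Dispose();
        return candidate;
    }
    catch (JsonException)
    {
        return null;
    }
}

// A made-up model response with explanatory sentences around the JSON.
var response = """
    Sure! Here is the show you asked for:
    {"index": 4, "show_number": 1234, "title": "Mediatr with Jimmy Bogard", "audio_url": "https://www.example.com/show.mp3"}
    Let me know if you need anything else.
    """;

var json = ExtractJsonObject(response);
using var parsed = JsonDocument.Parse(json ?? "{}");
var title = parsed.RootElement.TryGetProperty("title", out var t) ? t.GetString() : null;
Console.WriteLine(title);
```

In the tutorial's code, you would pass the extracted string to JsonSerializer.Deserialize<Show> instead of reading a single property.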

Transcribe the show and store transcripts in Chroma

Let's transcribe the selected show using AssemblyAI.

Update the Main method to call TranscribeShow.

```csharp
public static async Task Main(string[] args)
{
    config = CreateConfig();
    kernel = CreateKernel();
    memory = CreateMemory();

    var show = await PickShow();
    await TranscribeShow(show);
}
```

Update CreateKernel to add the AssemblyAIPlugin.

```csharp
private static Kernel CreateKernel()
{
    var builder = Kernel.CreateBuilder()
        .AddOpenAIChatCompletion(CompletionModel, GetOpenAiApiKey())
        .AddAssemblyAIPlugin(config);
    builder.Plugins.AddFromType<DotNetRocksPlugin>();
    return builder.Build();
}
```

Then add the TranscribeShow method.

```csharp
private static async Task TranscribeShow(Show show)
{
    // each show gets its own collection, so an existing collection means it's already transcribed
    var collectionName = $"Transcript_{show.Number}";
    var collections = await memory.GetCollectionsAsync();
    if (collections.Contains(collectionName))
    {
        Console.WriteLine("Show already transcribed\n");
        return;
    }

    Console.WriteLine("Transcribing show");

    // transcribe the audio file with the AssemblyAI TranscriptPlugin
    var transcript = await kernel.InvokeAsync<string>(
        nameof(TranscriptPlugin),
        TranscriptPlugin.TranscribeFunctionName,
        new KernelArguments
        {
            ["INPUT"] = show.AudioUrl
        }
    );

    // split the transcript into lines of up to 128 tokens, then paragraphs of up to 1024 tokens
    var paragraphs = TextChunker.SplitPlainTextParagraphs(
        TextChunker.SplitPlainTextLines(transcript, 128),
        1024
    );

    // store each paragraph as a separate document in Chroma DB
    for (var i = 0; i < paragraphs.Count; i++)
    {
        await memory.SaveInformationAsync(collectionName, paragraphs[i], $"paragraph{i}");
    }
}
```

The TranscribeShow method transcribes the show and stores the transcript in a separate Chroma collection per show. If a collection for the show already exists in the database, the show has already been transcribed, so the method returns early. To transcribe the show, it invokes the native TranscriptPlugin.Transcribe function with the INPUT argument set to show.AudioUrl.

The result of the function will be the transcript text.

.NET Rocks! shows are usually around an hour long, so the transcript is too long to add to your prompts as a whole. Instead of storing the whole transcript as a single document, you split it up into lines and then into paragraphs. TextChunker.SplitPlainTextLines and TextChunker.SplitPlainTextParagraphs split the text by the given maximum number of tokens, so each paragraph will be 1024 tokens or less. Each paragraph is stored in Chroma, so when you query the database later, every document you get back will also be 1024 tokens or less. This is important to avoid exceeding the model's maximum number of tokens.
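The sketch below shows the idea behind packing lines into size-limited paragraphs. It is not TextChunker's implementation: as a simplifying assumption it counts whitespace-separated words instead of tokens, ChunkLines is a hypothetical helper, and the budget of 10 is tiny so the result is easy to inspect.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Rough stand-in for a tokenizer: count whitespace-separated words.
static int CountWords(string text) =>
    text.Split(' ', StringSplitOptions.RemoveEmptyEntries).Length;

// Greedily pack lines into paragraphs that stay within maxWordsPerParagraph.
// A single line longer than the budget becomes its own (oversized) paragraph.
static List<string> ChunkLines(IEnumerable<string> lines, int maxWordsPerParagraph)
{
    var result = new List<string>();
    var current = new List<string>();
    var currentWords = 0;

    foreach (var line in lines)
    {
        var words = CountWords(line);
        if (current.Count > 0 && currentWords + words > maxWordsPerParagraph)
        {
            result.Add(string.Join(" ", current));
            current.Clear();
            currentWords = 0;
        }
        current.Add(line);
        currentWords += words;
    }

    if (current.Count > 0) result.Add(string.Join(" ", current));
    return result;
}

// Made-up transcript lines.
var transcriptLines = new[]
{
    "Hello and welcome to the show.",
    "Today we are talking about chunking text.",
    "Each chunk should fit in the prompt.",
    "Let's get started.",
};

var paragraphs = ChunkLines(transcriptLines, maxWordsPerParagraph: 10);
for (var i = 0; i < paragraphs.Count; i++)
    Console.WriteLine($"paragraph{i}: {paragraphs[i]}");
```

Packing whole lines (rather than cutting mid-line) keeps sentences intact, which tends to make each stored paragraph more useful as context.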

Implement questions and answers using RAG

For the last part, you'll ask the user for a question via the console, query the vector database for relevant paragraphs of the transcript, send the prompt to the completion model, and print out the result.

Update the Main method to call the AskQuestions method.

```csharp
public static async Task Main(string[] args)
{
    config = CreateConfig();
    kernel = CreateKernel();
    memory = CreateMemory();

    var show = await PickShow();
    await TranscribeShow(show);
    await AskQuestions(show);
}
```

Then, add the AskQuestions method.

```csharp
private static async Task AskQuestions(Show show)
{
    var collectionName = $"Transcript_{show.Number}";
    var askQuestionFunction = kernel.CreateFunctionFromPrompt(
        """
        You are a Q&A assistant who will answer questions about the transcript of the given podcast show.
        ---
        Here is context from the show transcript: {{$transcript}}
        ---
        {{$question}}
        """);

    while (true)
    {
        Console.Write("Ask a question: ");
        var question = Console.ReadLine();
        // stop when the user enters an empty line (or input ends)
        if (string.IsNullOrWhiteSpace(question)) break;

        Console.WriteLine("Generating answers");

        // retrieve up to 3 relevant transcript paragraphs from Chroma DB
        var promptBuilder = new StringBuilder();
        await foreach (var searchResult in memory.SearchAsync(collectionName, question, limit: 3))
        {
            promptBuilder.AppendLine(searchResult.Metadata.Text);
        }

        if (promptBuilder.Length == 0) Console.WriteLine("No context retrieved from transcript vector DB.\n");

        // augment the prompt with the retrieved paragraphs and ask the model
        var answer = await askQuestionFunction.InvokeAsync<string>(kernel, new KernelArguments
        {
            ["transcript"] = promptBuilder.ToString(),
            ["question"] = question
        });

        Console.WriteLine(answer);
    }
}
```

The AskQuestions method creates a semantic function that answers a question and can be augmented with relevant pieces of the transcript. When the user asks a question, the question itself is used to query the vector database, which returns up to 3 documents. The documents are combined using a StringBuilder and passed as the transcript argument, and the question is passed as the question argument. The method then invokes askQuestionFunction and prints the result to the console.
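SearchAsync also takes a minimum relevance score (minRelevanceScore), and results that score below it aren't returned, which is one way to end up with the "No context retrieved" message. The sketch below mimics that retrieval step over made-up (score, text) pairs: drop results under a threshold, keep the best three, and join them the way AskQuestions does with its StringBuilder. The 0.7 threshold and the sample scores are assumptions for illustration.

```csharp
using System;
using System.Linq;
using System.Text;

// Made-up search results: relevance score plus a transcript excerpt.
var results = new (double Score, string Text)[]
{
    (0.82, "Excerpt A"),
    (0.65, "Excerpt B"),
    (0.91, "Excerpt C"),
    (0.74, "Excerpt D"),
    (0.71, "Excerpt E"),
};

const double minRelevanceScore = 0.7; // assumed threshold
const int limit = 3;

// Keep the best results at or above the threshold, then join them into one context string.
var context = new StringBuilder();
foreach (var (_, text) in results
             .Where(r => r.Score >= minRelevanceScore)
             .OrderByDescending(r => r.Score)
             .Take(limit))
{
    context.AppendLine(text);
}

Console.WriteLine(context.Length == 0
    ? "No context retrieved from transcript vector DB."
    : context.ToString());
```

Lowering the threshold returns more (but less relevant) context; raising it makes empty results more likely.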

Run the application again and try it out.

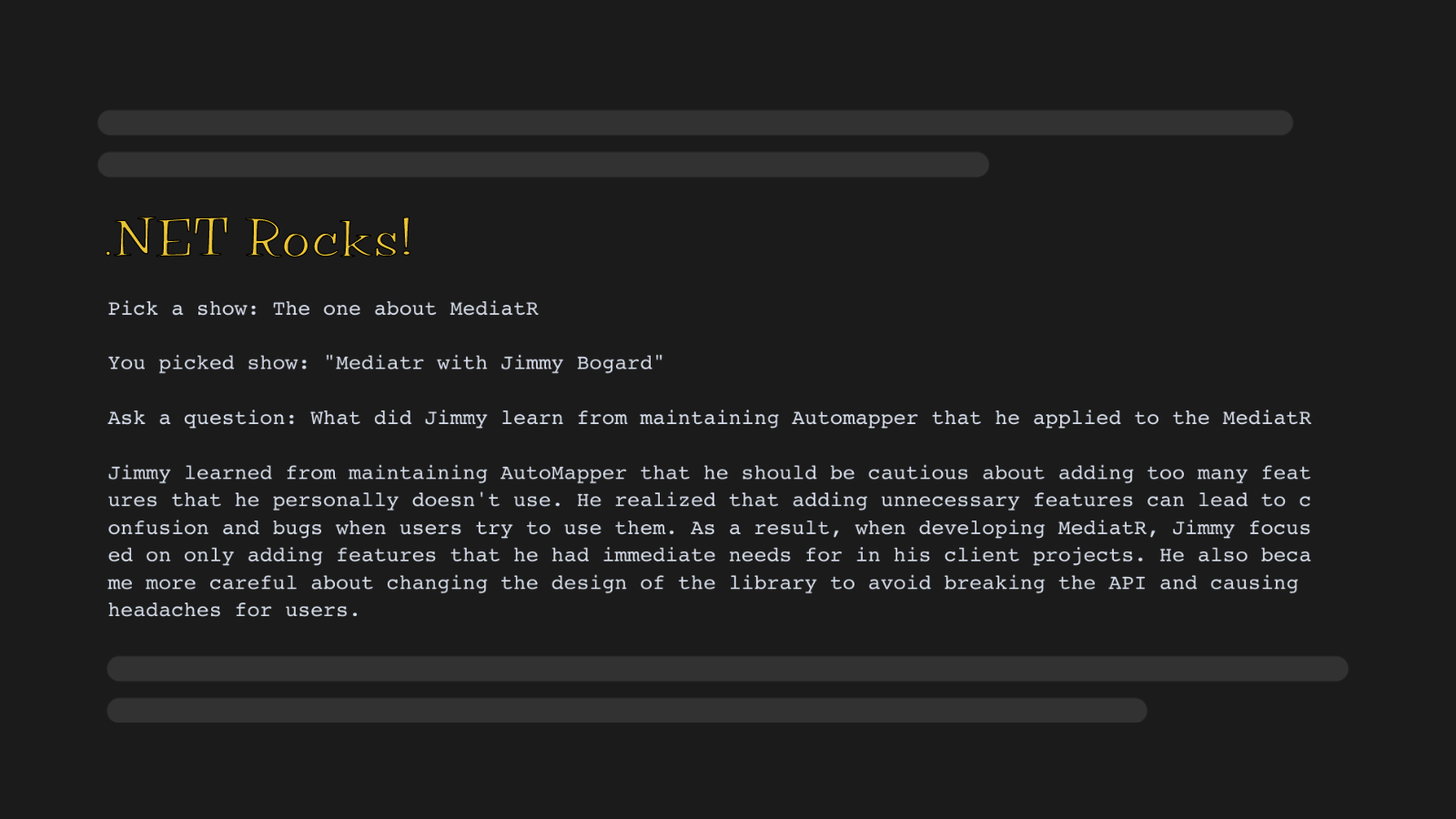

When I ran the application, I answered the prompt as shown and got the below output.

```text
Getting .NET Rocks! shows

Here is a list of .NET Rocks! shows:
1. Azure and GitHub with April Edwards
2. Data Science and UX with Grishma Jena
3. IoT Development using Particle Photon with Colleen Lavin
4. Mediatr with Jimmy Bogard
5. Applied Large Language Models with Brian MacKay
6. Minimal Architecture with Jeremy Miller
7. Chocolatey in 2023 with Gary Ewan Park
8. Leveling up your Architecture Game with Thomas Betts
9. The Ethics of Large Language Models with Amber McKenzie
10. Modular Monoliths with Layla Porter
11. Multi-Model Data Stores with Ted Neward
12. Fluent Assertions with Dennis Doomen
13. Scaling a Monolith with Derek Comartin
14. Going Full Time on Open Source with Shaun Walker
15. Azure Developer CLI with Savannah Ostrowski
16. Building Apps using OpenAI with Mark Miller
17. OpenTelemetry with Laïla Bougriâ
18. No Free Lunch in Machine Learning with Jodie Burchell
19. PHP and WebAssembly with Jakub Míšek
20. Immutable Architectures with Michael Perry

Please pick one of the shows from the list.

Pick a show: The one about MediatR

You picked show: "Mediatr with Jimmy Bogard"

Ask a question: What did Jimmy learn from maintaining Automapper that he applied to the MediatR

Jimmy learned from maintaining AutoMapper that he should be cautious about adding too many features that he personally doesn't use. He realized that adding unnecessary features can lead to confusion and bugs when users try to use them. As a result, when developing MediatR, Jimmy focused on only adding features that he had immediate needs for in his client projects. He also became more careful about changing the design of the library to avoid breaking the API and causing headaches for users.
```

Warning

In this application, we're focusing on the happy path, but with generative AI, it's hard to predict what the output will be, and thus errors easily surface. You can modify the prompts to get more consistent results, or validate and extract relevant information using regular expressions, or double down on AI by using another prompt to extract data from the generated results. The generative AI space is rapidly evolving and the industry as a whole is still trying to figure out how to best program against these models.

LeMUR, our production-ready LLM framework for spoken audio

You just developed an application that can answer questions about podcast shows using the RAG pattern. If you try asking a bunch of different questions, you'll probably notice that the response isn't always great. In some cases, the vector database returns no documents at all because it couldn't find anything relevant to the query. This is a common challenge.

There are many ways to optimize the RAG pattern, like chunking the transcript into different sizes, letting the chunks overlap each other, using LLMs to generate additional information to put in the database, or using LLMs to summarize sections of the transcript and putting those summaries in the database.
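Overlapping chunks are one of the simpler optimizations to try: each chunk repeats the end of the previous one, so a sentence that straddles a chunk boundary still appears whole in at least one chunk. Here's a minimal sliding-window sketch over words. ChunkWithOverlap is a hypothetical helper, and counting words instead of tokens is a simplifying assumption.

```csharp
using System;
using System.Collections.Generic;

// Sliding window over words: each chunk has up to chunkSize words and starts
// (chunkSize - overlap) words after the previous one, so neighbors share `overlap` words.
static List<string> ChunkWithOverlap(string text, int chunkSize, int overlap)
{
    if (chunkSize <= 0 || overlap < 0 || overlap >= chunkSize)
        throw new ArgumentException("Require chunkSize > 0 and 0 <= overlap < chunkSize.");

    var words = text.Split(' ', StringSplitOptions.RemoveEmptyEntries);
    var result = new List<string>();
    var step = chunkSize - overlap;

    for (var start = 0; start < words.Length; start += step)
    {
        var length = Math.Min(chunkSize, words.Length - start);
        result.Add(string.Join(" ", words, start, length));
        if (start + length >= words.Length) break; // this window reached the end of the text
    }
    return result;
}

var chunks = ChunkWithOverlap("one two three four five six seven eight nine ten", chunkSize: 4, overlap: 2);
foreach (var chunk in chunks) Console.WriteLine(chunk);
```

The trade-off is storage and embedding cost: with an overlap of half the chunk size, you store roughly twice as many chunks.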

It takes a lot of engineering and maintenance to create production-grade LLM applications.

That's why we built LeMUR, our production-grade LLM framework for spoken audio. Use LeMUR to apply common LLM tasks against your audio data such as summarizing, Q&A, extracting action items, and running any custom prompt.