In this tutorial, you'll learn how to create an application that can answer your questions about an audio file, using LangChain.js and AssemblyAI's new integration with LangChain.

LangChain is a framework for developing applications using Large Language Models (LLMs). LangChain provides components that are commonly needed to build integrations with LLMs. However, LLMs only operate on textual data and do not understand what is said in audio files. That's why we contributed our own integration to LangChain.js, so developers can use AssemblyAI's transcription models to transcribe audio files into text that LangChain can work with.

Note

For now, the AssemblyAI integration is only for LangChain.js, the TypeScript/JavaScript version of LangChain. However, we are working on the equivalent integration for Python LangChain.

Prerequisites

To follow along, you'll need the following:

- Node.js (version 18 or above)

- An OpenAI account and an OpenAI API key

- An AssemblyAI account and API key

Sign up for an AssemblyAI account to get an API key for free, or sign in to your AssemblyAI account, then grab your API key from the dashboard.

Set up your TypeScript Node.js project

Next, if you don't already have a Node.js application, create a new directory, run npm init, and accept all the defaults.

```shell
mkdir transcript-langchain
cd transcript-langchain
npm init
```

This application will use TypeScript, but you can skip the following step if you want to use JavaScript.

Install the typescript package and initialize your TypeScript project:

```shell
npm install --save-dev typescript
npx tsc --init
```

Create a file named index.ts and add the following code:

```typescript
console.log("Hello World!");
```

Then, run tsc to compile TypeScript to JavaScript:

```shell
npx tsc
```

A new file called index.js appears, containing the JavaScript code generated from your TypeScript code.

Run the code using node:

```shell
node index.js
# Output: Hello World!
```

Configure environment variables

The application you're building needs your OpenAI API key and your AssemblyAI API key. A common way to provide them is to store them in a .env file and load that file at the start of your application.

First, create a new .env file with the following contents:

```
OPENAI_API_KEY=<YOUR_OPENAI_API_KEY>
ASSEMBLYAI_API_KEY=<YOUR_ASSEMBLYAI_API_KEY>
```

Replace <YOUR_OPENAI_API_KEY> with your OpenAI API key, and <YOUR_ASSEMBLYAI_API_KEY> with your AssemblyAI API key.

Then, install the dotenv package and the Node.js type definitions:

```shell
npm install --save dotenv
npm install --save-dev @types/node
```

Update the index.ts code to load the environment variables and print them to the console:

```typescript
import 'dotenv/config';

console.log(process.env);
```

Finally, run the code to see the result:

```shell
npx tsc && node index.js
```

You'll see all your environment variables printed out, including the OPENAI_API_KEY and ASSEMBLYAI_API_KEY.
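To show what the `import 'dotenv/config'` line does for you, here is a simplified sketch of the idea: read KEY=VALUE lines from the .env file and copy them into the environment, leaving any variable that is already set untouched (dotenv's default behavior). The functions `parseEnvFile` and `applyEnv` are hypothetical illustrations, not part of dotenv's API, and they skip features the real package supports, such as quoted and multiline values.

```typescript
// Hypothetical, simplified illustration of parsing a .env file's text.
// The real dotenv package also handles quotes, inline comments, multiline values, etc.
function parseEnvFile(text: string): Record<string, string> {
  const parsed: Record<string, string> = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    // Skip blank lines and full-line comments.
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    // Skip lines that are not KEY=VALUE pairs.
    if (eq <= 0) continue;
    const key = line.slice(0, eq).trim();
    const value = line.slice(eq + 1).trim();
    parsed[key] = value;
  }
  return parsed;
}

// Copy parsed values into an environment object, but keep variables that are
// already set, mirroring dotenv's default of not overriding existing values.
function applyEnv(
  parsed: Record<string, string>,
  env: Record<string, string | undefined>,
): void {
  for (const key of Object.keys(parsed)) {
    if (env[key] === undefined) {
      env[key] = parsed[key];
    }
  }
}
```

Not overriding existing variables means a key set in your shell or deployment environment wins over the value in .env.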

Warning

Never check your API keys or other secrets into source control, whether by hard-coding them or by accidentally committing the .env file (adding .env to your .gitignore file helps prevent this). Keep those secrets safe and secure!
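Printing the whole environment is fine for a quick check, but it echoes your secrets to the console. In the finished application, you may prefer to fail fast when a key is missing without printing any values. The sketch below assumes a hypothetical `requireEnv` helper; it is not part of dotenv or LangChain.

```typescript
// Hypothetical helper: return the requested keys from an environment object,
// throwing if any of them is missing or empty. Secret values are never printed;
// only the names of missing keys appear in the error message.
function requireEnv(
  env: Record<string, string | undefined>,
  keys: readonly string[],
): Record<string, string> {
  const missing = keys.filter((key) => !env[key]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  const result: Record<string, string> = {};
  for (const key of keys) {
    result[key] = env[key] as string;
  }
  return result;
}
```

For example, after `import 'dotenv/config';` you could call `requireEnv(process.env, ["OPENAI_API_KEY", "ASSEMBLYAI_API_KEY"])` instead of `console.log(process.env)`.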

Add LangChain.js and create a Q&A chain

First, add LangChain.js using NPM or your preferred package manager:

```shell
npm install --save langchain
```

Next, update the index.ts code with the following question-answering (Q&A) sample:

```typescript
import 'dotenv/config';
import { OpenAI } from "langchain/llms/openai";
import { loadQAStuffChain } from 'langchain/chains';
import { Document } from 'langchain/document';

(async () => {
  // Connect to OpenAI; the API key is read from OPENAI_API_KEY.
  const llm = new OpenAI({});
  // Create a Q&A chain that "stuffs" all input documents into a single prompt.
  const chain = loadQAStuffChain(llm);

  // A hard-coded document that does not contain the answer.
  const docs = [new Document({
    pageContent: "A runner's knee is something I don't know about."
  })];

  const response = await chain.call({
    input_documents: docs,
    question: "What is a runner's knee?",
  });

  console.log(response.text);
})();
```

The code above connects to an OpenAI LLM, creates a Q&A chain, and calls that chain with a hard-coded document and the question "What is a runner's knee?".

If you run the code using npx tsc && node index.js, the output will be something like "I don't know.", because the hard-coded document used as input doesn't contain the answer.
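The "stuff" in `loadQAStuffChain` refers to stuffing every input document into one prompt for the LLM, which is why the answer can only be as good as the documents you pass in. The sketch below illustrates that idea with a hypothetical `buildStuffPrompt` function and a minimal `SimpleDoc` type; the prompt wording is an assumption for illustration and is not LangChain's actual template.

```typescript
// Minimal stand-in for LangChain's Document: only the text content is used here.
interface SimpleDoc {
  pageContent: string;
}

// Hypothetical illustration of the "stuff" strategy: join every document's
// text into one context block and place the question after it.
function buildStuffPrompt(docs: SimpleDoc[], question: string): string {
  const context = docs.map((doc) => doc.pageContent).join("\n\n");
  return [
    "Answer the question using only the context below.",
    "If the context does not contain the answer, say that you don't know.",
    "",
    context,
    "",
    `Question: ${question}`,
    "Answer:",
  ].join("\n");
}
```

Because everything goes into a single prompt, this approach works only while the combined text fits within the model's context window.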

In the upcoming section, you'll use AssemblyAI's transcript loader to load the transcript of an audio file as a document instead of the hard-coded one above.

Load audio transcript

The langchain package already includes the new document loaders for AssemblyAI's transcription models. To use the loaders, import the AudioTranscriptLoader from langchain/document_loaders/web/assemblyai.

import 'dotenv/config';

import { OpenAI } from "langchain/llms/openai";

import { loadQAStuffChain } from 'langchain/chains';

import { AudioTranscriptLoader } from 'langchain/document_loaders/web/assemblyai';

(async () => {

const llm = new OpenAI({});

const chain = loadQAStuffChain(llm);

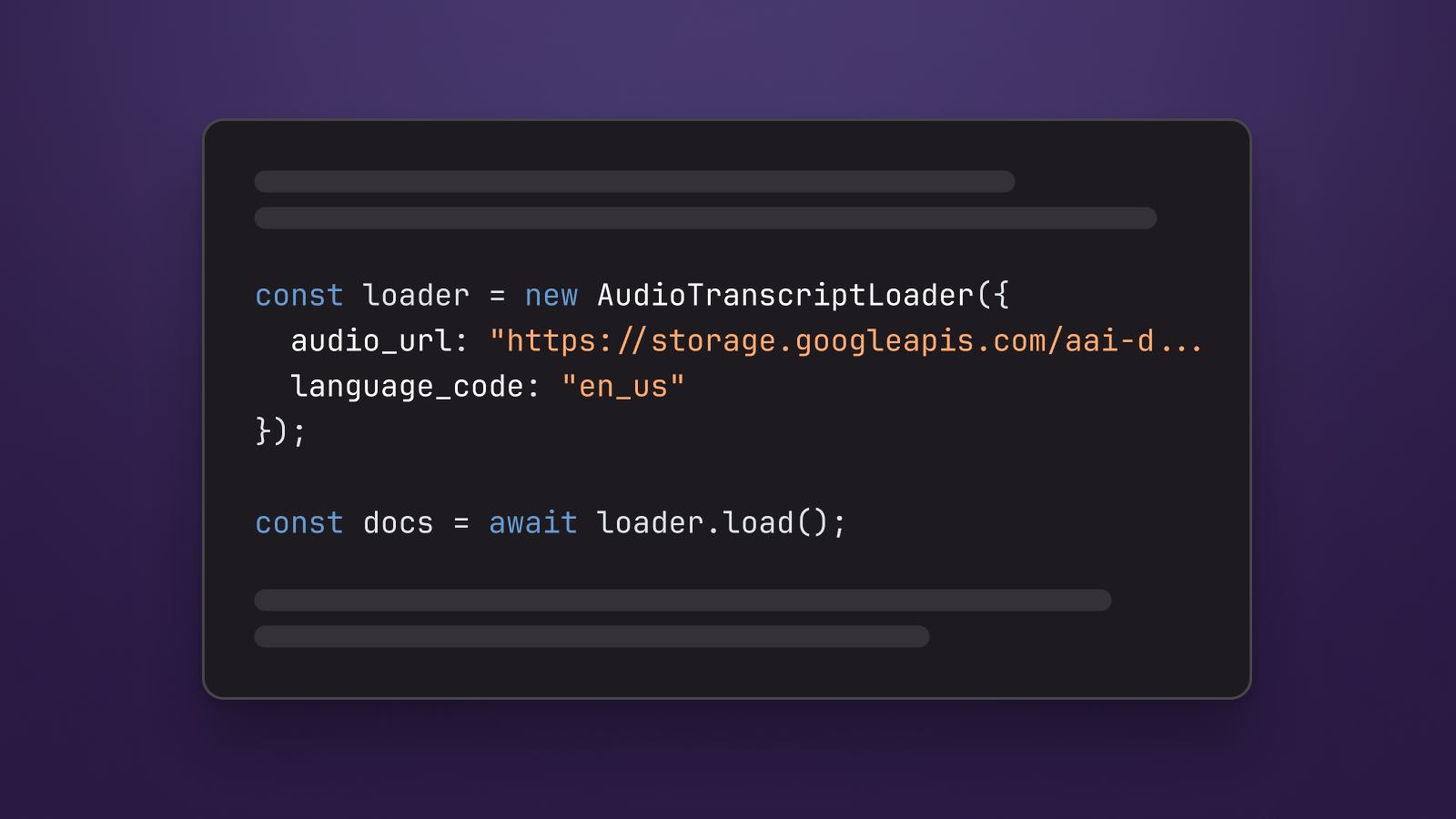

const loader = new AudioTranscriptLoader({

// You can also use a local path to an audio file, like ./sports_injuries.mp3

audio_url: "https://storage.googleapis.com/aai-docs-samples/sports_injuries.mp3",

language_code: "en_us"

});

const docs = await loader.load();

const response = await chain.call({

input_documents: docs,

question: "What is a runner's knee?",

});

console.log(response.text);

})();

The AudioTranscriptLoader uses AssemblyAI's API to transcribe the audio file passed to audio_url. The AudioTranscriptLoader.load() function creates an array with a single document containing the transcript text and transcript metadata.

Note

You can also pass a local file path to the audio_url property, and the loader will upload the file to AssemblyAI's CDN for you.

Now that the AudioTranscriptLoader has loaded the transcript from the sports injuries audio file, the LLM can give a well-informed answer, which looks something like this:

Runner's knee is a condition characterized by pain behind or around the kneecap. It is caused by overuse muscle imbalance and inadequate stretching. Symptoms include pain under or around the kneecap, pain when walking.
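Transcribing audio takes time, so rather than loading the transcript again for every question, you can load the documents once and reuse them. The sketch below assumes a hypothetical `askAll` helper and a hypothetical `QAChain` structural type that covers only the `call` method used in this tutorial; the real LangChain chain type is richer, and we have not checked compatibility across every LangChain.js version.

```typescript
// Hypothetical structural type: only the part of a LangChain chain used here.
interface QAChain {
  call(values: Record<string, unknown>): Promise<Record<string, unknown>>;
}

// Hypothetical helper: ask several questions about the same documents,
// one after another, and collect the answers in order.
async function askAll(
  chain: QAChain,
  docs: unknown[],
  questions: string[],
): Promise<string[]> {
  const answers: string[] = [];
  for (const question of questions) {
    const response = await chain.call({ input_documents: docs, question });
    // The Q&A chain in this tutorial returns its answer under the `text` key.
    const text = response.text;
    answers.push(typeof text === "string" ? text : "");
  }
  return answers;
}
```

For example, after `const docs = await loader.load();` you could call `await askAll(chain, docs, ["What is a runner's knee?", "What causes runner's knee?"])`. Asking sequentially keeps the example simple and avoids sending many requests to OpenAI at once.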

Note

LeMUR

AssemblyAI also has its own pre-built solution called LeMUR (Leveraging Large Language Models to Understand Recognized Speech). With LeMUR you can use an LLM to perform tasks over large amounts of long audio files. You can learn more about using the LeMUR API in the docs.

Conclusion

In this tutorial, you learned about the new AssemblyAI integration that was added to LangChain.js.

You created a Q&A application that can answer questions about an audio file by leveraging AssemblyAI and LangChain.js.