The rise of Large Language Models (LLMs) is revolutionizing how we interact with technology. Today, ChatGPT and other LLMs can perform cognitive tasks involving natural language that were unimaginable a few years ago.

The exploding popularity of conversational AI tools has also raised serious concerns about AI safety. From the large-scale proliferation of biased or false information to risks of psychological distress for chatbot users, the potential for misuse of language models is a subject of intense debate.

Much of current AI research aims to design LLMs that seek helpful, truthful, and harmless behavior. One such method, Reinforcement Learning from Human Feedback (RLHF), is currently leading the charge. Many companies, including OpenAI, Google, and Meta, have incorporated RLHF into their AI models, hoping to provide a more controlled user experience.

Unfortunately, while RLHF does offer some level of control, it won’t be the ultimate silver bullet for aligning AI systems with human values. Indeed, RLHF tuning may negatively affect a model's ability to perform specific tasks. Despite this, RLHF remains the industry's go-to solution for achieving alignment in LLMs.

In this article, we'll explore how RLHF works, how it truly impacts a language model's behavior, and discuss the current limitations (see section below) of this approach. This piece should be helpful to anyone who wants a better understanding of LLMs and the challenges in making them safe and reliable. While some familiarity with LLM terminology will be beneficial, we have aimed to make this article accessible to a broad audience.

RLHF as Human Preference Tuning

RLHF is about teaching an AI model to understand human values and preferences. How is it capable of doing this?

Let’s imagine having two distinct language models:

- A base model that’s only been trained to predict the next word on text sequences from a large and diverse text dataset, such as the whole internet. It can produce fluent responses on a wide range of topics. Still, the tone of the answer varies depending on the subject, the exact wording of the question (prompt), and some stochasticity (randomness).

- A second language model, call it the preference model. The preference model can determine what humans prefer by assigning scores to different responses from the base model.

The goal now is to use the preference model to alter the behavior of the base model in response to a prompt. The underlying idea is straightforward: Using the preference model, the base model is refined iteratively (via a fine-tuning process), changing its internal text distribution to prioritize responses favored by humans.

In a way, the preference model serves as a means to directly introduce a "human preference bias” into the base model.

The first step is to create a dataset of examples reflecting human preferences. Researchers can collect human feedback in several ways: rating different model outputs, demonstrating preferred responses to prompts, correcting undesirable model outputs, or providing critiques in natural language. Each of these approaches would lead to a different kind of reward model that can be used for fine-tuning. The core idea of RLHF revolves around training such reward models.

The choice of reward model may depend on different aspects, including the specific “human values” to be optimized for. Fine-tuning a model using high-quality demonstrations is considered the gold standard, but this approach is time and resource-intensive, limiting its scalability. On the other hand, organizing a group of human annotators to select preferred outputs iteratively can result in a relatively large dataset on which to train a preference model. Thanks to this advantage in scalability, most companies choose this as their primary strategy for doing RLHF, as OpenAI did with ChatGPT.

Once the reward model is ready, it can be used to fine-tune the base model via Reinforcement Learning. In this machine learning method, an agent (in our case, the base model) learns to choose actions (here, responses to user input) that maximize a score (technically called a reward). Much like how AlphaGo learned to play Go and Chess, the base model trains itself using a self-play reinforcement learning framework. Being tasked with generating output responses to a list of prompts, its predictions are tweaked to lean towards the highest-scoring responses.

The RLHF Effect

Reinforcement Learning from Human Feedback represents a significant advancement in language models, providing a more user-friendly interface for harnessing their vast capabilities. But how do we interpret the effect of RLHF fine-tuning over the original base LLM?

One way to think about it is the following.

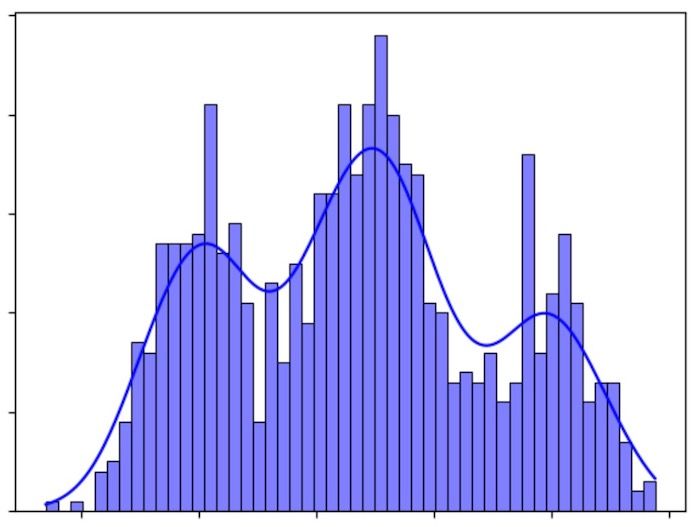

The base model may behave like a sort of stochastic parrot, a reservoir of textual chaos learned from a universe of online text with its invaluable insights and its less desirable content. It may accurately mirror the distribution of internet text and thus be able to replicate (“parrot”) the multitude of available tones, personas, sources, and voices seen in its training data.

However, the base model's choice of tone of voice in responding to an input prompt can be very unpredictable. Due to its highly multimodal nature, the quality of its responses could vary significantly, depending on the source (or mode) it decides to emulate.

For instance, a user might ask about a notable political figure. The model could generate a response resembling a neutral, informative Wikipedia article (it chooses an “encyclopedic mode” in the distribution, so to speak), or it could echo a more radical viewpoint, depending on the exact phrasing and tone of the question.

How to control these unpredictable dynamics? Leaving it entirely up to the model’s stochastic decision-making nature may not be ideal for most real-world LLM applications. As we’ve seen, RLHF addresses this issue by fine-tuning the model based on human preference data, offering a more reliable user experience.

But does this come at a cost?

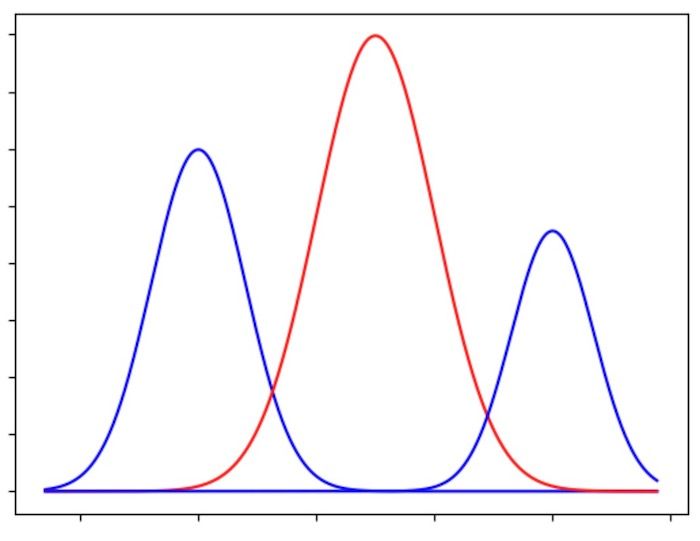

Applying RLHF shapes a language model by infusing a human preference bias into its outputs. Operationally, we can interpret this effect as introducing a mode-seeking mechanism that guides the model through its text distribution and leads to outputs with higher rewards, effectively narrowing the potential range of generated content.

The kind of biases that RLHF introduces reflect the values of the individuals who have informed the preference-dataset. For instance, ChatGPT is biased towards delivering responses that are helpful, truthful, and harmless, mirroring the inclinations of its annotators, and the guidelines they have been instructed to follow.

What gets compromised for the original model through this process?

While RLHF enhances the reliability and consistency of the model's responses, it will necessarily limit the diversity of its generative capabilities. Whether this trade-off represents a benefit or a limitation depends on the intended use case.

In applications such as chatbot assistants or search, where accuracy is critical, RLHF certainly proves advantageous. However, for creative uses like generating ideas or assisting in writing, a reduction in output diversity could stifle the birth of more original content.

The advent of RLHF fine-tuning has arguably revolutionized conversational AI. Yet, at least for large-scale implementations, it's a framework still in its nascent stages. To delve deeper into our understanding, the next and final section surveys some recent research on RLHF’s limitations and its impact on AI safety and the alignment of language models.

Current Limitations of RLHF Fine-Tuning

RLHF's goal of creating a helpful and harmless model imposes a necessary trade-off: In the extreme case, a model that refrains from answering any prompt is entirely harmless, yet not particularly helpful.

Conversely, an overly helpful model might respond to questions it should avoid, risking harmful outcomes. Finding the right balance imposes the first obvious challenge.

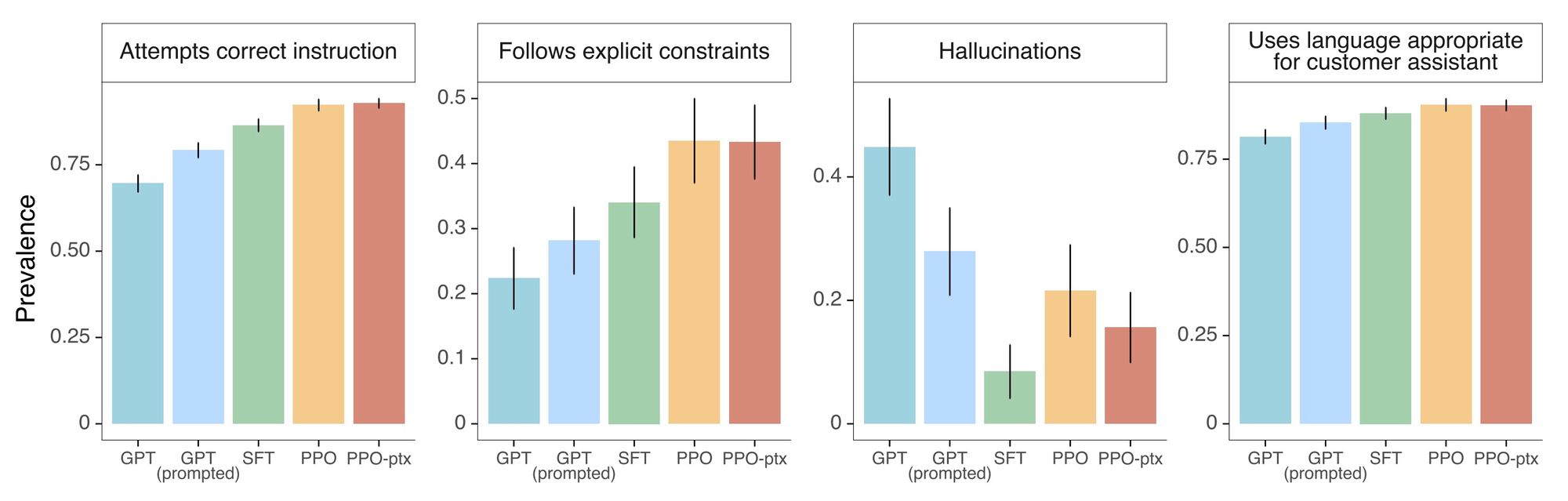

Truthful-oriented RLHF tuning, as applied at OpenAI, explicitly intends to reduce hallucination – the tendency of LLMs to sometimes generate false or invented statements. However, RLHF tuning often worsens hallucinations, as a study based on InstructGPT reported. Ironically, the same study has served as the primary basis for ChatGPT’s initial RLHF design.

The precise mechanism behind the hallucination phenomenon is still generally unclear. A first hypothesis in a paper from Google DeepMind suggests that LLMs hallucinate because they “lack an understanding of the cause and effect of their actions.” A different view posits that hallucinations arise from the behavior-cloning nature of language models, especially when an LLM is trained to mimic responses containing information it doesn't possess.

Quite interestingly, the human evaluators of InstructGPT generally preferred its outputs over the SFT model (i.e. no RLHF), despite the increase in hallucinations. This fact hints at another central problem with the RLHF methodology: the intrinsic difficulty in evaluating its results.

The core part of RLHF evaluation is based on crowd work, i.e., on human feedback evaluation: it takes place by having human annotators rate the quality of the model outputs. The argument seems straightforward: Because RLHF tuning is based on human input, human input should also (primarily) evaluate its results. But this process poses some issues, as human evaluation is highly time-consuming and expensive and suffers from strong subjectivity. Truthfulness, in particular, can be incredibly challenging to evaluate systematically.

What about assessing truthfulness by some set of objective metrics?

Unfortunately, it doesn’t get much simpler. Yannic Kilcher’s provocative experiment, for instance, revealed how his GPT-4chan – an LLM fine-tuned on a dataset filled with extreme and unmoderated conversations – significantly improved its score on TruthfulQA, a standard open-source benchmark assessing truthfulness in LLM-generated answers.

Even after RLHF fine-tuning, LLMs are not immune to exploits known as jailbreak attacks, where the model can be manipulated to produce objectionable content through subtly altered instructions. A study found that merely changing certain verbs in the input prompt can impact the safety of a model’s output significantly. Another recent astonishing result discovered a class of “universal” jailbreaking prompts seemingly able to work across different LLMs.

Even without adversarial attacks, RLHF’s efficacy to reduce social biases is not well understood. For instance, a recent paper highlighted ChatGPT’s tendency to reproduce gender defaults and stereotypes assigned to certain occupations, when translating from English to another language.

Unfortunately, it’s even unclear if further improvements to RLHF may ever entirely solve the problem of adversarial prompting. Some recent theoretical results seem to suggest that “any behavior that has a finite probability of being exhibited by the LLM can be triggered by certain prompts, which increases with the length of the prompt.”

If such results (and other related ones) were to be confirmed, they would imply a theoretical impossibility of language model alignment by RLHF or any other analogous alignment framework.

From a practical perspective, however, one must say that RLHF seems to be working, at least superficially, by enabling a more friendly user interface. But how much RLHF training exactly is necessary? More precisely, what is the relative importance of RLHF tuning to high-quality instruction tuning?

One of the most evident downsides of published reports on large-scale RLHF tuning of LLMs is the lack of proper comparison studies for the effect of RLHF tuning vs. utilizing a comparable amount of human labor to fine-tune a base model on high-quality demonstration data.

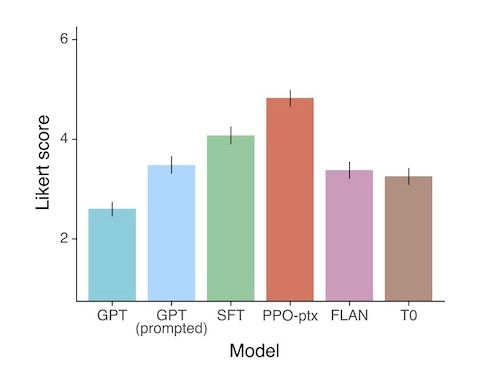

A recent technical report introduced the model LIMA (Less Is More for Alignment) as a first step in this direction. Rather than relying on RLHF fine-tuning, LIMA builds on Meta's open-source LLaMA as its base model. The authors curated a dataset of (only) 1,000 high-quality demonstration examples, covering a wide range of conversation topics. This curated dataset was used to fine-tune a LLaMA 65B model.

The results showed a remarkable performance in direct human preference evaluations, even when tested against LLMs extensively fine-tuned with RLHF, like GPT-4 and Bard models.

While such studies are still missing a full view of the landscape, they suggest that focusing on the data quality might be way more beneficial than prioritizing scalability when fine-tuning LLMs. Unraveling the exact scaling laws that govern the balance between demonstration data and RLHF or similar techniques (e.g. RLAIF) remains an intriguing research direction and will be key to improving LLM performance.

Final Words

Many techniques around LLMs, including RLHF, will continue to evolve. At its current stage, RLHF for language model alignment has significant limitations. However, rather than disregarding it, we should aim to better understand it.

There is a wealth of interconnected topics waiting to be explored. And we will be exploring these in future blog posts! If you enjoyed this article, feel free to check out some of our other recent articles to learn about

- How Reinforcement Learning from AI Feedback works

- Graph Neural Networks in 2023

- How physics advanced Generative AI

You can also follow us on Twitter, where we regularly post content on these subjects and many other exciting aspects of AI.