Real Time Speech Recognition with Python

Learn how to do real time streaming Speech-to-Text conversion in Python using the AssemblyAI Speech-to-Text API.

Overview

In this tutorial, we’ll use AssemblyAI’s real-time transcription to transcribe audio from the microphone as we speak. Please note that this is a paid feature. We’ll use the PyAudio library to stream sound from our microphone, and the Python websockets library to connect to AssemblyAI’s streaming websocket endpoint.

First we’ll go over how to install Python PyAudio and websockets. Then we’ll cover how to use PyAudio, websockets, and asyncio to transcribe our mic’s audio in real time.

Prerequisites

- Install PyAudio

- Install Websockets

Install PyAudio

How to install PyAudio on Mac

On a Mac, installing PyAudio may fail with an error message about not being able to find portaudio. You can install portaudio with Homebrew:

brew install portaudio

After installing portaudio, you should be able to install PyAudio like so:

pip install pyaudio

How to install PyAudio on Windows

You may get an error message about Microsoft C++. In that case, find a prebuilt PyAudio wheel online that matches your Python version and architecture, then install it as above, replacing "pyaudio" with the name of your .whl file.

Install Websockets

We can install websockets with

pip install websockets

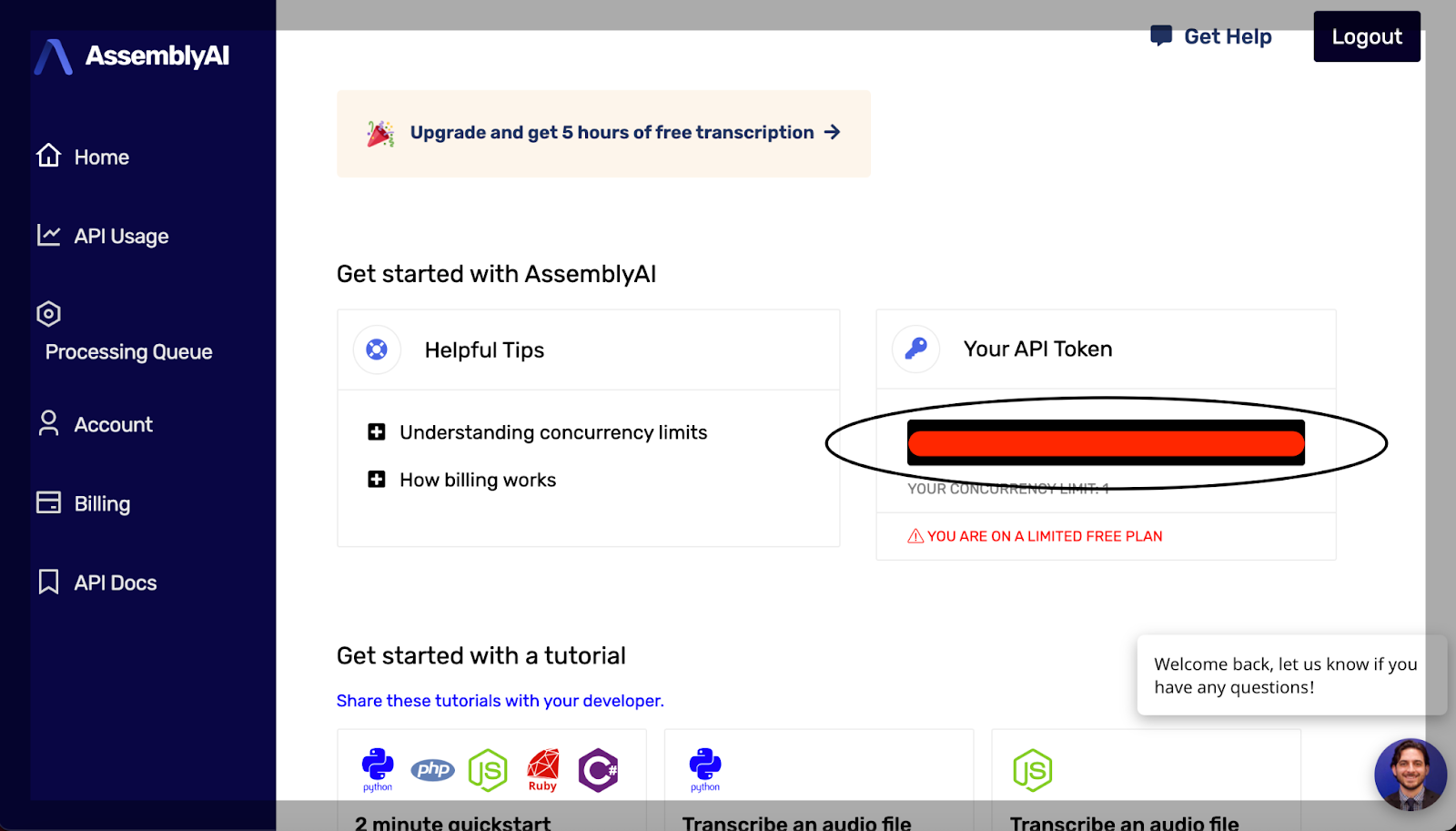
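Before moving on, it can help to confirm that both packages are importable from the interpreter you plan to use. The following is a minimal sketch, not part of the original tutorial; the helper name missing_modules is our own.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found by the import system."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_modules(["pyaudio", "websockets"])
if missing:
    print("Still missing:", ", ".join(missing))
else:
    print("PyAudio and websockets are both importable.")
```

If either name is reported as missing, rerun the matching pip install command in the same environment.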

Get your AssemblyAI API Key

After installing PyAudio and websockets, we’ll need to get our API key from AssemblyAI. You’ll find your key in your console, in the spot marked below.

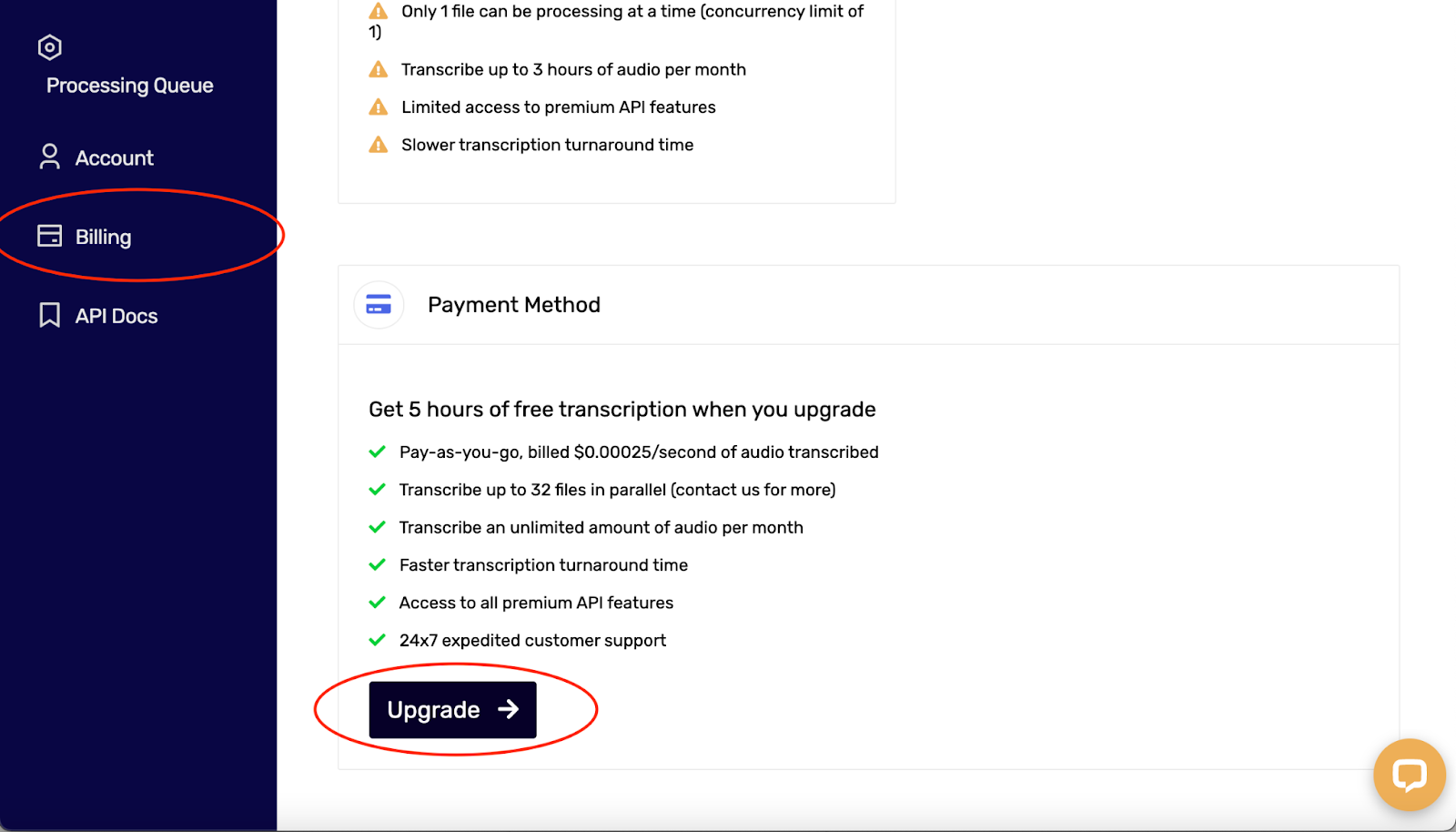
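The code later in this tutorial imports auth_key from a local module named configure, which the post does not show. One possible way to write that file is sketched below, assuming you keep the key in an environment variable. The variable name ASSEMBLYAI_API_KEY and the helper load_auth_key are our own choices, not something AssemblyAI requires.

```python
# configure.py (hypothetical layout; the tutorial only shows `from configure import auth_key`)
import os

ENV_VAR = "ASSEMBLYAI_API_KEY"  # assumed variable name, pick any you like


def load_auth_key(env=None):
    """Read the API key from an environment-like mapping and fail loudly if it is absent."""
    env = os.environ if env is None else env
    key = env.get(ENV_VAR, "").strip()
    if not key:
        raise RuntimeError(f"Set the {ENV_VAR} environment variable to your API key")
    return key

# In a real configure.py you would finish with:
#     auth_key = load_auth_key()
```

Keeping the key out of the source file means you can share or commit the tutorial code without exposing your account.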

If you want to follow this tutorial and you’re on a free account, you can upgrade by going to Billing and clicking upgrade as outlined below.

Steps:

- Open up a stream with Python PyAudio

- Make an async function that will send and receive data

- Use asyncio to run in a loop (automatically time out after 1 minute)

- Run it in the terminal and talk

Open up a stream with Python PyAudio

The first thing we’ll do is open up a stream using the PyAudio library that we just installed. To do this, we’ll need to specify the number of frames per buffer, the format, the number of channels, and the sample rate. Our stream will use 3200 frames per buffer, PyAudio’s 16-bit integer format, 1 channel, and a sample rate of 16000 Hz.

import pyaudio

FRAMES_PER_BUFFER = 3200
FORMAT = pyaudio.paInt16
CHANNELS = 1
RATE = 16000

p = pyaudio.PyAudio()

# starts recording
stream = p.open(
    format=FORMAT,
    channels=CHANNELS,
    rate=RATE,
    input=True,
    frames_per_buffer=FRAMES_PER_BUFFER
)
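To get a feel for these settings, we can work out how much audio each read from the stream covers. This is a small arithmetic sketch using the same constants; BYTES_PER_SAMPLE is a name we introduce here to stand for the 2 bytes in a 16-bit sample.

```python
FRAMES_PER_BUFFER = 3200
CHANNELS = 1
RATE = 16000
BYTES_PER_SAMPLE = 2  # a 16-bit sample occupies 2 bytes

# Duration of one buffer: frames divided by frames per second
seconds_per_buffer = FRAMES_PER_BUFFER / RATE

# Size of one buffer: frames x channels x bytes per sample
bytes_per_buffer = FRAMES_PER_BUFFER * CHANNELS * BYTES_PER_SAMPLE

print(f"{seconds_per_buffer:.1f} seconds of audio per read")  # 0.2 seconds
print(f"{bytes_per_buffer} bytes per read")                   # 6400 bytes
```

So each chunk we send carries about a fifth of a second of speech.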

Make an async function that will send and receive data

Now that we’ve opened up our PyAudio stream, we can stream audio from the mic to our program. Next, we need to connect it to the AssemblyAI real-time websocket to transcribe it. We’ll start by making an asynchronous function that will schedule sending and receiving data concurrently. To do this, we’ll open a websocket connection inside the function and create two more asynchronous functions that use the open websocket. We’ll use Python asyncio’s gather function to concurrently wait for both the send and receive functions to execute.
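The pattern just described can be tried in isolation before any audio or network code is involved. In this sketch an asyncio.Queue stands in for the websocket, and the chunk names are made up for illustration.

```python
import asyncio


async def main():
    queue = asyncio.Queue()  # stand-in for the websocket connection
    received = []

    async def send():
        for chunk in ("chunk-1", "chunk-2", "chunk-3"):
            await queue.put(chunk)
            await asyncio.sleep(0.01)  # yield control so receive() can run
        await queue.put(None)          # sentinel: nothing more to send
        return True

    async def receive():
        while True:
            item = await queue.get()
            if item is None:
                break
            received.append(item)
        return len(received)

    # gather schedules both coroutines and waits until both have finished
    send_result, receive_result = await asyncio.gather(send(), receive())
    return send_result, receive_result, received


result = asyncio.run(main())
print(result)  # (True, 3, ['chunk-1', 'chunk-2', 'chunk-3'])
```

Because both inner functions close over the same queue, neither needs it passed in as an argument, which is the same reason the tutorial defines send and receive inside the function that owns the websocket.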

Our asynchronous send function will loop continuously: it reads one buffer of audio (FRAMES_PER_BUFFER frames) from the PyAudio stream, encodes that data as base64, decodes the result to a string, and sends it as JSON to the AssemblyAI websocket endpoint, stopping if the connection closes. Our asynchronous receive function will continuously print the transcript text it receives from the endpoint. Then we use asyncio.gather to wait for both functions to execute concurrently.

import websockets
import asyncio
import base64
import json
from configure import auth_key  # local configure.py that defines auth_key (your API key)

# the AssemblyAI endpoint we're going to hit
URL = "wss://api.assemblyai.com/v2/realtime/ws?sample_rate=16000"

async def send_receive():
    print(f'Connecting websocket to url {URL}')
    # Note: recent websockets releases rename extra_headers to additional_headers
    async with websockets.connect(
        URL,
        extra_headers=(("Authorization", auth_key),),
        ping_interval=5,
        ping_timeout=20
    ) as _ws:
        await asyncio.sleep(0.1)
        print("Receiving SessionBegins ...")
        session_begins = await _ws.recv()
        print(session_begins)
        print("Sending messages ...")

        async def send():
            while True:
                try:
                    # stream and FRAMES_PER_BUFFER come from the PyAudio snippet above
                    data = stream.read(FRAMES_PER_BUFFER)
                    data = base64.b64encode(data).decode("utf-8")
                    json_data = json.dumps({"audio_data": str(data)})
                    await _ws.send(json_data)
                except websockets.exceptions.ConnectionClosedError as e:
                    print(e)
                    assert e.code == 4008
                    break
                except Exception as e:
                    assert False, "Not a websocket 4008 error"
                # give receive() a chance to run between chunks
                await asyncio.sleep(0.01)

            return True

        async def receive():
            while True:
                try:
                    result_str = await _ws.recv()
                    print(json.loads(result_str)['text'])
                except websockets.exceptions.ConnectionClosedError as e:
                    print(e)
                    assert e.code == 4008
                    break
                except Exception as e:
                    assert False, "Not a websocket 4008 error"

        send_result, receive_result = await asyncio.gather(send(), receive())
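The message handling on both sides can be checked without a microphone or a connection. The sketch below pulls the encode and decode steps into two helpers of our own naming, build_audio_message and extract_text, and uses a few made-up bytes in place of real audio.

```python
import base64
import json


def build_audio_message(chunk: bytes) -> str:
    """Base64-encode raw audio bytes and wrap them in the JSON shape the send loop uses."""
    encoded = base64.b64encode(chunk).decode("utf-8")
    return json.dumps({"audio_data": encoded})


def extract_text(raw_message: str):
    """Return the 'text' field of a JSON message, or None if the field is absent."""
    return json.loads(raw_message).get("text")


message = build_audio_message(b"\x00\x01\x02\x03")
print(message)  # {"audio_data": "AAECAw=="}

print(extract_text('{"text": "hello world"}'))  # hello world
```

Using .get("text") instead of ['text'] is a small design choice: a message without a text field yields None rather than raising a KeyError inside the receive loop.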

Use asyncio to run in a loop (automatically time out after 1 minute)

We’ve finished writing our function that will both send and receive data asynchronously between our PyAudio stream and the AssemblyAI websocket. Now we’ll use asyncio.run to start the event loop and run that function until we get a timeout or an error, using the following line.

asyncio.run(send_receive())
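If you would rather enforce your own time limit than wait for the session to end, one option is to wrap the coroutine in asyncio.wait_for. This is a sketch under assumptions: fake_session is a stand-in for send_receive so the example runs without audio hardware, and run_with_limit is a helper name we made up.

```python
import asyncio


async def fake_session():
    # Stand-in for send_receive(): keeps running until it is cancelled
    while True:
        await asyncio.sleep(0.01)


async def run_with_limit(coro, seconds):
    """Run a coroutine, cancelling it if it takes longer than `seconds`."""
    try:
        await asyncio.wait_for(coro, timeout=seconds)
        return "finished"
    except asyncio.TimeoutError:
        return "timed out"


outcome = asyncio.run(run_with_limit(fake_session(), 0.05))
print(outcome)  # timed out
```

With the real function, the equivalent call would be asyncio.run(run_with_limit(send_receive(), 60)) for a one-minute cap.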

Run it in the terminal and talk

That’s all the coding we need to do. Now, we open up our terminal and run our program. It should look like the video linked at the beginning of this post!
