AI trends in 2024: Graph Neural Networks

From fundamental research to productionized AI models, let’s discover how this cutting-edge technology is powering production applications and may be shaping the future of AI.

While AI systems like ChatGPT or Diffusion models have been in the limelight recently, Graph Neural Networks (GNNs) have been rapidly advancing. In the last couple of years, GNNs have quietly become the dark horse behind a wealth of exciting new achievements that have made it all the way from purely academic research breakthroughs to solutions actively deployed at large scale.

Companies like Uber, Google, Alibaba, Pinterest, Twitter and many others have already been shifting to GNN-based approaches in some of their core products, motivated by the substantial performance improvements these methods exhibit compared to the previous state-of-the-art AI architectures.

Despite the diversity in the type of problems and the differences of their underlying datasets, all these breakthroughs use the single unifying framework of GNNs to operate at their core. This suggests a potential shift of perspective: graph-structured data provides a general and flexible framework for describing and analyzing any possible set of entities and their mutual interactions.

What are the actual advantages of Graph Machine Learning? And why do Graph Neural Networks matter in 2024? This article recaps some highly impactful applications of GNNs, giving you everything you need to know to get up to speed on the next big wave in AI.

Introduction

Graph data is everywhere in the world: any system consisting of entities and relationships between them can be represented as a graph. Deep learning algorithms have made outstanding progress over the last decade in areas such as natural language processing, computer vision, and speech recognition, thanks to their ability to extract high-level features from data by passing it through non-linear layers. However, most deep learning architectures are specifically tailored for Euclidean-structured data, such as tabular data, images, text, and audio, while graph data has been largely ignored.

Traditional AI methods have been designed to extract information from objects encoded by somewhat “rigid” structures. For example, images are typically encoded as fixed-size 2-dimensional grids of pixels, and text as a 1-dimensional sequence of words (or tokens). On the other hand, representing data in a graph-structured way may reveal valuable information that emerges from a higher-dimensional representation of these entities and their relationships, and would otherwise be lost.

Unfortunately, the flexibility of graphs, which allows the same piece of data to be represented in many different ways, makes it harder to design homogeneous frameworks that can learn on that data and generalize across different domains. A variety of approaches have been proposed in the past two decades for AI systems capable of dealing with graph data at scale, but these advances were often tied to the specific case and settings for which they had been developed.

In some ways, this reflects what happened during the Deep Learning revolution a decade ago. Speech recognition systems that used to combine Hidden Markov Models and Gaussian Mixture Models, and computer vision systems that relied heavily on traditional signal processing, have progressively converged to end-to-end deep learning systems that often even share the same fundamental architectures. A standard example is the Transformer and its Attention mechanism, which originated in the field of Natural Language Processing and has more recently spread across many different domains.

In recent years, a relatively small community of deep learning researchers has been making great strides in showing how various data problems in different domains are best cast as graph problems, where Graph Neural Networks, together with some of their variations, have been shown to outperform mainstream methods in a variety of deep learning tasks. GNNs have de facto become a crucial tool for solving real-world problems in many seemingly unrelated areas such as drug discovery, recommendation systems, traffic prediction, and many more, in which more traditional and case-specific methods fail.

What is the current role of GNNs in the broader AI research landscape? Let’s first take a look at some statistics revealing how GNNs have seen a spectacular rise within the research community.

Graph Neural Networks in the AI Research Landscape

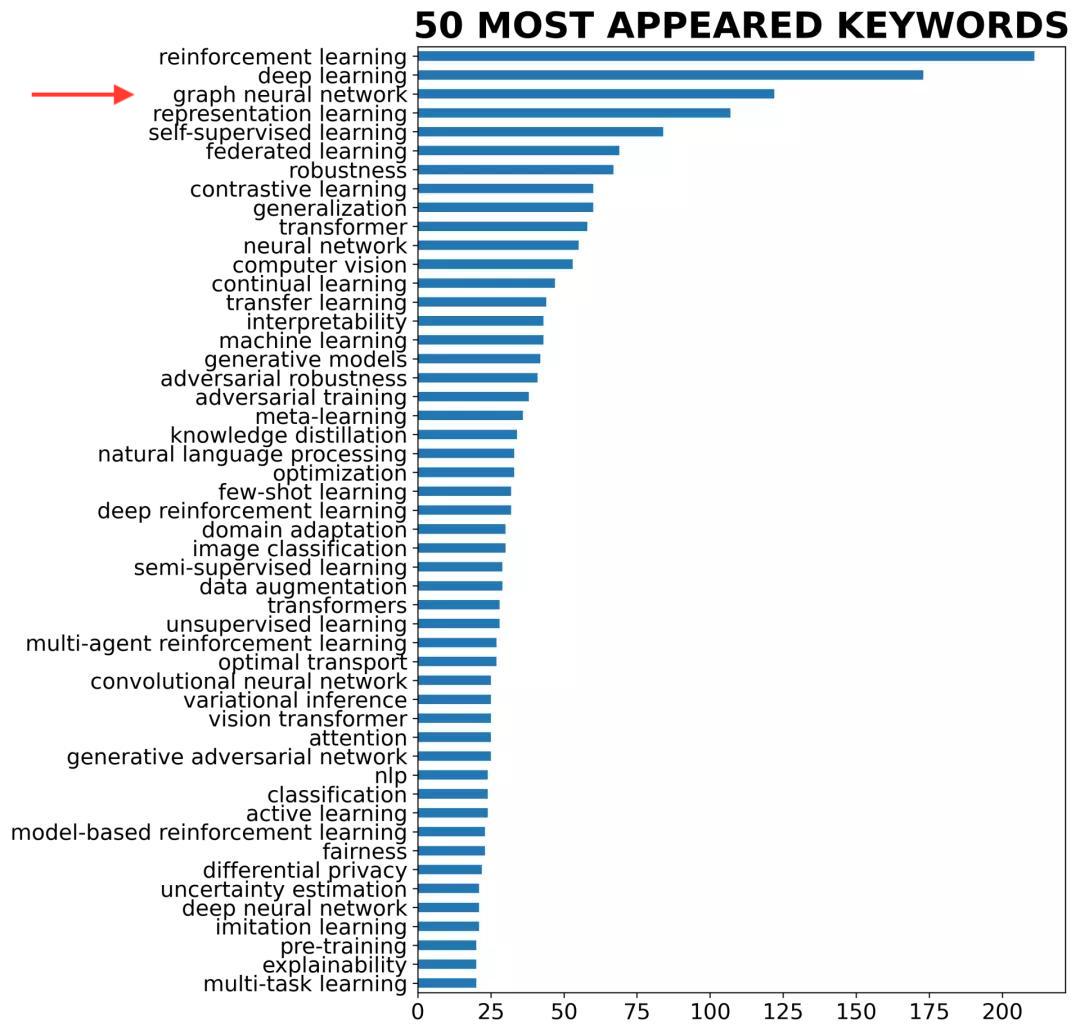

We can get a first glimpse of the growth of GNNs in research if we look at the accepted research publications from the last few years for both ICLR and NeurIPS, the two major annual conferences which focus on cutting edge AI research. We find that the term Graph Neural Network consistently ranked in the top 3 keywords year over year.

A recent bibliometric study systematically analysed this research trend, revealing an exponential growth of published research involving GNNs, with a striking +447% average annual increase in the period 2017-2019. The State of AI Report 2021 further confirmed Graph Neural Network to be the keyword in AI research publications “with the largest increase in usage from 2019 to 2020”.

We can also examine the versatility of Graph Neural Networks by looking at their impact across different domains of application. The following figure aims at illustrating the distribution of GNN papers across 22 categories.

As we can see, GNNs have seen impressive growth in AI research and their impact is distributed across vastly different domains. Let's now look at a few of these use cases and learn how GNNs have made a quantifiable impact there.

Graph Neural Networks: Selected Use Cases

Graph Neural Networks have become a key ingredient behind many recent exciting projects. Let’s take a closer look at some examples and the results of applying GNNs to large-scale models in production.

GNNs for Recommender Systems

The team at Uber Eats develops a food delivery application and has recently started to introduce graph learning techniques into the recommendation system powering the app, which aims to surface the foods that are most likely to appeal to an individual user.

Given the large size of graphs dealt with in such settings (Uber Eats serves as a portal to more than 320,000 restaurants in over 500 cities globally), Graph Neural Networks represent a very appealing choice. In fact, GNNs require only a fixed number of parameters that does not depend on the size of the input graph, making learning scalable to large graphs.
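To make the point about parameter count concrete, here is a minimal sketch of a single message-passing layer in plain Python. The layer design (mean aggregation followed by a ReLU), the toy ring graphs, and names such as `message_passing_layer` are illustrative assumptions, not Uber's implementation. The same two weight matrices are reused on a 5-node graph and a 5,000-node graph.

```python
import random

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def message_passing_layer(features, neighbors, W_self, W_neigh):
    """One round of mean-aggregation message passing followed by a ReLU.

    features:  {node: feature vector}
    neighbors: {node: list of neighbouring nodes}
    W_self, W_neigh: weight matrices shared by every node in the graph.
    """
    dim = len(next(iter(features.values())))
    updated = {}
    for node, x in features.items():
        nbrs = neighbors.get(node, [])
        if nbrs:
            mean = [sum(features[n][i] for n in nbrs) / len(nbrs) for i in range(dim)]
        else:
            mean = [0.0] * dim
        h = [a + b for a, b in zip(matvec(W_self, x), matvec(W_neigh, mean))]
        updated[node] = [max(0.0, v) for v in h]
    return updated

def ring_graph(n, dim, rng):
    """Hypothetical toy graph: n nodes arranged in a ring, with random features."""
    features = {i: [rng.random() for _ in range(dim)] for i in range(n)}
    neighbors = {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}
    return features, neighbors

rng = random.Random(0)
in_dim, out_dim = 4, 3
W_self = [[rng.uniform(-1, 1) for _ in range(in_dim)] for _ in range(out_dim)]
W_neigh = [[rng.uniform(-1, 1) for _ in range(in_dim)] for _ in range(out_dim)]
num_params = 2 * in_dim * out_dim  # fixed by the feature sizes, not by the graph

small = message_passing_layer(*ring_graph(5, in_dim, rng), W_self, W_neigh)
large = message_passing_layer(*ring_graph(5000, in_dim, rng), W_self, W_neigh)
print(num_params, len(small), len(large))
```

The parameter count depends only on the input and output feature dimensions, so growing the graph adds computation but no new weights.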

In a first test of the model on recommending dishes and restaurants, the team reported a performance boost of over 20% compared to the existing production model on key metrics like Mean Reciprocal Rank, Precision@K, and NDCG. After integrating the GNN model into the Uber Eats recommendation system (which incorporates other non-graph-based features), the developers observed a jump from 78% to 87% in AUC compared to the existing productionized baseline model, and a later impact analysis revealed that the GNN-based feature was by far the most influential feature in the recommendation model as a whole.

Pinterest is a visual discovery engine that operates as a social network, where users interact with visual bookmarks, known as pins, that link to web-based resources. The platform provides users with the ability to organize pins into thematic collections, called boards. The "Pinterest graph", which encompasses 2 billion pins, 1 billion boards, and over 18 billion edges, represents a rich and complex visual ecosystem with potential implications for understanding user behaviour and preferences.

Pinterest is currently deploying PinSage, a GNN-powered recommendation system scaled up to operate on the Pinterest graph. PinSage is able to predict in novel ways how visual concepts that users have found interesting can map to new things that might appeal to them.

In order to measure its accuracy, the research team has evaluated the performance of PinSage against other state-of-the-art content-based deep learning baselines (based on nearest neighbours of visual or annotation embedding methods) and used the following two key metrics for their recommendation task:

- Hit-rate – directly measuring the probability that recommendations made by the algorithm contain the items related to the query.

- Mean Reciprocal Rank (MRR) – measuring the degree to which relevant results are close to the top of search results.
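The two metrics above can be sketched in a few lines of Python. These are the textbook definitions, assuming one relevant item per query; the PinSage authors may use variants, and the toy rankings below are made up purely for illustration.

```python
def hit_rate_at_k(ranked_lists, relevant_items, k):
    """Fraction of queries whose top-k recommendations contain the relevant item."""
    hits = sum(
        1 for ranked, rel in zip(ranked_lists, relevant_items) if rel in ranked[:k]
    )
    return hits / len(ranked_lists)

def mean_reciprocal_rank(ranked_lists, relevant_items):
    """Average of 1/rank of the relevant item; a missing item contributes 0."""
    total = 0.0
    for ranked, rel in zip(ranked_lists, relevant_items):
        if rel in ranked:
            total += 1.0 / (ranked.index(rel) + 1)
    return total / len(ranked_lists)

# Hypothetical recommendations for three queries, best first.
ranked_lists = [["a", "b", "c"], ["d", "e", "f"], ["g", "h", "i"]]
relevant = ["a", "f", "z"]  # "z" is never recommended

hit_rate = hit_rate_at_k(ranked_lists, relevant, k=2)  # 1 of 3 queries hits
mrr = mean_reciprocal_rank(ranked_lists, relevant)     # (1 + 1/3 + 0) / 3
print(round(hit_rate, 3), round(mrr, 3))
```

Hit-rate only asks whether the relevant item appears in the top k, while MRR also rewards placing it closer to the top.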

Overall, PinSage yields 150% improvement in hit-rate and 60% improvement in MRR over the best baseline productionized model.

Both Uber Eats and Pinterest teams have built their AI systems on top of a specific flavour of Graph Neural Network called GraphSAGE, because of its strong scalability properties. GraphSAGE (an open-source GNN framework developed by Stanford researchers) is explicitly designed to efficiently generate useful node embeddings on unseen data and represents a major breakthrough in recommendation systems. When evaluated against three hard classification benchmarks[1], it improved the classification F1-score by an average of 51% compared to using node features alone, and also consistently outperformed a strong baseline (DeepWalk), with a 100x decrease in inference time.
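The idea of generating embeddings for unseen nodes can be illustrated with a simplified GraphSAGE-style mean aggregator. This is a sketch under stated assumptions, not the reference implementation: the weight matrix `W` is hard-coded rather than trained, neighbours are sampled with replacement, only one layer is applied, and the node names are hypothetical.

```python
import math
import random

def sage_mean_embed(node, features, neighbors, W, num_samples, rng):
    """Sample neighbours, average their features, concatenate with the node's
    own features, apply a shared linear map + ReLU, then L2-normalize."""
    nbrs = neighbors.get(node, [])
    sampled = [rng.choice(nbrs) for _ in range(num_samples)] if nbrs else []
    dim = len(features[node])
    if sampled:
        mean = [sum(features[n][i] for n in sampled) / len(sampled) for i in range(dim)]
    else:
        mean = [0.0] * dim
    concat = features[node] + mean  # list concatenation: length 2 * dim
    h = [max(0.0, sum(w * x for w, x in zip(row, concat))) for row in W]
    norm = math.sqrt(sum(v * v for v in h)) or 1.0
    return [v / norm for v in h]

# Assumed "already trained" weights: 2 outputs, 4 inputs (self + neighbour mean).
W = [[0.5, -0.2, 0.3, 0.1],
     [0.1, 0.4, -0.3, 0.2]]

features = {"a": [1.0, 0.0], "b": [0.0, 1.0]}
neighbors = {"a": ["b"], "b": ["a"]}

# A node that did not exist when W was fitted joins the graph.
features["new"] = [1.0, 0.0]
neighbors["new"] = ["a", "b"]

embedding = sage_mean_embed("new", features, neighbors, W, num_samples=2,
                            rng=random.Random(0))
print(len(embedding))
```

Because the weights act on features and sampled neighbourhoods rather than on node identities, the new node gets an embedding without any retraining, and the fixed sample size keeps the cost per node bounded.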

GNNs for Traffic Prediction

Another highly impactful application of Graph Neural Networks came from a team of researchers from DeepMind who showed how GNNs can be applied to transportation maps to improve the accuracy of estimated time of arrival (ETA). The idea is to use a GNN to learn representations of the transportation network that capture the underlying structure of the network and its dynamics.

This system is already actively deployed at scale by Google Maps in several major cities around the world, and the new approach has led to drastic reductions in the proportion of negative user outcomes when querying the ETA (with up to 50% accuracy improvements compared to the prior approach deployed in production).

GNNs for Weather Forecasting

In November 2023 Google DeepMind introduced GraphCast, a new weather forecasting model, and open-sourced the model’s code.

GraphCast is now considered to be the most accurate 10-day global weather forecasting system in the world and can predict extreme weather events further into the future than was previously possible.

The model is also highly efficient and can make 10-day forecasts in less than a minute on a single Google TPU. For comparison, a 10-day forecast using a conventional approach can take hours of computation in a supercomputer with hundreds of machines.

The detailed research findings were published in Science.

GNNs for Data Mining

A new exciting application area for Graph Neural Networks is Data Mining.

Most organizations store their key business data in relational databases, where information is spread across many linked tables. Traditionally, machine learning on this data required manual feature engineering to first aggregate the data from all relevant tables into one single table before modeling, a process that is time-consuming and easily loses information.

Recently, researchers proposed a new approach called Relational Deep Learning that leverages GNNs to learn useful patterns and embeddings directly from a relational database, without any feature engineering.
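One natural way to picture this is to treat every row as a node and every foreign-key reference as an edge. The sketch below assumes that mapping; the `customers` and `orders` tables, their columns, and the helper `tables_to_graph` are hypothetical and are not taken from the Relational Deep Learning work itself.

```python
def tables_to_graph(tables, foreign_keys):
    """Turn rows into nodes and foreign-key references into edges.

    tables:       {table_name: (primary_key_column, list of row dicts)}
    foreign_keys: list of (child_table, fk_column, parent_table)
    """
    nodes = {}
    for name, (pk, rows) in tables.items():
        for row in rows:
            nodes[(name, row[pk])] = row  # node id = (table, primary key)
    edges = []
    for child, fk_col, parent in foreign_keys:
        pk, rows = tables[child]
        for row in rows:
            edges.append(((child, row[pk]), (parent, row[fk_col])))
    return nodes, edges

# Hypothetical two-table database.
customers = [
    {"customer_id": 1, "name": "Ada"},
    {"customer_id": 2, "name": "Lin"},
]
orders = [
    {"order_id": 10, "customer_id": 1, "amount": 25.0},
    {"order_id": 11, "customer_id": 1, "amount": 40.0},
    {"order_id": 12, "customer_id": 2, "amount": 15.5},
]

nodes, edges = tables_to_graph(
    {"customers": ("customer_id", customers), "orders": ("order_id", orders)},
    [("orders", "customer_id", "customers")],
)
print(len(nodes), len(edges))
```

A GNN running on such a graph can pass information along the foreign-key links directly, instead of relying on hand-written joins and aggregations.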

Given the ubiquity of relational databases, this technology has immense potential to enable new AI applications in countless industries.

GNNs for Materials Science

In a paper published in Nature in November 2023, the team at Google DeepMind introduced Graph Networks for Materials Exploration (or GNoME), a new deep learning tool that can discover new materials and predict their stability at scale.

GNoME leverages GNNs to model materials at the atomic level. The atoms and their bonds are represented as graphs, with nodes denoting individual atoms and edges capturing interatomic interactions. Descriptors of the elemental properties are embedded in the node features. By operating on these graphs, the GNNs can effectively learn to predict the energetic properties of the materials.
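As a rough illustration of this representation, the sketch below builds a graph from atomic positions by connecting atoms that lie within a distance cutoff and storing the atomic number as a node feature. The cutoff rule, the toy coordinates, and the function `build_atom_graph` are assumptions for illustration; GNoME's actual featurization is more elaborate, and periodic boundaries are ignored here.

```python
import math

ATOMIC_NUMBER = {"Na": 11, "Cl": 17}

def build_atom_graph(atoms, cutoff):
    """atoms: list of (element, (x, y, z)) tuples.
    Returns node features and edges (i, j, distance) for pairs within cutoff."""
    nodes = [{"element": el, "atomic_number": ATOMIC_NUMBER[el]} for el, _ in atoms]
    edges = []
    for i in range(len(atoms)):
        for j in range(i + 1, len(atoms)):
            d = math.dist(atoms[i][1], atoms[j][1])
            if d <= cutoff:
                edges.append((i, j, d))
    return nodes, edges

# Hypothetical toy arrangement of three atoms on a line (arbitrary units).
atoms = [
    ("Na", (0.0, 0.0, 0.0)),
    ("Cl", (2.8, 0.0, 0.0)),
    ("Na", (5.6, 0.0, 0.0)),
]
nodes, edges = build_atom_graph(atoms, cutoff=3.0)
print([(i, j) for i, j, _ in edges])
```

Only neighbouring atoms end up connected, so the graph encodes local interactions while the node features carry the elemental information.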

Crucially, GNoME employs active learning in conjunction with Density Functional Theory (DFT) calculations to iteratively expand its knowledge. In practice, the framework alternates between using its GNNs to screen candidate materials and running DFT simulations to verify the model’s most uncertain predictions. This creates an automatic feedback loop where the model is continuously retrained on the expanded dataset.
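The loop described above can be sketched with a deliberately tiny stand-in. Everything here is an assumption for illustration: `expensive_oracle` plays the role of a DFT calculation, a nearest-neighbour lookup replaces the GNN, and the distance to the closest labelled point serves as the uncertainty estimate. It shows the shape of an uncertainty-driven active learning loop, not GNoME's pipeline.

```python
def expensive_oracle(x):
    """Hypothetical stand-in for a costly first-principles (DFT) calculation."""
    return (x - 3.0) ** 2

def predict_with_uncertainty(x, labelled):
    """Toy model: copy the label of the nearest labelled point.
    Uncertainty = distance to that point."""
    nearest = min(labelled, key=lambda p: abs(p - x))
    return labelled[nearest], abs(nearest - x)

def active_learning(candidates, labelled, rounds):
    """Repeatedly query the oracle on the candidate the model is least sure about."""
    pool = list(candidates)
    for _ in range(rounds):
        query = max(pool, key=lambda x: predict_with_uncertainty(x, labelled)[1])
        labelled[query] = expensive_oracle(query)  # verify with the oracle
        pool.remove(query)
        # "Retraining" is implicit: the toy model reads `labelled` directly.
    return labelled

candidates = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
labelled = {0.0: expensive_oracle(0.0)}  # start with a single labelled point
result = active_learning(candidates, labelled, rounds=3)
print(sorted(result))
```

Each round spends the expensive calculation where the model knows least, which is what makes the feedback loop data-efficient.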

This combination of neural networks and first-principles physics simulations enables very data-efficient and accurate learning. Remarkably, GNoME demonstrates some "emergent abilities," in that it can generalize to entirely new compositions beyond its training distribution.

GNNs for Drug Discovery

Perhaps one of the most famous recent applications of AI methods in the pharmaceutical domain came out of a research project from the Massachusetts Institute of Technology that turned into a publication in the prestigious scientific journal Cell.

The goal was to use AI models to predict the antibiotic activity of molecules by learning their graph representations. Encoding the information with graphs is very natural in this setting, since antibiotics can be represented as small molecular graphs, where the nodes are atoms and the edges correspond to their chemical bonds.
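A molecular graph of this kind is easy to write down by hand. The sketch below encodes the heavy atoms of ethanol (C, C, O) as nodes and its two bonds as edges; the helper `molecular_graph` is a hypothetical name. It also lists each bond in both directions, since message-passing models typically send messages both ways along a bond.

```python
def molecular_graph(atoms, bonds):
    """Build an adjacency list and a directed edge list from atoms and bonds."""
    adjacency = {i: [] for i in range(len(atoms))}
    directed_edges = []
    for a, b in bonds:
        adjacency[a].append(b)
        adjacency[b].append(a)
        directed_edges.extend([(a, b), (b, a)])  # one message direction each
    return adjacency, directed_edges

# Ethanol without hydrogens: C-C-O.
atoms = ["C", "C", "O"]
bonds = [(0, 1), (1, 2)]

adjacency, directed_edges = molecular_graph(atoms, bonds)
print(adjacency)
print(directed_edges)
```

Atom-level properties (element, charge, and so on) would be attached to the nodes and bond types to the edges before feeding the graph to a model.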

The AI model learns from this data to predict the most promising molecules subject to certain desirable conditions. These predictions are subsequently tested and validated in the lab, helping biologists prioritize the molecules to be analyzed from a pool of billions of possible candidates.

This led to the identification of a previously unknown compound, named Halicin, found to be a highly potent antibiotic, also effective against antibiotic-resistant bacteria, an outcome regarded as a major breakthrough by field experts in antibiotic discovery research.

The news hit the media, with featured articles by the BBC and Financial Times among others, but barely any consideration was given to the fact that a GNN-based approach lies at the backbone of the specific AI model deployed. On the other hand, the researchers reported that using a directed message-passing deep neural network approach, a core feature of GNNs, was essential for the discovery: the other state-of-the-art models that were also tested on Halicin failed to output a high prediction rank, contrary to the graph-based AI model.

GNNs for Explainable AI

Drug discovery AI models like the ones discussed above are used to create numerical predictions that then need to be tested in the lab. The model itself is not able a priori to explain its own results. This situation is common to most current AI models, and it’s the reason why many people refer to them as “black boxes”.

A breakthrough paper published in Nature at the end of 2023 from MIT and Harvard seeks to demonstrate something different on this front. The authors trained GNNs to screen chemical compounds for those that kill methicillin-resistant Staphylococcus aureus, the deadliest among bacteria that have evolved to be invulnerable to common antibiotics.

The researchers were not only able to predict the antibiotic activity of over 12 million compounds, but did so using explainable graph algorithms, which by design are able to explain the rationale behind their predictions.

GNNs for Protein Design

The goal of protein design is to create proteins that have desired properties, and can be done through (typically high cost) experimental methods that allow researchers to engineer new proteins by directly manipulating the amino acid sequence of a protein. The design of new proteins has huge potential applications, such as developing new drugs, enzymes, or materials.

Baker Lab recently combined Graph Neural Networks and Diffusion techniques, creating an AI system named RoseTTAFold Diffusion (RFDiffusion), which has turned out to be capable of designing protein structures satisfying custom constraints. The AI model operates via an E(n)-Equivariant Graph Neural Network, a special kind of GNN expressly designed to process data structures with rigid motion symmetries (such as translation, rotation and reflection in space), and was fine-tuned as a denoiser, i.e. a diffusion model.
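The symmetry property mentioned above can be checked numerically on a toy example. E(n)-equivariant GNN layers build their messages from quantities such as pairwise distances, which do not change when a structure is rotated or translated. The sketch below verifies that invariance for three made-up points; it does not implement an equivariant layer or any part of RFDiffusion.

```python
import math

def pairwise_distances(points):
    """Matrix of Euclidean distances between all pairs of 3D points."""
    return [[math.dist(p, q) for q in points] for p in points]

def rotate_z(point, angle):
    """Rotate a 3D point around the z-axis by `angle` radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y, z)

def translate(point, shift):
    """Shift a 3D point by a constant vector."""
    return tuple(a + b for a, b in zip(point, shift))

# Hypothetical coordinates standing in for three residues of a protein.
points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 1.0)]
moved = [translate(rotate_z(p, 0.7), (3.0, -1.0, 2.0)) for p in points]

before = pairwise_distances(points)
after = pairwise_distances(moved)
max_diff = max(abs(a - b) for ra, rb in zip(before, after) for a, b in zip(ra, rb))
print(max_diff < 1e-9)
```

A network whose internal features depend only on such invariant quantities produces the same result regardless of how the input structure is positioned in space, so it does not need to learn each orientation separately.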

Released in November 2022, RFDiffusion is a highly complex system capable of tackling a wide multitude of specific tasks in protein design, and accordingly has been tested against a variety of metrics and benchmarks. The results have shown dramatic improvements over state-of-the-art competitors: RFDiffusion solved 100% more benchmark problems (23 out of 25) than the previous state-of-the-art deep neural network model in designing scaffolds for protein structural motifs, and achieved an 18% success rate in designing protein binders, a problem referred to as a “grand challenge” in protein design. Moreover, RFDiffusion's experimental success rate shows an improvement ranging from 5x to 214x, depending on the target protein.

Some experts in the field believe that RFDiffusion could be one of the “biggest advances in structural biology of this decade together with AlphaFold”, an advancement that relies critically on the latest advances in Graph Neural Networks.

Final words

Graph Neural Networks are a rapidly evolving field with many exciting developments, and the AI research community has substantially increased attention to this area in the last few years.

In industry, applications of graph machine learning in remarkably different domains have only recently started to appear, and GNNs have already established themselves as a game-changer in some state-of-the-art production-ready models deployed at large scale. These recent successes bring about an opportunity for a new range of applications, and it will be fascinating to see what the field will bring this year.


Footnotes

[1] These consisted of two evolving document graphs based on citation data and Reddit post data (predicting paper and post categories, respectively), and a multigraph generalization experiment based on a dataset of protein-protein interactions (predicting protein functions).
