Nov. Voice AI meetup recap: Real-world challenges of deploying voice AI agents

On November 12th, AssemblyAI and Rime hosted a panel discussion at Arcana in San Francisco, bringing together technical leaders building production voice agents. Over wine and networking, Zach Kamran (Co-Founder & CTO at Simple AI), Indresh MS (VP of Production Technology Labs at Aquant), and Ben Cherry (Head of Developer Products at LiveKit) shared hard-won lessons from hundreds of production calls, failed architectures, and unexpected user behaviors.

Voice AI agents are moving from controlled demos into production environments, and the reality is messier than anyone expected.

The conversation revealed a consistent pattern: what works in testing often fails in production. What seems like a simple technical problem turns out to be a complex interplay of technology, user psychology, and business constraints.

Teams are learning these lessons the hard way.

The evaluation challenge: Moving beyond demo metrics

Testing voice agents presents a fundamentally different challenge than testing traditional software. You can't just write unit tests and call it done.

"We analyzed 200-300 calls from field service technicians," one panelist explained. "About 80% of interactions were just yes/no responses. We expected these conversational flows, but that's not how people actually used it."

This gap reveals the first major challenge: simulation-based testing matters, but it can't replace real-world analysis.

Building an evaluation framework

Teams are converging on a hybrid approach, combining quantitative metrics with qualitative analysis.

Quantitative metrics like latency are straightforward to measure and benchmark. Response times under one second feel natural; anything beyond two seconds triggers user frustration.
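As a rough illustration of how those two thresholds could be turned into a benchmark, the sketch below buckets per-turn response times and reports percentiles. The function names and the "borderline" bucket for the one-to-two-second range are assumptions made for this example, not something the panel prescribed.

```python
from statistics import quantiles

# Thresholds from the rule of thumb above, in seconds.
NATURAL_MAX_S = 1.0
FRUSTRATION_MIN_S = 2.0


def classify_latency(seconds: float) -> str:
    """Bucket one response time against the two thresholds."""
    if seconds < NATURAL_MAX_S:
        return "natural"
    if seconds > FRUSTRATION_MIN_S:
        return "frustrating"
    return "borderline"  # assumed label for the 1-2 second range


def summarize(latencies: list[float]) -> dict:
    """Return p50/p95 and the share of turns in each bucket.

    Needs at least two measurements, because statistics.quantiles does.
    """
    cuts = quantiles(latencies, n=100, method="inclusive")  # 99 cut points
    buckets = {"natural": 0, "borderline": 0, "frustrating": 0}
    for s in latencies:
        buckets[classify_latency(s)] += 1
    total = len(latencies)
    return {
        "p50": cuts[49],
        "p95": cuts[94],
        "share": {name: count / total for name, count in buckets.items()},
    }
```

Tracking the share of "frustrating" turns alongside p95 is one way to keep a few very slow responses from hiding behind a healthy median.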

Qualitative observations need conversion into measurable outcomes. Success metrics become highly vertical-specific. What constitutes a "good" interaction for a healthcare scheduling bot differs dramatically from a field service technical support agent.

The most effective approach? Judge voice agents by the same standards you'd use for human performance.

The AI-to-AI testing advantage

One technique is gaining traction: using AI agents to test other AI agents. Teams can simulate thousands of conversations programmatically and surface edge cases that would take months to encounter in production.

But here's the catch: AI-to-AI testing reveals technical failures, not behavioral ones. You still need human call analysis to understand how real users interact with your agent; those interactions are often nothing like what you designed for.
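A minimal sketch of what such a test harness might look like is below. Everything in it is hypothetical: the `Scenario` and `Result` types, the substring-based pass check, and the `toy_agent` stand-in. In practice the scripted caller turns would be generated by a second model, and the agent would be the real voice pipeline.

```python
from dataclasses import dataclass, field
from typing import Callable

# An agent is modeled as: caller utterance in, agent reply out.
Agent = Callable[[str], str]


@dataclass
class Scenario:
    name: str
    caller_turns: list[str]   # what the simulated caller says, in order
    must_contain: list[str]   # substrings expected somewhere in the replies


@dataclass
class Result:
    name: str
    passed: bool
    transcript: list[tuple[str, str]] = field(default_factory=list)


def run_scenario(agent: Agent, scenario: Scenario) -> Result:
    """Play the caller's turns against the agent and check the replies."""
    transcript = [(turn, agent(turn)) for turn in scenario.caller_turns]
    replies = " ".join(reply.lower() for _, reply in transcript)
    passed = all(s.lower() in replies for s in scenario.must_contain)
    return Result(scenario.name, passed, transcript)


def run_suite(agent: Agent, scenarios: list[Scenario]) -> list[Result]:
    return [run_scenario(agent, s) for s in scenarios]


def toy_agent(utterance: str) -> str:
    """Stand-in agent for illustration only."""
    if "reschedule" in utterance.lower():
        return "Sure, what day works for you?"
    return "Sorry, I didn't catch that."
```

A harness like this only checks what the scenarios anticipate, which is why it catches technical failures but not the behavioral surprises that show up in real calls.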

What users actually expect (and why it matters)

Human-AI interaction introduces challenges that technology alone can't solve.

The dual emotion problem

Users approach AI with contradictory expectations that create an impossible standard.

On one hand, they expect AI to "know everything." If a voice agent needs to ask clarifying questions or admits uncertainty, users become immediately skeptical.

At the same time, they have zero tolerance for errors. While a 30-40 minute wait time for a human agent is grudgingly acceptable, a voice agent that fails on the first, second, or third attempt triggers immediate frustration and abandonment.

This creates a narrow margin for error that human agents don't face.

Speech doesn't always mean conversation

Perhaps the most surprising finding from production deployments: users don't actually want to have conversations with AI agents in many contexts.

In the field services example, technicians in hands-free, hazardous environments needed quick confirmations, not extended dialogues. The sophisticated conversational capabilities that took months to build went largely unused.

This pattern repeats across verticals. Users want task completion, not conversation; the most successful voice agents optimize for efficiency and accuracy over natural language fluency.

Technical challenges: Where Speech-to-Text breaks in production

The gap between advertised accuracy rates and production performance is larger than most teams expect.

The <5% error rate myth

"Claims of less than 5% error rates don't reflect what we see in production," one panelist noted. "Background noise, multiple speakers, domain-specific vocabulary—all of these drive error rates much higher in real environments."

Accuracy degrades most with:

- Technical terminology and industry jargon that base models haven't encountered

- Model numbers and long identifiers that sound ambiguous when spoken

- Multiple speakers in the same audio stream

- Background noise in field, retail, or call center environments

Modern speech recognition models address these challenges through training on diverse audio conditions. Teams can also improve domain-specific accuracy through Keyterms prompting, which boosts recognition of industry-specific terms, product names, and technical jargon that general-purpose models might miss.

The endpointing deadlock

Turn-taking in conversation is harder than it looks. Humans naturally pause for 1-2 seconds when thinking or processing information. Voice agents that respond after one second of silence feel responsive, but what happens when a user is reading a long model number or reference code?

Premature interruptions break the flow, while delayed responses feel unnatural. And when both the agent and human try to speak at the same time, you end up in a deadlock scenario where both sides wait for the other to continue.

There's no perfect solution here, only trade-offs. Some teams tune for faster responses and accept occasional interruptions; others add longer pauses for specific contexts where users are likely to provide lengthy inputs.
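The second option, longer pauses for specific contexts, could be sketched roughly as follows. The input categories and the 0.5 and 2.5 second values are assumptions chosen for illustration; only the one-second default comes from the discussion above.

```python
from enum import Enum


class ExpectedInput(Enum):
    YES_NO = "yes_no"
    FREE_FORM = "free_form"
    LONG_IDENTIFIER = "long_identifier"  # model numbers, reference codes


# Seconds of silence to wait before treating the user's turn as finished.
# Illustrative values; real deployments would tune these per use case.
SILENCE_THRESHOLD_S = {
    ExpectedInput.YES_NO: 0.5,
    ExpectedInput.FREE_FORM: 1.0,
    ExpectedInput.LONG_IDENTIFIER: 2.5,
}


def turn_is_over(silence_s: float, expected: ExpectedInput) -> bool:
    """Decide whether the agent may start speaking."""
    return silence_s >= SILENCE_THRESHOLD_S[expected]
```

With these values, a 1.2 second pause ends a free-form turn but not one where the agent has just asked for a model number.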

Architecture patterns: What works (and what doesn't)

Clear patterns are emerging for architecting reliable voice agents.

Avoid stacked agents

One tempting pattern is to use agent-to-agent delegation for information retrieval. Need to look up customer data? Have the voice agent call a specialized "database agent" that handles the query.

Don't do this.

"Stacked agents increase hallucination risk exponentially," one panelist warned. "Every additional agent layer adds opportunities for the system to fabricate information or misinterpret context."

Direct tool access beats agent intermediaries almost every time. If you need to query a database, give the voice agent direct access to that tool; if you need to call an API, make it a direct function call rather than routing through another agent.

The tool limitation strategy

Another emerging best practice is to limit agents to 2-3 tools per conversational turn.

More tools don't improve capabilities. They increase decision paralysis and reduce accuracy. When an agent has access to 20 different functions, determining the right one for a given context becomes unreliable.

The solution? Segment users dynamically to narrow the tool context. If you know a user is calling about billing, only expose billing-related tools; if they're in a technical support flow, provide troubleshooting tools but hide account management functions.

This segmentation improves both accuracy and response time while reducing the cognitive load on the underlying model.
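One way to picture this segmentation is a registry filtered by the caller's current segment before each turn. The `Tool` type, the segment labels, and the cap of three are assumptions for this sketch; how the segment is detected is left out entirely.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Tool:
    name: str
    segment: str              # e.g. "billing" or "support" (hypothetical labels)
    run: Callable[..., str]


MAX_TOOLS_PER_TURN = 3  # upper end of the 2-3 range mentioned above


def tools_for_turn(
    registry: list[Tool], segment: str, limit: int = MAX_TOOLS_PER_TURN
) -> list[Tool]:
    """Expose only the tools for the current segment, capped at `limit`.

    Registry order is treated as priority order, so the cap drops the
    lowest-priority tools first.
    """
    matching = [tool for tool in registry if tool.segment == segment]
    return matching[:limit]
```

Only the returned list would be passed to the model for that turn, so a caller in a billing flow never sees the troubleshooting tools at all.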

Redundancy is non-negotiable

Voice agents have hard real-time dependencies that traditional applications don't. When Speech-to-Text goes down, your entire system stops working.

Production-grade deployments require:

- Multiple STT providers with automatic failover

- Multiple LLM providers for response generation

- Fallback systems that gracefully degrade rather than fail completely

One panelist described having three different STT providers in production, with automatic failover if any single provider experiences latency spikes or outages. The operational overhead is worth it. Voice agents can't tolerate the downtime that might be acceptable for a web application.

When selecting STT providers for your redundancy strategy, prioritize those with proven uptime records and fast failover capabilities. AssemblyAI maintains 99.99% uptime and provides both synchronous and asynchronous processing options; this makes it a reliable component in multi-provider architectures where consistency matters.
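The failover behavior described in this section can be sketched in a few lines. This is a simplified illustration, not any panelist's implementation: providers are modeled as plain callables, and latency spikes are assumed to surface as exceptions (for example, a timeout raised by the provider's client), which is why only exceptions trigger failover here.

```python
from typing import Callable, Sequence

# A provider is modeled as: raw audio bytes in, transcript text out.
Transcriber = Callable[[bytes], str]


class AllProvidersFailed(RuntimeError):
    """Raised when every configured provider has errored."""


def transcribe_with_failover(
    audio: bytes, providers: Sequence[tuple[str, Transcriber]]
) -> tuple[str, str]:
    """Try providers in priority order; return (provider_name, transcript)."""
    errors = []
    for name, transcribe in providers:
        try:
            return name, transcribe(audio)
        except Exception as exc:  # outage, or a timeout raised by the client
            errors.append(f"{name}: {exc!r}")
    raise AllProvidersFailed("; ".join(errors))
```

Returning the provider name with the transcript makes it easy to log how often the backups are actually used, which is a useful signal for the operational overhead mentioned above.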

Speech-to-speech vs. modular: Choosing your approach

The debate between end-to-end speech-to-speech models and modular architectures (STT → LLM → TTS) dominated much of the discussion.

Current limitations of real-time models

Speech-to-speech models that process audio directly without intermediate text transcription offer impressive conversational qualities. They handle tone, emotion, and natural speech patterns better than modular approaches.

But they have significant limitations for production use.

Tool calling capabilities are poor. If your agent needs to query databases, call APIs, or interact with external systems, speech-to-speech models struggle.

They struggle to follow instructions for complex tasks. For simple conversational characters or basic Q&A, they work well; for multi-step business processes with conditional logic, they fall short.

Enterprise compliance becomes a challenge. Speech-to-speech models function as black boxes: you don't have visibility into the intermediate reasoning or the ability to sanitize inputs and outputs for compliance requirements.

For now, most production enterprise deployments use modular architectures. The trade-off in conversational naturalness is worth the gains in reliability, auditability, and integration capabilities.
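To make the auditability point concrete, here is a minimal sketch of a modular turn handler in which the intermediate text is available for sanitizing and logging. The three stages are passed in as plain callables, and the `ModularAgent` class, the `redact` helper, and the card-number pattern are all assumptions for illustration rather than a real compliance filter.

```python
import re
from dataclasses import dataclass, field
from typing import Callable

# Illustrative pattern only: masks 13 to 16 digit runs that look like card numbers.
CARD_RE = re.compile(r"\b\d{13,16}\b")


def redact(text: str) -> str:
    return CARD_RE.sub("[REDACTED]", text)


@dataclass
class ModularAgent:
    stt: Callable[[bytes], str]   # speech-to-text stage
    llm: Callable[[str], str]     # response generation stage
    tts: Callable[[str], bytes]   # text-to-speech stage
    audit_log: list[dict] = field(default_factory=list)

    def handle_turn(self, audio: bytes) -> bytes:
        # Text exists between every stage, so it can be sanitized and logged.
        transcript = redact(self.stt(audio))
        reply = redact(self.llm(transcript))
        self.audit_log.append({"user": transcript, "agent": reply})
        return self.tts(reply)
```

A speech-to-speech model offers no equivalent point between input audio and output audio where this kind of filter or log entry could be placed.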

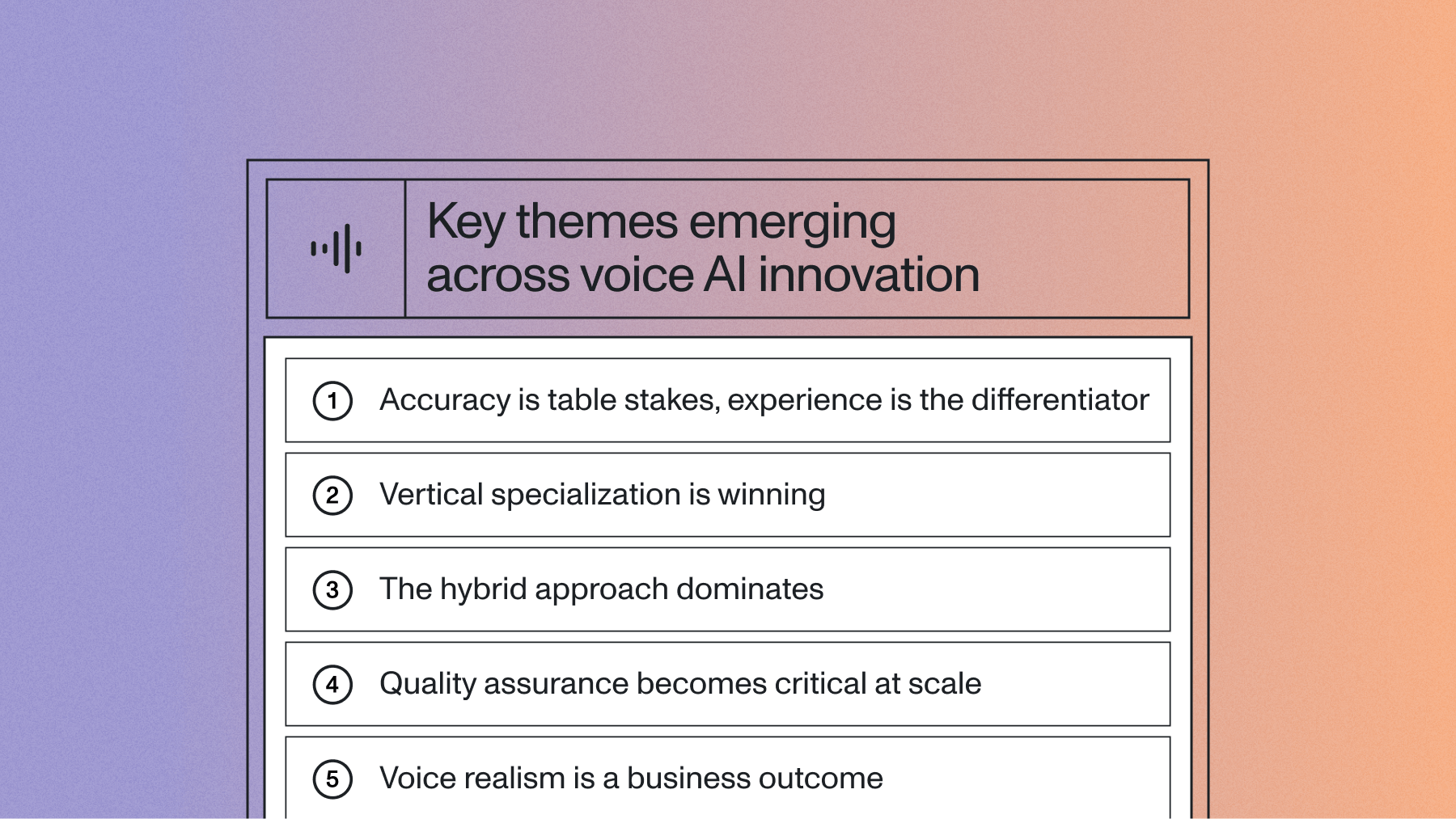

Market direction: Where the opportunity lies

The conversation repeatedly circled back to a central tension—should teams build vertical-specific voice agents or horizontal platforms?

The vertical-first approach

The consensus leaned heavily toward vertical-first deployments. "No voice agent is currently 'good enough' for general use," one panelist observed. "That's a massive opportunity—but it means you need to narrow your focus."

Vertical-specific agents can:

- Optimize for industry-specific vocabulary and workflows

- Set appropriate success metrics based on domain expertise

- Integrate deeply with existing vertical tools and systems

Horizontal platforms, by contrast, struggle to meet the specific requirements of any given use case. The successful voice agents in production today are purpose-built for specific workflows, not general-purpose assistants.

The accessibility opportunity

Perhaps the most compelling long-term vision discussed was voice interfaces as accessibility tools.

Voice is humanity's most natural form of interaction. It requires no typing skills, no interface literacy, no technical knowledge. For populations that struggle with traditional computing interfaces—whether due to age, disability, education, or familiarity—voice agents remove fundamental barriers.

"This could open computing to non-technical populations in ways we haven't seen since the smartphone."

The business opportunity is substantial; so is the social impact.

Final words

Voice agents are moving beyond proof-of-concept into production—and the gap between demo performance and reality is wide. Success requires evaluation frameworks that combine simulation with real-world testing, architectures built for redundancy, and realistic expectations about current capabilities. The teams succeeding aren't deploying the most sophisticated technology—they're building pragmatically to meet users' actual needs. Speech recognition accuracy in noisy environments, reliable uptime, and flexible integration capabilities are requirements for any production deployment.
