One platform, multiple models: Simplifying Voice AI with LLM Gateway

LLM Gateway unifies voice AI development with one API for transcription and LLM processing. Access OpenAI, Anthropic, and Google models without managing multiple integrations.

Voice AI teams face a common frustration: building a single feature requires orchestrating multiple vendors, managing separate API keys, and maintaining custom integration code. You're transcribing audio with one service, sending that transcript to an LLM provider, and wrangling the response back into your application. It works, but it's fragile, time-consuming, and expensive to maintain.

A medical documentation platform processes thousands of patient visits daily. Their engineering team maintains integrations with a speech-to-text provider, routes transcripts to multiple LLM endpoints depending on the task, and manages error handling across this entire chain. When a new model launches or an API schema changes, they're back in the code, updating pipelines and testing edge cases.

This fragmentation isn't just a technical inconvenience; it can be a strategic bottleneck. Teams spend weeks building orchestration layers instead of improving their core product. They're locked into specific providers because switching means rewriting integration code. And when something breaks, debugging across multiple services turns a five-minute fix into an afternoon of vendor coordination.

LLM Gateway changes this. It's a unified platform that takes you from raw audio to structured insights through a single API, with access to leading language models from OpenAI, Anthropic, and Google.

The hidden cost of fragmented voice AI stacks

Most voice AI applications follow a predictable pattern: capture audio, transcribe it, process the transcript with an LLM, and extract insights. Simple in theory. In practice, it means managing relationships with multiple vendors, each with their own authentication, billing, rate limits, and quirks.

A call center analytics company processing 400,000+ minutes of audio monthly described their workflow: transcribe with one API, copy the transcript to another service for sentiment analysis, route it elsewhere for summarization, then parse everything back into a consistent format their application can use. Each step introduces latency, potential failures, and maintenance overhead.

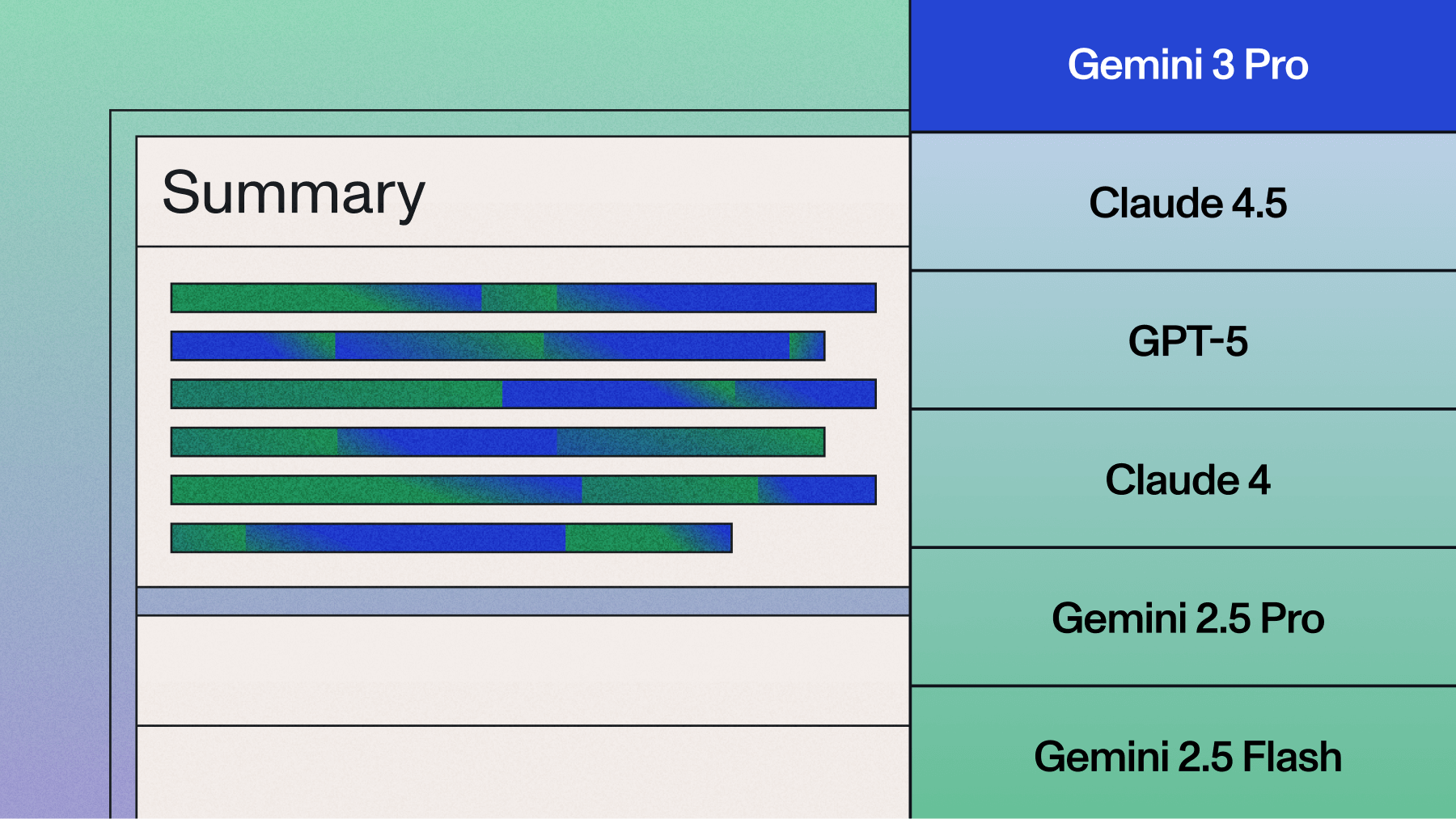

These costs compound over time. New models are released frequently: GPT-4.5, Claude Opus 4, Gemini 2.0. But accessing them means waiting for your LLM provider to add support, updating your integration code, and testing thoroughly before deploying. Teams often wait weeks between a model's announcement and actually using it in production.

Then there's the operational burden. Engineering teams juggle multiple API keys, navigate different authentication patterns, reconcile separate billing statements, and debug issues that span vendor boundaries. A transcription error? Check the speech-to-text logs. LLM output not formatted correctly? Different support channel, different troubleshooting process.

How LLM Gateway works

LLM Gateway provides a single API, under one account and one API key, that handles the entire flow from audio to insights. You send audio files or configure streaming transcription, specify which LLM you want to use, and receive structured results, all through AssemblyAI's infrastructure.

From fragmented to unified

The platform integrates deeply with transcript data. Instead of copying text between services, you prompt directly against transcripts within the same API call. This tight integration enables context-aware processing. For example, you can specify that a transcript is from a "Native American history lecture" or a "medical consultation," and the LLM uses that context to generate more accurate outputs.
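A minimal sketch of how that context might be supplied, assuming the chat-completions request shape used in the basic example later in this post (a `messages` list of `role`/`content` dicts). The helper `build_context_messages` and its wording are hypothetical illustrations, not part of the LLM Gateway API.

```python
def build_context_messages(prompt: str, transcript_text: str, context: str) -> list[dict]:
    """Hypothetical helper: prepend a description of the recording so the
    model knows what kind of conversation the transcript came from."""
    content = (
        f"The following transcript is from a {context}.\n"
        f"{prompt}\n\n"
        f"Transcript: {transcript_text}"
    )
    # A single user message, matching the basic example's request format.
    return [{"role": "user", "content": content}]


messages = build_context_messages(
    prompt="List the key topics discussed.",
    transcript_text="Today we'll cover the treaties signed in the 1850s...",
    context="Native American history lecture",
)
print(messages[0]["content"])
```

The resulting list drops straight into the `messages` field of a request payload; swapping the `context` string to "medical consultation" changes the framing without touching anything else.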

Multi-model flexibility

LLM Gateway provides access to multiple frontier models through a consistent interface:

- OpenAI's GPT family: GPT-4o, GPT-4o mini, GPT-4 Turbo

- Anthropic's Claude: Claude Opus 4, Claude Sonnet 4, Claude Haiku

- Google's Gemini: Gemini 2.0 Flash, Gemini 1.5 Pro, Gemini 1.5 Flash

These models support tool calling, streaming responses, and internet search capabilities. The schema remains consistent across providers, so switching from GPT to Claude means changing one parameter, not rewriting integration code.

This flexibility enables optimization strategies that were previously too complex to implement. Use Claude Haiku for extracting simple metadata (fast and cheap), Claude Sonnet for detailed summaries (balanced performance), and Claude Opus 4 for complex reasoning tasks (maximum capability). You're not locked into a single model's pricing or performance characteristics.
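To illustrate the "change one parameter" claim, here is a sketch that builds two request payloads in the format of the basic example later in this post and confirms they differ only in `model`. `claude-sonnet-4-5-20250929` comes from that example; `<GPT_MODEL_ID>` is a placeholder, so check the LLM Gateway documentation for the exact identifiers it accepts. `build_request` is a hypothetical helper.

```python
def build_request(model: str, prompt: str, transcript_text: str, max_tokens: int = 1000) -> dict:
    """Hypothetical helper: the payload shape is the same for every provider;
    only the model identifier changes."""
    return {
        "model": model,
        "messages": [
            {"role": "user", "content": f"{prompt}\n\nTranscript: {transcript_text}"}
        ],
        "max_tokens": max_tokens,
    }


text = "Agent: Thanks for calling. Customer: My order never arrived."
claude_req = build_request("claude-sonnet-4-5-20250929", "Summarize the call.", text)
gpt_req = build_request("<GPT_MODEL_ID>", "Summarize the call.", text)  # placeholder ID

# Collect the keys whose values differ between the two payloads.
diff = {key for key in claude_req if claude_req[key] != gpt_req[key]}
print(diff)  # {'model'}
```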

Real-world applications

Medical documentation automation

Healthcare providers spend 20-30% of their time on documentation. One telehealth platform automated this using LLM Gateway's integrated processing.

Their workflow: patient consultation audio streams to AssemblyAI's real-time transcription, the completed transcript is immediately processed by Claude to generate structured SOAP notes (Subjective, Objective, Assessment, Plan), and formatted documentation appears in their system within 30 seconds of the call ending.

The key improvement wasn't just automation; it was consistency. LLM Gateway processes every consultation with the same level of detail and structure, regardless of the provider's documentation style. Clinicians review and approve notes rather than writing them from scratch, reducing documentation time by 60-70%.
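The platform's actual prompt isn't published, so the following is only a sketch of one way to get structured SOAP notes: ask the model for a JSON object with four keys, then validate the reply before it reaches the clinician's review queue. `SOAP_PROMPT`, `parse_soap_note`, and the sample reply are hypothetical; a real reply would come from `choices[0]["message"]["content"]` in the gateway response, and models sometimes wrap JSON in extra prose, so production code would need more forgiving parsing.

```python
import json

SOAP_KEYS = ("subjective", "objective", "assessment", "plan")

# Hypothetical prompt asking the model for machine-readable output.
SOAP_PROMPT = (
    "Write a SOAP note for this patient consultation. "
    "Respond with only a JSON object with the keys "
    '"subjective", "objective", "assessment", and "plan".'
)


def parse_soap_note(reply_text: str) -> dict:
    """Parse the model's reply and check that all four SOAP sections exist."""
    note = json.loads(reply_text)
    missing = [key for key in SOAP_KEYS if key not in note]
    if missing:
        raise ValueError(f"SOAP note missing sections: {missing}")
    return {key: note[key] for key in SOAP_KEYS}


# A hand-written reply standing in for the model's output.
sample_reply = json.dumps({
    "subjective": "Patient reports knee pain after running.",
    "objective": "Mild swelling of the left knee.",
    "assessment": "Suspected runner's knee.",
    "plan": "Rest, ice, follow up in two weeks.",
})
note = parse_soap_note(sample_reply)
print(note["assessment"])  # Suspected runner's knee.
```

Rejecting incomplete notes early keeps a malformed reply from being silently filed as documentation.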

Call center intelligence

A call center analytics company processing over 50,000 hours of customer conversations monthly needed to extract trends, sentiment, and compliance risks at scale. Their previous approach involved three separate services: speech-to-text, sentiment analysis, and keyword extraction, each requiring its own integration.

With LLM Gateway, they consolidated everything. Real-time transcription captures conversations as they happen, GPT-4 analyzes sentiment and flags compliance concerns, and structured data flows directly into their reporting dashboard. When they want to test Claude's performance on sentiment detection versus GPT's, they change one parameter without needing to rewrite code.

The operational impact was immediate. Instead of debugging issues across three vendor relationships, they work with one support team. When GPT-4.5 launched, they tested it in production within 24 hours. Their engineering team stopped maintaining orchestration code and started building features that differentiate their product.
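How the structured output reaches a dashboard depends on the company's own reporting stack. As a sketch, assume each call's LLM result has already been parsed into a small record with a sentiment label and a list of compliance flags; rolling those up into dashboard numbers is then plain aggregation. `CallAnalysis` and `summarize_for_dashboard` are hypothetical names, and the sentiment labels are assumed.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class CallAnalysis:
    """Hypothetical per-call record parsed from the LLM's structured output."""
    call_id: str
    sentiment: str  # assumed labels, e.g. "positive", "neutral", "negative"
    compliance_flags: list[str] = field(default_factory=list)


def summarize_for_dashboard(results: list[CallAnalysis]) -> dict:
    """Roll per-call results up into dashboard-level counts."""
    sentiment_counts = Counter(r.sentiment for r in results)
    flagged = [r.call_id for r in results if r.compliance_flags]
    return {
        "total_calls": len(results),
        "sentiment_counts": dict(sentiment_counts),
        "flagged_calls": flagged,
    }


results = [
    CallAnalysis("call-001", "positive"),
    CallAnalysis("call-002", "negative", ["missing required disclosure"]),
    CallAnalysis("call-003", "negative"),
]
summary = summarize_for_dashboard(results)
print(summary)
```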

Legal intake optimization

Law firms handling hundreds of intake calls daily needed to score agent performance, identify high-value cases, and ensure regulatory compliance. The technical challenge: processing streaming audio, generating real-time scorecards, and translating conversations in a HIPAA-compliant manner.

Their solution combined AssemblyAI's streaming transcription with LLM Gateway's model selection. For real-time agent feedback during calls, they use GPT-4o mini (low latency, cost-effective). For post-call analysis and case summaries, they switch to Claude Sonnet (deeper reasoning, better at legal context). The entire stack runs through one API, one set of credentials, one compliance framework.

Technical implementation

Getting started requires minimal changes to existing AssemblyAI integrations. If you're already transcribing audio, you add LLM processing by sending the completed transcript text, along with a prompt, to the LLM Gateway endpoint using the same API key.

Basic example

Here's a simple implementation that transcribes audio and generates a summary:

```python
import time

import requests

base_url = "https://api.assemblyai.com"
headers = {"authorization": "<YOUR_API_KEY>"}

# Step 1: Transcribe the audio

# You can upload a local file:
# with open("./my-audio.mp3", "rb") as f:
#     response = requests.post(base_url + "/v2/upload", headers=headers, data=f)
#     upload_url = response.json()["upload_url"]

# Or use a publicly accessible URL:
upload_url = "https://assembly.ai/sports_injuries.mp3"

data = {"audio_url": upload_url}
response = requests.post(base_url + "/v2/transcript", headers=headers, json=data)
response.raise_for_status()
transcript_id = response.json()["id"]

# Poll until the transcription job finishes or fails
polling_endpoint = base_url + f"/v2/transcript/{transcript_id}"
while True:
    transcript = requests.get(polling_endpoint, headers=headers).json()
    if transcript["status"] == "completed":
        break
    elif transcript["status"] == "error":
        raise RuntimeError(f"Transcription failed: {transcript['error']}")
    else:
        time.sleep(3)

# Step 2: Send the transcript text to LLM Gateway
prompt = "Provide a brief summary of the transcript."
llm_gateway_data = {
    "model": "claude-sonnet-4-5-20250929",
    "messages": [
        {"role": "user", "content": f"{prompt}\n\nTranscript: {transcript['text']}"}
    ],
    "max_tokens": 1000,
}

response = requests.post(
    "https://llm-gateway.assemblyai.com/v1/chat/completions",
    headers=headers,
    json=llm_gateway_data,
)
response.raise_for_status()
print(response.json()["choices"][0]["message"]["content"])
```

You should see output similar to the following (the exact wording may vary between runs):

```text
The transcript describes several common sports injuries - runner's knee,
sprained ankle, meniscus tear, rotator cuff tear, and ACL tear. It provides
definitions, causes, and symptoms for each injury. The transcript seems to be
narrating sports footage and describing injuries as they occur to the athletes.
Overall, it provides an overview of these common sports injuries that can result
from overuse or sudden trauma during athletic activities.
```
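The polling loop in the basic example waits indefinitely if a job never reaches `completed` or `error`. A sketch of a bounded version follows; `wait_for_transcript` is a hypothetical helper that takes any zero-argument function returning the transcript JSON, which keeps it testable without network access. The 600-second default is an arbitrary assumption.

```python
import time
from typing import Callable


def wait_for_transcript(
    fetch: Callable[[], dict],
    interval: float = 3.0,
    timeout: float = 600.0,
) -> dict:
    """Poll `fetch` until the transcript completes, fails, or time runs out."""
    deadline = time.monotonic() + timeout
    while True:
        transcript = fetch()
        status = transcript["status"]
        if status == "completed":
            return transcript
        if status == "error":
            raise RuntimeError(f"Transcription failed: {transcript['error']}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Transcript still '{status}' after {timeout} seconds")
        time.sleep(interval)


# With the basic example's variables, the fetcher could be:
# fetch = lambda: requests.get(polling_endpoint, headers=headers).json()

# Offline demo: a fake fetcher that reports completion on the third call.
responses = iter([
    {"status": "queued"},
    {"status": "processing"},
    {"status": "completed", "text": "sample text"},
])
result = wait_for_transcript(lambda: next(responses), interval=0.01)
print(result["status"])  # completed
```

Using `time.monotonic()` rather than `time.time()` keeps the deadline correct even if the system clock is adjusted mid-poll.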

Model selection strategies

Different tasks benefit from different models. Here's a practical framework:

Use GPT-4o mini or Claude Haiku for:

- Extracting simple metadata (speaker names, dates, phone numbers)

- Classification tasks (routing, categorization)

- High-volume processing where speed and cost matter

Use GPT-4o or Claude Sonnet for:

- Detailed summaries requiring understanding of context

- Sentiment analysis with nuanced interpretation

- Multi-step reasoning over transcript content

Use Claude Opus 4 or GPT-4 Turbo for:

- Complex legal or medical document generation

- Deep analysis requiring extensive context

- Tasks where output quality justifies higher cost
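The framework above can be encoded as a lookup table so each pipeline step picks its model tier in one place. This is a sketch: the task names and the fallback to the balanced tier are assumptions, and the model strings are display names, not API identifiers, so you would substitute the exact IDs LLM Gateway expects.

```python
# Model tiers from the framework above (display names, not API identifiers).
MODEL_TIERS = {
    "light": ["GPT-4o mini", "Claude Haiku"],
    "balanced": ["GPT-4o", "Claude Sonnet"],
    "heavy": ["Claude Opus 4", "GPT-4 Turbo"],
}

# Hypothetical task names mapped to tiers.
TASK_TIER = {
    "metadata_extraction": "light",
    "classification": "light",
    "detailed_summary": "balanced",
    "sentiment_analysis": "balanced",
    "multi_step_reasoning": "balanced",
    "legal_or_medical_document": "heavy",
    "deep_analysis": "heavy",
}


def candidate_models(task: str) -> list[str]:
    """Return candidate models for a task, falling back to the balanced tier."""
    tier = TASK_TIER.get(task, "balanced")
    return MODEL_TIERS[tier]


print(candidate_models("classification"))  # ['GPT-4o mini', 'Claude Haiku']
```

Defaulting unknown tasks to the middle tier is one design choice; defaulting to the cheapest tier instead would favor cost over quality.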

Pricing and availability

LLM Gateway uses pass-through pricing for language model usage. You pay the same rates you'd pay going directly to OpenAI, Anthropic, or Google, plus AssemblyAI's transcription costs. We don't mark up LLM usage or require minimum commitments, and billing consolidates through your existing AssemblyAI account.
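Under pass-through pricing, the bill is transcription cost plus the provider's token cost. The sketch below shows that arithmetic assuming transcription is billed per hour of audio and LLM usage per million input and output tokens. Every rate in it is a made-up placeholder, not an actual AssemblyAI, OpenAI, Anthropic, or Google price, so look up current rates before relying on an estimate.

```python
def estimate_cost(
    audio_hours: float,
    transcription_rate_per_hour: float,
    input_tokens: int,
    output_tokens: int,
    input_rate_per_million: float,
    output_rate_per_million: float,
) -> float:
    """Estimate total cost: transcription plus pass-through LLM token charges."""
    transcription = audio_hours * transcription_rate_per_hour
    llm = (
        input_tokens * input_rate_per_million
        + output_tokens * output_rate_per_million
    ) / 1_000_000
    return transcription + llm


# Placeholder rates for illustration only.
cost = estimate_cost(
    audio_hours=10,
    transcription_rate_per_hour=0.50,
    input_tokens=200_000,
    output_tokens=20_000,
    input_rate_per_million=3.00,
    output_rate_per_million=15.00,
)
print(f"${cost:.2f}")  # $5.90
```

Here transcription contributes 10 × 0.50 = 5.00 and the LLM contributes (200,000 × 3 + 20,000 × 15) / 1,000,000 = 0.90.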

The platform is available now. Existing AssemblyAI customers can start using LLM Gateway with their existing API key. New users can sign up and access both transcription and LLM processing through the same account.

Why consolidation matters

Consolidated AI infrastructure isn't just about convenience; it's about velocity. Teams building voice AI features compete on how quickly they can ship improvements, test new models, and respond to user feedback. When your infrastructure spans multiple vendors, each iteration cycle gets slower.

LLM Gateway removes that friction. New models become available within a day of release because AssemblyAI maintains the integrations. Schema changes and API updates happen transparently. When you want to A/B test Claude versus GPT on summarization quality, you change one parameter and compare results; no separate integration work is needed.
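A sketch of that A/B loop: send the same prompt and transcript to each model and collect the replies side by side. To keep it runnable offline, the HTTP call is injected as a `send` function; a real one would POST the payload to the chat completions URL from the basic example and return `choices[0]["message"]["content"]`. `ab_compare` and `fake_send` are hypothetical, and `<GPT_MODEL_ID>` is a placeholder identifier.

```python
from typing import Callable


def ab_compare(
    models: list[str],
    prompt: str,
    transcript_text: str,
    send: Callable[[dict], str],
) -> dict[str, str]:
    """Send the same prompt to each model and collect the replies by model."""
    results = {}
    for model in models:
        payload = {
            "model": model,  # the only field that varies between runs
            "messages": [
                {"role": "user", "content": f"{prompt}\n\nTranscript: {transcript_text}"}
            ],
            "max_tokens": 1000,
        }
        results[model] = send(payload)
    return results


def fake_send(payload: dict) -> str:
    """Offline stand-in for the HTTP request."""
    return f"summary from {payload['model']}"


replies = ab_compare(
    ["claude-sonnet-4-5-20250929", "<GPT_MODEL_ID>"],
    "Provide a brief summary of the transcript.",
    "Example transcript text.",
    fake_send,
)
for model, reply in replies.items():
    print(model, "->", reply)
```

Scoring the replies (human review, a rubric, or another model acting as judge) is left open here, since the right metric depends on the task.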

This consolidation also improves reliability. Instead of debugging failures across multiple services ("Was it the transcription? The LLM? The network between them?"), you work with a single provider who owns the entire flow. When something breaks, you file one support ticket, not three.

For developers, it means writing less plumbing code and building more features. For product teams, it means faster experimentation and iteration. For companies, it means predictable costs and reduced operational complexity.

Voice AI development requires speed and flexibility. Teams that experiment quickly and adopt new models rapidly gain competitive advantage. LLM Gateway provides that foundation by unifying transcription and LLM processing, so you can focus on building differentiated features instead of managing integrations.
