Build voice AI apps with LLM Gateway

Learn how LLM Gateway connects speech recognition with LLMs to build intelligent applications. Explore real-world use cases, pricing, and implementation strategies.

The explosion of audio content has created an unprecedented opportunity for businesses. The number of organizations processing audio data continues to grow rapidly, yet most struggle to extract meaningful intelligence from their conversations, meetings, and calls.

Here's the challenge: traditional speech-to-text gives you transcripts, but transcripts alone don't drive business decisions. You need intelligence—summaries that capture key points, answers to specific questions, and structured data that integrates with your existing systems.

This is where Large Language Models (LLMs) transform speech applications from simple transcription tools into intelligent business platforms. But connecting speech recognition to LLMs has historically required complex engineering and significant resources.

In this guide, we'll explore how Voice AI apps are revolutionizing business operations, examine the categories driving real ROI, and show you how to build production-ready applications using AssemblyAI's LLM Gateway.

Voice AI applications transforming business operations

Voice AI apps are software applications that combine speech recognition, Large Language Models, and speech synthesis to understand conversations, extract business insights, and automate workflows. These applications transform raw audio data into actionable intelligence—automatically generating meeting summaries, analyzing sales calls, and extracting structured data from customer interactions.

The transformation is happening across every industry. Healthcare organizations like PatientNotes.app are automating clinical documentation. Legal firms including JusticeText are revolutionizing how they process depositions and court proceedings.

Voice AI apps deliver measurable business impact through automation. Organizations report 40-60% productivity improvements in audio-intensive workflows, with ROI typically achieved within 2-3 months.

Key business transformations include:

- Real-time intelligence: Customer service teams spot issues before escalation

- Data-driven coaching: Sales managers coach based on actual conversation patterns

- Scalable feedback analysis: Product teams understand user feedback at enterprise scale

Categories of Voice AI apps driving ROI

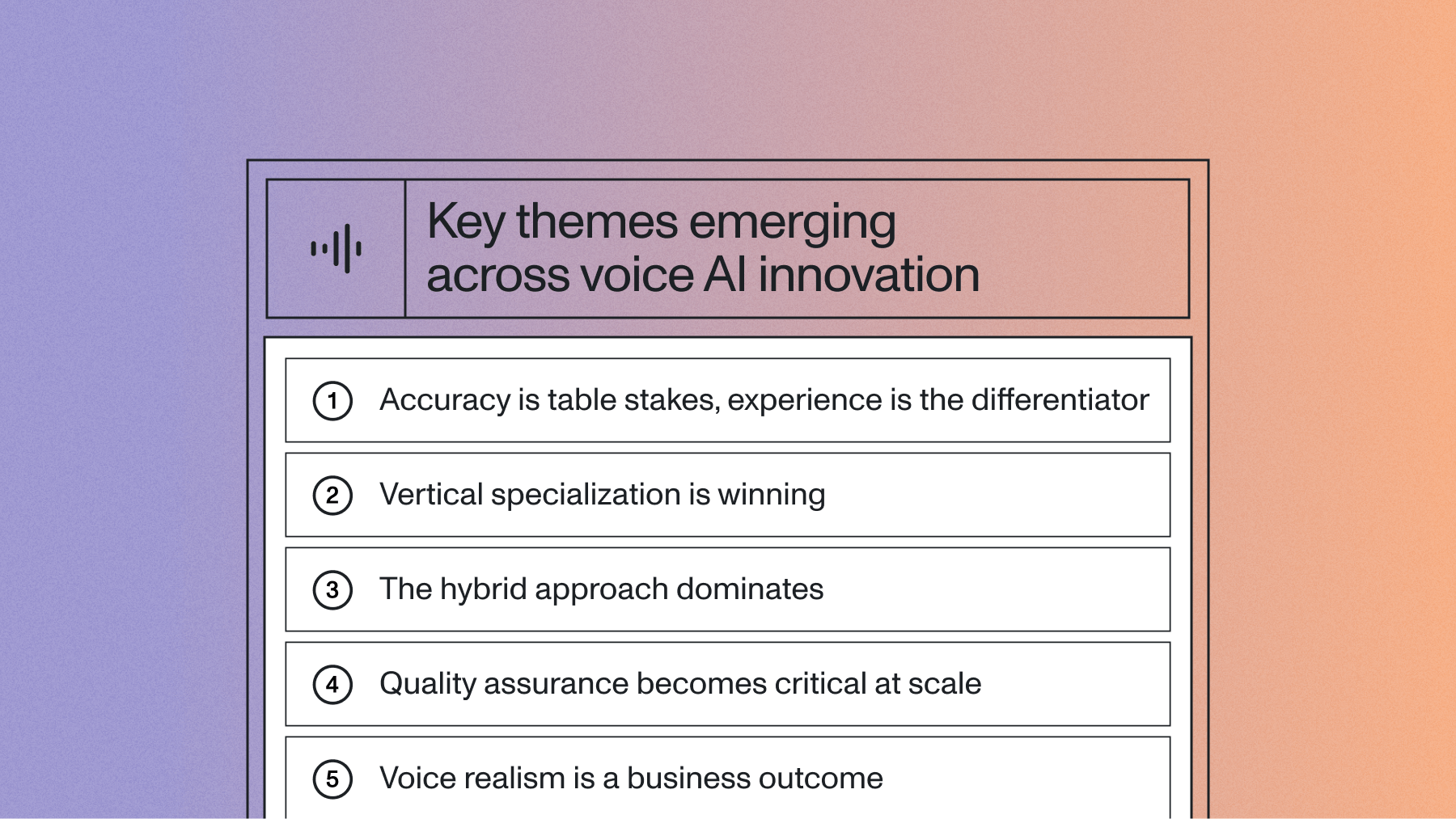

While the possibilities for Voice AI are vast, the most successful implementations fall into several key categories, each addressing specific business challenges with measurable impact.

Meeting intelligence platforms

Companies like Circleback AI and Supernormal are transforming meeting workflows, with a 65% reduction in follow-up time and a 30% increase in action item completion rates.

Business impact includes:

- Improved accountability: Automatic action item tracking and assignment

- Faster decision-making: Instantly searchable discussion points

- Enhanced knowledge transfer: Organizational memory of all meetings

Sales intelligence solutions

Sales intelligence represents one of the fastest-growing Voice AI categories. Platforms built by companies like Clari analyze every customer interaction to provide coaching insights, track deal progression, and identify at-risk opportunities.

These applications excel at pattern recognition. They identify which talk tracks lead to successful outcomes, which objections frequently derail deals, and which competitive mentions require immediate attention. Sales leaders gain visibility that was previously impossible—understanding not just what deals are closing, but why.

Customer service automation

Contact centers are experiencing a Voice AI revolution. Companies like CallSource and Observe.ai are deploying Voice AI to automatically categorize support tickets, identify customer sentiment, and surface quality assurance insights.

The transformation goes beyond operational efficiency. Voice AI enables proactive service—identifying frustrated customers before they churn, spotting product issues before they become widespread, and ensuring consistent service quality across thousands of agents.

Healthcare documentation systems

Healthcare providers face enormous documentation burdens. Voice AI applications from companies like PatientNotes.app and clinical documentation platforms are automating the creation of SOAP notes, extracting diagnostic codes, and ensuring comprehensive record-keeping without sacrificing patient interaction time.

LLM Gateway: Applying LLMs to spoken data

AssemblyAI's LLM Gateway is a framework for applying Large Language Models (LLMs) to spoken data. It provides a unified API to access leading models from providers like Anthropic, OpenAI, and Google, reducing the complexity of building intelligent Voice AI applications.

The typical workflow involves two main steps: first, transcribing your audio to get an accurate text transcript, and second, using that transcript with the LLM Gateway to generate insights.

Application Architecture Flow:

Stages: Audio Input → Speech-to-Text API (/v2/transcript) → Transcript Text → LLM Gateway (/v1/chat/completions) → Structured Output

Data at each stage: Raw Audio → Accurate Transcript → Your Prompt → LLM Analysis → Your Application

This modular approach gives you full control over the process. You can preprocess the transcript, inject custom context, and engineer prompts precisely for your use case before sending the data to your chosen LLM. This lets your team focus on building features that differentiate your product rather than managing multiple complex LLM integrations.
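As a rough illustration of the two-step flow, here is a minimal Python sketch that uses only the standard library. The paths /v2/transcript and /v1/chat/completions come from the flow above. The host names, the Authorization header format, the polling fields (id, status, text, error), and the OpenAI-style response shape are assumptions to verify against the current API reference. The model identifier is left as a parameter because available models vary.

```python
import json
import time
import urllib.request

# Assumed endpoints; verify both against the current AssemblyAI documentation.
STT_URL = "https://api.assemblyai.com/v2/transcript"
GATEWAY_URL = "https://llm-gateway.assemblyai.com/v1/chat/completions"


def call_api(url, api_key, payload=None):
    """Send a request (POST when payload is given, else GET) and decode the JSON reply."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    request = urllib.request.Request(
        url,
        data=data,
        headers={"Authorization": api_key, "Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def build_chat_request(transcript_text, instruction, model):
    """Step 2 payload: your prompt plus the transcript, in chat-completions shape."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": f"{instruction}\n\nTranscript:\n{transcript_text}",
            }
        ],
    }


def analyze_audio(audio_url, instruction, api_key, model, poll_seconds=3.0):
    """Transcribe audio, then send the transcript and a prompt to the LLM Gateway."""
    # Step 1: submit the audio and poll until the transcript is ready.
    job = call_api(STT_URL, api_key, {"audio_url": audio_url})
    while job["status"] not in ("completed", "error"):
        time.sleep(poll_seconds)
        job = call_api(f"{STT_URL}/{job['id']}", api_key)
    if job["status"] == "error":
        raise RuntimeError(job.get("error", "transcription failed"))

    # Step 2: pass the transcript text and your prompt to the gateway.
    reply = call_api(
        GATEWAY_URL, api_key, build_chat_request(job["text"], instruction, model)
    )
    # Assumes an OpenAI-compatible response body.
    return reply["choices"][0]["message"]["content"]
```

Keeping the payload builder separate from the network calls makes it easy to preprocess the transcript or swap prompts without touching the transport code.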

What makes the LLM Gateway particularly powerful is its flexibility. You can apply sophisticated reasoning to transcripts of any length by managing the context you provide to the model, enabling you to extract business-relevant insights from even the longest and most complex conversations.

Core Voice AI capabilities

AssemblyAI provides a suite of capabilities that address the most common speech intelligence use cases. These are available through our Speech-to-Text API and LLM Gateway, offering a flexible way to solve business problems across various industries.

Summarization (Speech Understanding)

The Summarization feature, part of our speech understanding models, transforms lengthy audio content into concise, actionable insights. Unlike generic text summarization, it's designed to understand conversation dynamics. You can enable it with a single parameter in your transcription request.

For example, a 90-minute board meeting becomes a structured summary highlighting decisions made, action items assigned, and key discussion points—formatted specifically for executive review. Sales teams use this to quickly understand prospect calls without listening to entire recordings.

The business impact is immediate: executives report saving hours weekly on meeting follow-ups, while sales teams can review significantly more prospect interactions in the same timeframe.
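A transcription request with summarization enabled might look like the sketch below. The field names (summarization, summary_model, summary_type) and the default values shown are our best understanding of the transcription API rather than something this article specifies, so treat them as assumptions and confirm them in the API reference.

```python
def build_summary_request(audio_url, summary_type="bullets", summary_model="informative"):
    """Build a transcription payload that also asks for a summary.

    Field names and values are assumptions to check against the API reference.
    """
    return {
        "audio_url": audio_url,
        "summarization": True,           # the switch that enables summarization
        "summary_model": summary_model,  # assumed option controlling summary tone
        "summary_type": summary_type,    # assumed option controlling summary format
    }
```

The returned dictionary would be sent as the JSON body of the transcription request.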

Question & answer (LLM Gateway)

LLM Gateway lets you ask specific questions about your audio transcripts and receive precise, contextual answers. This goes far beyond keyword search. After transcribing your audio, you pass the transcript text to the LLM Gateway with a question.

Customer success teams use this to quickly identify why clients are churning by asking questions like "What concerns did the customer raise about our pricing?" or "How did the customer respond to our retention offer?" The model analyzes the conversation context to provide accurate, actionable answers.

Legal teams leverage Q&A to review depositions and client meetings, asking targeted questions about specific topics without manually reviewing hours of recordings.
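One way to phrase a Q&A request is to put the transcript and a numbered list of questions into a single chat message. The sketch below only builds the messages list. The system/user message format assumes the gateway accepts OpenAI-style chat messages, and the instruction wording is illustrative rather than a recommended prompt.

```python
def build_qa_messages(transcript_text, questions):
    """Build chat messages asking the model to answer questions from a transcript."""
    numbered = "\n".join(f"{i}. {q}" for i, q in enumerate(questions, start=1))
    system = (
        "Answer each question using only the transcript provided. "
        "If the transcript does not contain the answer, say so."
    )
    user = f"Transcript:\n{transcript_text}\n\nQuestions:\n{numbered}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]
```

Telling the model to rely only on the transcript, and to say when an answer is missing, is a simple guard against answers that go beyond what was actually said.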

Custom prompts and data extraction (LLM Gateway)

Custom prompts with LLM Gateway provide the ultimate flexibility to extract any specific information from your audio content. This function lets you program the LLM to understand your unique business context and return precisely formatted results, such as JSON.

Healthcare organizations use custom prompts to extract structured medical information from patient consultations for electronic health records. Financial services firms extract compliance-related information from advisor-client meetings, ensuring regulatory requirements are consistently met. This capability also enables powerful call data extraction that integrates directly with business systems like CRMs or project management tools.

The key advantage is customization without complexity—you define what you need in natural language, and the LLM Gateway handles the implementation.
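For structured extraction, the application side typically needs two pieces: a prompt that names the fields, and a parser that validates the reply before it reaches a CRM or other system. The sketch below is one possible approach. The helper names and field names are hypothetical, and it assumes the model may wrap its JSON in extra text.

```python
import json


def build_extraction_prompt(fields):
    """fields maps each output key to a short description of what to extract."""
    lines = [f'- "{name}": {description}' for name, description in fields.items()]
    return (
        "Extract the following fields from the transcript and reply with a single "
        "JSON object and nothing else. Use null for anything not mentioned.\n"
        + "\n".join(lines)
    )


def parse_json_reply(reply_text, required_fields):
    """Pull the JSON object out of a model reply and check that required keys exist."""
    start = reply_text.find("{")
    end = reply_text.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in model reply")
    data = json.loads(reply_text[start : end + 1])  # raises ValueError on bad JSON
    missing = [name for name in required_fields if name not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return data
```

Validating before writing to downstream systems means a malformed reply becomes a retry or a logged error instead of bad data in a customer record.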

Enterprise Voice AI app implementations with proven results

Combining these core capabilities creates powerful applications across multiple industries. Leading organizations are implementing Voice AI solutions that deliver measurable business value.

Meeting intelligence applications

Meeting intelligence platforms like Otter.ai and Fireflies.ai demonstrate the market demand for Voice AI applications. These platforms process millions of meetings monthly, providing summarization, action item tracking, and searchable conversation archives. Companies including Mem and Circleback AI are building on similar technology to deliver enhanced meeting intelligence.

Organizations implementing meeting intelligence solutions report substantial productivity gains: dramatic reduction in meeting follow-up time, improved project coordination, and increased action item completion rates.

The key success factor is customization—generic meeting summaries provide limited value, but summaries formatted for specific business processes drive measurable outcomes.

Sales intelligence applications

Sales intelligence platforms represent one of the fastest-growing applications of Voice AI technology. Platforms like Gong and Chorus.ai have demonstrated how conversation analysis can dramatically improve sales performance.

AssemblyAI's APIs enable similar capabilities for organizations building internal sales intelligence tools:

- Deal analysis: Automatically extract competitor mentions, pricing discussions, and decision-making criteria from sales calls

- Coaching insights: Identify successful conversation patterns and areas for improvement across sales teams

- Pipeline intelligence: Track deal progression signals and risk factors mentioned during prospect conversations

Sales teams using conversation intelligence report higher close rates and shorter sales cycles. The technology delivers rapid return on investment through improved deal conversion alone. Companies like Salesroom and UpdateAI are leveraging these capabilities to transform sales performance.

Customer service solutions

Customer service represents the largest opportunity for Voice AI applications, with contact centers processing billions of interactions annually. The LLM Gateway enables several high-impact use cases:

Call center implementations use the LLM Gateway to automatically categorize support tickets, identify escalation triggers, and extract customer satisfaction indicators. This reduces manual quality assurance workload substantially while improving consistency in issue identification.

Support automation examples include automatically routing calls based on conversation content, generating follow-up summaries for customer records, and identifying training opportunities for support agents.
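Content-based routing can be sketched as a constrained classification: ask the model for exactly one queue name, then normalize the reply and fall back to a default when it does not match. The queue names and helper functions below are hypothetical and only illustrate the pattern.

```python
def build_routing_prompt(queues):
    """Ask the model to choose exactly one queue name from a fixed list."""
    options = ", ".join(queues)
    return (
        "Read the call transcript and reply with exactly one of these "
        f"queue names and nothing else: {options}."
    )


def pick_queue(model_reply, queues, default):
    """Map a model reply onto a known queue, falling back to a default."""
    # Drop whitespace, quotes, and trailing periods the model may add.
    cleaned = model_reply.strip().strip(".\"'").lower()
    for queue in queues:
        if queue.lower() == cleaned:
            return queue
    return default
```

The fallback matters in production: an unexpected reply should send the call to a general queue instead of failing the routing step.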

The business case is compelling: contact centers can achieve significant cost savings through improved efficiency and reduced quality assurance costs. Organizations like Observe.ai and CallSource demonstrate the transformative potential of Voice AI in customer service.

Technical architecture for scalable Voice AI apps

Voice AI applications require three core technical layers working together seamlessly. Understanding this architecture reduces development time and prevents common implementation failures.

Core architecture layers

Speech Recognition Layer: This foundational layer converts raw audio into accurate transcripts. The challenge isn't just accuracy—it's handling diverse accents, background noise, technical terminology, and multiple speakers. Modern speech recognition must also provide speaker diarization to distinguish between different voices in multi-party conversations.

Transcript Processing Layer: Raw transcripts require significant processing before LLM analysis. This layer manages context windows, handles conversation threading, and prepares text for optimal LLM consumption. For long audio files, intelligent chunking strategies ensure the LLM receives relevant context without exceeding token limits.

LLM Analysis Layer: The processed transcript flows to the LLM with carefully crafted prompts. This layer must handle prompt engineering, response parsing, and error recovery. The challenge is maintaining consistency across different types of conversations while allowing for customization based on specific use cases.
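To make the transcript processing layer concrete, the sketch below groups speaker turns into chunks under a word budget, using word count as a rough stand-in for tokens. The input shape (a list of dictionaries with speaker and text keys) is an assumption about what a diarized transcript provides, and the budget should be tuned to the model you choose.

```python
def chunk_utterances(utterances, max_words=1500):
    """Group speaker turns into text chunks of at most max_words words.

    Turns are never split, so a single turn longer than max_words
    becomes its own oversized chunk.
    """
    chunks, current, count = [], [], 0
    for utterance in utterances:
        line = f"Speaker {utterance['speaker']}: {utterance['text']}"
        words = len(line.split())
        # Start a new chunk when adding this turn would exceed the budget.
        if current and count + words > max_words:
            chunks.append("\n".join(current))
            current, count = [], 0
        current.append(line)
        count += words
    if current:
        chunks.append("\n".join(current))
    return chunks
```

Splitting on turn boundaries keeps each speaker's statement intact. Each chunk can then be analyzed separately and the partial results combined in a final LLM call.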

Integration considerations

Beyond the core layers, successful Voice AI apps require robust integration capabilities:

- Audio ingestion: Supporting multiple file formats, streaming protocols, and quality levels

- Queue management: Handling concurrent processing requests without bottlenecks

- Storage optimization: Efficiently managing audio files, transcripts, and analysis results

- API orchestration: Coordinating multiple services while maintaining low latency

- Error handling: Gracefully recovering from failures at any layer
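Queue management and error handling from the list above can be combined in a small worker pattern: a bounded thread pool limits concurrent requests, and each job is retried with exponential backoff before being reported as a failure. This is a minimal sketch with hypothetical function names. A production system would likely use a durable job queue and catch narrower exception types.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def with_retries(fn, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fn(), retrying with exponential backoff; re-raise after the last attempt."""
    for attempt in range(attempts):
        try:
            return fn()
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)


def process_all(items, worker, max_workers=4, **retry_options):
    """Run worker(item) for every item with bounded concurrency.

    Returns (results, failures): two dicts keyed by item.
    """

    def safe(item):
        try:
            return item, with_retries(lambda: worker(item), **retry_options), None
        except Exception as exc:  # a sketch; catch narrower errors in production
            return item, None, exc

    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for item, value, exc in pool.map(safe, items):
            if exc is None:
                results[item] = value
            else:
                failures[item] = exc
    return results, failures
```

Returning failures alongside results lets one bad audio file be logged and retried later without stopping the rest of the batch.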

AssemblyAI's platform provides the core components for this architecture, including a highly accurate Speech-to-Text API and a flexible LLM Gateway. This simplifies the integration of speech and language models, allowing teams to focus on building unique application features rather than managing infrastructure.

Enhanced speech recognition foundation

LLM Gateway builds on AssemblyAI's industry-leading speech recognition models:

Universal: Our production-ready model, ideal for a wide range of applications. It offers an excellent accuracy-to-latency ratio and supports over 99 languages with features like automatic language detection and speaker diarization.

Universal-3 Pro: A prompt-based Speech Language Model offering superior accuracy, especially with domain-specific terminology. It reduces missed entities by leveraging contextual understanding for more precise transcription.

Implementation strategies and best practices

Successfully launching a Voice AI app requires strategic planning beyond technical implementation. Organizations that achieve the best results follow proven patterns that balance ambition with practical constraints.

Start with high-impact use cases

The most successful Voice AI implementations begin with narrowly defined, high-value problems. Instead of attempting to revolutionize entire workflows immediately, focus on specific pain points where automation delivers immediate value.

Consider starting with use cases that have clear success metrics: reducing time spent on meeting notes, improving sales call review efficiency, or automating specific types of customer inquiries. These focused implementations build organizational confidence and provide learnings for broader deployment.

Design for your workflow

Voice AI apps deliver maximum value when integrated seamlessly into existing workflows. This means understanding not just what information to extract, but where it needs to go and how it will be used.

Map your current processes before implementing Voice AI. Identify where manual transcription or review creates bottlenecks. Design your application to deliver insights directly into the tools your teams already use—whether that's Salesforce, Slack, or specialized industry platforms.

Iterate based on user feedback

Voice AI applications improve dramatically through iteration. Start with baseline functionality, gather user feedback, and refine your prompts and processing based on real-world usage.

Pay particular attention to edge cases that emerge during deployment. A sales intelligence app might initially miss certain objection patterns. Build feedback loops that capture these insights and drive continuous improvement.

Scale gradually

Successful Voice AI deployment follows a deliberate scaling pattern:

- Pilot phase: Test with a small group of power users who can provide detailed feedback

- Department rollout: Expand to a full team or department to validate at moderate scale

- Cross-functional deployment: Extend to related teams that can benefit from similar functionality

- Enterprise scale: Full deployment with established best practices and support structures

This graduated approach ensures technical infrastructure scales appropriately while giving teams time to adapt to new workflows and capabilities.

Other resources

Video Tutorials:

- How to Create Speaker-Based Subtitles for Your Videos with AI: Learn advanced audio processing techniques

- Build an AI Voice Translator: Explore multilingual speech applications

- Build An AI Chat Bot In Java: Understand real-time speech processing implementation

These tutorials provide hands-on guidance for implementing advanced Voice AI features, covering everything from basic transcription to complex multi-language applications.

Implementation Guides:

- Getting started with LLM Gateway covers authentication, available models, and first API calls.

- The Apply LLMs to audio files guide shows how to connect transcription with the LLM Gateway.

- LLM Gateway Cookbooks demonstrate proven implementation patterns for specific use cases.

Building production Voice AI apps with AssemblyAI

Speech recognition combined with Large Language Models transforms how organizations extract value from audio content. The combination of AssemblyAI's Speech-to-Text API and LLM Gateway eliminates many of the traditional barriers to building Voice AI applications—technical complexity, integration challenges, and ongoing maintenance requirements.

The business case is clear: organizations implementing Voice AI solutions report substantial improvements in productivity for audio-intensive workflows, with measurable returns typically achieved within months. More importantly, these applications enable new business capabilities that weren't previously feasible.

Whether you're building meeting intelligence tools, sales analytics platforms, or customer service automation, LLM Gateway provides the foundation for turning speech data into a competitive advantage through better customer understanding and data-driven decision making.

Leading companies across industries trust AssemblyAI for their Voice AI implementations. From healthcare innovators like PatientNotes.app to sales intelligence leaders like Clari, organizations choose AssemblyAI for industry-leading accuracy, scalable infrastructure, and comprehensive developer support.
