What is real-time speech to text?

Real-time speech to text converts spoken words into accurate text instantly for live captions, meeting transcription, and voice commands as you speak.

Real-time speech-to-text converts spoken words into written text as you speak, delivering transcriptions within milliseconds rather than waiting for complete recordings to process. This streaming approach processes audio continuously in small chunks, enabling live captions on video calls, instant voice commands, and meeting notes that appear as conversations unfold.

Understanding how real-time transcription works becomes essential as voice interfaces and live collaboration tools reshape how we interact with technology. This article explains the core concepts behind streaming speech recognition, from audio capture and processing to speaker identification, while covering practical implementation options and performance considerations that determine success in real-world applications.

What is real-time speech-to-text

Real-time speech-to-text converts spoken words into written text as you speak, rather than waiting for a complete recording. This means you see text appearing on your screen within milliseconds of speaking, instead of uploading an audio file and waiting minutes for results.

Unlike batch processing, which analyzes entire recordings at once, real-time systems process small chunks of audio continuously. Think of the difference between dictating a letter to someone who writes as you speak versus recording your entire message and handing them the tape afterward.

This technology powers the live captions you see on Zoom calls, the voice commands your phone responds to instantly, and the meeting notes that appear as your colleagues talk. You might also hear it called streaming speech recognition or live transcription.

The key advantage isn't just speed; it's the ability to interact with the transcription as it happens. You can correct mistakes in real time, search through what's already been said, or trigger actions based on specific words without waiting for the conversation to end.

How streaming speech recognition works

Streaming speech recognition turns your voice into text through three connected stages that run concurrently as a pipeline. While new audio is still being captured, earlier audio is already being transcribed and labeled, so each stage feeds the next without waiting for the recording to finish.

Audio capture and streaming

Your microphone captures sound waves and immediately converts them into digital data. This happens continuously—not in a single burst like traditional recording. The system encodes this audio into small chunks, typically lasting 20–100 milliseconds each.

These audio chunks stream directly to the speech recognition service without waiting for silence or sentence breaks. It's like a constant flow of data rather than discrete files. The connection stays open throughout your conversation, creating a pipeline where audio flows up and text results flow back down.
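The chunking described above can be sketched in a few lines. This is a minimal illustration, assuming 16 kHz, 16-bit mono PCM (a common capture format, but an assumption here) and a 50 ms chunk; the names `chunk_size_bytes` and `iter_chunks` are hypothetical and not part of any provider's SDK.

```python
from typing import Iterator

SAMPLE_RATE_HZ = 16_000   # assumed capture rate
BYTES_PER_SAMPLE = 2      # assumed 16-bit PCM
CHANNELS = 1              # assumed mono


def chunk_size_bytes(chunk_ms: int) -> int:
    """Number of bytes of raw PCM in one chunk of the given duration."""
    samples = SAMPLE_RATE_HZ * chunk_ms // 1000
    return samples * BYTES_PER_SAMPLE * CHANNELS


def iter_chunks(pcm: bytes, chunk_ms: int = 50) -> Iterator[bytes]:
    """Yield consecutive fixed-duration chunks; the last one may be shorter."""
    size = chunk_size_bytes(chunk_ms)
    for start in range(0, len(pcm), size):
        yield pcm[start:start + size]


# One second of silence stands in for microphone input.
one_second = bytes(SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * CHANNELS)
chunks = list(iter_chunks(one_second, chunk_ms=50))
print(len(chunks), len(chunks[0]))  # 20 1600
```

In a live system the chunks would come from the microphone driver rather than a byte string, and each one would be sent over the open connection as soon as it is produced.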

The quality of this initial capture affects everything downstream. Clear audio from a good headset microphone produces much better results than a laptop's built-in mic picking up room noise.

Real-time processing and partial results

As each audio chunk arrives, the AI model starts working immediately. It doesn't wait to hear your complete sentence before making predictions about what you're saying. You'll see partial results appear almost instantly—maybe "The weather" followed by "The weather today" as you continue speaking.

These early guesses aren't final. The system refines them as more audio arrives and it understands more context. A partial result like "to" might change to "two" or "too" once the system hears the surrounding words.

The AI model balances speed with accuracy by making intelligent predictions based on:

- Sound patterns: What phonemes (basic speech sounds) it detects

- Language modeling: Which word sequences make sense in English

- Context awareness: What's been said earlier in the conversation

- Confidence scoring: How certain it is about each word
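On the client side, the partial-then-final behavior described above usually means keeping finalized text separate from the current guess, which gets overwritten on every update. The sketch below shows one way to do that; the `Result` type and its `is_final` flag are hypothetical stand-ins for whatever message shape a given service returns.

```python
from dataclasses import dataclass, field


@dataclass
class Result:
    text: str
    is_final: bool  # hypothetical flag; real services name this differently


@dataclass
class LiveTranscript:
    finalized: list[str] = field(default_factory=list)
    partial: str = ""

    def apply(self, result: Result) -> None:
        """Final results are appended; partial results replace the last guess."""
        if result.is_final:
            self.finalized.append(result.text)
            self.partial = ""
        else:
            self.partial = result.text

    def display(self) -> str:
        parts = self.finalized + ([self.partial] if self.partial else [])
        return " ".join(parts)


transcript = LiveTranscript()
for event in [
    Result("The weather", False),
    Result("The weather today", False),
    Result("The weather today is sunny.", True),
    Result("I need to", False),            # early guess
    Result("I need two tickets.", True),   # corrected once context arrives
]:
    transcript.apply(event)
    print(transcript.display())
```

Because only the partial slot is ever overwritten, text that has been finalized never flickers on screen.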

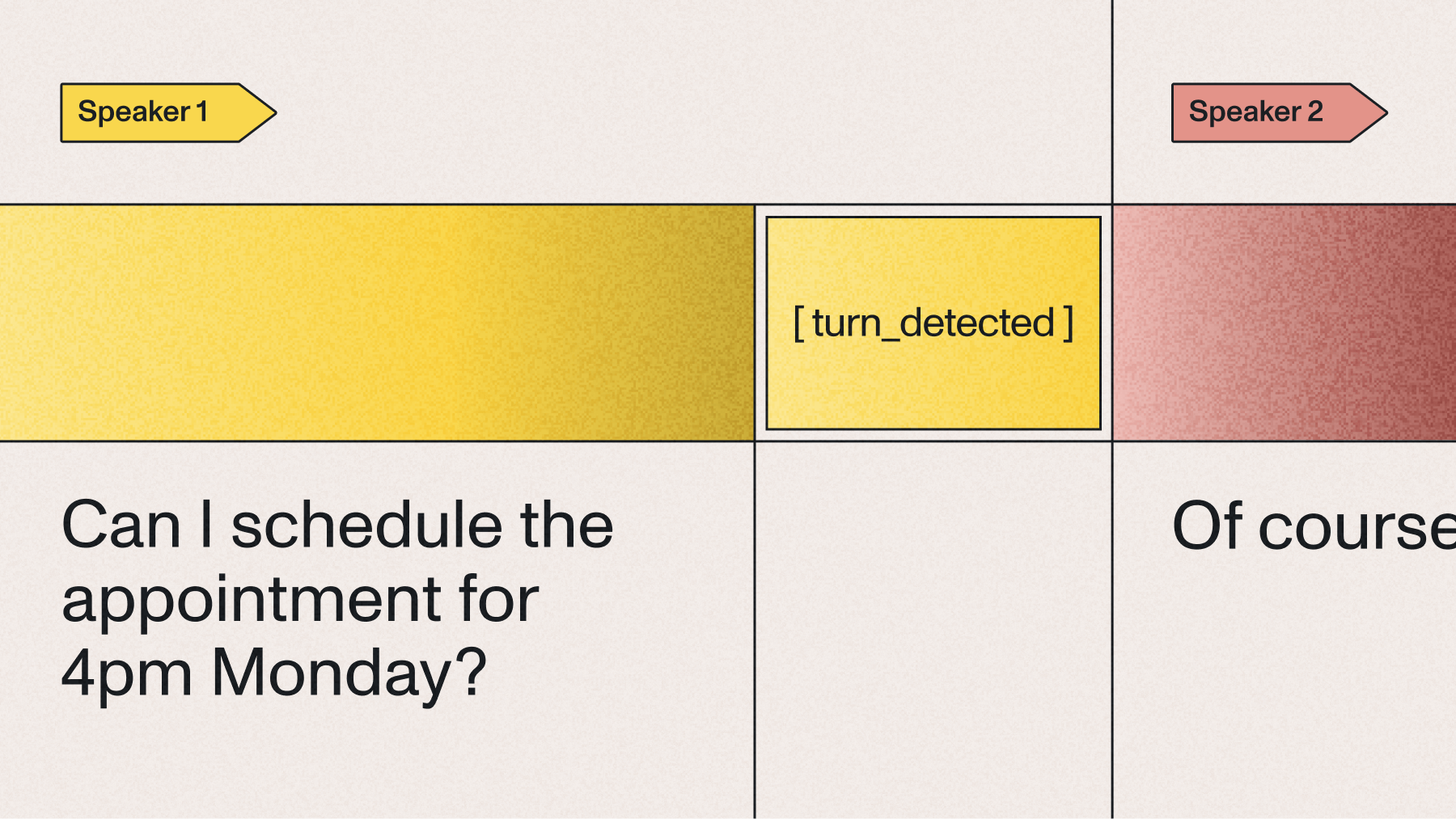

Speaker identification and timestamps

In a streaming setting, one way to accomplish speaker diarization (labeling who said what) is multichannel audio, meaning a separate audio stream for each speaker. A separate session is created for each speaker, and a single transcript is assembled from those sessions. This means your meeting transcript shows who said what, not just a wall of mixed-up text from everyone talking.

When using multichannel audio, the system tracks each speaker through their dedicated audio channel. This approach provides reliable speaker separation throughout the conversation.

Word-level timestamps accompany each piece of text, marking exactly when each word was spoken. This precision enables synchronized captions that match lip movement in videos or lets you jump to specific moments in long recordings.
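To make the multichannel approach concrete, here is a rough sketch of how per-speaker word lists with timestamps might be merged into a single speaker-labeled transcript. It assumes each channel's session yields words with start and end times in milliseconds on a shared clock; the `Word` type and `merge_channels` function are illustrative names, not a provider API.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start_ms: int
    end_ms: int


def merge_channels(channels: dict[str, list[Word]]) -> list[tuple[str, int, str]]:
    """Merge per-speaker word lists into (speaker, start_ms, text) turns."""
    tagged = [
        (word.start_ms, speaker, word)
        for speaker, words in channels.items()
        for word in words
    ]
    tagged.sort(key=lambda item: item[0])  # order all words by start time

    turns: list[tuple[str, int, str]] = []
    for start_ms, speaker, word in tagged:
        if turns and turns[-1][0] == speaker:
            # Same speaker keeps talking: extend the current turn.
            prev_speaker, prev_start, prev_text = turns[-1]
            turns[-1] = (prev_speaker, prev_start, prev_text + " " + word.text)
        else:
            turns.append((speaker, start_ms, word.text))
    return turns


channels = {
    "Alice": [Word("Shall", 0, 300), Word("we", 320, 450),
              Word("start?", 470, 900), Word("Great.", 2100, 2500)],
    "Bob": [Word("Yes,", 1000, 1300), Word("let's", 1320, 1600),
            Word("go.", 1620, 1900)],
}
for speaker, start_ms, text in merge_channels(channels):
    print(f"[{start_ms / 1000:.2f}s] {speaker}: {text}")
```

Sorting by start time is the simplest merge rule. Overlapping speech would interleave words from both speakers, so a production system would likely merge at the sentence or turn level instead.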

Common use cases and applications

Real-time speech-to-text serves different needs across various scenarios, each with specific requirements that shape how the technology gets implemented.

Live captions and accessibility

Live captioning provides essential access for people who are deaf or hard of hearing. Instead of missing out on spoken content, they see synchronized text appearing as people speak. This application demands high accuracy and proper formatting since errors can change meaning significantly.

Major broadcasters use real-time transcription for live news, sports commentary, and unscripted events where traditional closed captions can't be prepared in advance. Video conferencing platforms like Zoom and Google Meet now integrate this natively, making meetings more inclusive.

The technology handles challenges like multiple speakers, background music, and varying audio quality. Professional captioning services often combine AI transcription with human oversight to ensure accuracy for critical applications.

Key requirements for live captions:

- Low latency: Text appears within 2–3 seconds of speech

- Speaker identification: Clear labels for who's talking

- Proper formatting: Punctuation, capitalization, and line breaks

- Error correction: Ability to fix mistakes in real-time

Meeting transcription and collaboration

Real-time meeting transcription transforms how teams capture and use conversation data. Instead of assigning someone to take notes, participants focus on discussion while the system creates searchable, timestamped records automatically.

These transcripts become living documents where teams can search for specific topics, extract action items, or review decisions made weeks ago. Integration with collaboration platforms means transcripts appear directly in Slack channels or Microsoft Teams, making information instantly accessible.

The real power comes from making spoken information searchable and shareable. You can find that product spec discussion from three meetings ago or share exact quotes from a client call without rewatching hours of recordings.
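As a small illustration of what "searchable" means in practice, the sketch below runs a case-insensitive keyword search over timestamped transcript segments. The `Segment` structure and the sample data are invented for the example; a real transcript store would have its own schema.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    speaker: str
    start_s: float   # seconds from the start of the meeting
    text: str


def search(segments: list[Segment], query: str) -> list[Segment]:
    """Return segments whose text contains the query, ignoring case."""
    needle = query.casefold()
    return [seg for seg in segments if needle in seg.text.casefold()]


def format_hit(seg: Segment) -> str:
    minutes, seconds = divmod(int(seg.start_s), 60)
    return f"[{minutes:02d}:{seconds:02d}] {seg.speaker}: {seg.text}"


meeting = [
    Segment("Dana", 12.0, "Let's review last week's action items."),
    Segment("Lee", 725.4, "The product spec still needs a latency target."),
    Segment("Dana", 790.0, "Agreed, I'll add it by Friday."),
]
for hit in search(meeting, "Product Spec"):
    print(format_hit(hit))  # [12:05] Lee: The product spec still needs a latency target.
```

The timestamp on each hit is what lets a user jump straight to that moment in the recording instead of rewatching it.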

Voice assistants and commands

Voice assistants rely on real-time speech-to-text as their foundation technology. When you say "Hey Siri, set a timer for 10 minutes," the system must transcribe your words instantly to trigger the appropriate action.

This application demands the lowest latency possible—users expect near-instant responses to voice commands. Even delays of half a second feel sluggish and break the natural interaction flow.
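Once the words are transcribed, triggering an action comes down to matching the text against known command patterns. The following is a deliberately simple sketch using a regular expression for the timer example; it assumes the transcript renders numbers as digits ("10" rather than "ten"), and real assistants use far more robust intent parsing than this.

```python
import re
from typing import Optional

TIMER_PATTERN = re.compile(
    r"\bset a timer for (\d+) (second|minute|hour)s?\b", re.IGNORECASE
)
SECONDS_PER_UNIT = {"second": 1, "minute": 60, "hour": 3600}


def parse_timer_command(transcript: str) -> Optional[int]:
    """Return the timer duration in seconds, or None if no command is found."""
    match = TIMER_PATTERN.search(transcript)
    if match is None:
        return None
    amount = int(match.group(1))
    unit = match.group(2).lower()
    return amount * SECONDS_PER_UNIT[unit]


print(parse_timer_command("Hey Siri, set a timer for 10 minutes"))  # 600
print(parse_timer_command("What's the weather today?"))             # None
```

Running the match on partial results, not only final ones, is one way to shave latency, at the cost of occasionally acting on a guess that later changes.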

Beyond consumer assistants, enterprise applications use voice commands for hands-free operation:

- Warehouse workers updating inventory while handling packages

- Surgeons accessing patient records during operations

- Factory technicians controlling machinery while their hands stay busy

Performance requirements and technical considerations

Different applications need different levels of performance from real-time speech-to-text systems. Understanding these trade-offs helps you choose the right solution and set realistic expectations.

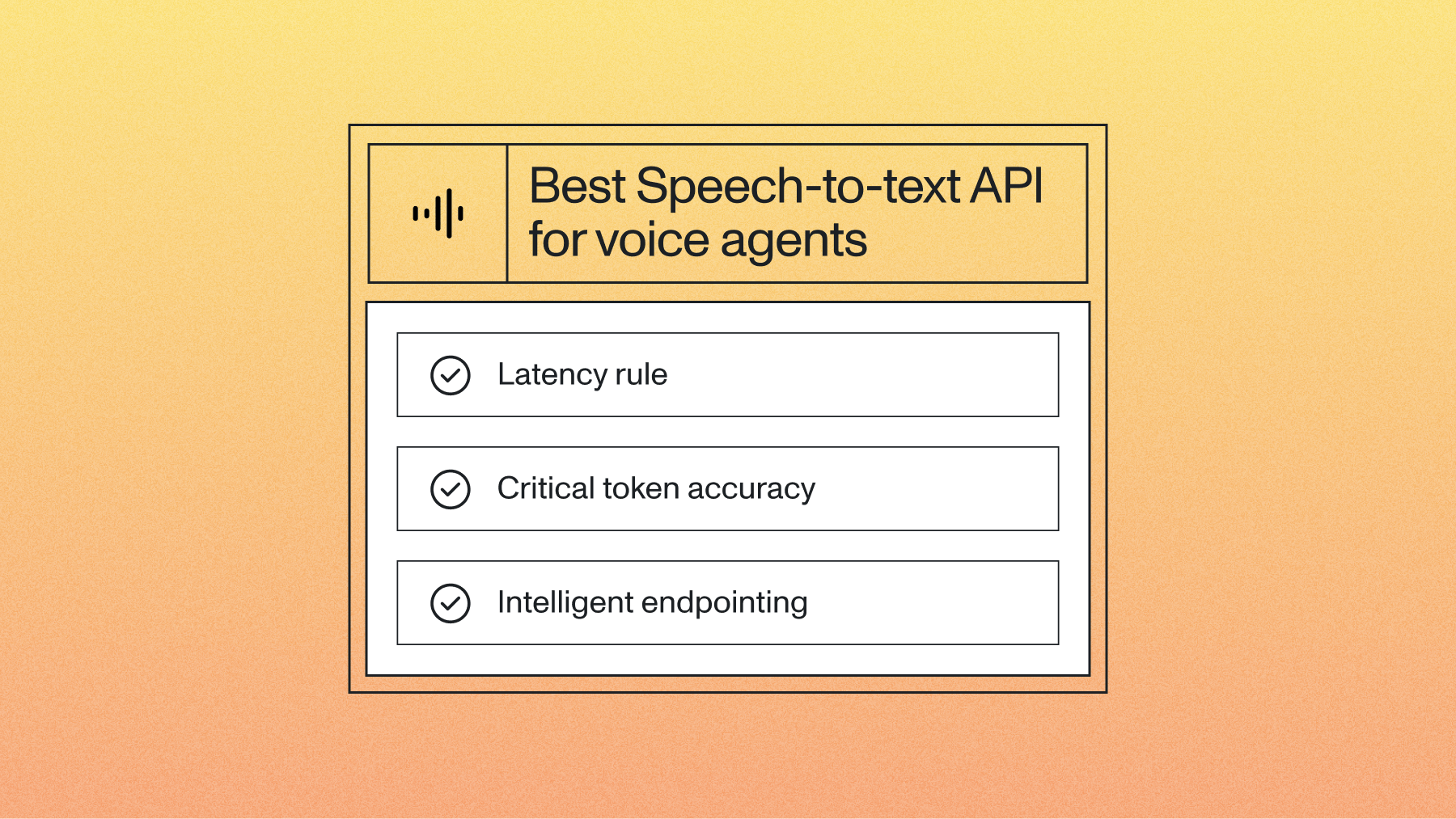

Latency and response time

Latency measures the delay between speaking and seeing text appear. For real-time applications, this delay needs to feel instantaneous—typically under 500 milliseconds for the first partial results.

Voice assistants need the fastest response times since users expect immediate feedback to commands. Meeting transcription can tolerate slightly longer delays if it means higher accuracy. Live captioning falls somewhere in between, prioritizing readability while maintaining reasonable speed.

Network conditions affect latency significantly. A strong internet connection keeps delays minimal, while poor connectivity can add several seconds of lag. Some systems buffer audio locally to maintain smooth operation during network hiccups.
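Local buffering can be as simple as a bounded queue that holds recent chunks while the connection is down and flushes them when it recovers. The sketch below is one possible design, not a description of how any particular product does it; `AudioBuffer` is a hypothetical name, and dropping the oldest audio when full is an assumed policy.

```python
from collections import deque


class AudioBuffer:
    """Holds the most recent audio chunks while the network is unavailable."""

    def __init__(self, max_chunks: int) -> None:
        # A deque with maxlen silently discards the oldest item when full.
        self._chunks: deque[bytes] = deque(maxlen=max_chunks)

    def push(self, chunk: bytes) -> None:
        self._chunks.append(chunk)

    def drain(self) -> list[bytes]:
        """Return buffered chunks in arrival order and empty the buffer."""
        pending = list(self._chunks)
        self._chunks.clear()
        return pending


buffer = AudioBuffer(max_chunks=3)
for chunk in (b"a", b"b", b"c", b"d"):   # connection is down; keep capturing
    buffer.push(chunk)
print(buffer.drain())  # [b'b', b'c', b'd']
```

The buffer size sets the trade-off: a larger buffer survives longer outages but adds more catch-up delay once the connection returns.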

The processing happens in stages:

- Partial results: First guesses appear in 200–300 milliseconds

- Refined results: Updates arrive as more context becomes available

- Final results: Finalized text with proper formatting in 1–3 seconds
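To check whether a system actually meets targets like these, you can log when each stretch of audio was spoken and when its text arrived, then summarize the gaps. The sketch below assumes you already have those timestamp pairs in milliseconds; the sample numbers are made up for illustration, and the 95th percentile uses the simple nearest-rank method.

```python
import math
from statistics import median


def latencies_ms(events: list[tuple[int, int]]) -> list[int]:
    """Each event is (spoken_at_ms, text_received_at_ms)."""
    return [received - spoken for spoken, received in events]


def summarize(latencies: list[int]) -> dict[str, float]:
    ordered = sorted(latencies)
    # Nearest-rank 95th percentile.
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    return {
        "median": median(ordered),
        "p95": ordered[p95_index],
        "max": ordered[-1],
    }


# Invented sample: four partial results and when they arrived.
partial_events = [(1000, 1240), (1500, 1760), (2000, 2210), (2500, 2980)]
stats = summarize(latencies_ms(partial_events))
print(stats)
print("within 500 ms budget:", stats["max"] <= 500)
```

Looking at the tail (p95 or max) matters more than the average, since occasional slow updates are what users notice.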

Accuracy in real-world conditions

Real conversations rarely match the clean audio used in lab tests. Background noise, multiple people talking, strong accents, and technical jargon all challenge transcription accuracy.

Modern systems achieve very high accuracy in good conditions but performance drops with challenging audio. The key is understanding these limitations and choosing systems that handle your specific environment well.

Common accuracy challenges include:

- Background noise: Coffee shops, busy offices, or outdoor environments

- Overlapping speech: Multiple people talking simultaneously

- Accent variation: Different regional or international pronunciations

- Technical vocabulary: Industry-specific terms or proper nouns

- Audio quality: Phone calls, poor microphones, or compressed audio

Systems handle these challenges through specialized training, noise suppression, and context-aware processing. Some providers offer custom models trained on specific industries or audio conditions.
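When comparing systems on your own audio, accuracy is commonly quantified as word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the system's output into a reference transcript, divided by the reference length. The article does not name a metric, so treat this as one standard option. The sketch below computes it with a word-level edit distance and does only minimal normalization (lowercasing and whitespace splitting).

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = word-level edit distance / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing from hypothesis
                curr[j - 1] + 1,     # extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


reference = "I need two tickets to Boston"
hypothesis = "I need to tickets to Boston"
print(f"{word_error_rate(reference, hypothesis):.3f}")  # 0.167
```

Measuring WER on recordings from your actual environment (your microphones, accents, and vocabulary) is more informative than relying on published benchmark numbers.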

Implementation options and provider selection

There are several paths for implementing real-time speech-to-text, each suited to different needs and technical capabilities. Two of the most common are cloud service APIs and dedicated transcription applications.

Cloud service APIs

Cloud APIs offer the most flexibility for developers building custom applications. Services from Google Cloud, Microsoft Azure, AWS, and specialized providers like AssemblyAI provide robust APIs that handle the complexity of speech recognition infrastructure.

These services offer multiple model options optimized for different scenarios—conversation models for meetings, command models for voice interfaces, or phone call models for customer service applications. Most support dozens of languages and provide additional features like speaker diarization.

Implementation requires handling API authentication, managing streaming connections, and processing results in your application. Most providers offer software development kits (SDKs) in popular programming languages that simplify integration.
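The core of managing a streaming connection is running two loops at once: one sending audio up, one reading results back. The sketch below shows that shape with `asyncio`, using in-memory queues and a fake service in place of a real network connection. Everything here (`send_audio`, `receive_results`, `fake_service`, the `None` end-of-stream marker) is an assumption made for illustration; with a real provider you would swap the queues for its SDK or WebSocket connection and add authentication.

```python
import asyncio


async def send_audio(chunks, outbound: asyncio.Queue) -> None:
    """Push audio chunks upstream, then signal end of stream with None."""
    for chunk in chunks:
        await outbound.put(chunk)
    await outbound.put(None)


async def receive_results(inbound: asyncio.Queue, on_result) -> None:
    """Hand each result to the callback until the service signals completion."""
    while True:
        result = await inbound.get()
        if result is None:
            break
        on_result(result)


async def fake_service(outbound: asyncio.Queue, inbound: asyncio.Queue) -> None:
    """Stand-in for a real provider: reports how many bytes it has received."""
    total = 0
    while True:
        chunk = await outbound.get()
        if chunk is None:
            break
        total += len(chunk)
        await inbound.put(f"received {total} bytes")
    await inbound.put(None)


async def run_session(chunks) -> list[str]:
    outbound, inbound = asyncio.Queue(), asyncio.Queue()
    results: list[str] = []
    # Sending and receiving run concurrently, as on a real streaming connection.
    await asyncio.gather(
        send_audio(chunks, outbound),
        fake_service(outbound, inbound),
        receive_results(inbound, results.append),
    )
    return results


results = asyncio.run(run_session([b"\x00" * 1600] * 3))
print(results)
```

Keeping the send and receive loops independent means a slow result never blocks audio upload, which is what keeps latency low.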

Benefits of cloud APIs:

- Scalability: Handle thousands of simultaneous users

- Reliability: Enterprise-grade uptime and redundancy

- Features: Advanced capabilities like speaker identification

- Updates: Continuous model improvements without code changes

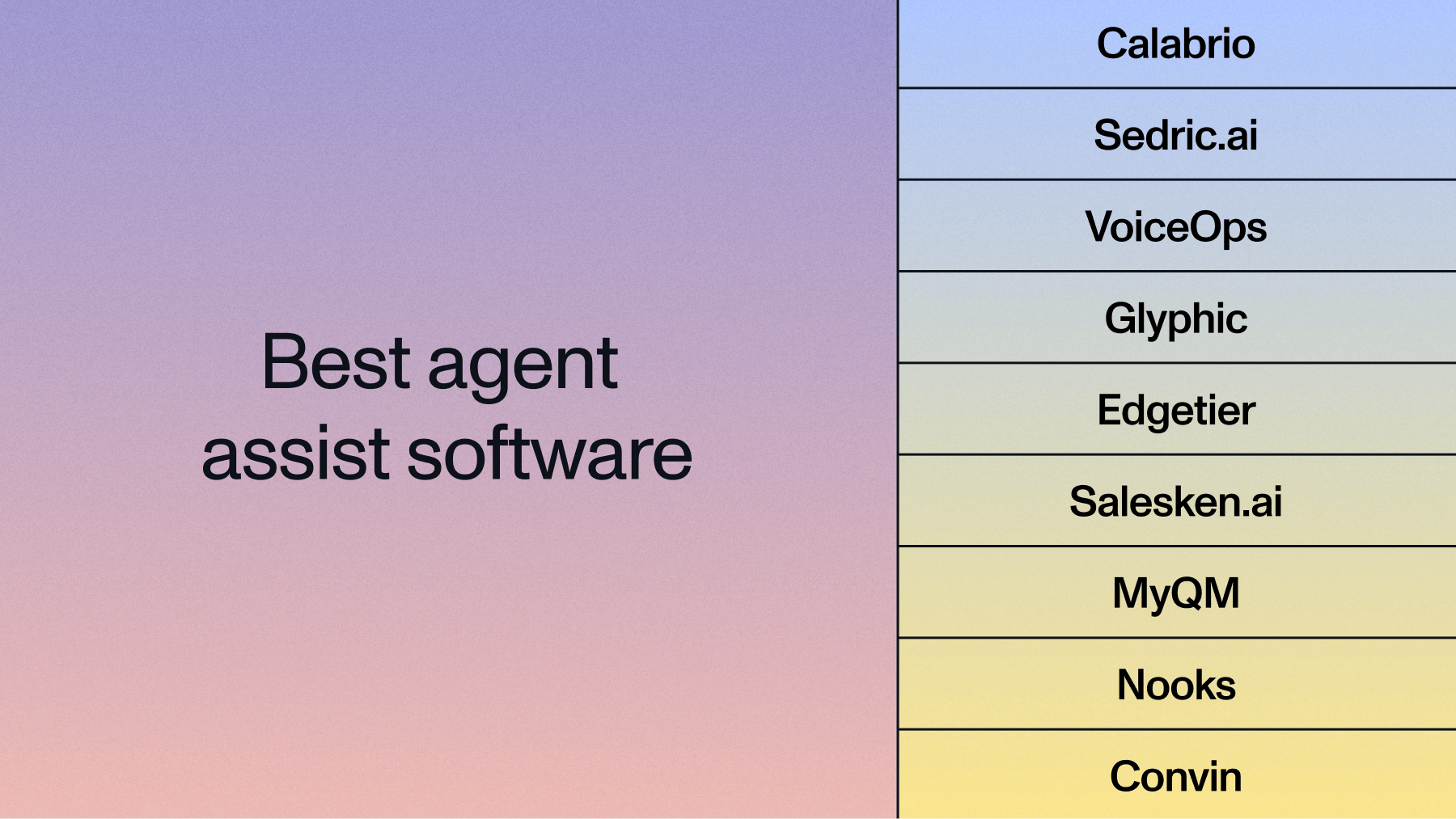

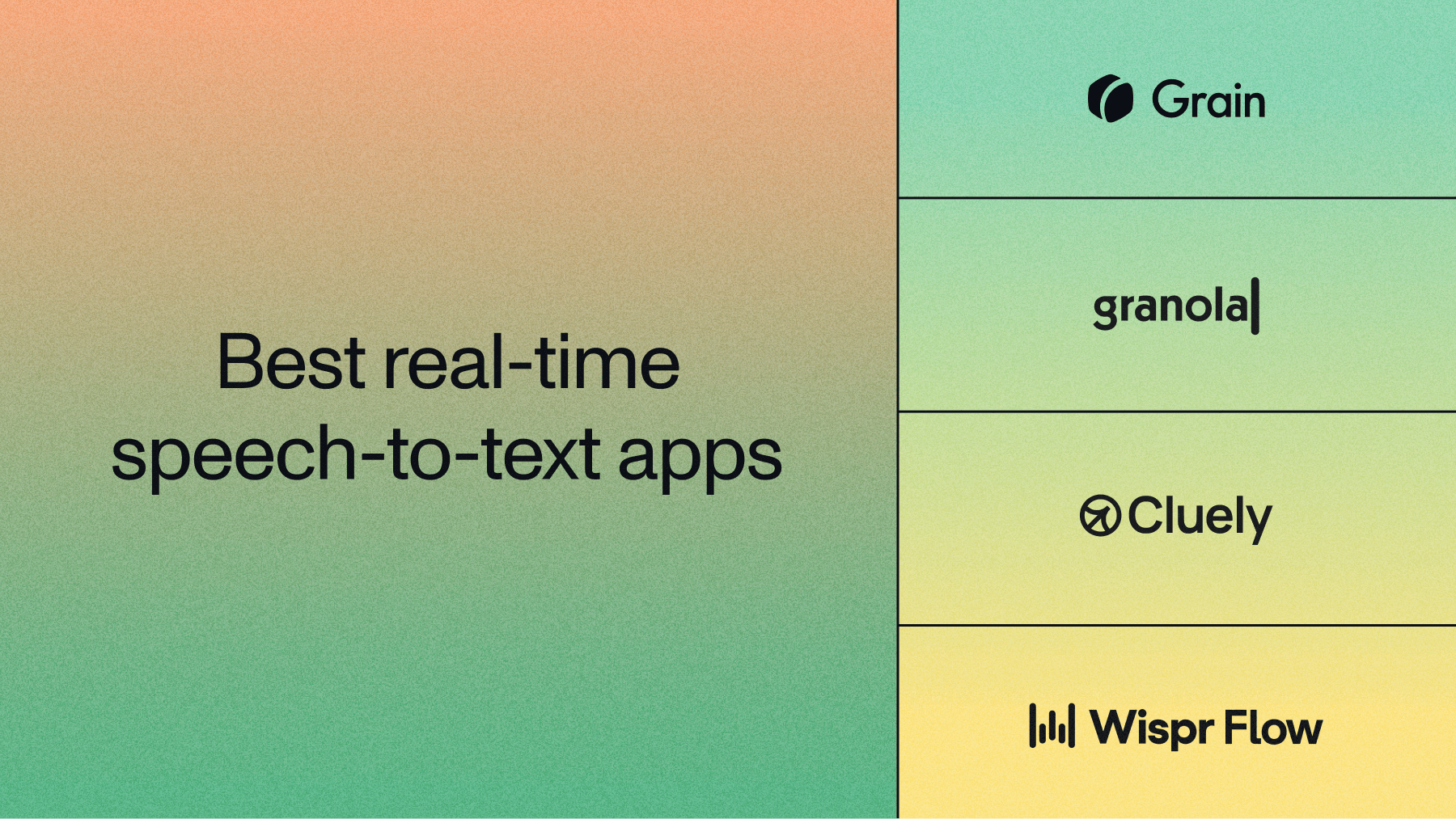

Dedicated transcription applications

Ready-to-use applications provide immediate access to real-time transcription without any development work. Apps like Otter.ai or browser-based tools like Speechnotes work out of the box for personal or small team use.

These applications excel at their specific use cases but offer limited customization. They're perfect for individual users needing quick transcription access or teams wanting to test transcription quality before committing to custom development.

Popular dedicated applications include:

- Live Transcribe on Android for accessibility

- Otter.ai for meeting transcription

- Speechnotes for browser-based dictation

Final words

Real-time speech-to-text transforms live conversations into immediate, searchable text by processing audio in small chunks as you speak. The technology balances speed with accuracy, delivering partial results within milliseconds while refining them as more context arrives, enabling applications from live captions to voice-powered interfaces.

AssemblyAI's Universal-Streaming model provides highly accurate real-time transcription with sub-second latency through advanced Voice AI models. The platform handles challenging audio conditions, identifies multiple speakers, and integrates easily into applications through simple APIs, making it straightforward for developers to add professional-grade speech-to-text capabilities to their products.

FAQ

How accurate is real-time speech-to-text with background noise?

Modern systems maintain good accuracy in moderate background noise by using specialized noise suppression and acoustic models trained on diverse audio conditions. Very noisy environments like construction sites or loud restaurants still present challenges.

What happens when multiple people talk at the same time?

Advanced systems can detect overlapping speech and identify which words came from which speaker, though accuracy decreases when people speak simultaneously. The system typically processes the clearest voice while noting that overlap occurred.

Can real-time speech-to-text work with phone call audio quality?

Yes, many providers offer models specifically optimized for phone audio that handle the compressed, limited-bandwidth conditions typical of phone calls. These models expect lower audio quality and compensate accordingly.

Which programming languages support real-time speech-to-text integration?

Most cloud providers offer SDKs for popular languages including Python, JavaScript, Java, C#, and Go. AssemblyAI's Streaming Speech-to-Text streams audio over WebSockets, so any language with a WebSocket client library can also integrate directly.

How much internet bandwidth does streaming transcription require?

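Bandwidth depends on the audio encoding. Compressed or telephone-quality audio typically needs roughly 50–100 kbps for upload, plus minimal bandwidth for text results, which is comparable to a voice call and works well on most broadband connections. Uncompressed 16 kHz, 16-bit mono PCM needs about 256 kbps.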
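The upload rate for raw audio follows directly from the encoding: sample rate times bits per sample times channels. The short calculation below works through two common formats as a sanity check. It covers uncompressed audio only; compressed codecs need less, and the function name is just for illustration.

```python
def pcm_bitrate_kbps(sample_rate_hz: int, bits_per_sample: int, channels: int = 1) -> float:
    """Upload rate for uncompressed audio, in kilobits per second."""
    return sample_rate_hz * bits_per_sample * channels / 1000


# Telephone-quality audio: 8 kHz, 8 bits per sample, mono.
print(pcm_bitrate_kbps(8_000, 8))    # 64.0

# Wideband raw PCM: 16 kHz, 16 bits per sample, mono.
print(pcm_bitrate_kbps(16_000, 16))  # 256.0
```

If your connection is constrained, the encoding you send is the main lever: telephone-quality or compressed audio stays well under 100 kbps, while raw wideband PCM does not.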
