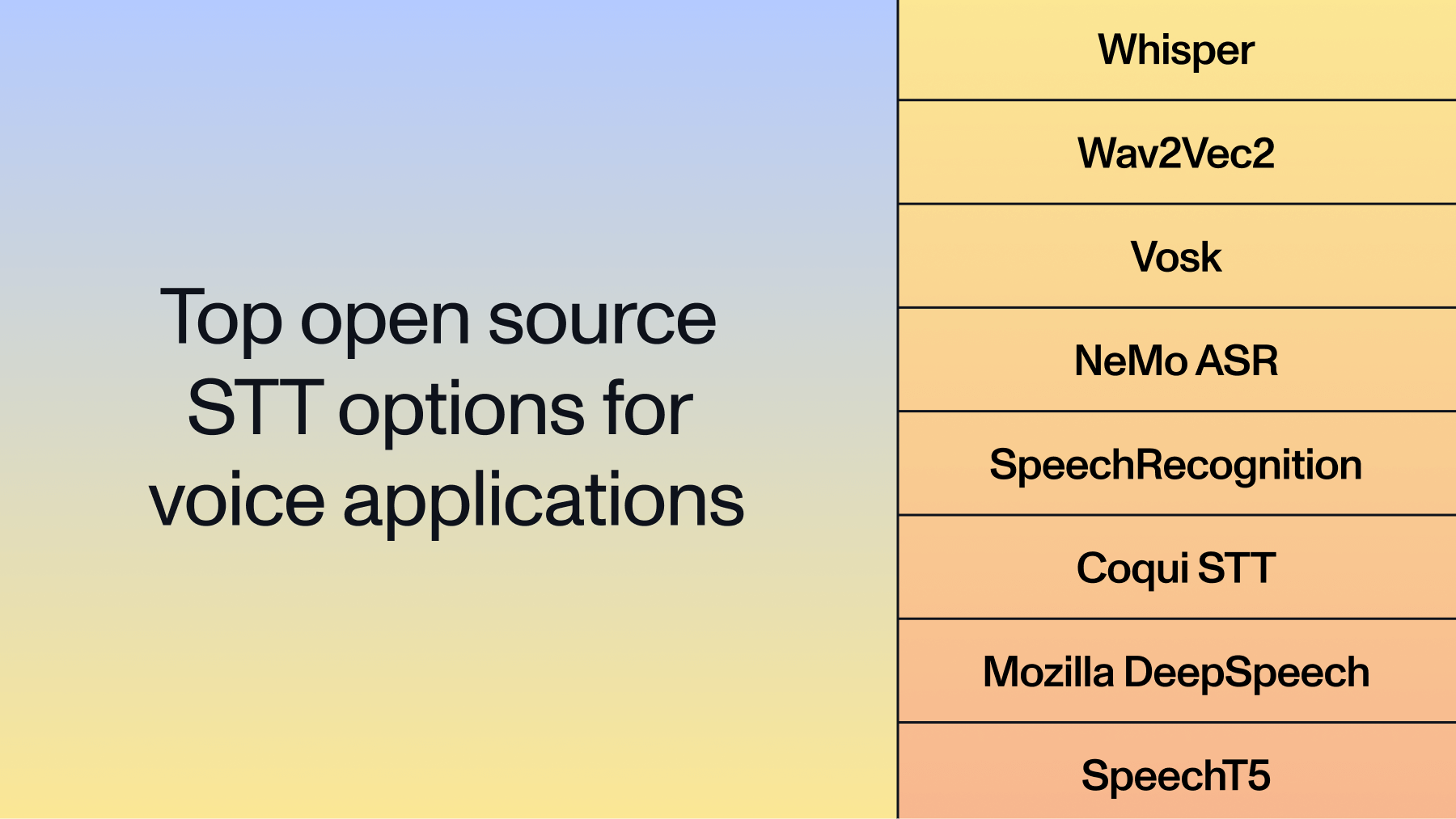

Top 8 open source STT options for voice applications in 2025

This comprehensive comparison examines eight open source STT solutions, analyzing their technical capabilities, implementation requirements, and ideal use cases to help you build voice applications from scratch.

Choosing an open source STT (speech-to-text) model today means weighing trade-offs in accuracy, real-time performance, language support, and deployment complexity, and every option requires substantial development work before it is production-ready. Some excel at offline processing, others dominate streaming scenarios, and a few offer extensive customization for specific domains.

Understanding open source STT requirements

Modern voice applications demand more than basic transcription. They need systems that handle real-world audio conditions while maintaining acceptable performance across diverse hardware environments.

Accuracy under pressure separates production-ready models from academic experiments. Real applications encounter background noise, overlapping speakers, varied accents, and technical terminology. The best open source solutions maintain performance despite these challenges.

Resource efficiency impacts deployment costs and scalability. Some models demand high-end GPUs, others run efficiently on standard CPUs, and a few operate on edge devices with minimal resources.

Customization capability enables domain-specific optimization. Healthcare applications need medical terminology accuracy, while customer service requires emotional tone detection. The most valuable solutions support fine-tuning for specialized use cases.

Technical comparison matrix

The following table compares key performance metrics across eight open source STT solutions. WER (Word Error Rate) indicates transcription errors—lower percentages mean better accuracy. Model size affects memory requirements and inference speed, while hardware requirements determine deployment flexibility.
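For reference, WER is conventionally defined as the word-level edit distance (substitutions, deletions, and insertions) divided by the number of words in the reference transcript. The sketch below is a minimal implementation of that definition; the function name `word_error_rate` is illustrative, and the only text normalization assumed here is lowercasing and whitespace splitting, so punctuation differences count as errors.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = word-level edit distance / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] = edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One substituted word out of six reference words.
    print(word_error_rate("the cat sat on the mat", "the cat sat on a mat"))
```

Because the denominator is the reference length, WER can exceed 100% when the hypothesis contains many inserted words.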

Use case recommendation chart

Detailed solution analysis

1. OpenAI Whisper

Architecture: Transformer-based encoder-decoder with attention mechanisms

Training data: 680,000 hours of multilingual audio from the web

Whisper's robustness comes from massive, diverse training data. The model handles accented speech, background noise, and technical terminology well. Its multilingual capability works out of the box: it transcribes the languages covered by its training data without additional fine-tuning.

Strengths:

- Strong WER performance (10-30%) across challenging audio conditions

- Built-in punctuation, capitalization, and timestamp generation

- Multiple model sizes balancing accuracy vs. speed

- Strong performance on domain-specific terminology

Limitations:

- No native streaming support (requires third-party solutions for real-time use)

- Larger models need substantial GPU memory

- Batch processing introduces latency for interactive apps

Best for: Applications prioritizing accuracy over real-time requirements.
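As a rough illustration of batch transcription with timestamps, the sketch below uses the `openai-whisper` Python package (`whisper.load_model` and `model.transcribe`). It assumes the package and ffmpeg are installed; the helper name `format_segments`, the `"base"` model size, and the file name `meeting.wav` are illustrative choices, not recommendations from this comparison.

```python
def format_segments(segments):
    """Render Whisper-style segments as '[start -> end] text' lines."""
    lines = []
    for seg in segments:
        lines.append(f"[{seg['start']:.2f}s -> {seg['end']:.2f}s] {seg['text'].strip()}")
    return "\n".join(lines)


def transcribe_file(path, model_size="base"):
    """Transcribe one audio file and return (full_text, segments)."""
    # Imported lazily so the formatting helper works without the package.
    import whisper  # assumption: installed via `pip install openai-whisper`

    model = whisper.load_model(model_size)
    result = model.transcribe(path)  # processes the whole file, not a stream
    return result["text"], result["segments"]


if __name__ == "__main__":
    text, segments = transcribe_file("meeting.wav")  # hypothetical file
    print(text)
    print(format_segments(segments))
```

Loading the model is the expensive step, so a long-running service would typically load it once and reuse it across requests.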

2. Wav2Vec2

Architecture: Self-supervised transformer learning speech representations

Training approach: Unsupervised pre-training + supervised fine-tuning

Wav2Vec2's self-supervised approach learns from unlabeled audio, making it highly effective with limited labeled training data. This architecture excels at fine-tuning for specific domains or accents.

Strengths:

- Good streaming performance (requires adaptations like wav2vec-S)

- Strong fine-tuning results with custom data

- Multiple pre-trained checkpoints for different use cases

- Efficient inference on modern GPUs

Limitations:

- Requires streaming adaptations for optimal real-time performance

- Setup complexity higher than plug-and-play solutions

- Requires GPU for optimal real-time performance

- Limited built-in language detection capabilities

Best for: Real-time applications requiring customization.

3. Vosk

Architecture: Kaldi-based DNN-HMM hybrid system optimized for efficiency

Focus: Lightweight deployment with reasonable accuracy

Vosk prioritizes practical deployment over cutting-edge accuracy. Its efficient implementation and compact model sizes make it viable for resource-constrained environments while maintaining acceptable transcription quality.

Strengths:

- Compact model sizes (50MB-1.5GB)

- CPU-only operation with good performance

- True offline capability without internet dependency

- Simple integration with multiple programming languages

Limitations:

- WER performance trails transformer-based models on challenging audio

- Limited advanced features like speaker diarization

- Fewer language options than larger frameworks

Best for: Mobile applications, embedded systems, and offline voice interfaces where resource efficiency matters more than perfect accuracy.
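A minimal sketch of offline, CPU-only transcription with the `vosk` Python package is shown below. It feeds a WAV file to `KaldiRecognizer` in small chunks, which is the same pattern a live audio stream would use. It assumes a downloaded model directory and mono 16-bit PCM input; the helper names, the 4000-frame chunk size, and the paths are illustrative.

```python
import json
import wave


def extract_text(result_json: str) -> str:
    """Pull the 'text' field out of a Vosk result JSON string."""
    return json.loads(result_json).get("text", "")


def transcribe_wav(wav_path: str, model_dir: str) -> str:
    """Transcribe a mono 16-bit PCM WAV file chunk by chunk."""
    # Imported lazily so extract_text works without the package.
    from vosk import Model, KaldiRecognizer  # assumption: `pip install vosk`

    pieces = []
    with wave.open(wav_path, "rb") as wf:
        recognizer = KaldiRecognizer(Model(model_dir), wf.getframerate())
        while True:
            data = wf.readframes(4000)
            if not data:
                break
            # AcceptWaveform returns True when an utterance boundary is reached.
            if recognizer.AcceptWaveform(data):
                pieces.append(extract_text(recognizer.Result()))
        pieces.append(extract_text(recognizer.FinalResult()))
    return " ".join(p for p in pieces if p)


if __name__ == "__main__":
    print(transcribe_wav("command.wav", "models/vosk-small"))  # hypothetical paths
```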

4. NVIDIA NeMo ASR

Architecture: Conformer and Transformer models with extensive optimization

Focus: Comprehensive tooling

NeMo offers extensive customization capabilities through complete pipelines from data preparation through model deployment.

Strengths:

- Good WER performance (6-20%) with optimized architectures

- Comprehensive training and deployment infrastructure

- Good streaming performance with batching support

- Good documentation and community support

- Models range from roughly 110M parameters (Parakeet-TDT-110M) to 1.1B+ parameters

Limitations:

- Steep learning curve requiring ML expertise

- GPU infrastructure essential for training and inference

- Complex setup may be overkill for simple applications

Best for: ML experts who want serious customization.

5. SpeechRecognition Library

Architecture: Unified interface to multiple recognition engines

Purpose: Rapid prototyping and educational use

The SpeechRecognition library abstracts different speech recognition services behind a simple API. While not the most accurate option, its simplicity makes it invaluable for quick experiments and learning.

Strengths:

- Extremely simple API requiring minimal code

- Multiple backend options (CMU Sphinx, Google, etc.)

- No GPU requirements or complex dependencies

- Perfect for educational projects and rapid testing

Limitations:

- WER significantly higher than modern deep learning models

- Limited customization and advanced features

- Dependence on external services for best performance

Best for: Learning projects, proof-of-concept development, and situations where perfect accuracy isn't critical.

6. Coqui STT

Architecture: Improved DeepSpeech with community enhancements

Community-driven: Open development with regular updates

Coqui continues Mozilla DeepSpeech's development with active community involvement. It provides updated models, improved tooling, and responsive support for practical deployment challenges.

Strengths:

- Active community development with regular improvements

- Better models and tooling than original DeepSpeech

- Good documentation with practical examples

- Flexible training pipeline for custom models

Limitations:

- Smaller community compared to tech giant projects

- Resource requirements for effective custom training

- WER still lags behind cutting-edge transformer models

Best for: Community-focused projects requiring ongoing support, custom language model development, and applications valuing transparent, community-driven development.

7. Mozilla DeepSpeech

Architecture: RNN-based Deep Speech implementation

Status: Discontinued project

Mozilla formally discontinued DeepSpeech in June 2025, archiving the repository. The project is no longer maintained, though the code remains available for reference and educational purposes.

Historical Strengths:

- Complete local processing ensuring data privacy

- TensorFlow Lite support for mobile deployment

- Clear documentation valuable for learning

- Established training pipeline for custom models

Current Limitations:

- Project officially discontinued and archived

- No ongoing development or security updates

- WER significantly higher (worse accuracy) than modern approaches

- Limited language support and model updates

Best for: Educational projects studying older architectures, historical reference, or scenarios where the existing codebase meets specific legacy requirements (not recommended for new projects).

8. SpeechT5

Architecture: Unified transformer for speech-to-text and text-to-speech

Research focus: Experimental unified speech processing

Microsoft's SpeechT5 represents research into unified speech processing frameworks. While primarily academic, it demonstrates interesting capabilities for applications requiring both transcription and synthesis.

Strengths:

- Unified approach to multiple speech tasks

- Strong research foundation and documentation

- Interesting architectural innovations

- Good performance on clean, controlled audio

Limitations:

- High computational requirements limiting practical deployment

- Limited production tooling and support (primarily academic)

- Requires significant expertise for effective implementation

- Not optimized for real-time applications

Best for: Research applications, experimental development, and scenarios exploring unified speech processing approaches.

Implementation decision framework

Choosing the optimal open source STT solution requires balancing multiple factors against your specific requirements.

- Start with accuracy requirements

- Consider real-time needs carefully

- Evaluate resource constraints early

- Plan for customization needs

- Consider maintenance overhead

Production deployment considerations

Moving from evaluation to production requires attention to operational details that academic comparisons often overlook.

Model serving architecture affects scalability and costs. Some solutions integrate naturally with standard web frameworks, others benefit from specialized inference servers. Consider whether you need request batching, model caching, or load balancing for expected traffic patterns.
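Two of the serving concerns above, model caching and request batching, can be sketched with the standard library alone. In this sketch `load_model` is a hypothetical stand-in for whatever expensive load call your chosen STT framework provides, and the cache size and batch size are arbitrary.

```python
from functools import lru_cache
from typing import Iterator, List, Sequence


def load_model(name: str) -> dict:
    """Hypothetical stand-in for an expensive model load from disk."""
    return {"name": name}


@lru_cache(maxsize=2)
def get_model(name: str) -> dict:
    """Load each model once per process and reuse it across requests."""
    return load_model(name)


def batched(requests: Sequence[bytes], max_batch_size: int) -> Iterator[List[bytes]]:
    """Group pending audio requests into batches of at most max_batch_size."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    for start in range(0, len(requests), max_batch_size):
        yield list(requests[start:start + max_batch_size])


if __name__ == "__main__":
    model = get_model("small")  # loaded on first call, cached afterwards
    for batch in batched([b"a", b"b", b"c"], max_batch_size=2):
        print(model["name"], len(batch))
```

Larger batches usually improve GPU throughput at the cost of per-request latency, so the batch size is worth tuning against your traffic pattern.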

Audio preprocessing often determines real-world performance more than model choice. Proper noise reduction, volume normalization, and silence detection can dramatically improve WER regardless of your selected solution.
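Two of these preprocessing steps, volume normalization and silence detection, can be sketched in plain Python. The example assumes audio already decoded to floats in the range -1.0 to 1.0; the function names, the 160-sample frame size, and the 0.02 energy threshold are illustrative values that would need tuning on real recordings.

```python
import math


def peak_normalize(samples, target_peak=0.9):
    """Scale samples so the loudest one reaches target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # pure silence: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]


def frame_rms(frame):
    """Root-mean-square energy of one frame."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def trim_silence(samples, frame_size=160, threshold=0.02):
    """Drop leading and trailing frames whose RMS energy is below threshold."""
    frames = [samples[i:i + frame_size] for i in range(0, len(samples), frame_size)]
    voiced = [i for i, frame in enumerate(frames) if frame_rms(frame) >= threshold]
    if not voiced:
        return []
    start = voiced[0] * frame_size
    end = (voiced[-1] + 1) * frame_size
    return samples[start:end]


if __name__ == "__main__":
    audio = [0.0] * 320 + [0.25] * 160 + [0.0] * 160
    cleaned = peak_normalize(trim_silence(audio))
    print(len(cleaned), max(cleaned))
```

Silence inside the utterance is kept on purpose, since removing pauses between words can change how a model segments speech.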

Error handling strategies become critical in production environments. Plan for network interruptions, malformed audio input, and edge cases that can break transcription pipelines. Implement graceful degradation rather than hard failures.
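One way to express graceful degradation is a wrapper that tries an ordered list of transcription backends and always returns a result object instead of raising. The sketch below is a generic pattern, not tied to any of the libraries above; `TranscriptionOutcome`, `transcribe_with_fallback`, and the backend callables are hypothetical names.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple


@dataclass
class TranscriptionOutcome:
    text: str
    backend: Optional[str]  # name of the backend that produced the text
    degraded: bool          # True if the primary backend was not used


def transcribe_with_fallback(
    audio: bytes,
    backends: Sequence[Tuple[str, Callable[[bytes], str]]],
    retries: int = 1,
    backoff_s: float = 0.0,
) -> TranscriptionOutcome:
    """Try each (name, fn) backend in order; never raise to the caller."""
    if not audio:
        # Malformed or empty input: return an empty result instead of failing.
        return TranscriptionOutcome("", None, True)
    for index, (name, fn) in enumerate(backends):
        for attempt in range(retries + 1):
            try:
                return TranscriptionOutcome(fn(audio), name, degraded=index > 0)
            except Exception:
                # Broad catch is deliberate in this sketch; log the error in real code.
                time.sleep(backoff_s * (attempt + 1))
    return TranscriptionOutcome("", None, True)
```

The `degraded` flag lets callers decide how to present a lower-quality or missing transcript, for example by asking the user to repeat the request.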

Performance monitoring helps maintain service quality over time. Track WER metrics, processing latency, and resource utilization to identify issues before they affect end users. Consider implementing A/B testing frameworks for model updates.
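Latency tracking can start as a rolling window with percentile queries. The sketch below uses the nearest-rank percentile definition over the most recent measurements; the class name `LatencyMonitor` and the default window of 1000 samples are illustrative.

```python
import math
from collections import deque


class LatencyMonitor:
    """Keep the most recent latency samples and report percentiles."""

    def __init__(self, window: int = 1000):
        self.samples = deque(maxlen=window)  # oldest samples fall off automatically

    def record(self, latency_ms: float) -> None:
        self.samples.append(latency_ms)

    def percentile(self, pct: int) -> float:
        """Nearest-rank percentile (1-100) over the current window."""
        if not self.samples:
            raise ValueError("no samples recorded")
        ordered = sorted(self.samples)
        rank = max(1, math.ceil(pct * len(ordered) / 100))
        return ordered[rank - 1]


if __name__ == "__main__":
    monitor = LatencyMonitor()
    for latency_ms in range(1, 101):
        monitor.record(latency_ms)
    print(monitor.percentile(50), monitor.percentile(95))
```

Tail percentiles such as p95 tend to reveal overloaded workers or oversized audio inputs sooner than the mean does.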

Data pipeline optimization impacts both accuracy and costs. Efficient audio format handling, proper sampling rate management, and smart chunking strategies can reduce processing costs while improving results.
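A simple chunking strategy splits long recordings into fixed-length windows with a small overlap, so that words falling on a boundary appear whole in at least one chunk. The sketch below only computes the sample index ranges; the 16 kHz sample rate, 30-second chunks, and 1-second overlap are illustrative defaults, and merging the overlapping transcripts is left out.

```python
def chunk_spans(total_samples, sample_rate=16000, chunk_s=30.0, overlap_s=1.0):
    """Return (start, end) sample index pairs covering the audio with overlap."""
    if overlap_s >= chunk_s:
        raise ValueError("overlap must be shorter than the chunk length")
    chunk = int(chunk_s * sample_rate)
    step = chunk - int(overlap_s * sample_rate)
    spans = []
    start = 0
    while start < total_samples:
        end = min(start + chunk, total_samples)
        spans.append((start, end))
        if end == total_samples:
            break  # the last chunk reached the end of the audio
        start += step
    return spans


if __name__ == "__main__":
    # A 70-second recording at 16 kHz.
    for start, end in chunk_spans(70 * 16000):
        print(start / 16000, end / 16000)
```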

When open source might not be enough

Open source STT solutions excel in many scenarios, but certain requirements may push you toward commercial alternatives. When accuracy directly impacts business outcomes or user safety, the additional development time and infrastructure costs of self-hosted solutions may not justify the savings.

Commercial services typically provide higher accuracy through access to larger, more diverse training datasets. They also offer managed scaling, professional support, and advanced features like speaker diarization and sentiment analysis without the implementation overhead.

For applications where transcription quality determines user experience—like accessibility services or customer support analytics—commercial speech recognition services often deliver the reliability and accuracy that open source alternatives may struggle to match consistently across diverse real-world conditions.

Final recommendations

Today's open source STT landscape provides genuine alternatives to commercial services for most voice application needs. The key lies in matching solution capabilities to your specific requirements rather than defaulting to the most popular option.

For maximum accuracy: Choose Whisper when transcription quality matters more than real-time performance. Its robustness across languages and audio conditions justifies the batch processing limitation for many use cases.

For real-time applications: Wav2Vec2 (with proper streaming adaptations) offers the best balance of accuracy and streaming performance, especially when fine-tuned for your specific domain. NeMo ASR provides even better accuracy but requires more infrastructure investment.

For resource-efficient deployment: Vosk delivers surprisingly good results for its computational requirements. It's the clear choice for mobile, embedded, or high-volume applications where efficiency trumps perfect accuracy.

For rapid development: SpeechRecognition gets basic functionality working immediately, making it perfect for prototyping and proof-of-concept development.

Speech recognition represents just one component of effective voice applications. Consider your broader architecture, user experience requirements, and team expertise when selecting solutions. The best technical choice means nothing if your team can't implement and maintain it effectively.

The open source STT ecosystem continues to evolve rapidly, with new transformer architectures and efficiency improvements emerging regularly. Choose solutions that align with your team's expertise and infrastructure capabilities.
