Large-scale audio transcription: Handling hours of content efficiently

Large-scale audio transcription converts thousands of audio files into accurate, searchable text quickly. Process hours of content efficiently with batch tools.

Processing thousands of audio files at once requires a fundamentally different approach than transcribing files one at a time. This tutorial shows you how to build an asynchronous batch transcription system that handles large audio libraries, from entire podcast catalogs to years of meeting recordings, in parallel rather than sequentially.

You'll implement this system using Python and the AssemblyAI SDK, covering concurrent job submission, status polling, and multi-format export. Because the jobs run in parallel on the service side, total completion time is driven mainly by your longest individual file (and your account's concurrency limit) rather than by the sum of all file durations. By the end, you'll have a working foundation for turning hours of audio into searchable transcripts, speaker-labeled text, and subtitle files.

What architecture handles hours of audio efficiently

Large-scale audio transcription is processing thousands of audio files at once instead of one at a time. This means you can transcribe entire podcast libraries or years of meeting recordings simultaneously rather than waiting for each file to finish before starting the next.

The key difference is asynchronous processing versus synchronous processing. Synchronous processing is like washing dishes one by one—you finish washing one dish completely before starting the next. Asynchronous processing is like loading a dishwasher—you put all the dishes in at once and they all get cleaned simultaneously.
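To make the dishwasher analogy concrete, here is a small simulation using only the standard library. `fake_transcribe` is a hypothetical stand-in that just sleeps for a fraction of a second per "file"; no real transcription happens. It shows that running jobs one after another costs roughly the sum of their durations, while running them concurrently costs roughly the longest one.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_transcribe(duration_s: float) -> float:
    # Hypothetical stand-in for a transcription job: it only sleeps.
    time.sleep(duration_s)
    return duration_s


def run_sequential(durations):
    # Each job starts only after the previous one has finished.
    start = time.perf_counter()
    for d in durations:
        fake_transcribe(d)
    return time.perf_counter() - start


def run_concurrent(durations):
    # All jobs start at once, so wall time tracks the slowest job.
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=len(durations)) as pool:
        list(pool.map(fake_transcribe, durations))
    return time.perf_counter() - start


durations = [0.1, 0.2, 0.3]
sequential = run_sequential(durations)
concurrent = run_concurrent(durations)
print(f"sequential: {sequential:.2f}s (about the sum, {sum(durations):.1f}s)")
print(f"concurrent: {concurrent:.2f}s (about the max, {max(durations):.1f}s)")
```

A real batch behaves the same way at a larger scale, except that the waiting happens on the transcription service's servers instead of in a local thread.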

Here's what makes async batch transcription work:

- Concurrent job submission: Upload and start processing thousands of files at the same time

- Status monitoring: Check which jobs are done without stopping the ones still running

- Result collection: Gather completed transcripts as they finish, not in any particular order

- Error handling: Retry failed jobs without affecting successful ones

You have two ways to track job progress: polling and webhooks. Polling means you periodically check job status yourself. Webhooks mean the transcription service notifies you when jobs complete.

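For most batch scripts, polling is the simpler choice: you control when to check status, and you don't need to host a publicly reachable endpoint. Webhooks start to pay off when batches are large enough that repeated status checks become wasteful.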
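If you later move to webhooks, the receiving side can be small. The sketch below uses only the standard library and assumes the notification is a JSON body containing `transcript_id` and `status` fields; confirm the exact payload, and how to register your URL when submitting a job, in AssemblyAI's webhook documentation. `parse_webhook`, `finished`, `WebhookHandler`, and `serve` are illustrative names.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Assumption: the notification body looks like
# {"transcript_id": "...", "status": "completed"} or with status "error".
FINISHED_STATUSES = ("completed", "error")

# transcript_id -> final status, filled in as notifications arrive
finished = {}


def parse_webhook(body: bytes):
    """Return (transcript_id, status), or None if the body isn't a
    job-finished notification in the assumed format."""
    try:
        payload = json.loads(body)
    except ValueError:  # covers malformed JSON and undecodable bytes
        return None
    if not isinstance(payload, dict):
        return None
    transcript_id = payload.get("transcript_id")
    status = payload.get("status")
    if not transcript_id or status not in FINISHED_STATUSES:
        return None
    return transcript_id, status


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        parsed = parse_webhook(self.rfile.read(length))
        if parsed:
            transcript_id, status = parsed
            # Record the result; fetch the full transcript later (or enqueue it).
            finished[transcript_id] = status
        self.send_response(200 if parsed else 400)
        self.end_headers()


def serve(port=8000):
    # The endpoint must be reachable from the internet for the service to call it.
    HTTPServer(("0.0.0.0", port), WebhookHandler).serve_forever()
```

Keeping the parsing in a plain function makes it testable without running a server; the handler only records which jobs finished, and your batch code can fetch those transcripts afterward.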

How to implement async batch transcription in Python

You'll build this batch transcription system in five steps. Each step handles a specific part of the process, from setup to final export.

Set up the AssemblyAI Python SDK and authenticate

Install the AssemblyAI SDK using pip:

```bash
pip install assemblyai
```

Set your API key as an environment variable for security:

```bash
export ASSEMBLYAI_API_KEY="your-api-key-here"
```

Create your Python script and set up the client:

```python
import assemblyai as aai
import csv
import json
import os
import time
from concurrent.futures import ThreadPoolExecutor, as_completed

# Set up authentication
aai.settings.api_key = os.environ.get("ASSEMBLYAI_API_KEY")

transcriber = aai.Transcriber()
```

Never put your API key directly in your code. Environment variables keep your credentials secure even if someone sees your source code.
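One gap in the setup code: `os.environ.get` returns `None` when the variable is missing, so the mistake only surfaces later as a less obvious authentication error. A tiny helper (the name `require_env` is hypothetical) fails fast with a clear message:

```python
import os


def require_env(name: str) -> str:
    # Raise immediately instead of passing None to the SDK.
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set")
    return value
```

You would then write `aai.settings.api_key = require_env("ASSEMBLYAI_API_KEY")` in place of the `os.environ.get` call.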

Prepare a manifest of S3/GCS URLs for batch jobs

A manifest is a list of all the audio files you want to transcribe. Think of it like a grocery list—it tells the system exactly what to process.

Your audio files need to be reachable over HTTP(S) so the transcription service can fetch them, for example public URLs or presigned/signed URLs for objects in Amazon S3 or Google Cloud Storage. Create a CSV file listing your files:

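```python
def create_manifest_from_csv(csv_file):
    manifest = []
    # newline='' is how the csv module recommends opening CSV files
    with open(csv_file, 'r', newline='', encoding='utf-8') as file:
        reader = csv.DictReader(file)
        for row in reader:
            manifest.append({
                'audio_url': row['audio_url'],
                'file_id': row['file_id'],
                # Treat a missing or empty duration_estimate as 0 seconds
                'duration': float(row.get('duration_estimate') or 0)
            })
    return manifest
```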

Your CSV should look like this (duration_estimate is in seconds):

```csv
audio_url,file_id,duration_estimate
https://storage.example.com/meeting1.mp3,meeting_001,3600
https://storage.example.com/meeting2.mp3,meeting_002,2400
```

For more complex setups, use JSON instead:

```python
def create_manifest_from_json(json_file):
    with open(json_file, 'r', encoding='utf-8') as file:
        return json.load(file)
```

Example JSON structure:

```json
[
  {
    "audio_url": "https://storage.example.com/podcast1.mp3",
    "file_id": "episode_001",
    "duration": 3600
  }
]
```
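Before submitting thousands of jobs, it's cheaper to catch bad manifest rows locally than to discover them as failed jobs. The sketch below (a hypothetical `validate_manifest`, standard library only) works on the list of dicts returned by either loader. It rejects `s3://` or `gs://` URIs because the service has to fetch each file over HTTP(S); private bucket objects would typically need a presigned or signed HTTPS URL instead.

```python
from urllib.parse import urlparse


def validate_manifest(manifest):
    """Split manifest entries into (valid, problems) before submitting jobs.

    Checks that each entry has an http(s) audio_url, a file_id that is
    unique within the manifest, and a non-negative numeric duration.
    problems is a list of (index, message) tuples.
    """
    valid, problems = [], []
    seen_ids = set()
    for index, item in enumerate(manifest):
        url = str(item.get("audio_url", ""))
        file_id = str(item.get("file_id", "")).strip()
        parsed = urlparse(url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append((index, f"bad audio_url: {url!r}"))
            continue
        if not file_id:
            problems.append((index, "missing file_id"))
            continue
        if file_id in seen_ids:
            problems.append((index, f"duplicate file_id: {file_id}"))
            continue
        try:
            duration = float(item.get("duration") or 0)
        except (TypeError, ValueError):
            problems.append((index, f"bad duration for {file_id}"))
            continue
        if duration < 0:
            problems.append((index, f"negative duration for {file_id}"))
            continue
        seen_ids.add(file_id)
        valid.append({**item, "file_id": file_id, "duration": duration})
    return valid, problems
```

Printing `problems` and submitting only `valid` keeps one malformed row from turning into a confusing failed job halfway through a large batch.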

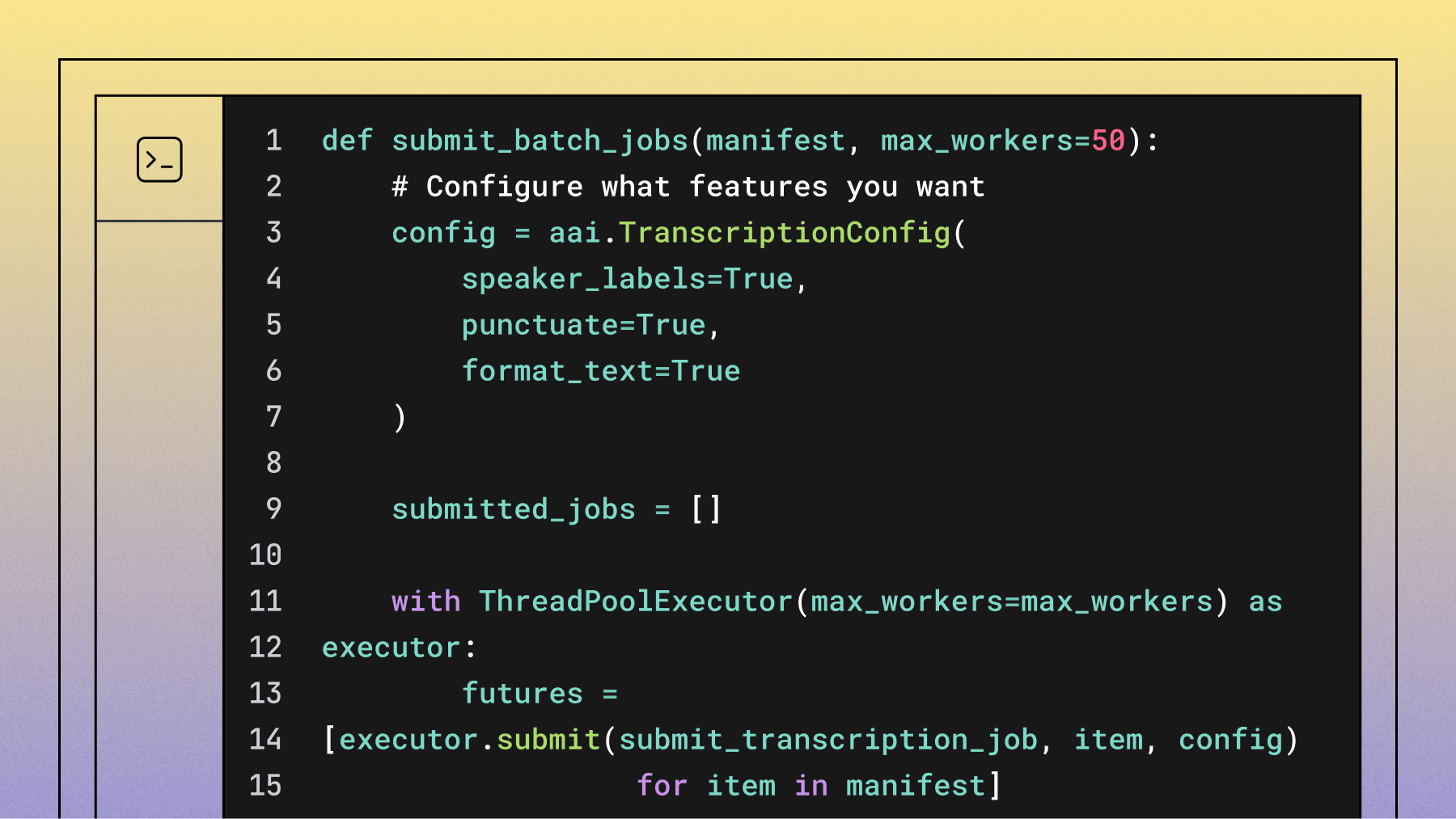

Submit async transcription jobs concurrently

Now you'll submit all your transcription jobs at once. This uses Python's ThreadPoolExecutor to handle multiple submissions simultaneously:

```python
def submit_transcription_job(audio_item, config):
    try:
        # submit() queues the job and returns without waiting for completion
        transcript = transcriber.submit(
            audio_item['audio_url'],
            config=config
        )
        return {
            'file_id': audio_item['file_id'],
            'job_id': transcript.id,
            'status': transcript.status,
            'audio_url': audio_item['audio_url']
        }
    except Exception as e:
        # Record the failure instead of raising, so one bad file doesn't stop the batch
        return {
            'file_id': audio_item['file_id'],
            'error': str(e),
            'audio_url': audio_item['audio_url']
        }


def submit_batch_jobs(manifest, max_workers=50):
    # Configure what features you want
    config = aai.TranscriptionConfig(
        speaker_labels=True,  # Identify different speakers
        punctuate=True,
        format_text=True
    )

    submitted_jobs = []
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        futures = [executor.submit(submit_transcription_job, item, config)
                   for item in manifest]

        # Handle results in whatever order the submissions finish
        for future in as_completed(futures):
            result = future.result()
            submitted_jobs.append(result)
            if 'error' in result:
                print(f"Failed: {result['file_id']}")
            else:
                print(f"Submitted: {result['file_id']}")

    return submitted_jobs
```

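The max_workers=50 setting limits how many submission requests are in flight at once. It doesn't limit how many jobs run on the service, because each submission returns as soon as the job is queued. Start with 50 and adjust based on your network connection and any API rate limits you run into.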

Key configuration options:

- speaker_labels=True: Identifies who's speaking when

- punctuate=True: Adds proper punctuation

- format_text=True: Applies casing and text formatting, such as writing spoken numbers as digits
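Because `submit_transcription_job` records failures as entries with an `error` key instead of raising, they stay in the list that `submit_batch_jobs` returns. A small hypothetical helper can separate them and rebuild a manifest for a second pass:

```python
def split_submissions(submitted_jobs):
    """Separate successful submissions from failed ones.

    Returns (accepted, retry_manifest). retry_manifest uses the same
    audio_url/file_id keys as the original manifest, so it can be passed
    back to submit_batch_jobs for another attempt.
    """
    accepted = [job for job in submitted_jobs if "error" not in job]
    retry_manifest = [
        {"audio_url": job["audio_url"], "file_id": job["file_id"]}
        for job in submitted_jobs
        if "error" in job
    ]
    return accepted, retry_manifest
```

For example, `accepted, retry_manifest = split_submissions(submit_batch_jobs(manifest))`, then pass `retry_manifest` to `submit_batch_jobs` again, perhaps with a smaller `max_workers`.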

Poll job statuses and collect transcripts

After submitting jobs, you need to check when they're done and collect the results. This polling function checks all your jobs periodically:

```python
def poll_and_collect_results(submitted_jobs, poll_interval=30):
    completed_transcripts = []
    # Skip jobs that already failed at submission time
    pending_jobs = [job for job in submitted_jobs if 'error' not in job]

    while pending_jobs:
        print(f"Checking {len(pending_jobs)} jobs...")
        still_pending = []

        for job in pending_jobs:
            try:
                transcript = transcriber.get_transcript(job['job_id'])

                if transcript.status == aai.TranscriptStatus.completed:
                    result = {
                        'file_id': job['file_id'],
                        'job_id': job['job_id'],
                        'text': transcript.text,
                        'speakers': transcript.utterances,
                        'words': transcript.words,
                        'confidence': transcript.confidence
                    }
                    completed_transcripts.append(result)
                    print(f"Completed: {job['file_id']}")

                elif transcript.status == aai.TranscriptStatus.error:
                    print(f"Failed: {job['file_id']} - {transcript.error}")

                else:
                    # Still queued or processing; check again next round
                    still_pending.append(job)

            except Exception as e:
                print(f"Error checking {job['file_id']}: {e}")
                still_pending.append(job)

        pending_jobs = still_pending

        if pending_jobs:
            time.sleep(poll_interval)

    return completed_transcripts
```

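This function keeps checking until every job has either completed or failed. It waits 30 seconds between rounds by default; shorten the interval for faster updates or lengthen it to reduce API calls. Note that a job whose status check keeps raising an exception stays in the pending list, so as written the loop has no upper bound on how long it runs.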
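One way to bound the loop is to give it a deadline and let the interval grow as the batch runs. The sketch below is hypothetical and independent of the SDK: it takes a caller-supplied `get_status(job_id)` that returns `"completed"`, `"error"`, or anything else for "still running" (in practice, a thin wrapper around the status lookup in `poll_and_collect_results`), plus injectable `sleep` and `clock` functions so it can be tested without waiting. The 6-hour default timeout and 5-minute interval cap are arbitrary choices.

```python
import time


def poll_with_deadline(job_ids, get_status, interval=30.0, max_interval=300.0,
                       timeout=6 * 3600, sleep=time.sleep, clock=time.monotonic):
    """Poll job statuses until each job finishes or the deadline passes.

    The wait between rounds doubles after each round, capped at
    max_interval, and never sleeps past the deadline.
    Returns (completed, failed, timed_out) lists of job IDs.
    """
    pending = list(job_ids)
    completed, failed = [], []
    deadline = clock() + timeout
    wait = interval
    while pending:
        still_pending = []
        for job_id in pending:
            try:
                status = get_status(job_id)
            except Exception:
                status = None  # treat lookup errors as "try again next round"
            if status == "completed":
                completed.append(job_id)
            elif status == "error":
                failed.append(job_id)
            else:
                still_pending.append(job_id)
        pending = still_pending
        if not pending or clock() >= deadline:
            break
        sleep(min(wait, max(0.0, deadline - clock())))
        wait = min(wait * 2, max_interval)
    return completed, failed, pending
```

Jobs in the third list can be logged, re-polled later, or resubmitted. Doubling the interval trades a little latency near the end of a batch for far fewer status requests overall.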

Export JSON, JSONL, SRT, VTT with speaker diarization and timestamps

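Once transcription finishes, export your results in the formats you need. JSON keeps the full structured result, plain text suits reading and search indexing, speaker-labeled text suits meetings and interviews, and SRT or VTT subtitle files pair the text with playback timestamps.

Here's how to export JSON, plain text, and speaker-labeled text for each transcript: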

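```python
from enum import Enum


def _to_jsonable(obj):
    # SDK objects such as utterances and words aren't JSON-serializable;
    # json.dump calls this for each one (recursively for nested objects)
    if isinstance(obj, Enum):
        return obj.value
    if hasattr(obj, '__dict__'):
        return vars(obj)
    return str(obj)


def export_transcripts(completed_transcripts, output_dir):
    os.makedirs(output_dir, exist_ok=True)

    for transcript in completed_transcripts:
        file_id = transcript['file_id']

        # Export as JSON (includes everything)
        json_path = os.path.join(output_dir, f"{file_id}.json")
        with open(json_path, 'w', encoding='utf-8') as f:
            json.dump(transcript, f, indent=2, default=_to_jsonable)

        # Export as plain text
        txt_path = os.path.join(output_dir, f"{file_id}.txt")
        with open(txt_path, 'w', encoding='utf-8') as f:
            f.write(transcript['text'] or '')

        # Export with speaker labels, one utterance per line
        if transcript.get('speakers'):
            speakers_path = os.path.join(output_dir, f"{file_id}_speakers.txt")
            with open(speakers_path, 'w', encoding='utf-8') as f:
                for utterance in transcript['speakers']:
                    f.write(f"Speaker {utterance.speaker}: {utterance.text}\n")
```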
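JSONL (one JSON object per line) is convenient when you want a whole batch in a single file that downstream tools can stream line by line. The sketch below is a hypothetical `export_jsonl` helper, not an SDK feature. It keeps each record lean (IDs, text, confidence, and simplified utterances) and assumes each transcript dict has the keys produced by `poll_and_collect_results`, with utterance objects exposing `speaker`, `start`, `end`, and `text`.

```python
import json


def export_jsonl(completed_transcripts, output_path):
    """Write one JSON object per line, one line per transcript.

    Returns the number of records written.
    """
    with open(output_path, "w", encoding="utf-8") as f:
        for transcript in completed_transcripts:
            record = {
                "file_id": transcript["file_id"],
                "job_id": transcript.get("job_id"),
                "text": transcript.get("text"),
                "confidence": transcript.get("confidence"),
                # Flatten utterance objects into plain dicts (times in ms)
                "utterances": [
                    {"speaker": u.speaker, "start": u.start,
                     "end": u.end, "text": u.text}
                    for u in (transcript.get("speakers") or [])
                ],
            }
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return len(completed_transcripts)
```

Because every line is an independent JSON document, a consumer can read the file incrementally instead of loading the entire batch into memory.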

For subtitle files (SRT format), you need to convert word-level timestamps into subtitle segments:

```python
def export_as_srt(words, output_path, max_segment_ms=3000):
    # Group word-level timestamps (in milliseconds) into ~3-second segments
    segments = []
    current_words = []
    segment_start = None

    for word in words:
        if segment_start is None:
            segment_start = word.start
        current_words.append(word.text)

        if word.end - segment_start >= max_segment_ms:
            segments.append({
                'start': segment_start,
                'end': word.end,
                'text': ' '.join(current_words)
            })
            current_words = []
            segment_start = None

    # Keep the final, shorter segment instead of dropping trailing words
    if current_words:
        segments.append({
            'start': segment_start,
            'end': words[-1].end,
            'text': ' '.join(current_words)
        })

    # Write SRT file: index, time range, text, blank line
    with open(output_path, 'w', encoding='utf-8') as f:
        for i, segment in enumerate(segments, 1):
            start = format_timestamp(segment['start'])
            end = format_timestamp(segment['end'])
            f.write(f"{i}\n{start} --> {end}\n{segment['text']}\n\n")


def format_timestamp(ms):
    # SRT timestamps are HH:MM:SS,mmm; integer math avoids float rounding errors
    hours, rem = divmod(int(ms), 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"
```
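VTT output follows the same idea as SRT with a few format differences: a `WEBVTT` header, a dot instead of a comma before milliseconds, and optional voice tags that can carry speaker labels. The helpers below use hypothetical names and assume segments are dicts with `start`/`end` in milliseconds (the unit of AssemblyAI's word and utterance timestamps) plus `text` and an optional `speaker`. Recent versions of the SDK also expose subtitle export on transcript objects (`export_subtitles_srt()` / `export_subtitles_vtt()`); check the SDK reference before choosing between the two approaches.

```python
def format_vtt_timestamp(ms: int) -> str:
    # WebVTT uses a dot before milliseconds (SRT uses a comma).
    hours, rem = divmod(int(ms), 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    seconds, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}.{millis:03d}"


def segments_to_vtt(segments):
    """Render segments as WebVTT text.

    Each segment is a dict with 'start' and 'end' in milliseconds and
    'text'; an optional 'speaker' becomes a WebVTT voice tag.
    """
    lines = ["WEBVTT", ""]
    for seg in segments:
        start = format_vtt_timestamp(seg["start"])
        end = format_vtt_timestamp(seg["end"])
        text = seg["text"]
        if seg.get("speaker"):
            text = f"<v Speaker {seg['speaker']}>{text}"
        lines += [f"{start} --> {end}", text, ""]
    return "\n".join(lines)
```

For speaker-labeled cues you could map utterances directly, for example `[{"start": u.start, "end": u.end, "text": u.text, "speaker": u.speaker} for u in transcript["speakers"]]`. Long utterances produce long cues, so splitting them by word timing, as the SRT function does, usually reads better on screen.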

How to plan throughput and cost for large batches

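Planning your batch processing means understanding how long it takes and how much it costs. The good news: total processing time doesn't grow in proportion to the number of files, as long as the batch fits within your concurrency limit.

When every file runs concurrently, your total time is roughly that of the longest individual file. In that ideal case, a thousand 1-hour files finish in about the same time as a single 1-hour file, around 15-20 minutes. Jobs beyond your account's concurrency limit wait in a queue, so a batch larger than the limit completes in several waves.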
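When a batch exceeds the number of jobs your account can run at once, the extra jobs wait and the batch completes in waves. That gives a rough planning formula: with N files, a concurrency limit of L, and a per-file turnaround of T minutes, expect about ceil(N / L) waves of T minutes each. This simplification assumes files of similar length, and the limit of 200 below is a hypothetical value rather than a documented one, so substitute your plan's actual limit.

```python
import math


def estimate_wall_clock_minutes(num_files, per_file_minutes, concurrency_limit):
    """Rough batch completion time when at most `concurrency_limit` jobs
    run at once and the rest are queued.

    Assumes every file takes about per_file_minutes of processing, so the
    batch finishes in ceil(num_files / concurrency_limit) back-to-back waves.
    """
    if num_files <= 0:
        return 0
    waves = math.ceil(num_files / concurrency_limit)
    return waves * per_file_minutes


# 1,000 one-hour files, ~20 minutes each, with a hypothetical limit of 200 jobs
print(estimate_wall_clock_minutes(1000, 20, 200))  # prints 100 (5 waves)
```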

Here's how to estimate costs and timing:

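```python
def estimate_batch_cost(manifest, price_per_minute=0.01):
    # price_per_minute is a placeholder: check current pricing for your plan,
    # including any per-feature add-ons, before relying on this estimate.
    # Manifest durations are in seconds.
    total_minutes = sum(item.get('duration', 0) for item in manifest) / 60
    estimated_cost = total_minutes * price_per_minute

    return {
        'total_files': len(manifest),
        'total_minutes': round(total_minutes),
        'estimated_cost': round(estimated_cost, 2)
    }
```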

Cost optimization tips:

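- Only enable features you need: Optional features such as speaker diarization can add processing time and, depending on your plan, cost

- Group similar content: Batch files that share a language and feature configuration so one TranscriptionConfig fits each batch and no files pay for features they don't need

- Retry failed jobs: Build in automatic retry logic so transient network errors don't force a full rerun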
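A generic retry wrapper with exponential backoff covers the transient-failure case. This is a standard-library sketch with hypothetical names, not an SDK feature. Narrow `retry_on` to the errors you actually see as transient, because retrying on every exception also retries permanent problems like a malformed URL.

```python
import random
import time


def with_retries(func, *args, attempts=4, base_delay=1.0, max_delay=30.0,
                 retry_on=(Exception,), sleep=time.sleep, **kwargs):
    """Call func(*args, **kwargs), retrying on the given exception types.

    Between attempts it waits base_delay * 2**n seconds, capped at
    max_delay, plus up to 10% random jitter so many workers don't retry in
    lockstep. The last error is re-raised if every attempt fails.
    """
    for attempt in range(attempts):
        try:
            return func(*args, **kwargs)
        except retry_on:
            if attempt == attempts - 1:
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))
```

For example, you could wrap the submission call itself: `with_retries(transcriber.submit, item['audio_url'], config=config, retry_on=(ConnectionError, TimeoutError))`, where the exception types are placeholders since the SDK may raise its own error classes. Wrapping `submit_transcription_job` would not retry anything, because that function already catches exceptions and returns an error entry.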

Final Words

This async batch transcription approach transforms massive audio libraries into searchable text in hours instead of weeks. You submit thousands of jobs simultaneously, poll for completion, and export results in whatever format your project needs.

AssemblyAI runs many jobs in parallel per account and queues jobs beyond your plan's concurrency limit, which removes the one-file-at-a-time bottleneck, while its accuracy holds up on challenging audio conditions like background noise and diverse accents. Per-minute pricing makes large-scale projects predictable: you pay for the minutes of audio you process, with no separate charge for running jobs in parallel.

FAQ

Can I process more than 10,000 audio files at once with this approach?

Yes. Nothing in this code caps the batch size. Jobs beyond your account's concurrency limit are queued by the service and start as earlier jobs finish, so very large batches complete in waves rather than all at once.

What happens if some audio files fail to transcribe during batch processing?

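Submission failures come back as entries with an `error` key, and jobs that fail during processing are reported by the polling loop with the service's error message. You can collect those file IDs and retry them or process them separately without affecting successful transcriptions.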

Which audio file formats work best for large-scale batch transcription?

MP3, WAV, and M4A are all widely supported. For large batches, compressed formats like MP3 keep file sizes, and therefore transfer times, down, while WAV preserves the most audio detail if storage isn't a concern.

How accurate is speaker identification when processing thousands of files with different audio quality?

Speaker diarization accuracy depends on audio quality and number of speakers, typically working well for 2-6 speakers in clear audio but may struggle with overlapping speech or poor quality recordings.

Does enabling word-level timestamps significantly slow down large batch processing?

Word-level timestamps are included in every completed transcript by default, so there's no separate option to enable and no extra processing step to plan for.
