The complete guide to speaker diarization APIs and tools

This guide covers speaker diarization fundamentals, quality evaluation metrics, solution comparisons, and selection criteria for production applications.

In today's audio-driven world, knowing who's speaking isn't just helpful; it's essential for making sense of conversations at scale.

Speaker diarization solves this problem. It's the AI process that takes a continuous audio stream and segments it by speaker, answering the fundamental question: "who spoke when?" Whether you're analyzing customer calls, generating meeting notes, or creating searchable podcast archives, diarization transforms raw audio into structured, actionable insights.

What is speaker diarization?

Speaker diarization identifies who's speaking when in audio recordings, automatically separating conversations by individual voices. Think of it as audio segmentation that creates a map of who's talking throughout a conversation.

Here's how it works in practice:

Without diarization:

"Hey Sarah, how's the project going? It's going well, we should have the prototype ready by Friday. That's great news, can you walk me through the timeline?"

With diarization:

Speaker A: "Hey Sarah, how's the project going?"

Speaker B: "It's going well, we should have the prototype ready by Friday."

Speaker A: "That's great news, can you walk me through the timeline?"

The system doesn't necessarily know that "Speaker A" is John and "Speaker B" is Sarah—it just knows there are distinct voices and which parts belong to each speaker.
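To make the "with diarization" view concrete, here is a minimal sketch of how a speaker-labeled transcript can be assembled. It assumes, hypothetically, that the diarization step has already attached an anonymous speaker label to every transcribed word; consecutive words with the same label are then merged into one turn. The `words_to_turns` name and the `(speaker, word)` input format are illustrative, not any particular API's output.

```python
def words_to_turns(words):
    """Group time-ordered (speaker_label, word) pairs into speaker turns.

    The input format is a hypothetical example, not a specific API's schema.
    """
    turns = []  # list of (speaker_label, [words...])
    for speaker, word in words:
        if turns and turns[-1][0] == speaker:
            # Same speaker as the previous word: extend the current turn
            turns[-1][1].append(word)
        else:
            # Speaker changed: start a new turn
            turns.append((speaker, [word]))
    return [f'Speaker {spk}: "{" ".join(ws)}"' for spk, ws in turns]


words = [("A", "Hey"), ("A", "Sarah,"), ("B", "Going"), ("B", "well."), ("A", "Great.")]
for line in words_to_turns(words):
    print(line)
```

Note that the labels stay anonymous ("A", "B"); mapping them to real names such as Sarah is a separate step that diarization alone does not perform.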

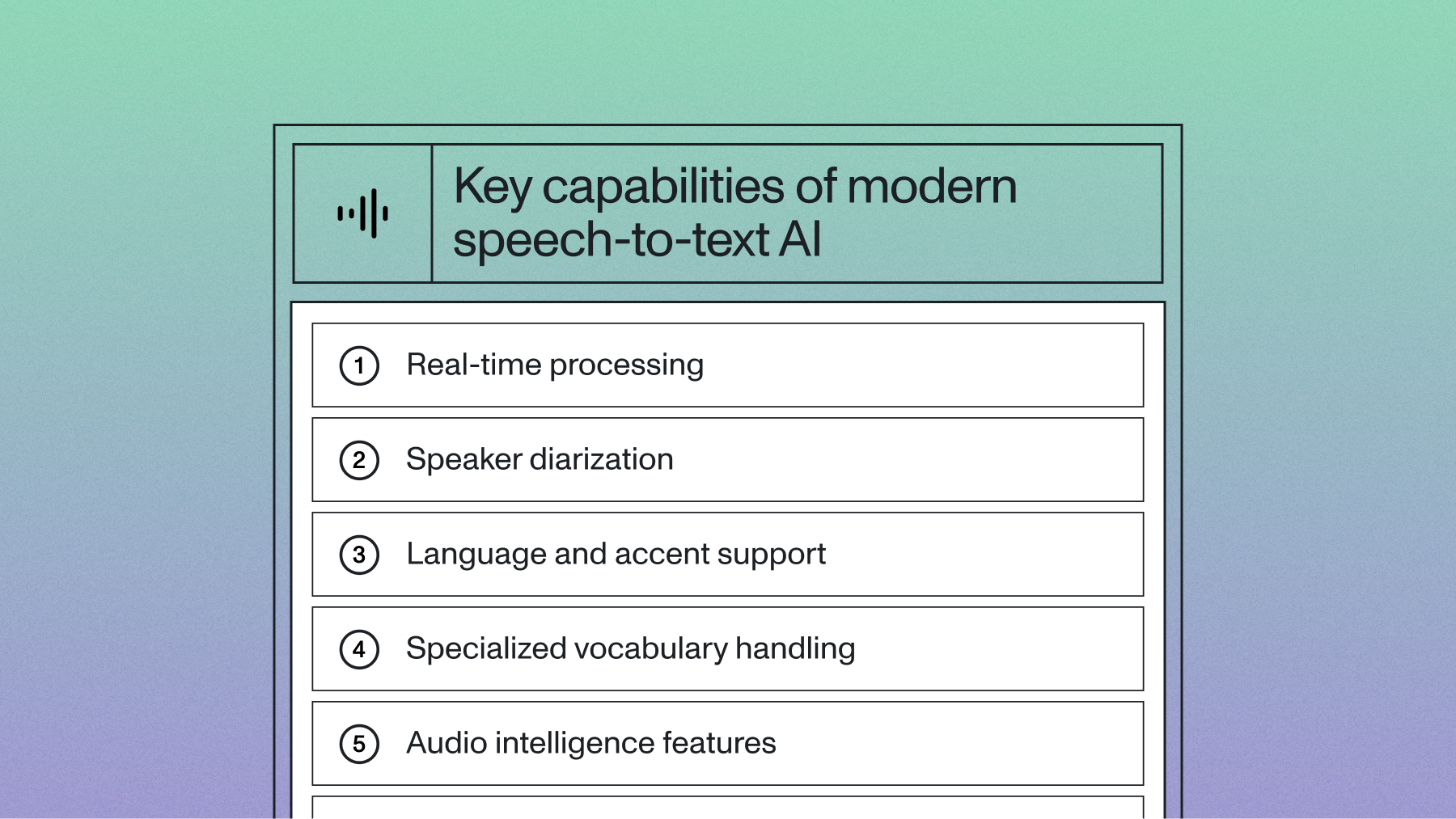

Modern diarization systems combine multiple AI techniques:

- Voice activity detection identifies when speech is happening versus silence

- Speaker segmentation finds boundaries where one speaker stops and another begins

- Speaker clustering groups segments from the same speaker together

- Overlapping speech detection handles moments when multiple people talk simultaneously
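The first three stages can be illustrated with a deliberately tiny toy pipeline. This is a sketch under heavy assumptions: each fixed-length frame is given as a hypothetical `(energy, embedding)` pair (real systems compute embeddings with a neural speaker encoder), the thresholds are arbitrary, and a simple greedy "leader" clustering stands in for the agglomerative or spectral clustering production systems typically use. Overlapping speech detection is omitted because it usually needs a dedicated model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def diarize(frames, energy_threshold=0.1, min_similarity=0.8):
    """Toy diarization over hypothetical (energy, embedding) frames.

    Returns (start_frame, end_frame_exclusive, speaker_label) tuples.
    """
    # 1. Voice activity detection: keep frames whose energy exceeds a threshold
    speech = [(i, emb) for i, (energy, emb) in enumerate(frames) if energy > energy_threshold]

    # 2. Segmentation: start a new segment after a silence gap or when
    #    consecutive embeddings are no longer similar (a likely speaker change)
    segments = []
    for i, emb in speech:
        last = segments[-1] if segments else None
        if last and last["end"] == i and cosine(last["embs"][-1], emb) >= min_similarity:
            last["end"] = i + 1
            last["embs"].append(emb)
        else:
            segments.append({"start": i, "end": i + 1, "embs": [emb]})

    # 3. Clustering: each cluster keeps the mean embedding of its first segment;
    #    a segment joins the most similar cluster or founds a new one
    centroids, labels = [], []
    for seg in segments:
        mean = [sum(col) / len(seg["embs"]) for col in zip(*seg["embs"])]
        sims = [cosine(mean, c) for c in centroids]
        if sims and max(sims) >= min_similarity:
            labels.append(sims.index(max(sims)))
        else:
            centroids.append(mean)
            labels.append(len(centroids) - 1)

    return [(seg["start"], seg["end"], f"Speaker {chr(65 + k)}") for seg, k in zip(segments, labels)]


A, B = (1.0, 0.0), (0.0, 1.0)  # hypothetical embeddings for two voices
frames = [(0.9, A)] * 3 + [(0.0, (0.0, 0.0))] * 2 + [(0.8, B)] * 3 + [(0.7, A)] * 2
print(diarize(frames))
```

Here the silent frames are dropped by the VAD step, the B-to-A switch with no pause is caught by the embedding-change check, and clustering recognizes that the last segment belongs to the first speaker again.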

Why speaker diarization matters

The applications for accurate speaker diarization extend across industries where conversation analysis drives business value.

Call centers use diarization for quality assurance and coaching. Supervisors can instantly jump to moments when specific representatives were speaking, analyzing their performance without listening to entire calls. Companies like CallMiner and Gong have built entire conversation intelligence platforms around this capability.

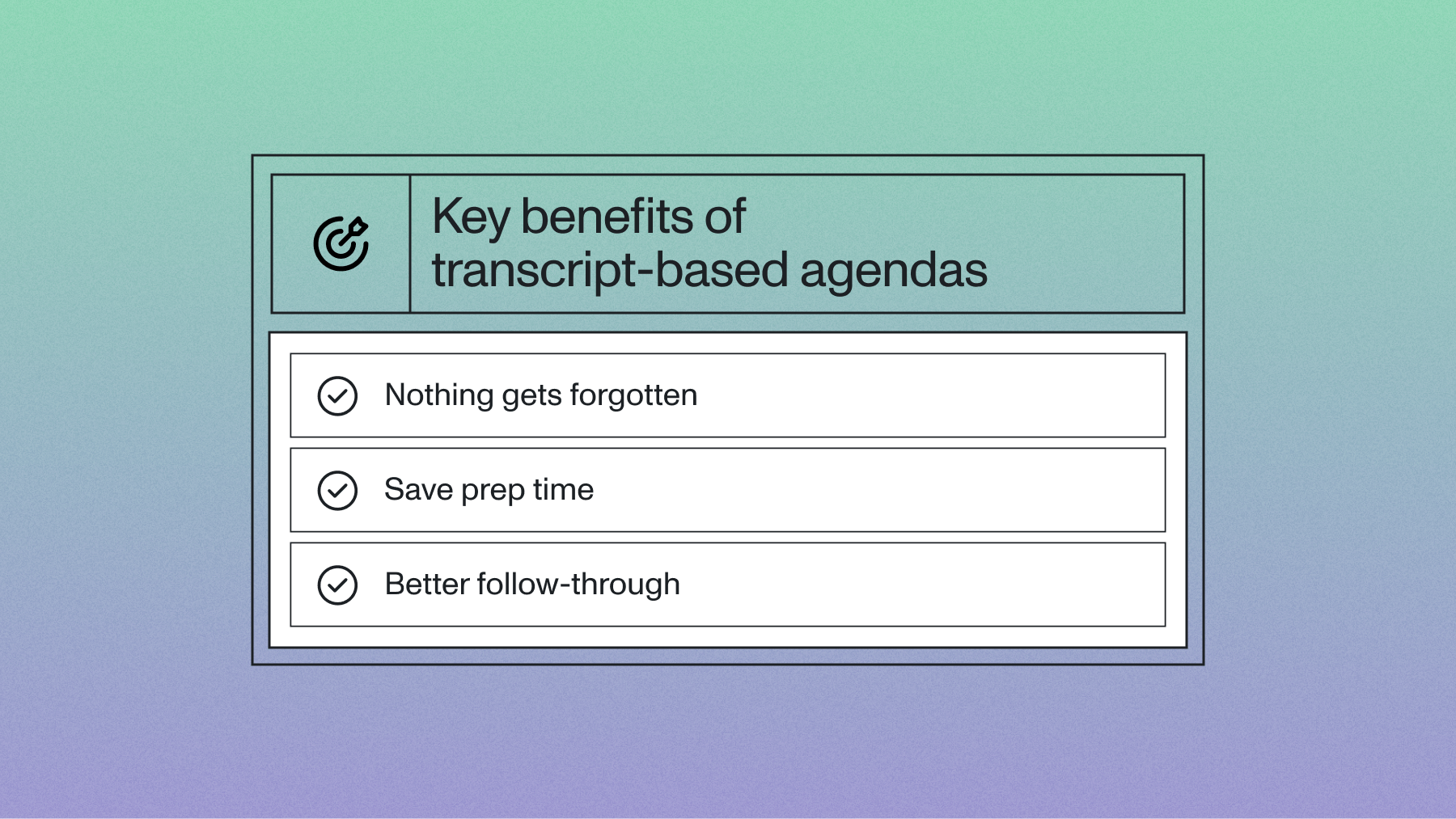

Meeting platforms like Zoom and Google Meet rely on diarization to generate accurate meeting notes. When participants can see who said what, meeting summaries become actionable documents rather than confusing streams of unattributed text.

Media and podcasting companies use diarization for accessibility and engagement. Accurate speaker labels improve captioning for hearing-impaired viewers and enable features like speaker-specific searches—imagine finding every time a particular guest mentioned "artificial intelligence" across dozens of podcast episodes.

Legal and compliance teams need diarization for depositions, hearings, and regulatory documentation where speaker attribution carries legal weight. Misattributing a statement in legal proceedings can have serious consequences.

But here's the challenge: poor diarization creates more problems than it solves. Misattributed quotes lead to confused analytics. Mixed-up speakers generate unreliable insights. When your system can't distinguish between your CEO and an intern, every downstream application suffers.

How to evaluate speaker diarization quality

Not all diarization systems perform equally. Understanding the key metrics helps you choose the right solution for your needs.

Diarization Error Rate (DER)

DER is the standard metric for evaluating speaker diarization performance. It measures the fraction of reference speech time that the system gets wrong, summing three types of errors:

- False alarm speech: System detects speech where there is none

- Missed speech: System fails to detect actual speech

- Speaker error: System assigns speech to the wrong speaker

Lower DER means better performance. A DER of 5% means that 5% of the reference speech time is missed, falsely detected, or attributed to the wrong speaker, while a DER of 15% indicates significant accuracy issues.
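Put as a formula, DER = (false alarm + missed speech + speaker error) / total reference speech time. The sketch below computes it on 10 ms frames. It is a simplified illustration, not a reference scorer: it applies no forgiveness collar around boundaries, and it finds the best one-to-one mapping between system and reference labels by brute force, which only scales to a handful of speakers. Dedicated scoring tools handle these details more carefully. Segments are assumed to be `(start_sec, end_sec, speaker_label)` tuples.

```python
from itertools import permutations


def to_frames(segments, step):
    """Map each frame index to the set of speaker labels active in that frame."""
    frames = {}
    for start, end, speaker in segments:
        for i in range(round(start / step), round(end / step)):
            frames.setdefault(i, set()).add(speaker)
    return frames


def best_mapping(ref, hyp):
    """Brute-force the one-to-one hypothesis->reference label mapping that
    maximizes shared frames. Fine for a handful of speakers only."""
    ref_labels = sorted({s for spk in ref.values() for s in spk})
    hyp_labels = sorted({s for spk in hyp.values() for s in spk})
    shared = {}
    for i, hyp_spk in hyp.items():
        for h in hyp_spk:
            for r in ref.get(i, ()):
                shared[(h, r)] = shared.get((h, r), 0) + 1
    # None padding lets surplus hypothesis speakers stay unmapped
    candidates = ref_labels + [None] * len(hyp_labels)
    best, best_score = {}, -1
    for perm in permutations(candidates, len(hyp_labels)):
        score = sum(shared.get(pair, 0) for pair in zip(hyp_labels, perm))
        if score > best_score:
            best, best_score = dict(zip(hyp_labels, perm)), score
    return best


def diarization_error_rate(reference, hypothesis, step=0.01):
    """Frame-based DER plus its three components, as fractions of reference speech."""
    ref = to_frames(reference, step)
    hyp = to_frames(hypothesis, step)
    mapping = best_mapping(ref, hyp)
    miss = false_alarm = confusion = total = 0
    for i in set(ref) | set(hyp):
        r = ref.get(i, set())
        h = hyp.get(i, set())
        correct = len(r & ({mapping[s] for s in h} - {None}))
        total += len(r)
        miss += max(0, len(r) - len(h))             # reference speakers not covered
        false_alarm += max(0, len(h) - len(r))      # extra hypothesized speakers
        confusion += min(len(r), len(h)) - correct  # covered, but wrong speaker
    if total == 0:
        raise ValueError("reference contains no speech")
    return {
        "missed": miss / total,
        "false_alarm": false_alarm / total,
        "speaker_error": confusion / total,
        "der": (miss + false_alarm + confusion) / total,
    }


reference = [(0.0, 5.0, "A"), (5.0, 10.0, "B")]
hypothesis = [(0.0, 6.0, "spk0"), (6.0, 10.0, "spk1")]  # speaker change detected 1 s late
print(diarization_error_rate(reference, hypothesis))
```

In this example the system's labels map to A and B, and the only error is the one second (out of ten) that is attributed to the wrong speaker, so the DER is about 10%, all of it speaker error.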

Recent advances have pushed leading systems to new accuracy levels: modern speech AI models now achieve DERs below 5% in optimal conditions.

Speaker Count Accuracy

Even systems with low DER can struggle with speaker count—incorrectly estimating how many people are in a conversation. This metric measures how often the system gets the total speaker count right.

A 2.9% speaker count error rate means the system correctly identifies the number of speakers in 97.1% of audio files. That rate looks small, but it adds up at scale: if you're processing thousands of calls daily, it means roughly 29 conversations with the wrong speaker count for every 1,000 processed.
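Speaker count accuracy is simple to track on your own evaluation set. The following sketch assumes you have, for each file, the true number of speakers and the number the system reported; it also separates over-counting (one voice split into two) from under-counting (two voices merged), since the two failure modes affect downstream analytics differently. The function name and input format are illustrative.

```python
from collections import Counter


def speaker_count_report(pairs):
    """pairs: one (true_count, predicted_count) tuple per audio file."""
    outcome = Counter(
        "correct" if pred == true else ("over" if pred > true else "under")
        for true, pred in pairs
    )
    errors = outcome["over"] + outcome["under"]
    return {
        "error_rate": errors / len(pairs),
        "over": outcome["over"],    # system found more speakers than exist
        "under": outcome["under"],  # system merged distinct speakers
    }


print(speaker_count_report([(2, 2), (3, 2), (2, 3), (4, 4)]))
```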

Overlapping Speech Handling

Real conversations aren't perfectly turn-based. People interrupt, laugh, or make affirmations like "mm-hmm" while others speak. Poor diarization systems either miss overlapping speech entirely or incorrectly merge speakers together.

Advanced systems detect and label overlapping segments, maintaining accuracy even when multiple speakers talk simultaneously. This capability is crucial for natural conversations where interruptions and reactions are common.
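When a system outputs possibly overlapping speaker segments, the overlapped regions can be recovered with a sweep over segment start and end events. This is a minimal sketch assuming `(start_sec, end_sec, speaker)` tuples; it reports every interval in which two or more distinct speakers are active at once.

```python
def overlapping_regions(segments):
    """Return (start, end, speakers) for intervals where 2+ speakers talk at once."""
    events = []
    for start, end, speaker in segments:
        events.append((start, 1, speaker))
        events.append((end, -1, speaker))
    # At equal times, process ends (-1) before starts (+1) so that
    # back-to-back segments are not counted as overlap
    events.sort(key=lambda e: (e[0], e[1]))

    active, regions, prev_t = {}, [], None
    for t, delta, speaker in events:
        if prev_t is not None and t > prev_t and len(active) >= 2:
            regions.append((prev_t, t, tuple(sorted(active))))
        active[speaker] = active.get(speaker, 0) + delta
        if active[speaker] == 0:
            del active[speaker]
        prev_t = t
    return regions


# Speaker B says "mm-hmm" while A is still talking
print(overlapping_regions([(0.0, 4.0, "A"), (3.5, 5.0, "B"), (6.0, 7.0, "A")]))
```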

Temporal Precision

How accurately does the system identify when speaker changes happen? Some systems are excellent at knowing who's speaking but poor at pinpointing exact transition moments. This matters for applications requiring precise timestamps, like legal transcription or detailed conversation analysis.
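One way to quantify temporal precision is to compare speaker-change points directly. The sketch below extracts the times where the label changes in each system's output (so label names don't need to match) and counts a hypothesized boundary as correct if it falls within a tolerance of an unused reference boundary. The 250 ms tolerance is an assumed value, and the greedy nearest-match strategy is one simple choice among several.

```python
def change_points(segments):
    """Times where the speaker label changes, from non-overlapping segments."""
    segs = sorted(segments)
    return [b[0] for a, b in zip(segs, segs[1:]) if a[2] != b[2]]


def boundary_precision_recall(ref_segments, hyp_segments, tolerance=0.25):
    ref = change_points(ref_segments)
    hyp = change_points(hyp_segments)
    unmatched = list(ref)
    hits = 0
    for t in hyp:
        # Greedily match to the nearest unused reference boundary within tolerance
        candidates = [r for r in unmatched if abs(r - t) <= tolerance]
        if candidates:
            unmatched.remove(min(candidates, key=lambda r: abs(r - t)))
            hits += 1
    precision = hits / len(hyp) if hyp else 1.0
    recall = hits / len(ref) if ref else 1.0
    return precision, recall


ref = [(0.0, 5.0, "A"), (5.0, 9.0, "B"), (9.0, 12.0, "A")]
hyp = [(0.0, 5.1, "s0"), (5.1, 8.0, "s1"), (8.0, 12.0, "s0")]
print(boundary_precision_recall(ref, hyp))
```

Here the first change is found 100 ms late (within tolerance) but the second is placed a full second early, so half of the boundaries count as correct on both measures.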

Robustness Metrics

Production diarization systems must handle challenging audio conditions:

- Background noise tolerance: Performance in noisy environments like cafes or open offices

- Audio quality resilience: Accuracy with compressed audio, poor phone connections, or low-quality recordings

- Short utterance detection: Ability to correctly attribute brief statements or interjections

- Cross-language performance: Consistent accuracy across different languages and accents

Modern systems have made significant progress in these scenarios, with documented gains in noisy environments and better accuracy on segments as short as 250ms.
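To check short-utterance performance on your own audio, you can bucket reference segments by duration and score each bucket separately. This sketch assumes the system's labels have already been mapped onto the reference labels (for example with an optimal label mapping), and uses an assumed 250 ms cutoff; each reference segment counts as correct if the hypothesis speaker overlapping it the most has the same label.

```python
def overlap(a_start, a_end, b_start, b_end):
    """Length of the intersection of two time intervals (0 if disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def accuracy_by_duration(reference, hypothesis, short_max=0.25):
    """Attribution accuracy for short vs. long reference segments.

    Assumes hypothesis labels are already mapped onto reference labels.
    """
    stats = {"short": [0, 0], "long": [0, 0]}  # bucket -> [correct, total]
    for r_start, r_end, r_spk in reference:
        votes = {}
        for h_start, h_end, h_spk in hypothesis:
            votes[h_spk] = votes.get(h_spk, 0.0) + overlap(r_start, r_end, h_start, h_end)
        best = max(votes, key=votes.get) if votes and max(votes.values()) > 0 else None
        bucket = "short" if r_end - r_start <= short_max else "long"
        stats[bucket][1] += 1
        stats[bucket][0] += int(best == r_spk)
    return {k: (c / n if n else None) for k, (c, n) in stats.items()}


# B's 200 ms interjection is absorbed into A's turn by the system
reference = [(0.0, 3.0, "A"), (3.0, 3.2, "B"), (3.2, 6.0, "A")]
hypothesis = [(0.0, 6.0, "A")]
print(accuracy_by_duration(reference, hypothesis))
```

A system can look excellent on overall DER while scoring poorly on the short bucket, because brief interjections contribute little total time.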

Comparing speaker diarization solutions

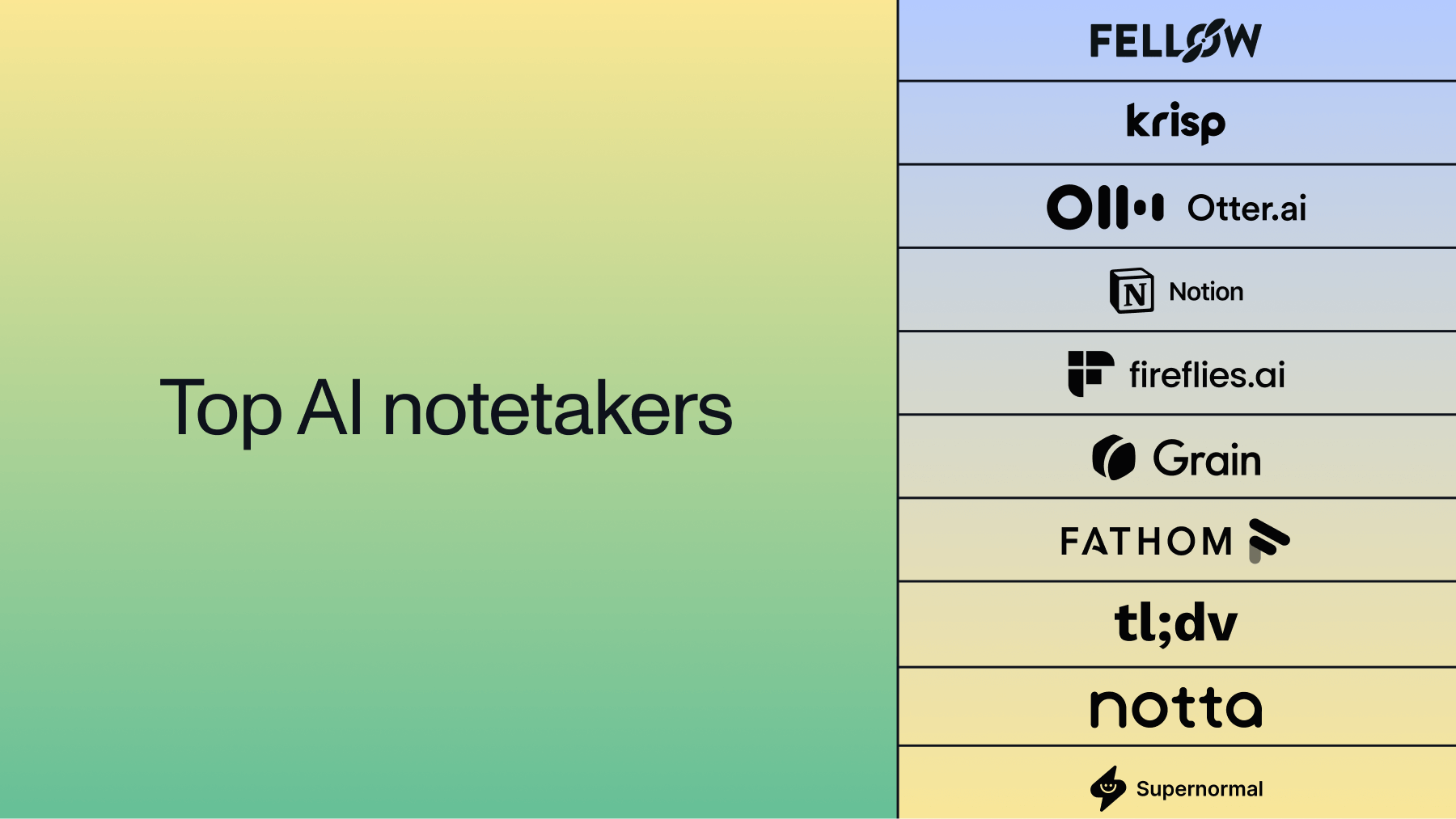

The speaker diarization landscape includes both commercial APIs and open-source tools, each optimized for different use cases and deployment scenarios.

Commercial APIs

AssemblyAI demonstrates strong performance in challenging audio conditions. The company’s models achieve low speaker count error rates and show improved performance in noisy environments and with short segments (250ms or less) compared to baseline systems. With support for speaker diarization in 95 languages and no additional cost for the feature, the system handles production requirements including conference room recordings with ambient noise and multi-speaker discussions with overlapping voices. The platform offers SOC2 Type 2 certification for enterprise deployments.

Gladia combines OpenAI's Whisper transcription with Pyannote's diarization models. This approach makes sense for teams already using Whisper who want to add speaker identification without switching transcription providers. Their enhanced diarization option includes additional processing for edge cases and challenging audio conditions.

Open Source Solutions

Pyannote represents the current state of the art in open-source diarization. Developed by researchers at CNRS, it's widely used in academic research and provides the foundation for several commercial services. With optimized configurations it achieves approximately 10% DER on standard benchmarks, though real-world performance in challenging conditions may vary. It offers flexibility but requires significant machine learning expertise to deploy effectively.

NVIDIA NeMo takes an innovative end-to-end transformer approach optimized for GPU deployment. Their Sortformer architecture uses an 18-layer Transformer design that eliminates traditional pipeline stages by treating diarization as a unified problem. Their models can be particularly effective for organizations with existing NVIDIA infrastructure, though setup complexity is higher than API-based solutions.

Kaldi remains a staple in academic research with highly configurable pipelines. While powerful, Kaldi requires deep expertise in speech processing and is better suited for research than production deployments.

SpeechBrain, built on PyTorch, provides over 200 pre-trained models for various speech tasks, including diarization. It's designed for researchers and developers who need flexibility and want to experiment with different approaches.

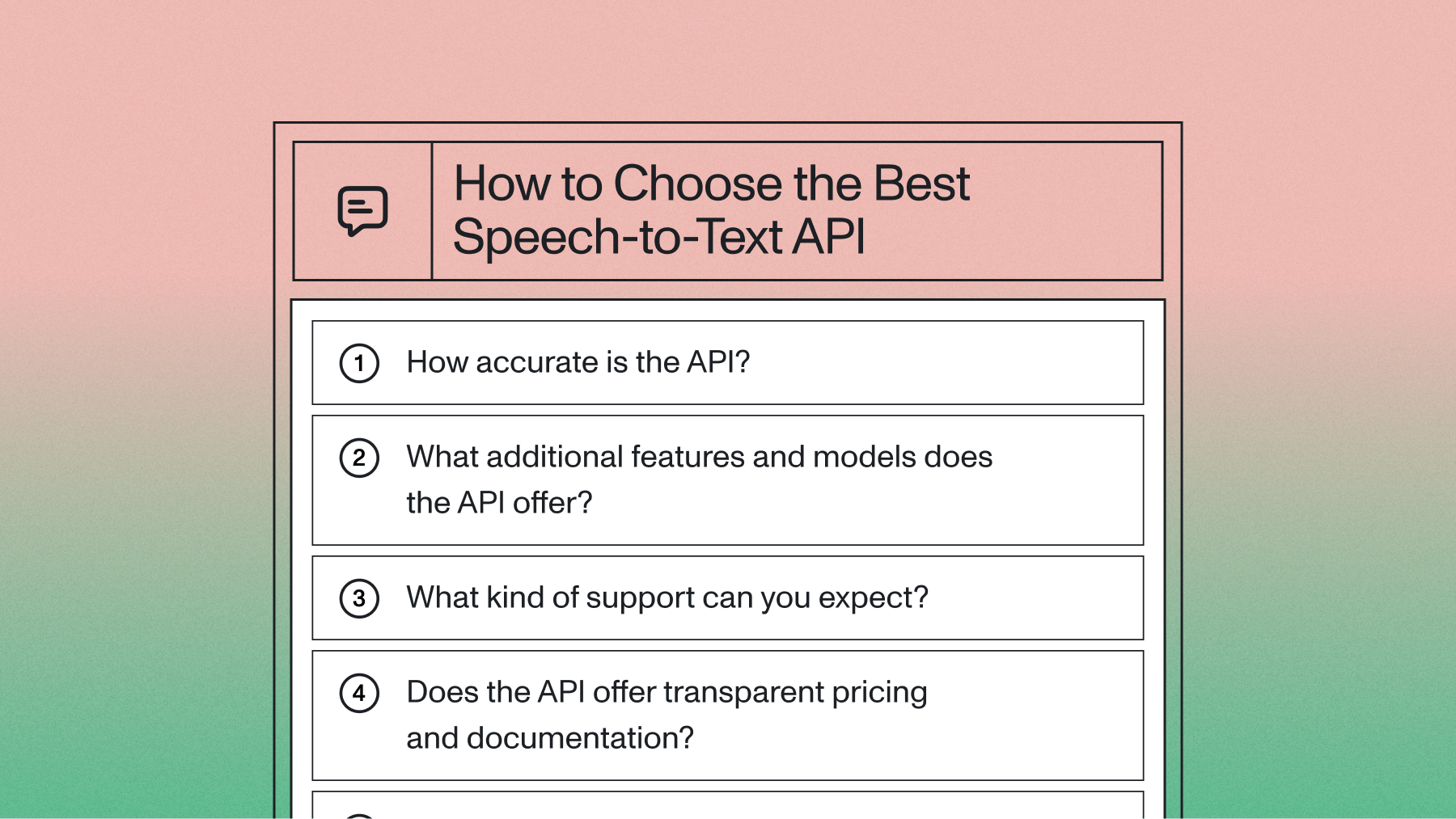

Choosing the right diarization solution

Your choice depends on several key factors that align with your technical requirements and business constraints.

For production applications requiring high accuracy in challenging conditions, commercial APIs like AssemblyAI offer the fastest path to deployment with proven performance improvements in noisy and far-field audio scenarios. These services handle model optimization, infrastructure scaling, and ongoing improvements, letting you focus on your core application logic.

For research and experimentation, open-source tools like Pyannote or SpeechBrain provide maximum flexibility. You can modify models, experiment with different architectures, and contribute improvements back to the community. However, expect significant setup time and ongoing maintenance overhead.

For real-time applications like live captioning or streaming platforms, prioritize solutions with proven low-latency performance and robust handling of varying audio conditions.

For enterprise deployments with compliance requirements, consider solutions with enterprise-grade security certifications. AssemblyAI offers SOC2 Type 2 certification and Business Associate Agreements for healthcare applications.

For cost-sensitive applications processing large audio volumes, evaluate total cost of ownership carefully. While some solutions have no direct API costs, factor in infrastructure, maintenance, and expertise requirements. AssemblyAI includes speaker diarization at no additional cost, which can significantly impact overall project economics.

For challenging audio conditions, prioritize solutions with documented improvements in noisy environments, overlapping speech, and short utterances. Recent advances in transformer architectures and self-supervised learning are pushing accuracy higher while reducing computational requirements.

Final words

The speaker diarization landscape continues evolving rapidly. Modern systems increasingly handle real-world audio challenges that would have stumped earlier generations of technology. When choosing a solution, test with your specific audio types and conditions rather than relying solely on benchmark scores.

Understanding these capabilities helps you build more effective audio AI applications that accurately separate speakers and deliver reliable conversation insights.
