How to build and deploy a voice agent using Pipecat and AssemblyAI

Ship voice AI agents with millisecond latency using Pipecat and AssemblyAI's Universal-Streaming. This complete tutorial walks you through setup, real-time transcription, testing, and cloud deployment.

Building a voice AI agent that responds in milliseconds used to require months of complex audio engineering. Today, you can ship production-ready voice agents in hours.

To feel natural, a voice agent needs low latency, accurate transcription even with background noise, and intelligent conversation management. In production, that means transcribing names and numbers correctly, managing conversation flow without awkward interruptions, and keeping latency low enough for real-time interaction.

In this tutorial, we'll build a production-ready voice agent that meets these requirements using Pipecat's orchestration framework, AssemblyAI's Universal-Streaming speech-to-text, OpenAI's reasoning capabilities, and Cartesia's natural voice synthesis. You'll learn how to create, test locally, and deploy a fully functional voice assistant to the cloud.

Here's what we're building: a conversational AI that understands spoken questions, processes them through a large language model, and responds with natural-sounding speech—all in real-time.

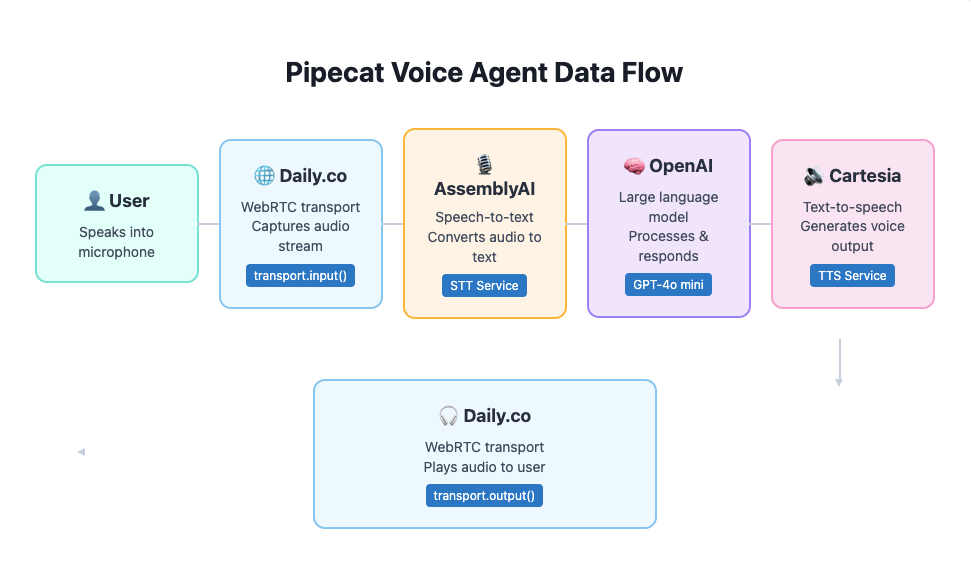

Understanding the architecture

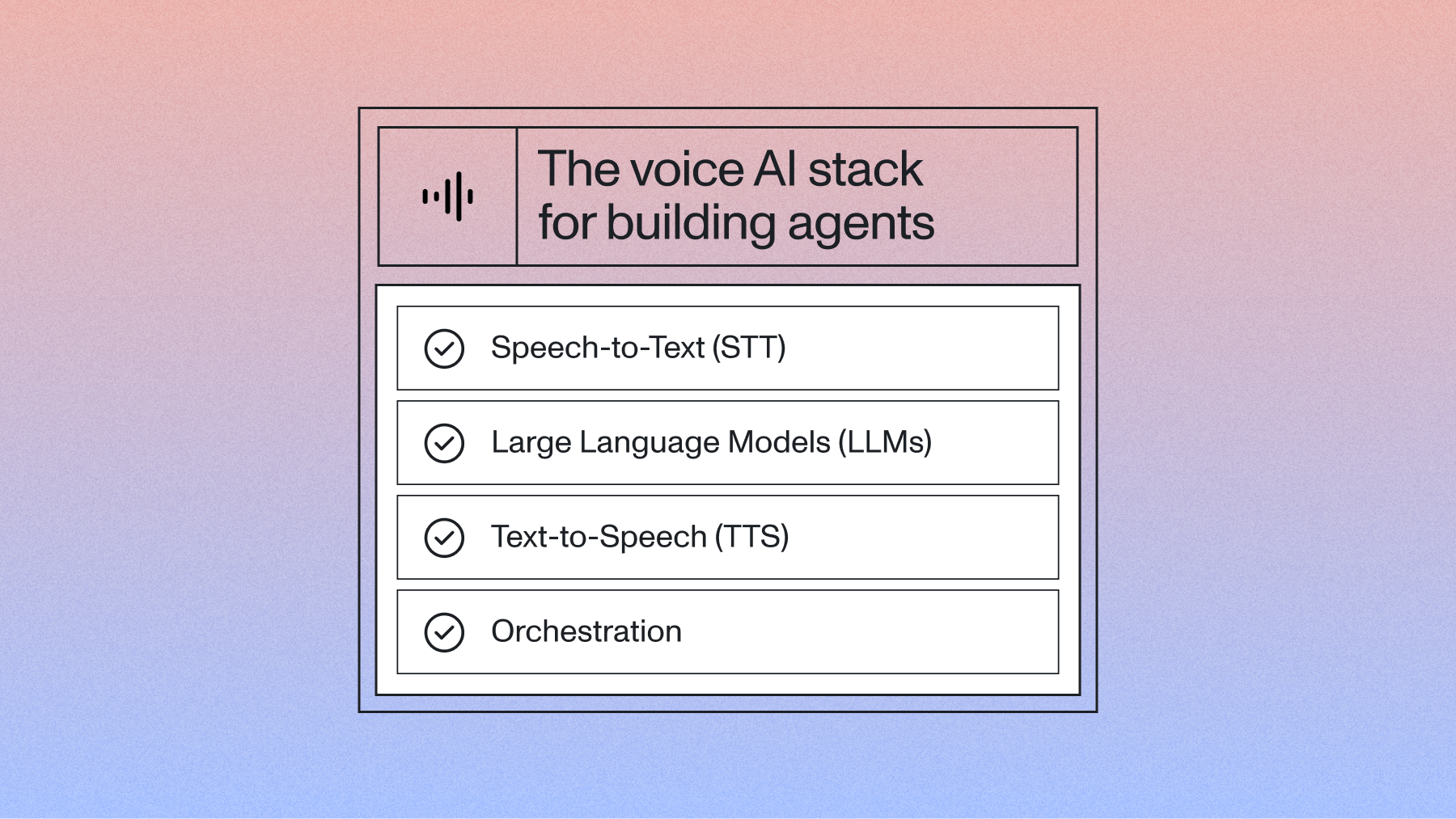

Many modern voice agents use a cascading model approach where each specialized AI service handles one part of the conversation flow. Think of it as a production line where your voice passes through different stages:

- Speech Recognition: AssemblyAI's Universal-Streaming speech-to-text converts spoken words into text with intelligent turn detection

- Processing: Pipecat orchestrates the data flow between services

- Understanding: OpenAI's LLM interprets the text and generates responses

- Speech Synthesis: Cartesia transforms the response back into natural speech

- Delivery: Daily's WebRTC infrastructure ensures real-time communication

This modular architecture lets you swap components, optimize for specific use cases, and scale each service independently. Pipecat acts as the conductor, managing timing, interruptions, and the complex dance of real-time conversation.
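To make the production-line idea concrete, here is a minimal sketch of a cascading pipeline in plain Python. It does not use Pipecat or any real service: the stage functions `fake_stt`, `fake_llm`, and `fake_tts` and the `run_cascade` helper are hypothetical stand-ins, and the "audio" is just bytes. It only illustrates how each stage consumes the previous stage's output and why any single stage can be swapped out.

```python
import asyncio
from typing import Awaitable, Callable, Sequence

# Each stage is an async function that takes the previous stage's output.
Stage = Callable[[object], Awaitable[object]]


async def fake_stt(audio: bytes) -> str:
    """Stand-in for speech recognition: pretend the bytes are UTF-8 text."""
    return audio.decode("utf-8")


async def fake_llm(text: str) -> str:
    """Stand-in for the LLM: produce a canned reply."""
    return f"You said: {text}"


async def fake_tts(text: str) -> bytes:
    """Stand-in for speech synthesis: encode the reply back to bytes."""
    return text.encode("utf-8")


async def run_cascade(stages: Sequence[Stage], payload: object) -> object:
    """Pass the payload through each stage in order, like a production line."""
    for stage in stages:
        payload = await stage(payload)
    return payload


if __name__ == "__main__":
    reply = asyncio.run(run_cascade([fake_stt, fake_llm, fake_tts], b"hello"))
    print(reply)
```

Replacing one entry in the list passed to `run_cascade` is the toy equivalent of swapping a provider. A real framework such as Pipecat additionally streams partial results and handles interruptions, which this sketch leaves out.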

When building production voice agents, consider the reliability requirements of your infrastructure. Services like AssemblyAI typically provide SLAs with specific uptime guarantees—important for business-critical applications.

Prerequisites and setup

Before we start building, ensure you have these tools installed:

- Python 3.10 or higher

- UV package manager for dependency management

- Docker Desktop for containerization

- A terminal with shell access

Important: Pipecat Cloud requires ARM64 images for deployment. If you're on an Intel Mac or a Windows machine, you'll need to cross-build your Docker image for ARM64, as covered in the deployment section later in this tutorial.

Let's create our project:

mkdir pipecat-voice-agent && cd pipecat-voice-agent

uv tool install pipecatcloud

pcc auth login

pcc init

The pcc init command generates a pre-configured project structure. Open the project in your editor and update the requirements.txt file:

pipecat-ai[assemblyai,webrtc]

pipecatcloud

python-dotenv

Initialize your environment:

uv venv

uv pip install -r requirements.txt

Configuring API keys

Our voice agent requires API keys from four services: AssemblyAI, OpenAI, Cartesia, and Daily. You can create each key from the corresponding provider's account dashboard.

Copy env.example to .env and add your keys:

cp env.example .env

Your .env file should look like this:

ASSEMBLYAI_API_KEY=your_assemblyai_key_here

OPENAI_API_KEY=your_openai_key_here

CARTESIA_API_KEY=your_cartesia_key_here

DAILY_API_KEY=your_daily_key_here
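A missing key usually surfaces only when the first request to that service fails, so it can help to check for all four at startup. The sketch below is one way to do that. The `missing_keys` helper is hypothetical and not part of the generated project; the variable names are the ones from the .env file above.

```python
import os
from typing import Mapping

# The four variable names come from the .env file above.
REQUIRED_KEYS = (
    "ASSEMBLYAI_API_KEY",
    "OPENAI_API_KEY",
    "CARTESIA_API_KEY",
    "DAILY_API_KEY",
)


def missing_keys(env: Mapping[str, str]) -> list[str]:
    """Return the required variables that are unset or blank (hypothetical helper)."""
    return [name for name in REQUIRED_KEYS if not env.get(name, "").strip()]


if __name__ == "__main__":
    missing = missing_keys(os.environ)
    if missing:
        print("Missing API keys: " + ", ".join(missing))
    else:
        print("All API keys are set.")
```

In bot.py you would run this check after the .env file has been loaded into the environment. Note that it only detects unset or blank values; a placeholder such as `your_openai_key_here` would still pass.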

Implementing AssemblyAI speech recognition

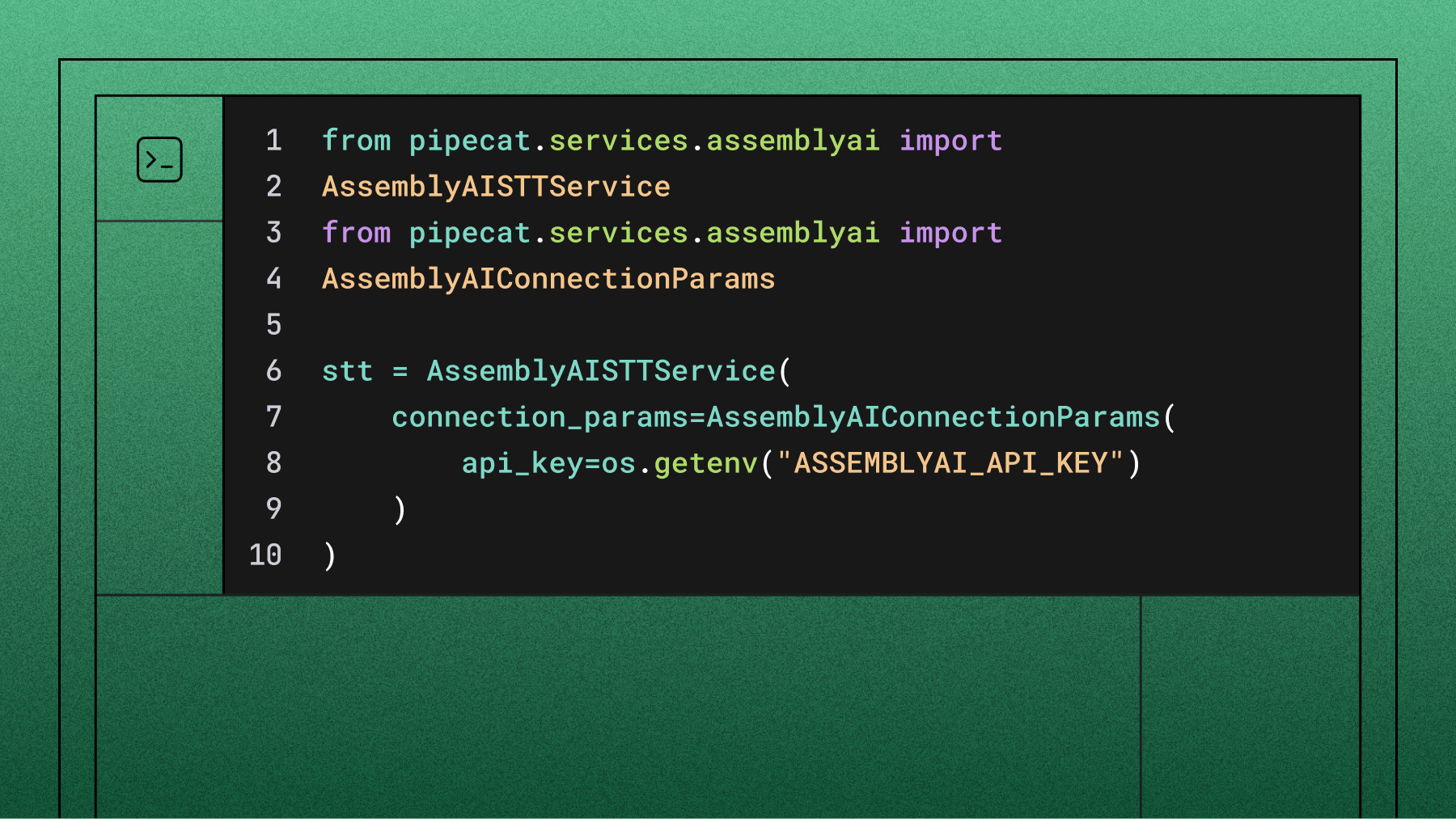

Now let's integrate AssemblyAI's Universal-Streaming speech-to-text service, which provides real-time transcription optimized for conversational AI. Open bot.py and add these imports at the top:

import os

from pipecat.services.assemblyai import AssemblyAISTTService
from pipecat.services.assemblyai import AssemblyAIConnectionParams

Initialize the STT service with your API key:

stt = AssemblyAISTTService(
    connection_params=AssemblyAIConnectionParams(
        api_key=os.getenv("ASSEMBLYAI_API_KEY")
    )
)

For more natural conversations, you can customize the turn detection parameters:

# Tip: Customize turn detection for more natural conversations
stt = AssemblyAISTTService(
    connection_params=AssemblyAIConnectionParams(
        api_key=os.getenv("ASSEMBLYAI_API_KEY"),
        end_of_turn_confidence_threshold=0.8,  # Higher = wait for more certainty
        min_end_of_turn_silence_when_confident=300,  # ms of silence when confident
        max_turn_silence=1000,  # Maximum silence before forcing turn end
    )
)
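To build intuition for how the three turn detection parameters interact, here is a small sketch of one plausible decision rule. It is an assumption based on the parameter names and comments above, not AssemblyAI's actual implementation; `TurnParams` and `should_end_turn` are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TurnParams:
    """Mirrors the three settings above; the class itself is hypothetical."""

    end_of_turn_confidence_threshold: float = 0.8
    min_end_of_turn_silence_when_confident: int = 300  # ms
    max_turn_silence: int = 1000  # ms


def should_end_turn(confidence: float, silence_ms: int, params: TurnParams) -> bool:
    """Decide whether the speaker's turn is over.

    Assumed rule: end the turn when the model is confident and a short
    silence has passed, or when the silence is long enough regardless.
    """
    confident = confidence >= params.end_of_turn_confidence_threshold
    if confident and silence_ms >= params.min_end_of_turn_silence_when_confident:
        return True
    return silence_ms >= params.max_turn_silence
```

Under this reading, raising the confidence threshold makes the agent less likely to cut a speaker off mid-thought, while lowering `max_turn_silence` caps how long it waits when the model is unsure.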

The magic happens in the pipeline configuration. Pipecat processes audio through a series of services, and positioning matters. Place AssemblyAI directly after the transport input and before the user context aggregator, so the aggregator receives the transcribed text and passes it on to the LLM:

pipeline = Pipeline([
    transport.input(),
    stt,  # AssemblyAI processes audio here
    context_aggregator.user(),
    llm,
    tts,
    transport.output(),
    context_aggregator.assistant(),
])

To monitor transcriptions during development, let's add a transcript processor:

from pipecat.processors.transcript_processor import TranscriptProcessor

# Before creating the pipeline
transcript_processor = TranscriptProcessor()

# Update pipeline to include transcript monitoring
pipeline = Pipeline([
    transport.input(),
    stt,
    transcript_processor.user(),
    context_aggregator.user(),
    llm,
    tts,
    transport.output(),
    transcript_processor.assistant(),
    context_aggregator.assistant(),
])

# Add event handler
@transcript_processor.event_handler("on_transcript_update")
async def on_transcript_update(processor, frame):
    # frame.messages holds the transcript messages added in this update
    for msg in frame.messages:
        print(f"{msg.role}: {msg.content}")
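The handler above prints each message as `role: content`. If you want to unit test that formatting without running a pipeline, the logic can be pulled into a plain function, as in the sketch below. The `Message` dataclass is a hypothetical stand-in that assumes only the `role` and `content` attributes used by the handler; it is not Pipecat's own message type.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Message:
    """Hypothetical stand-in for a transcript message with role and content."""

    role: str
    content: str


def format_transcript(messages: Iterable[Message]) -> list[str]:
    """Render each message as 'role: content', skipping blank content."""
    return [
        f"{m.role}: {m.content.strip()}"
        for m in messages
        if m.content.strip()
    ]
```

Inside the event handler you could then print each line returned by `format_transcript(frame.messages)` rather than formatting inline.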

Testing locally

Before deploying, let's ensure everything works on your machine. The generated code includes a local testing flag:

env LOCAL_RUN=1 uv run bot.py

You should see the bot initialize and connect to Daily's WebRTC service. Speak into your microphone and watch the transcripts appear in your console. The agent should respond naturally to questions like "What is Pipecat?" or "Tell me about AI voice agents."

Common issues during local testing:

Building and deploying to the cloud

Pipecat Cloud simplifies deployment but requires specific configuration. First, update pcc-deploy.toml:

agent_name = "my-voice-agent"

image = "yourdockerhub/my-voice-agent:latest"

secret_set = "my-voice-agent-secrets"

Critical: Build your Docker image for ARM64 architecture:

docker build --platform=linux/arm64 -t my-voice-agent .

docker tag my-voice-agent yourdockerhub/my-voice-agent:latest

docker push yourdockerhub/my-voice-agent:latest

If you're on an x86 machine, use Docker's buildx for multi-platform builds:

docker buildx build --platform=linux/arm64 -t yourdockerhub/my-voice-agent:latest --push .
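If you script your builds, the choice between the two commands can be made from the host architecture. The sketch below shows one way to do that in Python. `needs_cross_build` and `build_command` are hypothetical helpers, and the set of ARM64 machine names is an assumption about what `platform.machine()` reports on common systems. The script only prints the command; it does not run Docker.

```python
import platform

# Machine names that typically indicate a 64-bit ARM host (assumption).
ARM64_NAMES = {"arm64", "aarch64"}


def needs_cross_build(machine: str) -> bool:
    """Return True when the host is not ARM64 and must cross-build the image."""
    return machine.lower() not in ARM64_NAMES


def build_command(image: str, machine: str) -> list[str]:
    """Choose between the plain build and the buildx command from the tutorial."""
    if needs_cross_build(machine):
        return ["docker", "buildx", "build", "--platform=linux/arm64",
                "-t", image, "--push", "."]
    return ["docker", "build", "--platform=linux/arm64", "-t", image, "."]


if __name__ == "__main__":
    cmd = build_command("yourdockerhub/my-voice-agent:latest", platform.machine())
    print(" ".join(cmd))
```

Unlike the manual steps above, the native branch here tags the image with its registry name directly, so a separate `docker push` would still be needed afterwards.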

Upload your secrets to Pipecat Cloud:

pcc secrets set my-voice-agent-secrets --file .env

Deploy your agent:

pcc deploy

Start your agent with Daily's interface:

pcc agent start my-voice-agent --use-daily --api-key YOUR_PIPECAT_API_KEY

You'll receive a URL to interact with your deployed agent through a web interface.

Next steps

You've built a production-ready voice agent that combines best-in-class AI services. The complete code for this tutorial includes additional features and optimizations.

Consider enhancing your agent with:

- Multi-language support: Configure language detection and response

- Advanced turn detection: Fine-tune conversation flow parameters

- Speech Understanding: Add sentiment analysis or content moderation

Join the Pipecat Discord community for support and explore AssemblyAI's documentation for advanced speech recognition features.

Voice AI is rapidly evolving—what will you build next?
