AI Voice Agents are becoming a key part of how businesses handle customer interactions, and a recent survey found that 76% of companies now embed conversation intelligence in more than half of their customer interactions. But while AI-powered voice interactions have come a long way, building an AI Voice Agent that feels human-like, adapts in real-time, and integrates seamlessly into business workflows is still a complex challenge.

During our YouTube livestream with Jordan Dearsley, CEO of Vapi, we discussed some of the biggest technical challenges developers face when building AI Voice Agents and how modern AI solutions—like Vapi's Workflows and AssemblyAI's Streaming Speech-to-Text API—are solving them.

This guide covers what AI voice agents are, how they work technically, their real-world applications, and the specific engineering challenges you'll face when building them. Whether you're a developer, AI researcher, or business leader, understanding these concepts and challenges is crucial to building better AI-powered voice interactions.

What are AI voice agents?

An AI voice agent is an automated system that understands and responds to human speech in real time, handling customer service, sales calls, and appointment scheduling without human intervention. Unlike traditional phone systems that require button presses, AI voice agents process natural language and complete complex tasks through conversation.

Chatbots handle only text. AI voice agents hold spoken conversations and integrate with business systems to complete tasks.

Key capabilities:

- Context retention: Remember information across entire conversations

- Natural interruptions: Handle overlapping speech like humans

- System integration: Execute actions beyond answering questions

- Emotional awareness: Adapt responses to caller sentiment

How AI voice agents work

AI voice agents chain three AI models together. Every conversational turn has to pass through all three in a fraction of a second:

- Speech-to-Text (STT): Converts spoken words into text using streaming APIs like AssemblyAI's for near real-time processing.

- Language Model (LLM): Analyzes text to understand intent and generate contextually relevant responses.

- Text-to-Speech (TTS): Converts text responses into natural-sounding audio output.

The technical pipeline

The magic is getting all three steps to happen so quickly that the conversation feels natural, with no awkward pauses. Modern platforms like Vapi orchestrate these components with sub-second latency, enabling conversations that flow as smoothly as human-to-human interactions.
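The sketch below shows one conversational turn moving through the three stages, with each stage timed separately. `transcribe`, `generate_reply`, and `synthesize` are hypothetical stand-ins, not AssemblyAI or Vapi APIs. The stages also run strictly in sequence here for clarity. A production agent would call streaming services and overlap the stages.

```python
import time
from dataclasses import dataclass, field


@dataclass
class TurnResult:
    transcript: str
    reply_text: str
    reply_audio: bytes
    # Wall-clock time spent in each stage, in milliseconds.
    timings_ms: dict = field(default_factory=dict)


def transcribe(audio: bytes) -> str:
    """Hypothetical STT stand-in: treat the audio bytes as UTF-8 text."""
    return audio.decode("utf-8")


def generate_reply(transcript: str) -> str:
    """Hypothetical LLM stand-in: echo the caller's request back."""
    return f"Sure, I can help with: {transcript}"


def synthesize(text: str) -> bytes:
    """Hypothetical TTS stand-in: return the text as bytes instead of audio."""
    return text.encode("utf-8")


def run_turn(audio: bytes) -> TurnResult:
    """Run one STT -> LLM -> TTS turn and record how long each stage took."""
    timings = {}

    start = time.perf_counter()
    transcript = transcribe(audio)
    timings["stt"] = (time.perf_counter() - start) * 1000

    start = time.perf_counter()
    reply_text = generate_reply(transcript)
    timings["llm"] = (time.perf_counter() - start) * 1000

    start = time.perf_counter()
    reply_audio = synthesize(reply_text)
    timings["tts"] = (time.perf_counter() - start) * 1000

    return TurnResult(transcript, reply_text, reply_audio, timings)
```

Timing each stage on its own shows where a turn's latency actually goes. That matters when the whole turn has to fit in well under a second.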

Component impact on user experience

- Speech-to-Text: Misunderstood words immediately break conversations

- Language Model: Irrelevant responses frustrate users and reduce trust

- Text-to-Speech: Robotic voices decrease user engagement, as research shows that natural-sounding voices are rated as more trustworthy and competent.

Use cases and applications for AI voice agents

AI voice agents are more than just a futuristic concept—they're already handling real-world business functions. Developers are building them for a wide range of applications that demonstrate the versatility of Voice AI technology.

Customer support automation

Companies deploy voice agents to handle support inquiries through natural language instead of menu trees.

Key benefits:

- Faster resolution times

- Natural-language requests ("I need to change my delivery address") instead of menu navigation

- Improved customer satisfaction, with market survey data indicating that over 70% of companies see a measurable increase in end-user satisfaction after implementing conversation intelligence.

Outbound sales and lead qualification

Voice agents automatically call prospects to qualify leads, schedule demos, or conduct surveys. They adapt their pitch based on responses, handle objections, and seamlessly transfer warm leads to human sales representatives. This allows sales teams to focus on high-value conversations while automation handles initial outreach.

Appointment scheduling

Healthcare clinics, home service providers, and professional services use voice agents to book, reschedule, or cancel appointments. These agents integrate directly with calendar systems, check availability in real-time, and send confirmations—all through natural conversation. Patients and customers appreciate the convenience of scheduling without waiting on hold.
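Checking availability usually comes down to finding open slots between existing bookings. This sketch assumes the calendar system returns booked appointments as `(start, end)` `datetime` pairs. `find_open_slots` is a hypothetical helper, not part of any calendar API.

```python
from datetime import datetime, timedelta


def find_open_slots(booked, day_start, day_end, duration):
    """Return start times of free slots of `duration` between day_start and day_end.

    `booked` is a list of (start, end) datetime pairs; they may overlap or be unsorted.
    """
    slots = []
    cursor = day_start
    for start, end in sorted(booked):
        # Fill the gap before this booking with back-to-back slots.
        while cursor + duration <= min(start, day_end):
            slots.append(cursor)
            cursor += duration
        # Jump past the booking; max() copes with overlapping bookings.
        cursor = max(cursor, end)
    # Fill whatever time is left after the last booking.
    while cursor + duration <= day_end:
        slots.append(cursor)
        cursor += duration
    return slots


if __name__ == "__main__":
    day = datetime(2024, 5, 6)
    booked = [
        (day.replace(hour=10), day.replace(hour=11)),
        (day.replace(hour=13), day.replace(hour=14, minute=30)),
    ]
    slots = find_open_slots(booked, day.replace(hour=9), day.replace(hour=17), timedelta(hours=1))
    print([s.strftime("%H:%M") for s in slots])
    # prints ['09:00', '11:00', '12:00', '14:30', '15:30']
```

The agent can read the first few results back to the caller ("I have 11:00 or 12:00") and then book whichever one they choose.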

Order placement and tracking

Restaurants, retailers, and logistics companies enable customers to place orders or check order status through voice agents. The agents handle complex requests like "I'd like my usual pizza but make it gluten-free this time" by accessing customer history and preferences.
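Resolving "my usual" can be as simple as looking up the caller's order history. This sketch makes several assumptions. History arrives as a list of dicts with an `item` key. "Usual" means the most frequently ordered item, though a real system might pick the most recent one. The language model has already extracted "make it gluten-free" into a modifier string. Both helpers are hypothetical.

```python
from collections import Counter


def usual_order(history):
    """Return the customer's most frequently ordered item, or None if there is no history."""
    if not history:
        return None
    return Counter(order["item"] for order in history).most_common(1)[0][0]


def build_order(history, modifiers):
    """Start from the customer's usual item and apply this call's modifiers."""
    item = usual_order(history)
    if item is None:
        # No history to fall back on: the agent should ask what the caller wants.
        raise ValueError("no order history")
    return {"item": item, "modifiers": sorted(set(modifiers))}
```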

Interruptions and overlapping speech: making AI conversations feel natural

In human conversations, interruptions happen all the time. People talk over each other, change topics mid-sentence, and pause before finishing a thought. A well-designed AI Voice Agent needs to handle interruptions naturally—but that's much harder than it sounds.

Many AI systems struggle with rigid, turn-based conversation models, meaning they either talk over the user or wait too long to respond, making interactions feel robotic.

How modern AI voice agents handle interruptions

Vapi solves this by orchestrating real-time speech processing with low-latency models. This means the AI Voice Agent can:

- ✔ Detect when a user starts speaking and immediately stop talking

- ✔ Use AssemblyAI's Streaming Speech-to-Text API, which transcribes speech with low latency, so the AI can react almost as quickly as a human

- ✔ Adjust its conversation flow based on intent—if a user says "Wait, I need to change that," the AI can pause and respond accordingly
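Interruption handling (often called barge-in) can be modeled as a small state machine. While the agent is speaking, any detected user speech cancels the reply and hands the turn back to the caller. This is a simplified sketch, not Vapi's implementation. The `AgentTurnState` class and its event names are hypothetical. Cancelling during the "thinking" phase, as well as while speaking, is a design choice made here for illustration.

```python
from enum import Enum, auto


class State(Enum):
    LISTENING = auto()
    THINKING = auto()
    SPEAKING = auto()


class AgentTurnState:
    """Track whose turn it is and cancel agent output when the user barges in."""

    def __init__(self):
        self.state = State.LISTENING
        self.cancelled_replies = 0

    def on_user_speech_start(self):
        # Barge-in: stop talking (or drop the reply being prepared) and listen.
        if self.state in (State.SPEAKING, State.THINKING):
            self.cancelled_replies += 1
        self.state = State.LISTENING

    def on_user_turn_end(self):
        # The caller has finished; start generating a reply.
        if self.state is State.LISTENING:
            self.state = State.THINKING

    def on_reply_ready(self):
        # Speak only if the user hasn't started talking again in the meantime.
        if self.state is State.THINKING:
            self.state = State.SPEAKING

    def on_playback_finished(self):
        if self.state is State.SPEAKING:
            self.state = State.LISTENING
```

If a reply arrives after the caller has interrupted, the state machine drops it instead of talking over them. In a real agent, `on_user_speech_start` would also stop the audio player and cancel any in-flight LLM and TTS requests.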

The result? Conversations that feel fluid and responsive, rather than frustrating and rigid.

Maintaining context in conversations

One of the biggest frustrations with AI Voice Agents is when they forget what was said earlier in the conversation. Imagine booking an appointment, providing your name at the start, and then being asked for it again later.

This happens because many AI models process conversations in short bursts. They don't inherently remember past interactions unless they have a structured way to store and recall information.

The power of AI conversation workflows

Vapi solves this by introducing structured workflows that let developers define how an AI Voice Agent should:

- ✔ Store important details (like a user's name or appointment time) throughout a session

- ✔ Retrieve context when needed, rather than relying on an LLM to "remember"

- ✔ Reduce hallucinations: LLMs sometimes make up details when trying to recall past information, and structured memory reduces those errors
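One way to picture structured memory: the workflow keeps confirmed details in explicit slots and injects them into each prompt, instead of relying on the LLM to recall them from a long transcript. The `SessionMemory` class and the slot names are hypothetical illustrations, not Vapi's Workflows API.

```python
class SessionMemory:
    """Store confirmed facts from a call in named slots."""

    def __init__(self, required_slots):
        self.required_slots = list(required_slots)
        self.slots = {}

    def remember(self, name, value):
        self.slots[name] = value

    def missing(self):
        """Slots the agent still needs to ask for, in declaration order."""
        return [name for name in self.required_slots if name not in self.slots]

    def as_prompt_context(self):
        """Render known facts as a block to prepend to the LLM prompt."""
        lines = [f"- {name}: {value}" for name, value in self.slots.items()]
        return "Known facts about this caller:\n" + "\n".join(lines)
```

The caller's name is stored once and reused, so the agent never has to ask for it again. `missing()` tells the workflow which question to ask next.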

With these improvements, AI Voice Agents can handle long, multi-turn conversations more intelligently. Whether helping a customer book a service or answering follow-up questions about a previous request, context retention is critical.

Background noise: filtering out distractions

AI Voice Agents often operate in noisy environments: call centers, busy offices, or homes with background conversations. Humans can instinctively focus on one speaker, but AI models tend to transcribe everything they hear, which leads to messy and inaccurate results. Noise suppression helps, but the method matters. One experiment found that traditional noise suppression can degrade audio quality, while modern deep learning models can significantly improve it.

To improve accuracy in real-world conditions, Vapi relies on high-quality speech-to-text models like AssemblyAI's to handle noisy audio. AssemblyAI's Streaming Speech-to-Text API is designed to differentiate speech from background noise, which helps AI Voice Agents focus on the primary speaker even in challenging environments. By combining accurate transcription with noise-adaptive speech recognition, Vapi enables AI Voice Agents to process conversations more reliably.

These improvements allow AI Voice Agents to work in dynamic, real-world settings without getting confused by background chatter.
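Production speech-to-text models handle noise internally. The basic idea of separating speech from quiet background can still be illustrated with a naive energy-based gate. This is a deliberately simple stand-in technique, not how AssemblyAI's models work. The threshold value is an assumption you would tune for each deployment.

```python
import math


def frame_rms(samples):
    """Root-mean-square energy of one frame of PCM samples (floats in [-1, 1])."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def speech_frames(frames, threshold=0.05):
    """Return indices of frames whose energy exceeds the (assumed) threshold."""
    return [i for i, frame in enumerate(frames) if frame_rms(frame) > threshold]
```

An energy gate cannot tell a nearby TV or a second speaker apart from the caller. That limitation is why learned, noise-robust recognition matters for background chatter.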

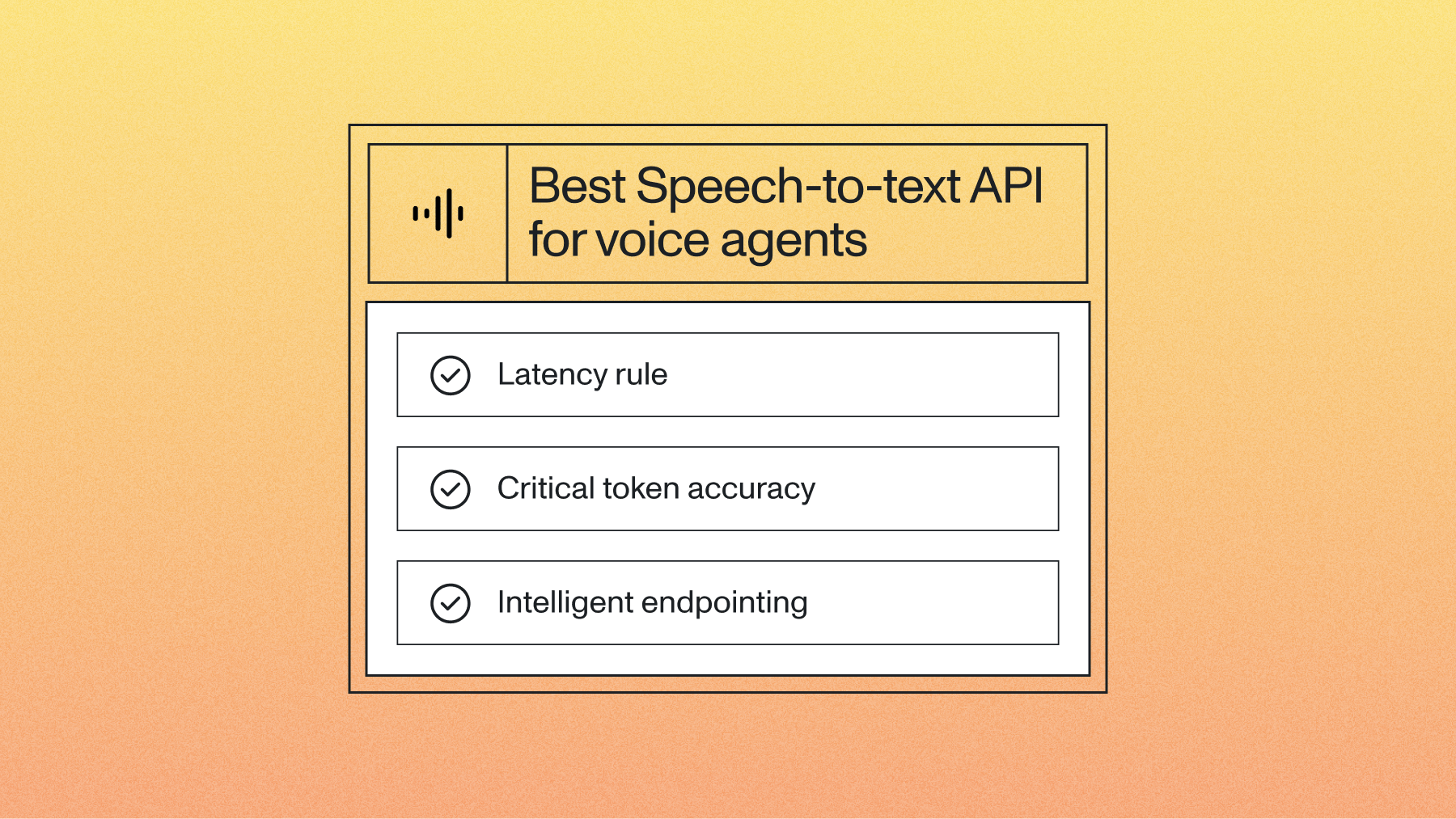

Latency: the key to human-like conversations

Latency is one of the biggest factors in whether an AI Voice Agent feels natural or frustrating. Research on conversational flow shows that natural human conversations have pauses of only 200-500 milliseconds, so delays of even a second break the flow and make interactions feel robotic.

This is particularly challenging because AI Voice Agents combine multiple steps in real time:

- Speech-to-Text (STT) – Converting speech into text

- Processing & AI Logic (LLM or other models) – Understanding intent and generating a response

- Text-to-Speech (TTS) – Converting the AI-generated response back into speech

If any step introduces too much delay, the entire experience suffers. Technical analyses show that while all components contribute, LLM inference is often the largest bottleneck, responsible for 40-60% of the total latency.
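A quick way to reason about this is a per-turn latency budget. The stage timings below are illustrative assumptions, not measurements. They were chosen so the LLM's share lands inside the 40-60% range described above, against a one-second end-to-end target.

```python
# Illustrative (assumed) per-stage latencies for one turn, in milliseconds.
stage_ms = {
    "stt": 250,
    "llm": 450,
    "tts": 150,
    "network": 100,
}

BUDGET_MS = 1000  # Target: under one second end to end.

total_ms = sum(stage_ms.values())
llm_share = stage_ms["llm"] / total_ms

print(f"total: {total_ms} ms (budget {BUDGET_MS} ms)")  # total: 950 ms (budget 1000 ms)
print(f"LLM share: {llm_share:.0%}")                      # LLM share: 47%
print("within budget" if total_ms <= BUDGET_MS else "over budget")
```

With numbers like these, a faster LLM moves the total more than speeding up any other single stage.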

How Vapi optimizes AI voice agent latency

- ✔ Low-latency STT: AssemblyAI's Streaming Speech-to-Text API transcribes speech in just a few hundred milliseconds so AI Voice Agents can react quickly

- ✔ Optimized Orchestration: Vapi allows developers to choose their own models (STT, LLM, TTS) based on performance needs, ensuring minimal processing delays

- ✔ Efficient AI Processing: By structuring conversations into step-by-step workflows, Vapi reduces the need for repeated API calls and unnecessary processing time

The end result? A faster, more responsive AI Voice Agent that keeps the conversation fluid and natural.

How to implement AI voice agents

Building a voice agent from scratch is complex, but modern platforms have made it much more accessible. Here's a practical approach to implementation that balances speed with quality.

Define the goal and workflow

Start by identifying the specific problem you're solving—successful voice agents address focused pain points rather than attempting everything.

Define success metrics:

- Lower support costs and fewer customer complaints

- Automated resolution rate targets

- Fewer calls that require a human agent
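Once metrics like these are defined, they can be computed directly from call logs. This sketch assumes each call record carries hypothetical `resolved` and `escalated` flags. Your own logging schema will differ.

```python
from dataclasses import dataclass


@dataclass
class CallRecord:
    resolved: bool   # The caller's issue was handled by the end of the call.
    escalated: bool  # The call was transferred to a human agent.


def automated_resolution_rate(calls):
    """Share of calls resolved without a human agent."""
    if not calls:
        return 0.0
    automated = sum(1 for c in calls if c.resolved and not c.escalated)
    return automated / len(calls)


def escalation_rate(calls):
    """Share of calls that needed a human agent."""
    if not calls:
        return 0.0
    return sum(1 for c in calls if c.escalated) / len(calls)
```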

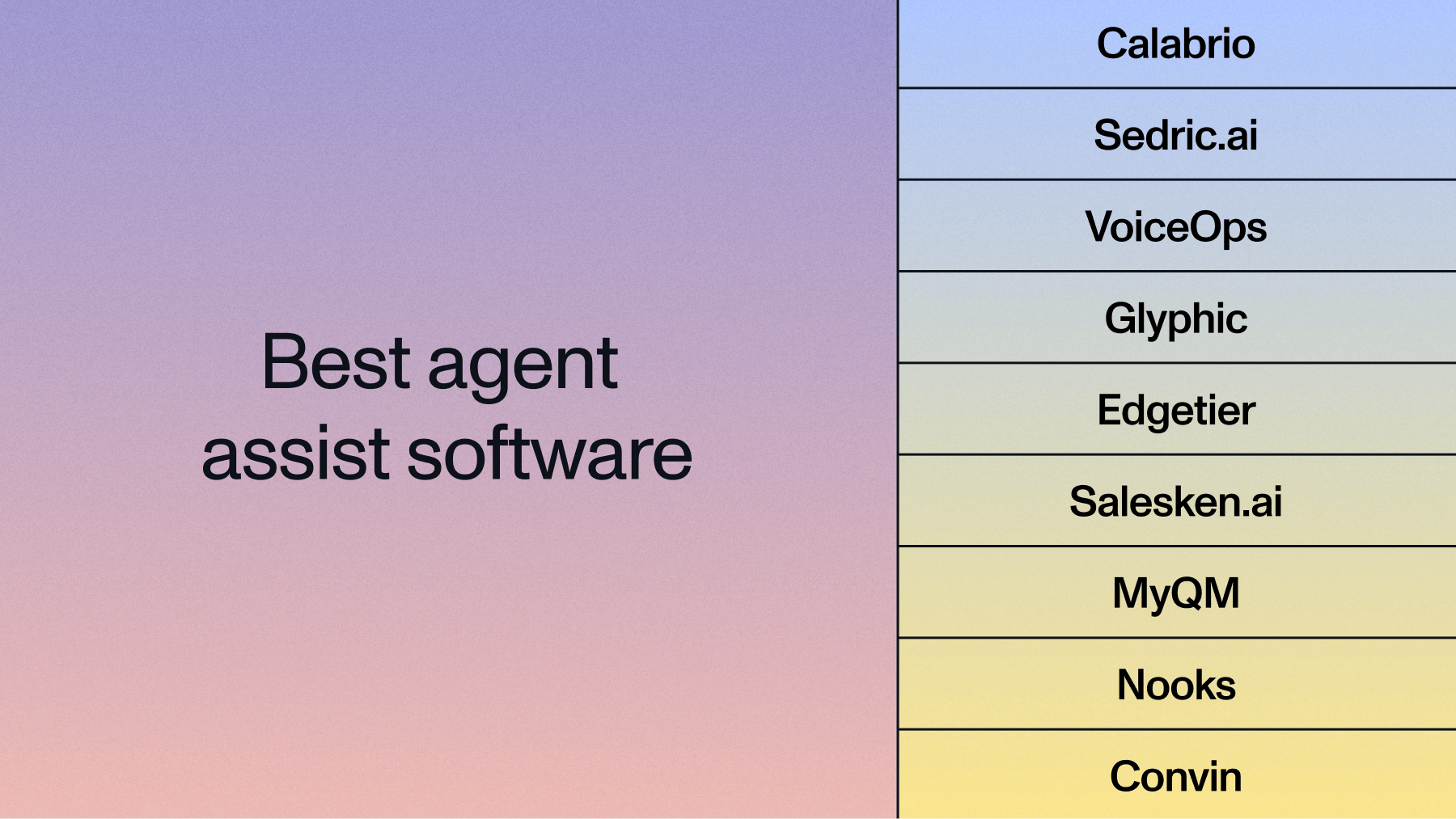

Choose your technology stack

Select the core components for your agent. Your choices here directly impact performance and cost:

| Component | Key Considerations | Example Providers |
|---|---|---|
| Speech-to-Text | Accuracy, latency, language support | AssemblyAI, Google Cloud STT |
| LLM | Response quality, cost per token | GPT-4, Claude, Llama |
| Text-to-Speech | Voice quality, emotional range | ElevenLabs, Azure TTS |
| Orchestration | Integration complexity, scalability | Vapi, custom solution |

Integrate the components

Use a platform like Vapi to orchestrate the different APIs. This handles the complex real-time communication between STT, LLM, and TTS services. Vapi's approach of allowing developers to bring their own models provides flexibility while abstracting away orchestration complexity.
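The bring-your-own-model idea maps naturally onto small interfaces. The orchestrator depends only on what each stage does, so any STT, LLM, or TTS provider can be swapped in behind an adapter. The `Protocol` names and the fake adapters are hypothetical; this is not Vapi's SDK.

```python
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LanguageModel(Protocol):
    def reply(self, transcript: str) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


class Orchestrator:
    """Wire together any three providers that satisfy the interfaces above."""

    def __init__(self, stt: SpeechToText, llm: LanguageModel, tts: TextToSpeech):
        self.stt, self.llm, self.tts = stt, llm, tts

    def handle_turn(self, audio: bytes) -> bytes:
        return self.tts.synthesize(self.llm.reply(self.stt.transcribe(audio)))


# Fake adapters for local testing; real ones would wrap each provider's SDK.
class FakeSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class UppercaseLLM:
    def reply(self, transcript: str) -> str:
        return transcript.upper()


class FakeTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")
```

Swapping providers means writing one new adapter class. The orchestration code stays unchanged.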

Test and refine

Deploy the agent in a controlled environment first. Test with real users to identify areas for improvement.

Common refinements include:

- Adjusting prompts to handle edge cases better

- Fine-tuning interruption handling for more natural conversations

- Optimizing latency by choosing faster models or adjusting timeouts

- Improving context retention for longer conversations
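A silence timeout that decides when the caller has finished speaking is a typical example of a timeout you adjust during refinement. Too short, and the agent interrupts mid-thought; too long, and responses feel sluggish. The sketch below is a hypothetical endpointer driven by per-frame speech/no-speech flags. The 20 ms frame size and 600 ms default are assumptions to tune.

```python
def end_of_turn_frame(is_speech_frames, frame_ms=20, silence_timeout_ms=600):
    """Return the index of the frame where the turn is judged over, or None.

    A turn ends once speech has been heard and is then followed by
    `silence_timeout_ms` of continuous silence.
    """
    needed = max(1, silence_timeout_ms // frame_ms)
    heard_speech = False
    silent_run = 0
    for i, is_speech in enumerate(is_speech_frames):
        if is_speech:
            heard_speech = True
            silent_run = 0
        elif heard_speech:
            silent_run += 1
            if silent_run >= needed:
                return i
    return None
```

Take 10 speech frames followed by silence. Lowering `silence_timeout_ms` from 600 to 200 ends the same turn 20 frames (400 ms) earlier. That is the trade-off to tune against real calls.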

Use conversation data to continuously improve the agent's performance. The best voice agents evolve based on real-world usage patterns.

The future of AI voice agents: more flexibility and smarter workflows

AI Voice Agents are evolving rapidly. As the technology improves, flexibility remains key for companies that want to build custom AI solutions.

Vapi's approach is to orchestrate different AI components—not replace them. Companies can choose their own STT, LLM, and TTS providers, allowing for granular control over performance, cost, and accuracy.

Rather than pushing toward a single speech-to-speech model, this modularity ensures that businesses can fine-tune AI Voice Agents to their exact needs. As the technology continues to advance, we're seeing:

- Better handling of emotional nuance and conversational context

- Reduced latency bringing response times closer to human conversation speeds

- Improved accuracy in challenging audio conditions

- More sophisticated workflow capabilities for complex business logic

The next generation of voice agents will blur the line between automated and human interactions. This enables businesses to scale personalized communication in ways that weren't possible before.

Get started: build your AI voice agent today

Want to start building AI Voice Agents that handle interruptions, maintain context, and work in noisy environments? Here's where to start:

✅ Try AssemblyAI's Streaming Speech-to-Text API → https://www.assemblyai.com/docs/speech-to-text/streaming

✅ Explore Vapi's AI Voice Agent platform → https://vapi.ai/

✅ Watch the full livestream here → https://youtu.be/8bfX79VC4GU

The next generation of AI Voice Agents is here—faster, more flexible, and more human-like than ever. Try our API for free to start building voice agents that deliver real business value.

Frequently asked questions about AI voice agents

What's the difference between an AI voice agent and a chatbot?

AI voice agents communicate through spoken language over phone calls, while chatbots use text in messaging apps or websites.

How much latency is acceptable for AI voice agents?

Total end-to-end latency should be under one second for natural conversations.

Can AI voice agents handle multiple languages?

Yes, but language support depends on the specific Speech-to-Text model used. For real-time voice agents, AssemblyAI's Streaming API has a multilingual model that supports English, Spanish, French, German, Italian, and Portuguese. For analyzing pre-recorded audio after a call, AssemblyAI's asynchronous API supports over 95 languages.

What happens when an AI voice agent doesn't understand something?

Well-designed agents acknowledge their limitations. They ask for clarification, offer related options, or escalate to a human agent rather than failing abruptly.
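The clarify-then-escalate behavior can be written as a small policy. This sketch assumes the agent has some confidence score for its understanding of each utterance, for example from intent classification. The threshold and the clarification limit are illustrative assumptions.

```python
def next_action(confidence, clarifications_asked, threshold=0.6, max_clarifications=1):
    """Decide how to respond to an utterance the agent may not have understood.

    Returns "proceed", "clarify", or "escalate".
    """
    if confidence >= threshold:
        return "proceed"
    if clarifications_asked >= max_clarifications:
        # Repeated misunderstandings: hand off instead of looping.
        return "escalate"
    return "clarify"
```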

How do AI voice agents integrate with existing business systems?

Voice agents connect through APIs and webhooks to query CRMs, update databases, and trigger workflows in real-time.
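The LLM typically emits a structured tool call (a function name plus JSON arguments), and your backend executes it. The dispatcher below is a hypothetical sketch. The tool name, the argument shape, and `lookup_order_status` are made up for illustration and do not reflect any specific platform's webhook format.

```python
import json

# Stand-in data; a real handler would query a CRM or order database.
ORDERS = {"A123": "out for delivery"}


def lookup_order_status(order_id: str) -> dict:
    return {"order_id": order_id, "status": ORDERS.get(order_id, "not found")}


TOOLS = {"lookup_order_status": lookup_order_status}


def dispatch(tool_call_json: str) -> str:
    """Run the requested tool and return its result as JSON for the LLM."""
    call = json.loads(tool_call_json)
    handler = TOOLS.get(call.get("name"))
    if handler is None:
        return json.dumps({"error": f"unknown tool: {call.get('name')}"})
    return json.dumps(handler(**call.get("arguments", {})))
```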
