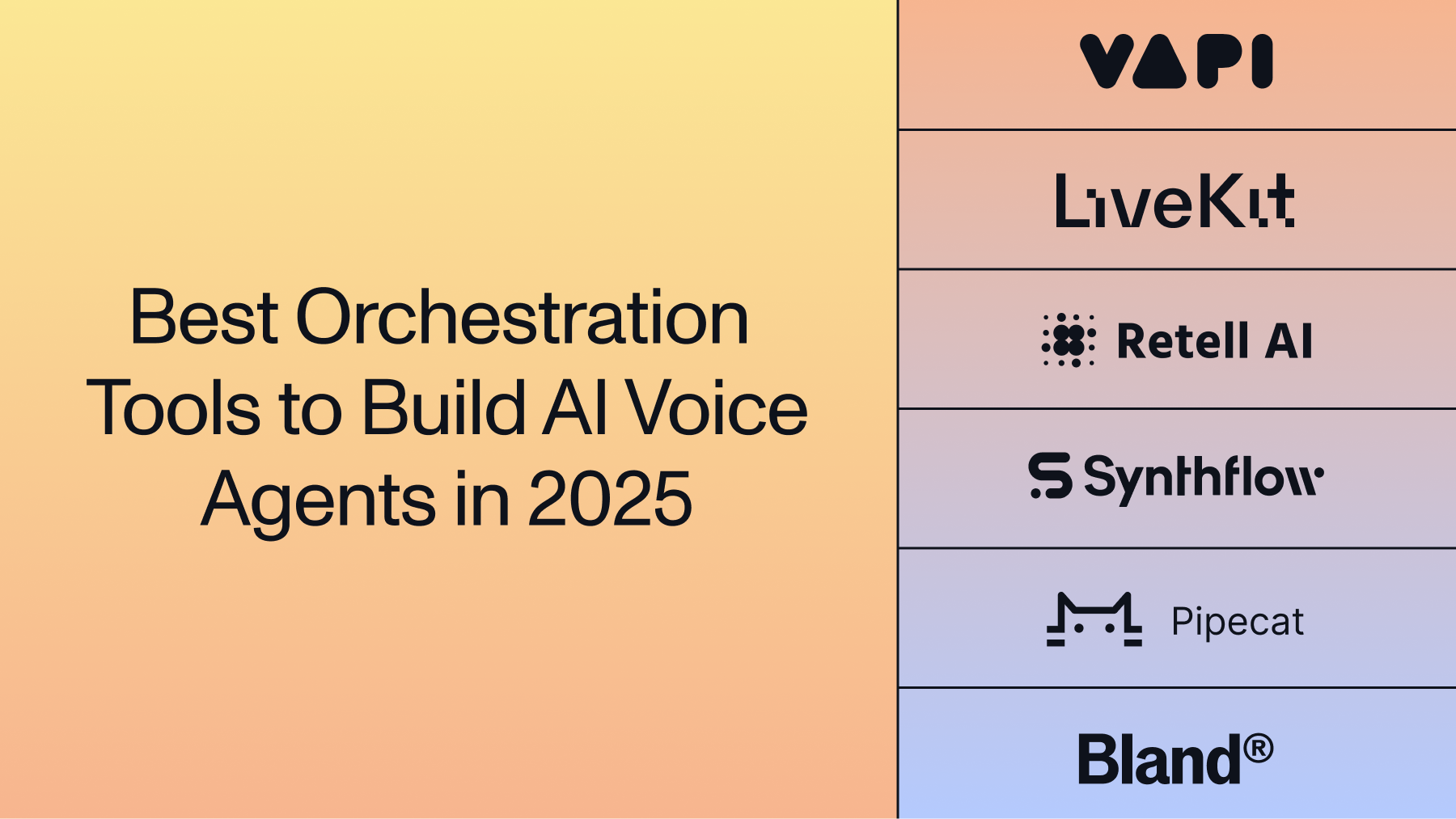

6 best orchestration tools to build AI voice agents in 2026

Build better AI voice agents with the right orchestration tool. Compare platforms, features, integrations, and real-world performance.

AI voice agents turn frustrating IVR trees into actual conversations that get things done, and a recent survey finds that 62% of organizations are now experimenting with them. These systems understand natural speech, maintain context throughout interactions, and respond with voices that sound human, sometimes indistinguishably so.

Behind most great voice agents is an orchestration tool that connects the necessary models: speech-to-text (STT) that captures what customers say, large language models (LLMs) that understand intent, and text-to-speech (TTS) that delivers natural responses. When these pieces work in harmony, customers get the help they need without the friction.

This guide covers what AI voice agents are, how they work technically, and the orchestration tools you need to build them successfully. You'll learn about the business value they deliver, implementation approaches, and how to choose the right platform for your specific use case.

What are AI voice agents

AI voice agents are conversational AI systems that understand and respond to human speech in real time using a stack of AI models. They handle complex, multi-turn conversations and adapt to natural language, unlike rigid IVR systems that force callers through predefined menu paths.

Think of it as the difference between following a strict flowchart and having an actual conversation. While a traditional IVR asks you to "press one for sales," a voice agent lets you say, "I need to check the status of my recent order and also ask about your return policy." The agent understands both intents and can switch context seamlessly.

Voice vs. Text Interface: Voice agents process spoken language, while chatbots handle text. This creates unique challenges around accent recognition, background noise, and interruption handling.

Key Distinction: Voice agents maintain conversational context, handle interruptions, and execute complex tasks while sounding increasingly human.

How AI voice agents work

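AI voice agents run on a three-part pipeline that executes on every conversational turn, fast enough (typically within a fraction of a second) to keep the exchange feeling natural. Coordinating these stages in real time is what separates voice agents from simpler voice-command systems.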

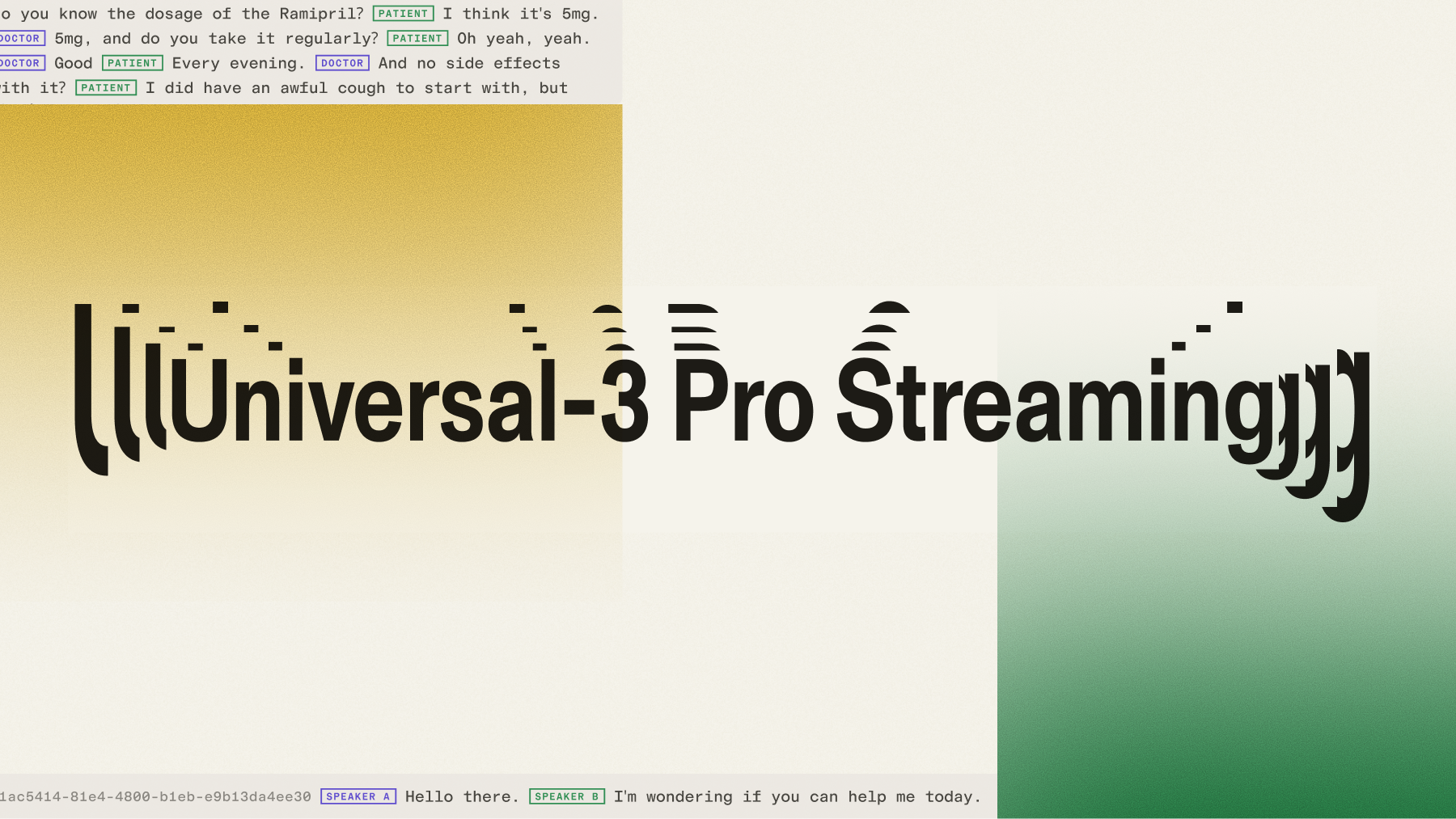

The Voice AI pipeline

Speech-to-text (STT): Transcribes spoken words in real time, which demands both high accuracy and very low latency. The model must also detect when a speaker has finished a thought, a process called endpointing.

Large Language Model (LLM): Acts as the agent's brain, determining intent and generating appropriate responses. It can also call external tools or APIs when needed.

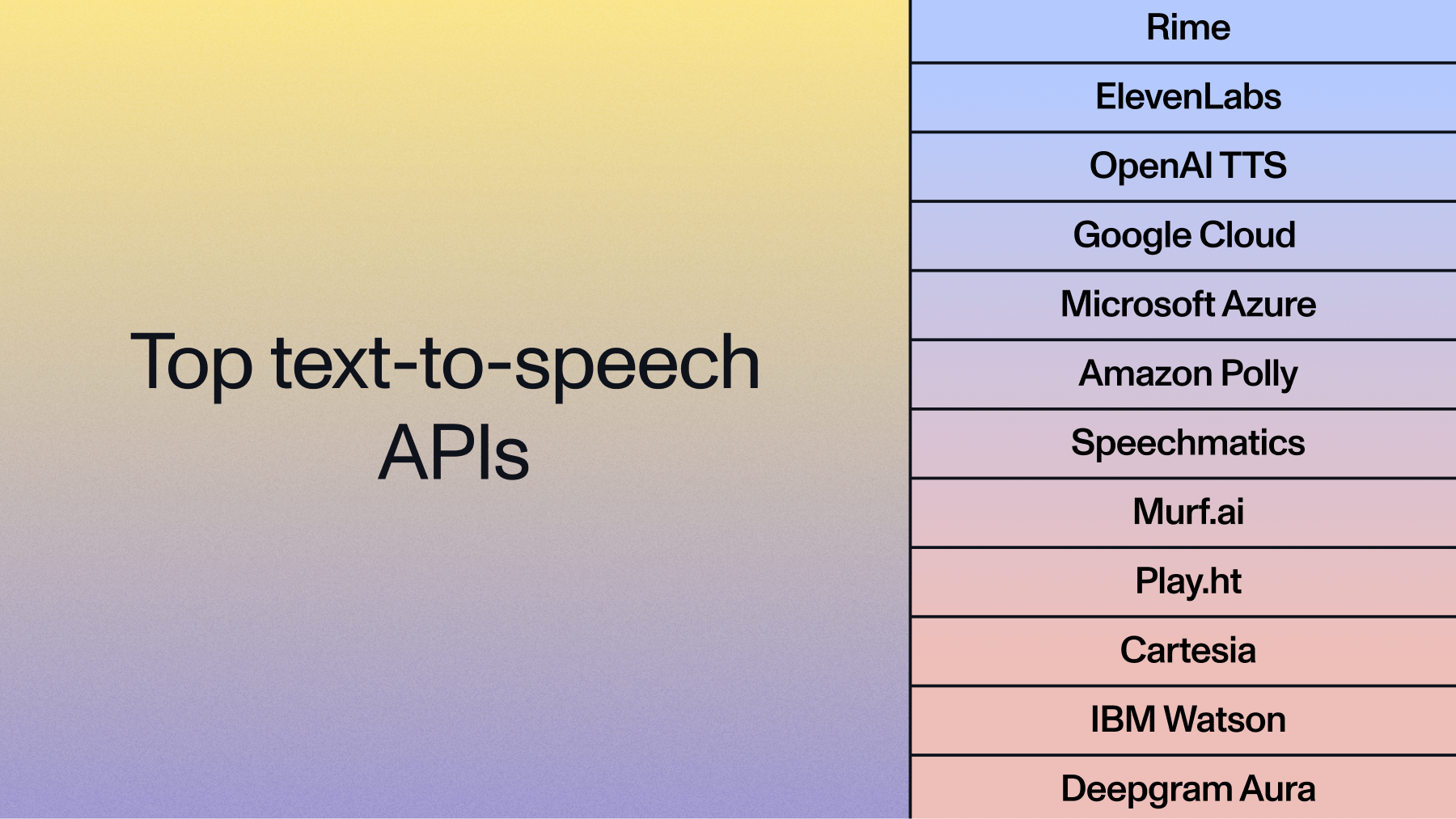

Text-to-speech (TTS): Converts responses into natural-sounding audio. Voice quality and speed are critical for maintaining human-like interactions.
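The three stages can be sketched as a single turn-handling loop. This is a minimal Python sketch, not any particular platform's API: `VoicePipeline`, `handle_turn`, and the `fake_*` providers are hypothetical stand-ins for real STT, LLM, and TTS services. The stages also run strictly in sequence here for clarity, whereas production systems typically stream audio and partial results between stages to cut latency.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical interfaces: each stage is just a callable in this sketch.
SpeechToText = Callable[[bytes], str]                   # audio -> transcript
LanguageModel = Callable[[List[Dict[str, str]]], str]   # chat history -> reply text
TextToSpeech = Callable[[str], bytes]                   # reply text -> audio


@dataclass
class VoicePipeline:
    stt: SpeechToText
    llm: LanguageModel
    tts: TextToSpeech
    # Conversation history, so the LLM sees earlier turns (context management).
    history: List[Dict[str, str]] = field(default_factory=list)

    def handle_turn(self, caller_audio: bytes) -> bytes:
        """Run one conversational turn: caller audio in, agent audio out."""
        transcript = self.stt(caller_audio)                        # 1. speech-to-text
        self.history.append({"role": "user", "content": transcript})
        reply = self.llm(self.history)                             # 2. LLM reasoning
        self.history.append({"role": "assistant", "content": reply})
        return self.tts(reply)                                     # 3. text-to-speech


# Fake providers so the sketch runs without any external service.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the "audio" bytes are already text


def fake_llm(history: List[Dict[str, str]]) -> str:
    return f"You asked: {history[-1]['content']}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")  # pretend the encoded text is audio


pipeline = VoicePipeline(fake_stt, fake_llm, fake_tts)
out = pipeline.handle_turn(b"where is my order")
print(out.decode("utf-8"))  # You asked: where is my order
```

Keeping each stage behind a plain callable is one way an orchestration layer can swap providers without touching the turn logic, and the `history` list is the simplest possible form of context management.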

Additional components for production systems

Beyond the core pipeline, production voice agents require additional capabilities:

- Turn-taking models: Determine when the user has finished speaking and when it's appropriate for the agent to respond

- Interruption handling: Allows users to cut in mid-response without breaking the conversation flow

- Context management: Maintains conversation history across multiple turns and remembers key information

- Tool calling: Connects to external APIs and databases to fetch information or perform actions

- Error recovery: Gracefully handles misunderstandings and guides conversations back on track
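Turn-taking and interruption handling can be illustrated with a simple energy-based rule. This Python sketch is a deliberate simplification under stated assumptions: the `TurnTaker` class, its threshold values, and the per-frame energy input are all hypothetical, and production systems generally rely on trained voice-activity and turn-detection models rather than a fixed energy threshold. It reports `end_of_turn` after a run of quiet frames that follows speech, and `barge_in` when the caller speaks while the agent is still talking.

```python
from dataclasses import dataclass, field


@dataclass
class TurnTaker:
    # Hypothetical defaults, not tuned for real audio.
    speech_threshold: float = 0.1      # frame energy above this counts as speech
    silence_frames_to_end: int = 25    # e.g. 25 frames of 20 ms = 500 ms of silence
    agent_speaking: bool = False       # set True while TTS audio is playing
    _heard_speech: bool = field(default=False, init=False)
    _silent_run: int = field(default=0, init=False)

    def on_frame(self, energy: float) -> str:
        """Classify one audio frame as 'barge_in', 'end_of_turn', or 'none'."""
        if energy > self.speech_threshold:
            self._silent_run = 0
            self._heard_speech = True
            if self.agent_speaking:
                # Caller cut in mid-response: the orchestrator should stop TTS playback.
                self.agent_speaking = False
                return "barge_in"
            return "none"
        if self._heard_speech:
            self._silent_run += 1
            if self._silent_run >= self.silence_frames_to_end:
                # Enough trailing silence: treat the caller's turn as finished.
                self._heard_speech = False
                self._silent_run = 0
                return "end_of_turn"
        return "none"


# Usage: three quiet frames after speech end the caller's turn.
tt = TurnTaker(silence_frames_to_end=3)
events = [tt.on_frame(e) for e in [0.5, 0.6, 0.02, 0.01, 0.03, 0.0]]
print(events)  # ['none', 'none', 'none', 'none', 'end_of_turn', 'none']

# Usage: the caller speaks while the agent is replying.
tt.agent_speaking = True
barge = tt.on_frame(0.4)
print(barge)  # barge_in
```

A fixed silence window is the main trade-off here: too short and the agent cuts off callers who pause mid-thought, too long and every reply feels sluggish, which is why dedicated turn-taking models exist.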

Use cases and applications for AI voice agents

Developers are building AI voice agents across industries for diverse use cases. Each application leverages the core technology differently.

- Customer Service: 24/7 technical support, order management, account services

- Sales Operations: Lead qualification, appointment setting, product recommendations

- Healthcare: Appointment scheduling, medication adherence, pre-visit screening

- Internal Operations: Data collection, field service support, employee services

Customer service and support

- 24/7 Technical Support: Voice agents walk users through troubleshooting steps at any time of day, freeing up human experts for more complex problems

- Order Management: Handle order status checks, modifications, and cancellations without human intervention

- Account Services: Process password resets, billing inquiries, and account updates securely through voice verification
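Order management is a natural fit for tool calling: the LLM decides which action the caller wants, and the orchestration layer executes it. The Python sketch below assumes the LLM emits a tool call as JSON with `name` and `arguments` fields (real providers each define their own format); the in-memory order store and the `get_order_status`, `cancel_order`, and `dispatch_tool_call` functions are all hypothetical.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical in-memory store standing in for a real order-management API.
ORDERS: Dict[str, Dict[str, Any]] = {
    "A100": {"status": "shipped", "cancellable": False},
    "A101": {"status": "processing", "cancellable": True},
}


def get_order_status(order_id: str) -> Dict[str, Any]:
    order = ORDERS.get(order_id)
    if order is None:
        return {"error": f"No order found with id {order_id}"}
    return {"order_id": order_id, "status": order["status"]}


def cancel_order(order_id: str) -> Dict[str, Any]:
    order = ORDERS.get(order_id)
    if order is None:
        return {"error": f"No order found with id {order_id}"}
    if not order["cancellable"]:
        return {"error": "Order can no longer be cancelled"}
    order["status"] = "cancelled"
    order["cancellable"] = False
    return {"order_id": order_id, "status": "cancelled"}


# Registry mapping the tool names the LLM is told about to Python functions.
TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "get_order_status": get_order_status,
    "cancel_order": cancel_order,
}


def dispatch_tool_call(tool_call_json: str) -> str:
    """Execute one LLM-emitted tool call and return its result as JSON."""
    call = json.loads(tool_call_json)
    tool = TOOLS.get(call.get("name"))
    if tool is None:
        # Return an error the LLM can read and recover from, rather than crashing.
        return json.dumps({"error": f"Unknown tool {call.get('name')!r}"})
    return json.dumps(tool(**call.get("arguments", {})))


status_result = dispatch_tool_call(
    '{"name": "get_order_status", "arguments": {"order_id": "A100"}}'
)
cancel_result = dispatch_tool_call(
    '{"name": "cancel_order", "arguments": {"order_id": "A101"}}'
)
print(status_result)  # {"order_id": "A100", "status": "shipped"}
print(cancel_result)  # {"order_id": "A101", "status": "cancelled"}
```

The JSON result would then be appended to the conversation so the LLM can phrase a spoken answer, and returning errors as data rather than raising keeps the agent able to recover when a caller asks for something the tools cannot do.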

Sales and revenue operations

- Inbound Lead Qualification: Agents can answer initial calls, ask qualifying questions, and route high-intent leads directly to sales teams

- Outbound Appointment Setting: Companies use voice agents to call leads, find convenient times, and book appointments directly on calendars

- Product Recommendations: Guide customers through product selection based on their needs and preferences

Healthcare coordination

- Appointment Scheduling: Medical practices use voice agents to manage booking, rescheduling, and appointment reminders

- Medication Adherence: Automated check-in calls help confirm that patients are taking their prescribed medications

- Pre-visit Screening: Collect patient symptoms and medical history before appointments

Internal operations

- Data Collection and Surveys: Voice agents conduct customer satisfaction surveys or gather feedback conversationally

- Field Service Support: Technicians use voice agents to access manuals, log work, and order parts hands-free

- Employee Services: Handle HR inquiries, time-off requests, and benefits questions for internal teams

The business value of AI voice agents

AI voice agents can deliver significant, measurable business impact, and companies report improvements across several key metrics.

Key Benefits:

- Cost Reduction: Scale operations without proportional staffing increases

- Customer Satisfaction: 24/7 availability and natural conversation replace frustrating menu trees

- Revenue Growth: Systematic lead follow-up increases conversion rates

- Quality Assurance: Consistent service delivery and automatic documentation

Operational cost reduction

McKinsey estimates that applying generative AI to customer care could increase productivity by an amount worth 30 to 45 percent of current function costs. Voice agents contribute to this by handling high volumes of routine calls without requiring proportional increases in staffing. This scalability transforms the economics of customer service, particularly for businesses with seasonal peaks or rapid growth: rather than hiring and training temporary staff, companies can scale voice agent capacity up or down as demand changes.

Enhanced customer experience

Voice agents generally compare favorably with traditional IVR systems on customer satisfaction, and one 2025 report found that 69% of companies saw improved customer service after implementing conversation intelligence. Eliminating frustrating menu trees, offering immediate 24/7 availability, and handling complex requests in natural language all improve how customers perceive the service. Voice agents also reduce average handling time by gathering information efficiently and routing complex issues to the right human agent when needed.

Revenue acceleration

In sales contexts, voice agents help ensure no lead goes uncontacted. They qualify prospects outside business hours, schedule demos at optimal times, and handle initial product education. This systematic follow-up can increase conversion rates while freeing human sales teams to focus on high-value activities like closing deals and building relationships. The efficiency gains can be substantial; one case study found that implementing generative AI reduced the time agents spent handling an issue by 9 percent.