How to build an AI medical scribe with AssemblyAI

Build a production-ready AI medical scribe with Python. Learn speaker identification, PII redaction, SOAP note generation, and automatic data deletion.

AI medical scribes are transforming healthcare documentation, but building one that works in clinical settings requires more than basic transcription. You need accurate medical terminology capture, reliable speaker identification, and privacy safeguards that protect patient data.

This tutorial walks you through building a functional AI medical scribe in Python. You'll start with basic transcription and progressively add speaker identification, PII redaction, SOAP note generation, and automatic data deletion. By the end, you'll have a working prototype that handles real-world clinical scenarios.

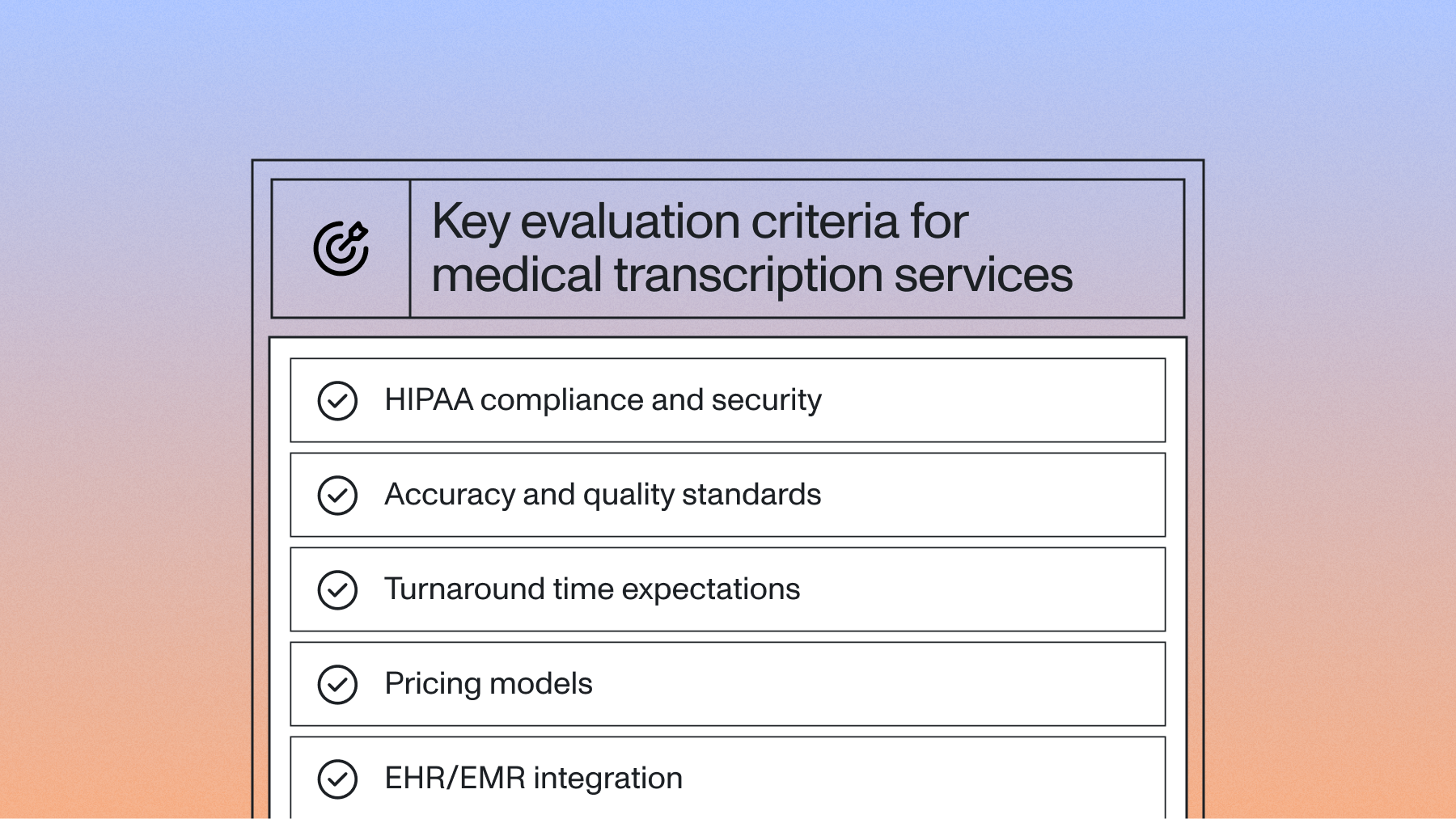

What makes a production-ready medical scribe?

Before diving into code, understand what separates a proof-of-concept from a deployable healthcare solution.

Essential features checklist

Modern Speech AI APIs like AssemblyAI handle most of this complexity. Here's how.

AssemblyAI holds SOC 2 Type 2 and ISO 27001:2022 certifications and offers a Business Associate Agreement (BAA) for healthcare applications, which gives your medical scribe a compliance foundation from day one.

Step 1: Basic transcription setup

Start with a simple transcription request. This establishes the foundation before layering on medical-specific features.

import os
import time

import dotenv
import requests

dotenv.load_dotenv()

base_url = "https://api.assemblyai.com"
headers = {"authorization": os.getenv("AAI_API_KEY")}
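The setup above stops short of sending a request. The submit-and-poll flow could be sketched as follows, assuming the `status` values (`completed`, `error`) and the `error` field that the polling code later in this tutorial relies on. The helper names `build_payload` and `wait_for_transcript` are hypothetical, and the HTTP call is passed in as a function so the polling logic can be exercised without network access.

```python
import time
from typing import Callable


def build_payload(audio_url: str, **options) -> dict:
    """Return the JSON body for a transcription request (hypothetical helper)."""
    if not audio_url.startswith(("http://", "https://")):
        raise ValueError("audio_url must be an http(s) URL")
    return {"audio_url": audio_url, **options}


def wait_for_transcript(fetch: Callable[[], dict], interval: float = 3.0,
                        max_attempts: int = 200) -> dict:
    """Call fetch() until the transcript completes, fails, or attempts run out."""
    for _ in range(max_attempts):
        transcript = fetch()
        status = transcript.get("status")
        if status == "completed":
            return transcript
        if status == "error":
            # Assumes failed transcripts carry an "error" message field
            raise RuntimeError(f"Transcription failed: {transcript.get('error')}")
        time.sleep(interval)
    raise TimeoutError("Transcript was not ready after max_attempts polls")
```

With `requests`, `fetch` could be something like `lambda: requests.get(polling_endpoint, headers=headers).json()`, where `polling_endpoint` is the transcript URL built later in this tutorial. Capping the number of attempts keeps a stuck job from blocking the script indefinitely.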

Step 2: Adding speaker identification

Medical documentation requires knowing who said what. Was it the doctor prescribing medication or the patient requesting it? Speaker diarization automatically separates different speakers in the conversation.

AssemblyAI's speaker diarization identifies when different people are speaking and labels them as Speaker A, Speaker B, and so on. To go a step further, AssemblyAI's Speaker Identification feature can match those labels to specific names or roles. Here, we'll map the speaker labels to the roles "Doctor" and "Patient".

data = {
    "audio_url": "https://storage.googleapis.com/aai-web-samples/doctor-patient-convo.mp4",
    "speaker_labels": True,
    "speech_understanding": {
        "request": {
            "speaker_identification": {
                "speaker_type": "role",
                "known_values": ["Doctor", "Patient"]
            }
            # (the request body is completed in Step 3)

The speaker diarization works best when each speaker talks for at least 30 seconds uninterrupted, though the model handles real-world conversations with cross-talk and short phrases.
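Once the transcript is ready, each utterance carries a `speaker` and a `text` field, as the polling code later in this tutorial shows. A small sketch of turning those utterances into a readable dialogue follows. It assumes the `speaker` field holds the role name when role identification is enabled; `format_dialogue` is a hypothetical helper and the sample data is invented for illustration.

```python
def format_dialogue(utterances: list[dict]) -> str:
    """Render utterances as 'Speaker: text' lines, merging consecutive
    utterances from the same speaker into a single line."""
    turns: list[tuple[str, str]] = []
    for utterance in utterances:
        speaker, text = utterance["speaker"], utterance["text"].strip()
        if turns and turns[-1][0] == speaker:
            # Same speaker as the previous turn: append to that turn
            turns[-1] = (speaker, f"{turns[-1][1]} {text}")
        else:
            turns.append((speaker, text))
    return "\n".join(f"{speaker}: {text}" for speaker, text in turns)


# Invented sample shaped like transcript["utterances"]
sample = [
    {"speaker": "Doctor", "text": "What brings you in today?"},
    {"speaker": "Patient", "text": "My knee has been hurting."},
    {"speaker": "Patient", "text": "Mostly when I climb stairs."},
]
print(format_dialogue(sample))
```

Merging consecutive turns keeps short interjections from fragmenting the dialogue, which can make the text easier to pass to a downstream summarization step.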

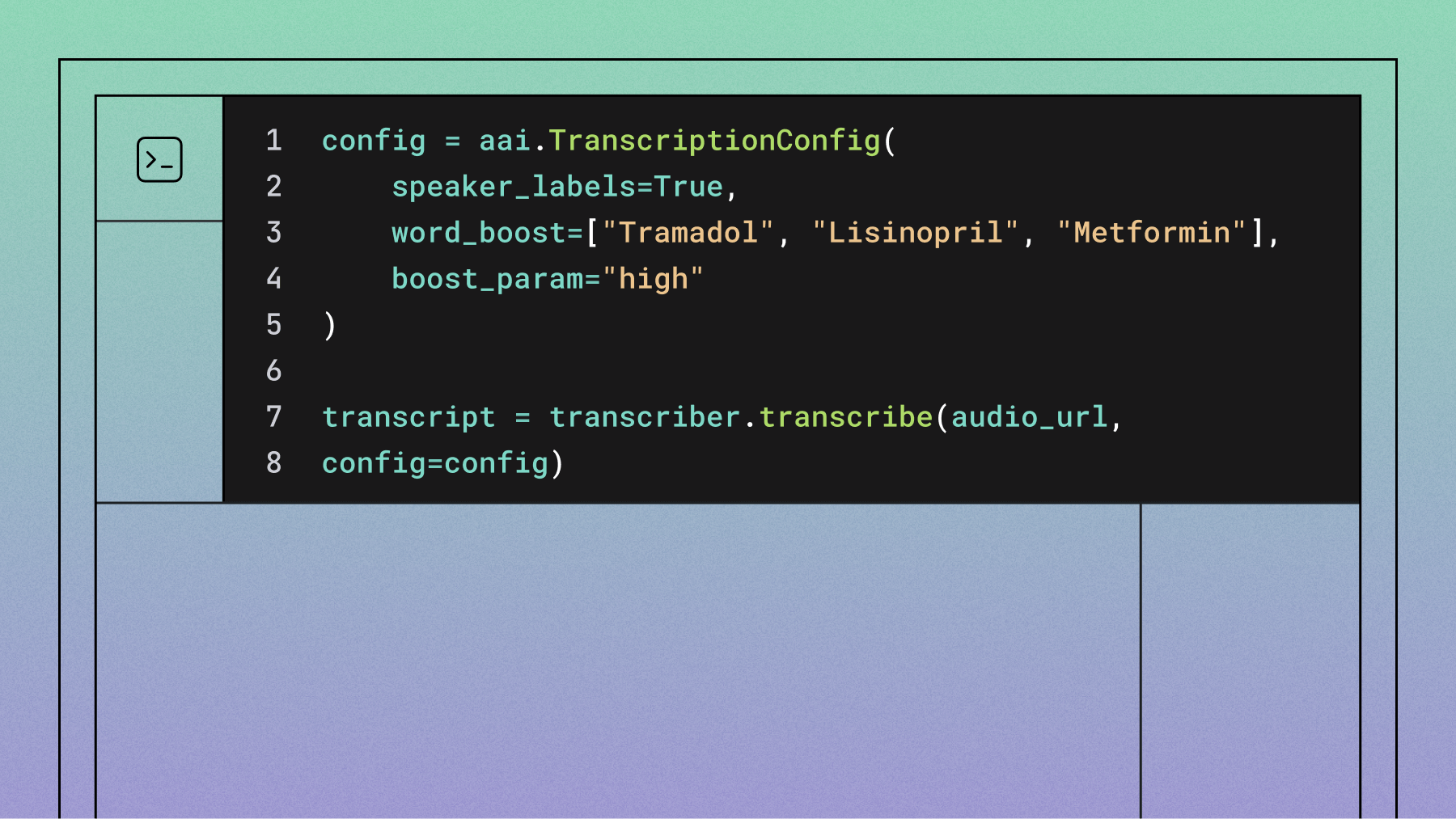

Step 3: Improving medical terminology accuracy with word boost and protecting patient privacy with PII redaction

A common problem: doctors prescribe "Tramadol," but transcription shows "tramadol" in lowercase. Small formatting issues like this matter in medical records where precision is critical.

AssemblyAI's word boost feature lets you bias the model toward specific terms and their proper formatting. You provide a list of medical terms, drug names, or procedures. In the request below, that list is passed as the keyterms_prompt parameter.

Medical conversations also contain protected health information (PHI): patient names, birthdates, social security numbers, addresses. If this data appears in your transcripts and you store them on third-party servers, you're potentially violating privacy regulations.

AssemblyAI's PII redaction automatically identifies and removes personally identifiable information from transcripts. You can configure what gets redacted and how.

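        }
    },
    "redact_pii": True,
    "redact_pii_policies": ["person_name", "organization", "occupation"],
    "redact_pii_sub": "hash",
    "keyterms_prompt": ["Tramadol"]
}

# Submit the transcription request
response = requests.post(base_url + "/v2/transcript", headers=headers, json=data)
response.raise_for_status()
transcript_json = response.json()
transcript_id = transcript_json["id"]
polling_endpoint = f"{base_url}/v2/transcript/{transcript_id}"

# Poll until the transcript is ready, then print each utterance with its speaker
while True:
    transcript = requests.get(polling_endpoint, headers=headers).json()
    if transcript["status"] == "completed":
        for utterance in transcript["utterances"]:
            print(f"{utterance['speaker']}: {utterance['text']}")
        break
    elif transcript["status"] == "error":
        # Stop instead of polling forever when transcription fails
        raise RuntimeError(f"Transcription failed: {transcript.get('error')}")
    else:
        time.sleep(3)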
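The redaction policies in this request cover names, organizations, and occupations, so other identifier-shaped strings could still reach the transcript. As a second line of defense, a local check can flag such strings before the text is stored or forwarded. The sketch below is plain Python, not an AssemblyAI feature; the patterns and the `find_residual_identifiers` name are illustrative assumptions and not a complete PHI detector.

```python
import re

# Illustrative patterns only; a real deployment needs a reviewed list.
RESIDUAL_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def find_residual_identifiers(text: str) -> dict[str, list[str]]:
    """Return pattern name -> matches for text that still looks like an identifier."""
    hits: dict[str, list[str]] = {}
    for name, pattern in RESIDUAL_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            hits[name] = matches
    return hits
```

A non-empty result could be used to hold the transcript for manual review, or to prompt adding more policies to the redaction request.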

Step 4: Generating SOAP notes with LLM Gateway

Raw transcripts are useful, but doctors need structured documentation. SOAP notes (Subjective, Objective, Assessment, Plan) are the clinical standard for organizing patient encounters.

This is where large language models come in. AssemblyAI's LLM Gateway provides a unified interface to various LLMs. Call it with your existing AssemblyAI API key.

prompt = "Create a SOAP note summary of the consultation."

llm_gateway_data = {
    "model": "gemini-3-pro-preview",
    "messages": [
        {"role": "user", "content": f"{prompt}\n\nTranscript: {transcript['text']}"}
    ]
}

llm_gateway_response = requests.post(
    "https://llm-gateway.assemblyai.com/v1/chat/completions",
    headers=headers,
    json=llm_gateway_data
)

print(llm_gateway_response.json()["choices"][0]["message"]["content"])

The LLM processes the conversation and outputs structured clinical notes. The doctor gets documentation ready for the EHR without manual transcription.
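LLM output is free-form text, so it can help to confirm that all four SOAP sections are present before the note goes any further. The sketch below assumes the model writes each section name at the start of a line, optionally preceded by markdown markers or numbering. `missing_soap_sections` is a hypothetical helper, not part of LLM Gateway.

```python
import re

SOAP_SECTIONS = ("Subjective", "Objective", "Assessment", "Plan")


def missing_soap_sections(note: str) -> list[str]:
    """Return the SOAP section names that never appear at the start of a line."""
    missing = []
    for section in SOAP_SECTIONS:
        # Allow whitespace, '#', '*', '-', digits, and '.' before the name,
        # which covers headings, bold markers, and numbered lists.
        pattern = rf"^[\s#*\-\d.]*{section}\b"
        if not re.search(pattern, note, flags=re.IGNORECASE | re.MULTILINE):
            missing.append(section)
    return missing
```

If the returned list is not empty, one option is to retry the request with a more explicit prompt that names the four sections.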

Step 5: Automatic data deletion

The most reliable way to protect patient data is to ensure it doesn't persist on third-party infrastructure. After retrieving your transcript and generating SOAP notes, delete the transcript from AssemblyAI's servers.

# Delete the transcript from AssemblyAI's servers
delete_request = requests.delete(polling_endpoint, headers=headers).json()

# Delete the LLM Gateway response
delete_llm_gateway_response = requests.delete(
    f"https://llm-gateway.assemblyai.com/v1/chat/completions/{llm_gateway_response.json()['request_id']}",
    headers=headers
)

# Fetch the transcript again to confirm the deletion
transcript = requests.get(polling_endpoint, headers=headers).json()
print(transcript)
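In the script above, the delete calls only run if every earlier step succeeds. One way to make deletion unconditional is a `try`/`finally` wrapper, sketched here with a hypothetical `process_then_delete` helper that takes the processing step and the delete step as functions.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def process_then_delete(process: Callable[[], T], delete: Callable[[], object]) -> T:
    """Run process() and always call delete() afterwards, even if process() raises."""
    try:
        return process()
    finally:
        # Runs on both success and failure of process()
        delete()
```

Here `process` could wrap the SOAP note request and `delete` could wrap `requests.delete(polling_endpoint, headers=headers)`. Note that if `delete` itself raises, that exception replaces any error raised by `process`, so the delete step may need its own error handling.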

Data retention for LLM Gateway

When using LLM Gateway with an executed Business Associate Agreement (BAA) and Anthropic or Google inference models, AssemblyAI provides zero data retention for inputs and outputs. The LLM Gateway processes requests ephemerally. Your transcript text and the generated SOAP notes are not stored beyond the immediate API call. Only minimal metadata is retained for logging and billing purposes.

Time-to-live for transcripts

For additional protection of transcript data, AssemblyAI offers time-to-live (TTL) settings. As of November 26, 2024, customers with a signed Business Associate Agreement (BAA) automatically have a 3-day TTL applied to all transcripts. Transcripts are deleted automatically after this period, even if you forget to send the delete request manually. This TTL is subject to change.

If you're processing Protected Health Information (PHI) and require a BAA, reach out to sales@assemblyai.com.

Putting it all together

Here's the complete implementation with all features enabled:

import os
import time

import dotenv
import requests

dotenv.load_dotenv()

base_url = "https://api.assemblyai.com"
headers = {"authorization": os.getenv("AAI_API_KEY")}

# Transcription request: speaker roles, PII redaction, and key terms
data = {
    "audio_url": "https://storage.googleapis.com/aai-web-samples/doctor-patient-convo.mp4",
    "speaker_labels": True,
    "speech_understanding": {
        "request": {
            "speaker_identification": {
                "speaker_type": "role",
                "known_values": ["Doctor", "Patient"]
            }
        }
    },
    "redact_pii": True,
    "redact_pii_policies": ["person_name", "organization", "occupation"],
    "redact_pii_sub": "hash",
    "keyterms_prompt": ["Tramadol"]
}

# Submit the transcription request
response = requests.post(base_url + "/v2/transcript", headers=headers, json=data)
response.raise_for_status()
transcript_json = response.json()
transcript_id = transcript_json["id"]
polling_endpoint = f"{base_url}/v2/transcript/{transcript_id}"

# Poll until the transcript is ready, then print each utterance with its speaker
while True:
    transcript = requests.get(polling_endpoint, headers=headers).json()
    if transcript["status"] == "completed":
        for utterance in transcript["utterances"]:
            print(f"{utterance['speaker']}: {utterance['text']}")
        break
    elif transcript["status"] == "error":
        # Stop instead of polling forever when transcription fails
        raise RuntimeError(f"Transcription failed: {transcript.get('error')}")
    else:
        time.sleep(3)

# Generate the SOAP note with LLM Gateway
prompt = "Create a SOAP note summary of the consultation."

llm_gateway_data = {
    "model": "gemini-3-pro-preview",
    "messages": [
        {"role": "user", "content": f"{prompt}\n\nTranscript: {transcript['text']}"}
    ]
}

llm_gateway_response = requests.post(
    "https://llm-gateway.assemblyai.com/v1/chat/completions",
    headers=headers,
    json=llm_gateway_data
)

print(llm_gateway_response.json()["choices"][0]["message"]["content"])

# Delete the transcript and the LLM Gateway response
delete_request = requests.delete(polling_endpoint, headers=headers).json()

delete_llm_gateway_response = requests.delete(
    f"https://llm-gateway.assemblyai.com/v1/chat/completions/{llm_gateway_response.json()['request_id']}",
    headers=headers
)

# Fetch the transcript again to confirm the deletion
transcript = requests.get(polling_endpoint, headers=headers).json()
print(transcript)

Why this approach works for medical AI

Building a medical scribe requires more than transcription accuracy. It's about creating a system that fits into clinical workflows while respecting patient privacy.

This implementation handles the real-world challenges:

- Medical terminology accuracy through word boost

- Clear speaker attribution with speaker diarization

- Privacy protection via PII redaction and automatic deletion

- Structured output through LLM-generated SOAP notes

- Data minimization by deleting transcripts and LLM responses from third-party servers after processing

The result: a functional prototype you can test with clinical audio. From here, add features like real-time transcription for live consultations, integration with EHR systems, or custom SOAP note templates for different specialties.


Next steps

Want to build on this foundation? Check out these resources:

- AssemblyAI's Speech-to-Text documentation

- PII redaction policies and configuration

- LLM Gateway for medical use cases

If you're building a healthcare AI product and need help with compliance, accuracy benchmarks, or production deployment, the AssemblyAI team has experience with medical AI companies and can help navigate technical and regulatory challenges.
