INTRODUCING UNIVERSAL-3 Pro

The first of its kind promptable

Speech Language Model

Introducing Universal-3 Pro, a first of its kind promptable speech language model. We’re so confident you’ll be blown away by its performance that we're giving it to you for free for all of February.

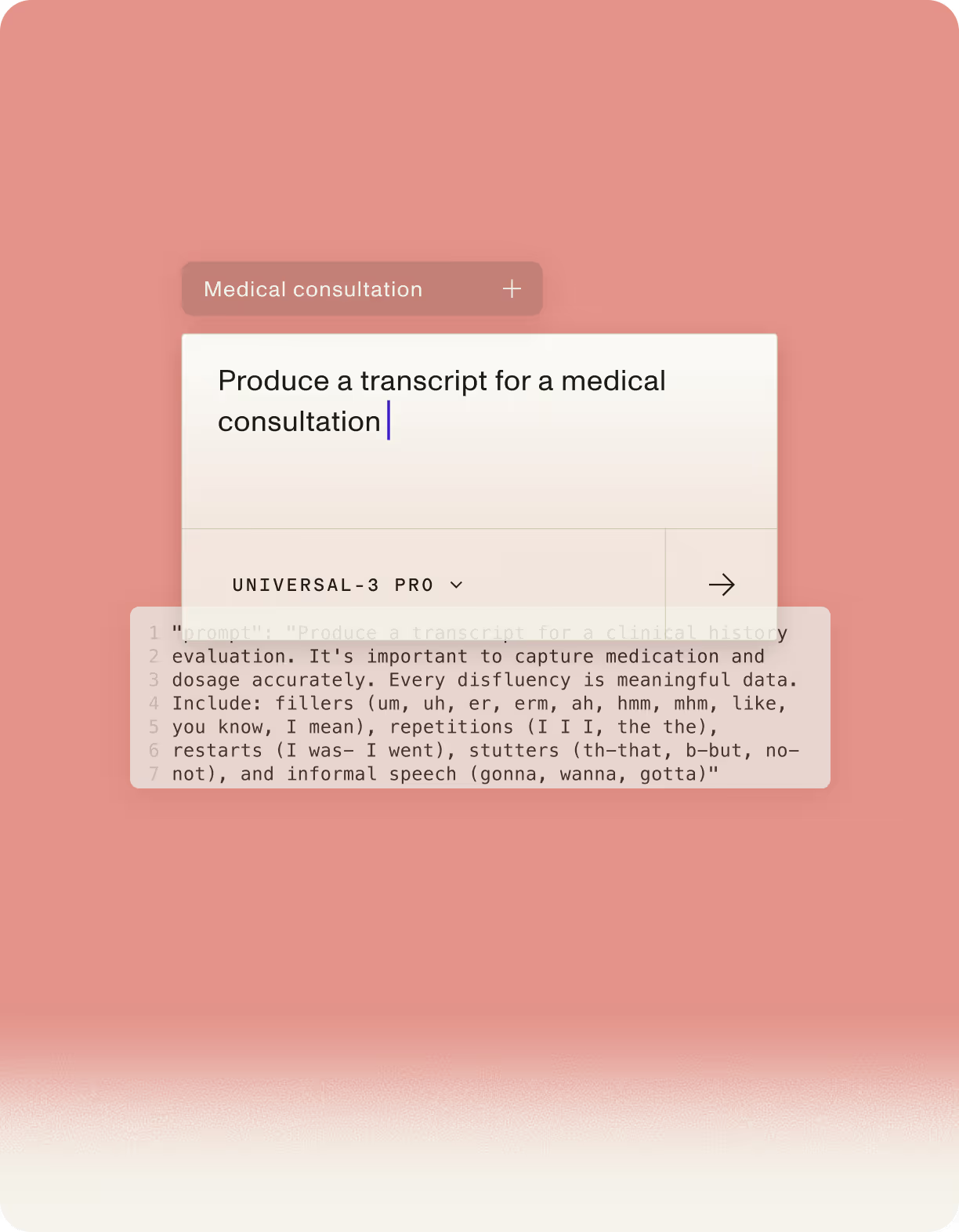

Full control with prompting

Give the model context about names, terminology, topics, and format before processing, and it delivers transcriptions that understand your specific content.

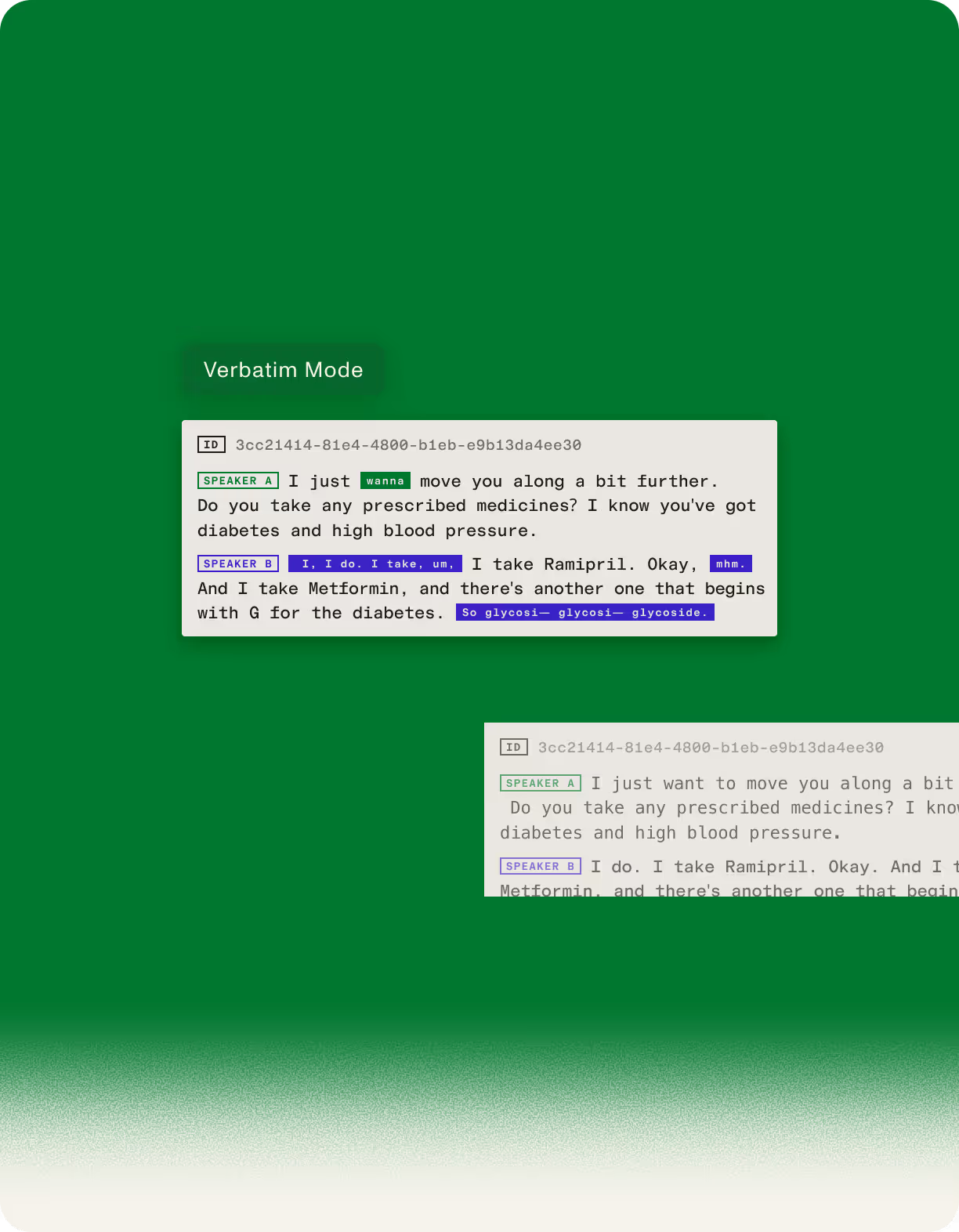

"prompt": "Produce a transcript for a clinical history evaluation. It's important to capture medication and dosage accurately. Every disfluency is meaningful data. Include: fillers (um, uh, er, erm, ah, hmm, mhm, like, you know, I mean), repetitions (I I I, the the), restarts (I was- I went), stutters (th-that, b-but, no-not), and informal speech (gonna, wanna, gotta)""I just want to move you along a bit further. Do you take any prescribed medicines? I know you've got diabetes and high blood pressure. I do. I take Ramipril. Okay. And I take Metformin, and there's another one that begins with G for the diabetes. Glicoside."

"I just wanna move you along a bit further. Do you take any prescribed medicines? I know you've got diabetes and high blood pressure. I, I do. I take, um, I take Ramipril. Okay, mhm. And I take Metformin, and there's another one that begins with G for the diabetes. So glycosi — glycosi— glycoside."

"prompt": "Produce a transcript suitable for conversational analysis. Every disfluency is meaningful data. Include: Tag sounds: [beep]""Your call has been forwarded to an automatic voice message system. At the tone, please record your message. When you have finished recording, you may hang up or press 1 for more options."

"Your call has been forwarded to an automatic voice message system. At the tone, please record your message. When you have finished recording, you may hang up or press 1 for more options. [beep]"

"prompt": "Produce a transcript suitable for conversational analysis. Every disfluency is meaningful data. Include: fillers (um, uh, er, ah, hmm, mhm, like, you know, I mean), repetitions (I I, the the), restarts (I was- I went), stutters (th-that, b-but, no-not), and informal speech (gonna, wanna, gotta)"Do you and Quentin still socialize when you come to Los Angeles, or is it like he's so used to having you here? No, no, no, we're friends. What do you do with him?

Do you and Quentin still socialize, uh, when you come to Los Angeles, or is it like he's so used to having you here? No, no, no, we, we, we're friends. What do you do with him?

"keyterms_prompt": ["Kelly Byrne-Donoghue"]"Hi, this is Kelly Byrne Donahue"

"Hi, this is Kelly Byrne-Donoghue"

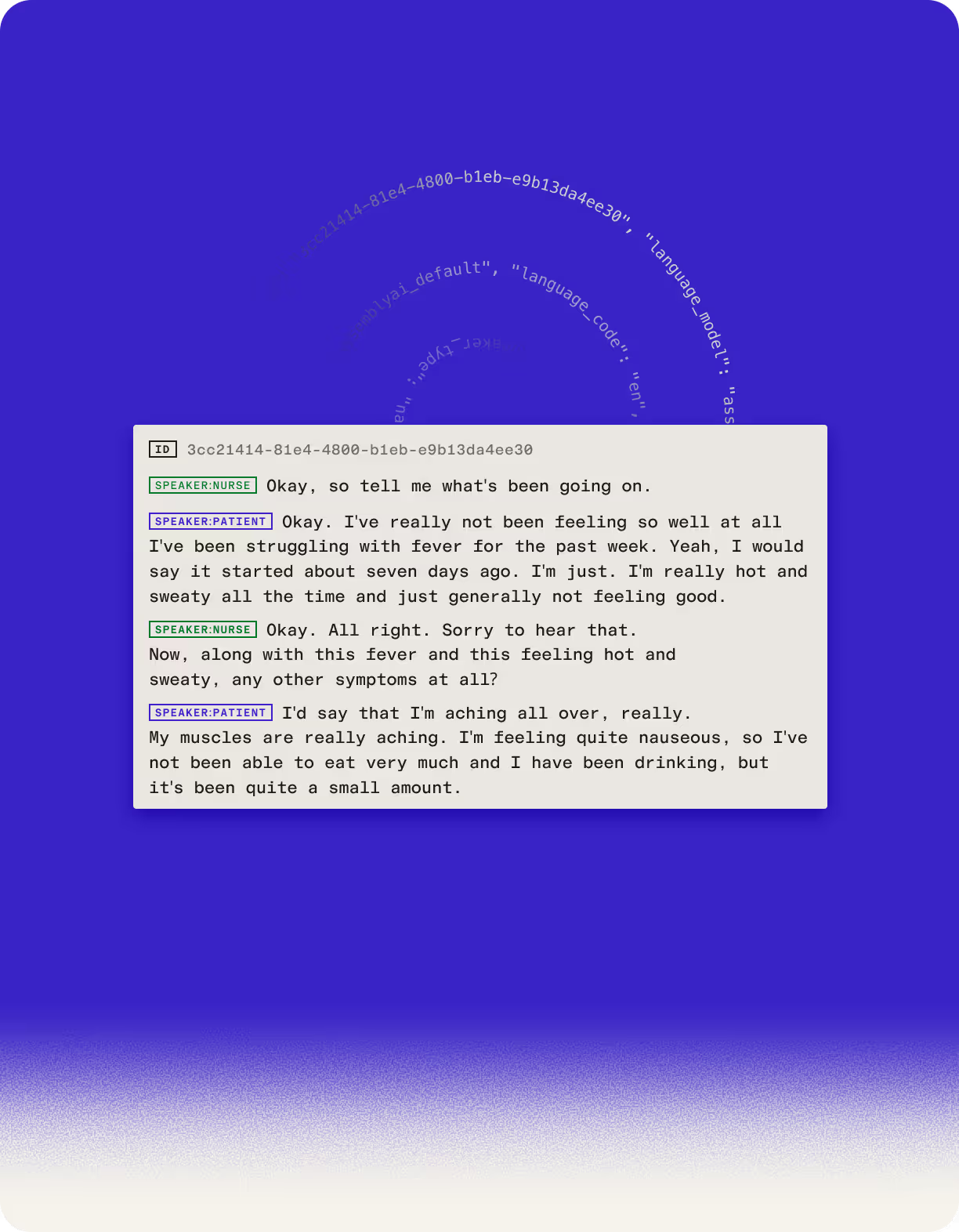

"prompt": "Produce a transcript with every disfluency data. Additionally, label speakers with their respective roles. 1. Place [Speaker:role] at the start of each speaker turn. Example format: [Speaker:NURSE] Hello there. How can I help you today? [Speaker:PATIENT] I'm feeling unwell. I have a headache."}Speaker A: 5Mg. And do you take it regularly?

Speaker B: Oh yeah, yeah.

Speaker A: Good.

Speaker B: Every evening.

Speaker A: And no side effects with it?

Speaker [Nurse]: 5Mg. And do you take it regularly?

Speaker [Patient]: Oh yeah, yeah.

Speaker [Nurse]: Good.

Speaker [Patient]: Every evening.

Speaker [Nurse]: And no side effects with it?

"prompt": Preserve natural code-switching between English and Spanish. Retain spokenlanguage as-is (correct "I was hablando con mi manager").Would definitely think I spoke Spanish if you heard me speak Spanish. But I still make mistakes. Soy wines. Paltro Soy. La fundadora de goop. Thank you. Thank you for doing that.

You would definitely think I spoke Spanish if you heard me speak Spanish, but I still make mistakes. Soy Gwyneth Paltrow, soy la fundadora de Goop. Thank you. Thank you for doing that.

A powerful new way to build with voice

Get transcripts that capture what matters for your application. Provide context to preserve disfluencies, audio events, and speech patterns that standard transcription misses.

Get domain-specific accuracy without custom models

Describe your audio in plain language and get specialized outputs across medical, legal, customer intelligence, and business applications, all from one model.

- Get the details right the first time: "This is a diabetes management conversation" gives you pharmaceutical-grade accuracy right away

- Improve accuracy automatically: Include 1,000 domain terms and see up to 45% fewer errors on specialized vocabulary

- Handle production reality: Describe accent patterns, audio quality, or background noise and the model adapts

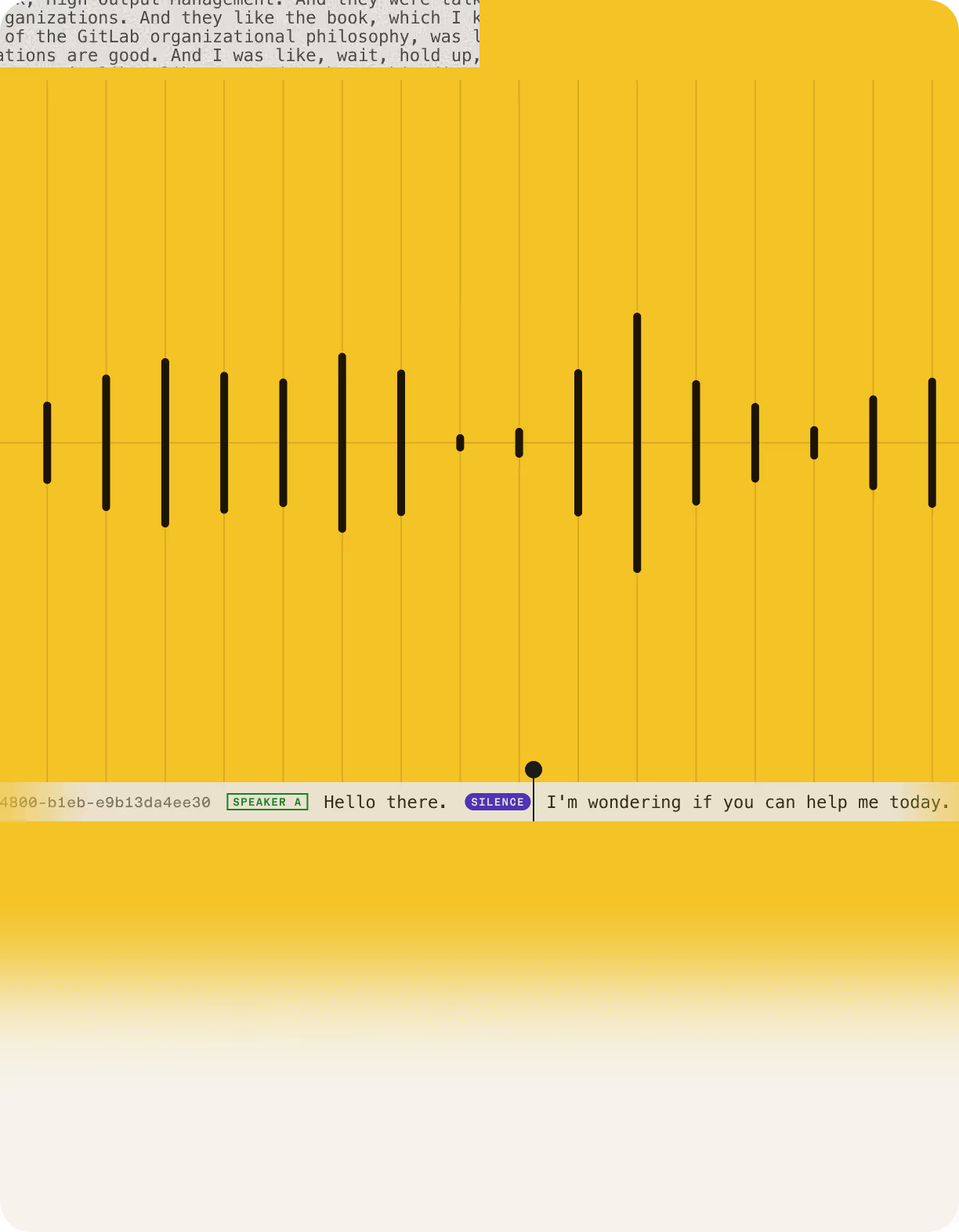

Capture the nuance, not just the transcript

Tag meaningful audio events and filter system artifacts with simple instructions so you can capture the acoustic information that matters for your use case.

- Maximize analysis quality: Tag [beep] and [hold music] so your sentiment analysis extracts insights from actual conversations

- Capture context that matters: Include [laughter], [silence], or custom domain events that affect how conversations are interpreted

- Support multiple use cases: Toggle between verbatim legal records and clean summaries through prompting to serve different customer needs

Label speakers by role, not just by voice

Track every speaker turn with role-based identification and attribute even the shortest interjections to get the full picture.

- Build role-aware products from the start: Understand your medical scribes and support intelligence conversation dynamics from day one

- Capture the real view of a conversation: Handle frequent interjections and single-word responses, capturing complete dialogue the way it actually happened

- Eliminate post-processing logic: Deliver value immediately by including speaker roles directly in transcripts through prompting

Switch between verbatim and clean transcription

Capture legally-defensible records and readable summaries simultaneously without building post-processing logic

- Ship compliant products faster: Verbatim mode preserves fillers, repetitions, false starts, and stutters for transcript you can trust

- Deliver better user experiences: Clean mode removes conversational patterns for polished, readable output—meeting notes and summaries your users actually want to read

- Context determines format: Legal proceedings need verbatim hesitation, business summaries need clarity. Deliver both from one model

Leading the industry with promptable Voice AI

Universal-3 Pro delivers the highest accuracy on real-world audio at 35-50% lower cost than competing solutions.

Metric | AssemblyAI Universal-3 Pro | Elevenlabs Scribe V2 | OpenAI GPT-4o-Transcribe | Speechmatics Enhanced | Microsoft Batch Transcription | Amazon Transcribe | Deepgram Nova-3 |

|---|---|---|---|---|---|---|---|

Word accuracy rate | 94.07% | 93.48% | 93.13% | 92.79% | 92.40% | 92.40% | 92.10 |

Optimized for real world conversations

Tested on diverse data sets, Universal-3 Pro delivers low missed entity rates on real world audio.

Missed entity rate by entity type (Lower is better) | AssemblyAI Universal-3 Pro | ElevenLabs Scribe V2 | OpenAI GPT-4o-Transcribe | Speechmatics Enhanced | Microsoft Batch Transcription | Amazon Transcribe | Deepgram Nova-3 |

|---|---|---|---|---|---|---|---|

Date and time | 7.50% | 11.941% | 12.29% | 17.33% | 10.48% | 20.76% | 18.69% |

Locations | 8.26% | 13.58% | 12.15% | 19.03% | 15.91% | 10.49% | 13.94% |

Medical Terms | 13.61% | 11.39% | 16.50% | 23.87% | 24.93% | 13.94% | 16.95% |

Built on Voice AI infrastructure that scales with you

Universal-3 Pro is part of AssemblyAI's complete Voice AI platform, featuring everything you need to build, deploy, and scale Voice Applications

Predictable usage-based pricing

Pay only for what you use with transparent per-second billing. No minimum commitments, annual contracts, or surprise infrastructure costs.

Complete Voice AI

Platform

Access constantly improving models plus the complete toolchain for every voice use case, from transcription to speech-to-speech, all through our Voice AI Cloud.

Proven reliability and security

Deploy with confidence on infrastructure that processes millions of hours daily. Built for production scale with 99.9% uptime and SOC 2 compliance.

More on Universal-3 Pro

What's next

We’ll be releasing new updates and improvements to Universal-3 Pro over the coming weeks, including more languages, better instruction following, and real-time support.

Unlock the value of voice data

Build what’s next on the platform powering thousands of the industry’s leading of Voice AI apps.