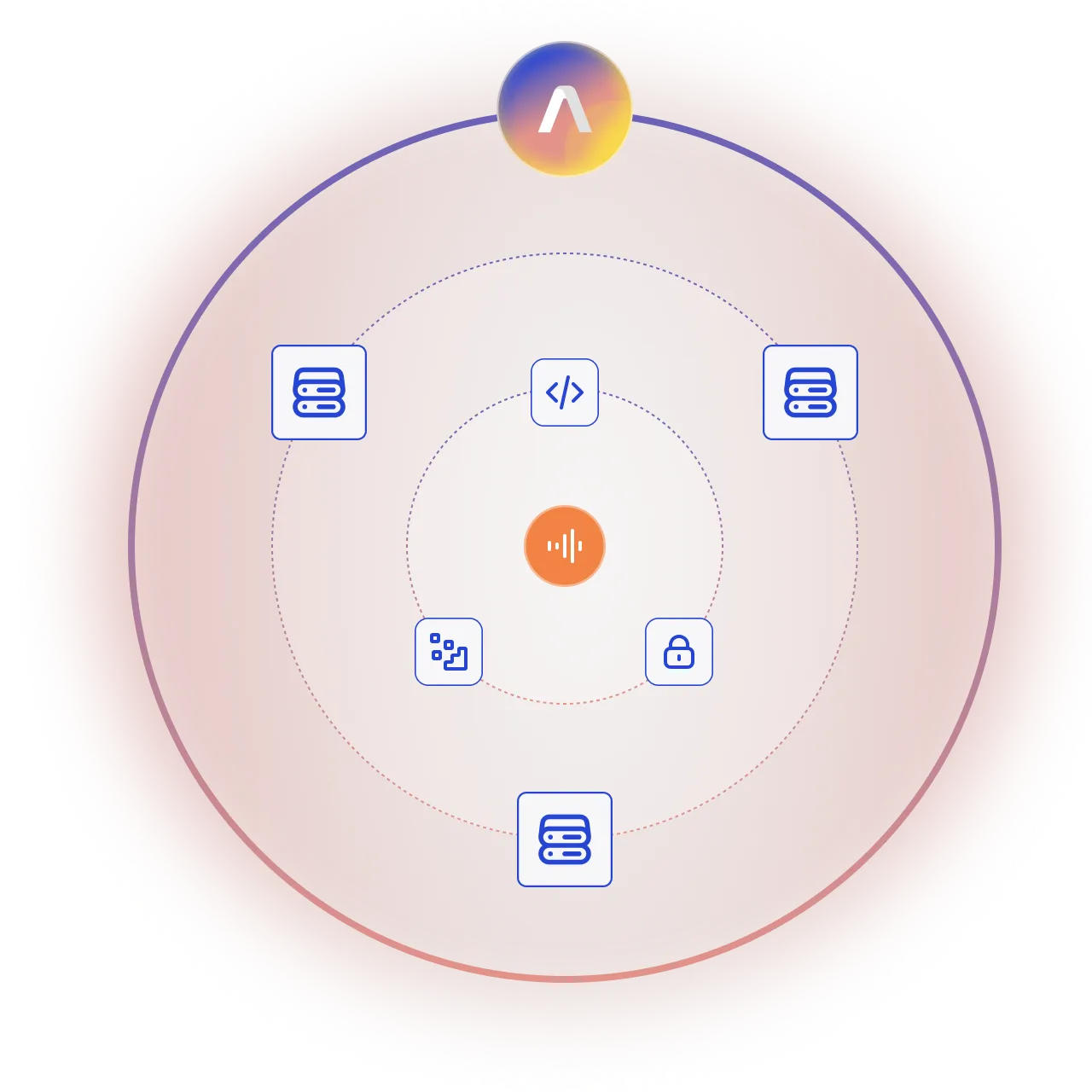

Self-Hosted Voice AI

Deploy AssemblyAI's industry-leading Voice AI models and capabilities on your own infrastructure to optimize performance, meet compliance requirements, and maintain full control over your stack.

Our industry-leading Voice AI models on your infrastructure

Self-host our speech-to-text models with the same quality and price performance you expect from AssemblyAI.

Optimized latency

Reduce network latency by co-locating your Voice AI stack with your other infrastructure and hosting it close to where your traffic originates.

Complete Data Sovereignty

Process all audio within your infrastructure to maintain data sovereignty while serving customers globally with AssemblyAI's speech recognition.

Infrastructure Control

Configure scaling to match your exact traffic patterns. We provide metrics and observability to support your autoscaling implementation.

Universal Deployment

Deploy with any container orchestration platform, such as Kubernetes and AWS ECS.

Cloud Integration

Count your usage toward your cloud provider's committed spend program and maximize the discounts you've already negotiated.

Regulatory Compliance

Meet stringent regulatory requirements and data residency mandates by processing all audio within your controlled perimeter.

Industry’s Best Models

Access our Universal-Streaming model with the same performance you know and trust from our cloud API.

Session-Based Pricing

Session-based pricing with no self-hosting premium. Includes daily billing options and volume-based discounts.

GPU Flexibility

Get full GPU support for maximum performance, with options available for regions with hardware limitations.

Enterprise Cloud Savings

AssemblyAI agreements can be structured through AWS Marketplace. Your AssemblyAI usage counts toward your committed spend programs, helping you maximize cloud discounts and meet budget commitments.

Frequently Asked Questions

Self-hosting uses the same usage-based pricing as our cloud service with no additional fees. You pay only for active sessions and are eligible for volume-based discounts.

Self-hosting can reduce latency by 50-200ms in regions distant from our cloud endpoints, such as Australia, Singapore, or South America. For users already close to our cloud infrastructure, our standard cloud API with Global Edge Routing typically delivers comparable performance without operational overhead.

Our containerized deployment supports GPU configurations. Each instance handles up to 48 concurrent streams with no degradation in runtime performance.
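As a rough capacity-planning sketch, the 48-stream figure above can be turned into an instance count for a given peak load. The function name `instances_needed` and the `headroom_pct` safety margin are illustrative assumptions, not part of any AssemblyAI tooling. Only the per-instance limit of 48 concurrent streams comes from the text.

```python
# Per-instance ceiling quoted in the FAQ answer above.
STREAMS_PER_INSTANCE = 48


def instances_needed(peak_streams: int, headroom_pct: int = 20) -> int:
    """Estimate how many instances cover a peak number of concurrent streams.

    headroom_pct is a hypothetical safety margin (assumption, not a vendor
    recommendation) added on top of the expected peak.
    """
    if peak_streams < 0 or headroom_pct < 0:
        raise ValueError("peak_streams and headroom_pct must be non-negative")
    # Integer ceiling division avoids floating-point rounding surprises.
    target = -(-peak_streams * (100 + headroom_pct) // 100)
    return -(-target // STREAMS_PER_INSTANCE)


if __name__ == "__main__":
    for peak in (48, 200, 1000):
        print(peak, "streams ->", instances_needed(peak), "instances")
```

For example, a peak of 200 concurrent streams with 20% headroom gives a target of 240 streams, which fits on 5 instances. Real sizing would depend on your traffic patterns and the metrics your autoscaler consumes.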

AssemblyAI provides a Docker Compose demo for initial setup. For production deployments on Kubernetes, AWS ECS, or other orchestration platforms, our Applied AI Engineers work directly with your team to design and implement infrastructure that fits your requirements.

Yes. Self-hosting enables deployment in any region, including government clouds (GovCloud) and countries with data sovereignty requirements or hardware import restrictions.

Unlock the value of voice data

Build what’s next on the platform powering thousands of the industry’s leading Voice AI apps.