Building Ambient AI Scribes

Your guide to evaluating Voice AI for healthcare

Ambient AI scribes are rapidly transforming healthcare by automatically generating clinical notes from conversations, easing the documentation burden that has weighed on clinicians for years.

But building an Ambient AI Scribe that physicians trust isn’t easy. It requires strong Voice AI capabilities: accurate speech recognition, reliable transcription of medical terminology, and true semantic understanding.

This guide walks you through the essential evaluation criteria for choosing the right Voice AI platform to build ambient AI scribes.

What to look for:

Key Voice AI evaluation criteria

Clinical speech-to-text accuracy

Here's the thing about patients: they don't speak in medical textbooks. They say "water pills" when they mean diuretics. They mix up medication names. And your scribe needs to handle all of that accurately.

Most ambient AI scribes use batch speech transcription: they process the recording after the visit wraps up rather than transcribing in real time. This approach puts accuracy first, which is exactly what you want when medical jargon is involved.

What to zero in on:

Streaming transcription

Streaming transcription processes speech in real time as the conversation unfolds, displaying text within seconds. While batch processing is still the standard for most ambient scribes, streaming capabilities are improving fast, and real-time ambient scribes are likely to become more common.

True speech understanding

It's not enough for scribes to just transcribe words. They need to actually understand what's being said and organize it properly. This is where key Speech Understanding models come in:

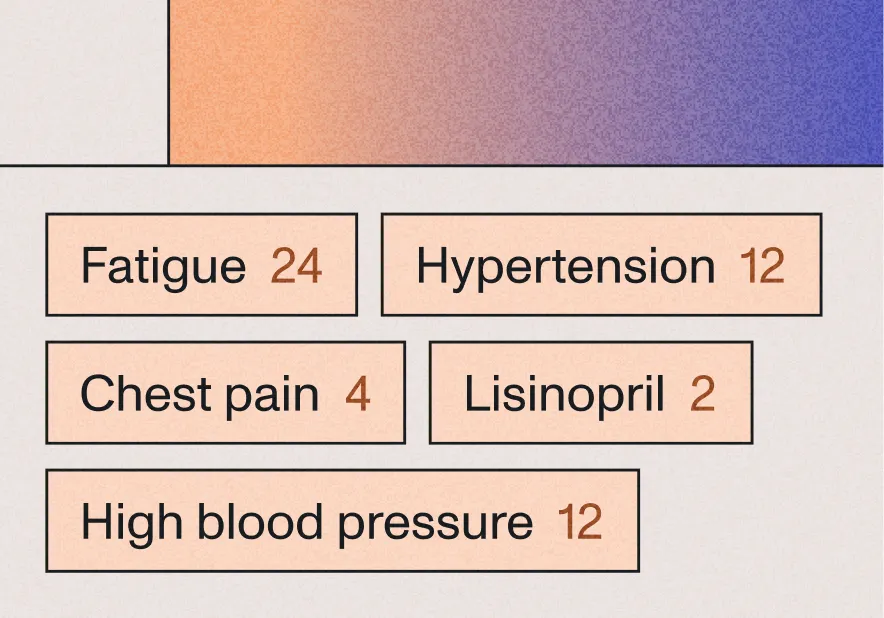

Entity detection

Spots medications, diagnoses, symptoms, and procedures from conversational speech. So when someone says "high blood pressure medication," it automatically knows to categorize that as an antihypertensive.
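To make the idea concrete, here is a minimal sketch of what normalizing lay phrases into clinical categories could look like. It is a toy: the `LAY_TERMS` table, the `Entity` class, and the `detect_entities` function are all hypothetical names invented for this illustration, and a real entity detection model is trained rather than driven by a hand-written lookup.

```python
import re
from dataclasses import dataclass

# Hypothetical lookup table, for illustration only. A production system
# would rely on a trained entity detection model, not a fixed dictionary.
LAY_TERMS = {
    "water pills": ("diuretic", "medication"),
    "high blood pressure medication": ("antihypertensive", "medication"),
    "blood thinner": ("anticoagulant", "medication"),
}


@dataclass(frozen=True)
class Entity:
    text: str        # the phrase exactly as it was spoken
    label: str       # entity type, e.g. "medication"
    normalized: str  # clinical category the phrase maps to


def detect_entities(transcript: str) -> list[Entity]:
    """Find known lay phrases in a transcript, in order of appearance."""
    found = []
    for phrase, (normalized, label) in LAY_TERMS.items():
        pattern = r"\b" + re.escape(phrase) + r"\b"
        for match in re.finditer(pattern, transcript, flags=re.IGNORECASE):
            found.append((match.start(), Entity(match.group(0), label, normalized)))
    found.sort(key=lambda pair: pair[0])  # restore spoken order
    return [entity for _, entity in found]


if __name__ == "__main__":
    line = "I take my water pills and a high blood pressure medication."
    for entity in detect_entities(line):
        print(f"{entity.text!r} -> {entity.label}: {entity.normalized}")
```

When you evaluate a platform, the useful question is how well its model covers phrases that no lookup table would anticipate.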

Summarization

Takes those meandering conversations and turns them into structured clinical notes, neatly organized by chief complaint, history, assessment, and plan.
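The target of summarization is easier to reason about as a data structure. The sketch below shows one possible container for a note organized by the four sections named above. The `ClinicalNote` class and its `render` method are assumptions made for illustration, not any vendor's schema, and the sample content is invented.

```python
from dataclasses import dataclass, field


@dataclass
class ClinicalNote:
    """Hypothetical container for a summarized visit (illustrative schema)."""
    chief_complaint: str = ""
    history: list[str] = field(default_factory=list)
    assessment: list[str] = field(default_factory=list)
    plan: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Format the note as plain text, marking sections left empty."""
        sections = [
            ("Chief complaint", [self.chief_complaint] if self.chief_complaint else []),
            ("History", self.history),
            ("Assessment", self.assessment),
            ("Plan", self.plan),
        ]
        lines = []
        for title, items in sections:
            lines.append(f"{title}:")
            if items:
                lines.extend(f"  - {item}" for item in items)
            else:
                # Make gaps visible so the clinician can review them.
                lines.append("  (not documented)")
        return "\n".join(lines)


if __name__ == "__main__":
    # Invented sample content, for illustration only.
    note = ClinicalNote(
        chief_complaint="Headache for three days",
        plan=["Follow up in two weeks"],
    )
    print(note.render())
```

Marking empty sections explicitly, rather than omitting them, keeps missing information visible during physician review.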

Sentiment analysis

Picks up on patient emotional state and urgency from tone and word choice, flagging situations that need immediate attention.

Voice Guardrails: Privacy, security, and compliance

Data security and privacy? Table stakes. But here's what else you need to look for:

Technical capabilities that actually matter

Speaker diarization, speaker identification, and conversation dynamics

Speaker diarization breaks down who's saying what when multiple people are in the room, while speaker identification actually labels those speakers by role or name. You definitely don't want patient statements ending up attributed to clinicians in the medical record.

But it goes beyond just identifying speakers. The system also needs to handle the chaos of real conversations: interruptions, overlapping speech, a nurse walking in to take vitals while you're mid-history. The AI should be able to track both conversations separately without getting confused.
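As a rough sketch of how these pieces fit together downstream, the example below takes diarized utterances (anonymous speaker labels plus timestamps), attaches roles, and flags overlapping speech. The `Utterance` shape, the `label_roles` and `find_overlaps` helpers, and the sample dialogue are all assumptions for illustration. Real platforms return their own formats.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    speaker: str   # anonymous diarization label, e.g. "A"
    start_ms: int
    end_ms: int
    text: str


def label_roles(utterances, roles):
    """Swap diarization labels for roles; unmapped speakers stay flagged."""
    labeled = []
    for utt in sorted(utterances, key=lambda u: u.start_ms):
        role = roles.get(utt.speaker, f"unknown ({utt.speaker})")
        labeled.append((role, utt.text))
    return labeled


def find_overlaps(utterances):
    """Return pairs of utterances by different speakers that overlap in time."""
    ordered = sorted(utterances, key=lambda u: u.start_ms)
    overlaps = []
    for i, first in enumerate(ordered):
        for second in ordered[i + 1:]:
            if second.start_ms >= first.end_ms:
                break  # sorted by start time, so nothing later can overlap
            if second.speaker != first.speaker:
                overlaps.append((first, second))
    return overlaps


if __name__ == "__main__":
    # Invented sample visit, for illustration only.
    visit = [
        Utterance("A", 0, 4000, "Any chest pain?"),
        Utterance("B", 3500, 6000, "Sometimes, when I climb stairs."),
        Utterance("C", 7000, 8000, "I'm here to take vitals."),
    ]
    for role, text in label_roles(visit, {"A": "clinician", "B": "patient"}):
        print(f"{role}: {text}")
    print(f"overlapping pairs: {len(find_overlaps(visit))}")
```

Keeping unmapped speakers flagged rather than guessing a role is one way to avoid the misattribution problem described above.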

Handling medical edge cases

Real-world clinical conversations are messy. Your system needs to handle:

Keyterms prompting

Keyterms prompting is your secret weapon for specialty-specific accuracy. It lets you customize the transcription for particular fields. For example, cardiologists can boost terms like "echocardiogram" or "ejection fraction" to make sure they're recognized correctly. This can substantially improve accuracy for specialty-specific terminology that might otherwise get confused with similar-sounding words.
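In practice, the work on your side is mostly curating a clean term list per specialty. The sketch below shows one way to prepare such a list before sending it with a transcription request. Everything vendor-facing here is a placeholder: the `keyterms` and `audio_url` field names, the example URL, and the `max_terms` limit are assumptions, so check your platform's documentation for the real parameter names and limits.

```python
def build_keyterms(terms, max_terms=100):
    """Clean a specialty term list: collapse whitespace, drop blanks and
    case-insensitive duplicates, and enforce a (hypothetical) size limit."""
    seen = set()
    cleaned = []
    for term in terms:
        normalized = " ".join(term.split())
        key = normalized.casefold()
        if normalized and key not in seen:
            seen.add(key)
            cleaned.append(normalized)
    if len(cleaned) > max_terms:
        raise ValueError(f"{len(cleaned)} terms exceeds the limit of {max_terms}")
    return cleaned


# Terms taken from the cardiology example above, plus messy duplicates.
CARDIOLOGY_TERMS = ["echocardiogram", "ejection fraction", "Ejection  Fraction ", ""]

# Hypothetical request body: the field names are placeholders, not a real API.
request = {
    "audio_url": "https://example.com/visit.wav",
    "keyterms": build_keyterms(CARDIOLOGY_TERMS),
}

if __name__ == "__main__":
    print(request["keyterms"])
```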

Multilingual support and accessibility

Let's be real: most practices see patients who speak languages other than English, making multilingual support essential.

But here's the catch: the system needs to actually understand medical concepts in different languages and translate them correctly. "Dolor de cabeza" should become "headache" in your note, not stay as untranslated Spanish text.

Understanding code-switching

Code-switching, when patients flip between languages mid-sentence, is incredibly common in multilingual communities. A patient might say "Tengo dolor in my chest" or "My abuela tiene diabetes." Advanced systems maintain context across these language switches, so you get accurate medical documentation no matter how your patients naturally communicate.

Building with Voice AI models

If you're thinking about building custom ambient scribes or any type of ambient clinical intelligence (ACI), here's what matters most: the underlying Voice AI models determine everything else. The quality of your speech-to-text and speech understanding directly impacts note generation, coding accuracy, and physician satisfaction.

JotPsych launched a behavioral health AI scribe purpose-built for mental health professionals, and saw:

customer growth in first commercial year

reduction in documentation time for clinicians

engineering time savings on infrastructure through partnership with AssemblyAI

FAQ

Expect to pay somewhere between $200 and $600 per physician per month for an ambient AI scribe, depending on usage volume, specialty requirements, and how complex your integration needs are. But here's the full picture: total cost of ownership includes licensing fees, implementation expenses, training costs, and ongoing support. When you factor in all expenses, you're looking at $15,000 to $30,000 per physician annually.
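A quick calculation shows how much of that total sits outside the monthly fee. The sketch assumes the $200 to $600 monthly figure is the licensing fee alone (the text implies this but does not state it) and pairs the low ends and the high ends of each range, which is a simplification. The variable names are illustrative.

```python
MONTHS_PER_YEAR = 12


def annual_range(monthly_low, monthly_high):
    """Convert a monthly per-physician price range to an annual one."""
    return monthly_low * MONTHS_PER_YEAR, monthly_high * MONTHS_PER_YEAR


# Figures from the text above; treating them as licensing-only is an assumption.
license_low, license_high = annual_range(200, 600)
tco_low, tco_high = 15_000, 30_000

# Remainder attributed to implementation, training, and ongoing support,
# pairing low with low and high with high (a simplifying assumption).
other_low = tco_low - license_low
other_high = tco_high - license_high

if __name__ == "__main__":
    print(f"Annual licensing: ${license_low:,} to ${license_high:,}")
    print(f"Other annual costs: ${other_low:,} to ${other_high:,}")
```

Under these assumptions, licensing comes to $2,400 to $7,200 per physician per year, leaving roughly $12,600 to $22,800 for everything else, so the non-licensing costs deserve as much scrutiny as the sticker price.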

Handling noisy, real-world audio is where the rubber meets the road, and quality varies significantly between systems. The best scribes are built specifically to handle challenging audio: bustling emergency rooms, operating theaters with multiple speakers, diverse accents. Look for systems that have been tested in real clinical environments, not just quiet demo rooms.

Ambient AI scribes automatically transcribe and document patient-clinician conversations using speech recognition and NLP. Ambient Clinical Intelligence (ACI) goes beyond documentation to analyze conversations for clinical insights, extract structured data for quality metrics and billing, integrate with EHRs, and provide decision support.

Unlock the value of voice data

Build what’s next on the platform powering thousands of the industry’s leading Voice AI apps.