How to summarize meetings with LLMs

Learn how to generate detailed, structured meeting summaries powered by LLMs like Claude Sonnet 4.5

In today's remote-first world, organizations hold millions of virtual meetings every day, yet crucial information often slips through the cracks. Decisions get forgotten, action items go untracked, and valuable insights stay buried in recordings that nobody has time to review. The result is a significant efficiency gap: many organizations struggle to extract meaningful intelligence from the meetings and calls they rely on to collaborate.

In this tutorial, you'll learn how to use AssemblyAI's speech-to-text and LLM Gateway to automatically capture and analyze your meetings. You'll turn hours of conversation into structured summaries, clear action items, and actionable insights, all powered by large language models.

Getting Started

Meeting summarization with LLMs requires two components: speech-to-text transcription and LLM analysis through our LLM Gateway. You'll need an AssemblyAI API key, which you can find in your AssemblyAI dashboard. Using the LLM Gateway may incur additional costs, so make sure billing is enabled on your account.

Install the Python SDK, along with the requests library used to call the LLM Gateway:

```shell
pip install -U assemblyai requests
```

Step 1: Run Speech-to-Text

To generate a meeting summary, you first need to get a transcript of the meeting audio. Create a file named main.py and add the following code. This script will import the necessary libraries, configure your API key, and transcribe the meeting audio.

While we set the API key inline here for simplicity, you should store it securely as an environment variable in production code and never check it into source control.

```python
import assemblyai as aai
import requests
import sys

# Set your API key
aai.settings.api_key = "YOUR_API_KEY"

# URL of the meeting audio to be transcribed
MEETING_URL = "https://storage.googleapis.com/aai-web-samples/meeting.mp3"

# Configure the transcriber
transcriber = aai.Transcriber()

# Transcribe the audio file
transcript = transcriber.transcribe(MEETING_URL)

# Check for transcription errors
if transcript.status == aai.TranscriptStatus.error:
    print(f"Transcription failed: {transcript.error}", file=sys.stderr)
    sys.exit(1)
```
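You can follow the earlier advice about not hard-coding the API key with a few lines of standard-library code. The sketch below shows one way to do it. The helper name `load_api_key` and the variable name `ASSEMBLYAI_API_KEY` are assumptions for illustration, not names the SDK requires.

```python
import os


def load_api_key(var_name: str = "ASSEMBLYAI_API_KEY") -> str:
    """Read the AssemblyAI API key from an environment variable.

    The default variable name is an assumption for this sketch; use
    whatever name your deployment defines.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail loudly rather than sending requests with an empty key
        raise RuntimeError(f"Environment variable {var_name} is not set")
    return key


# In main.py you could then replace the hard-coded key with:
# aai.settings.api_key = load_api_key()
```

If you set the variable in your shell before running main.py, the key stays out of your source file.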

Step 2: Generate a meeting summary

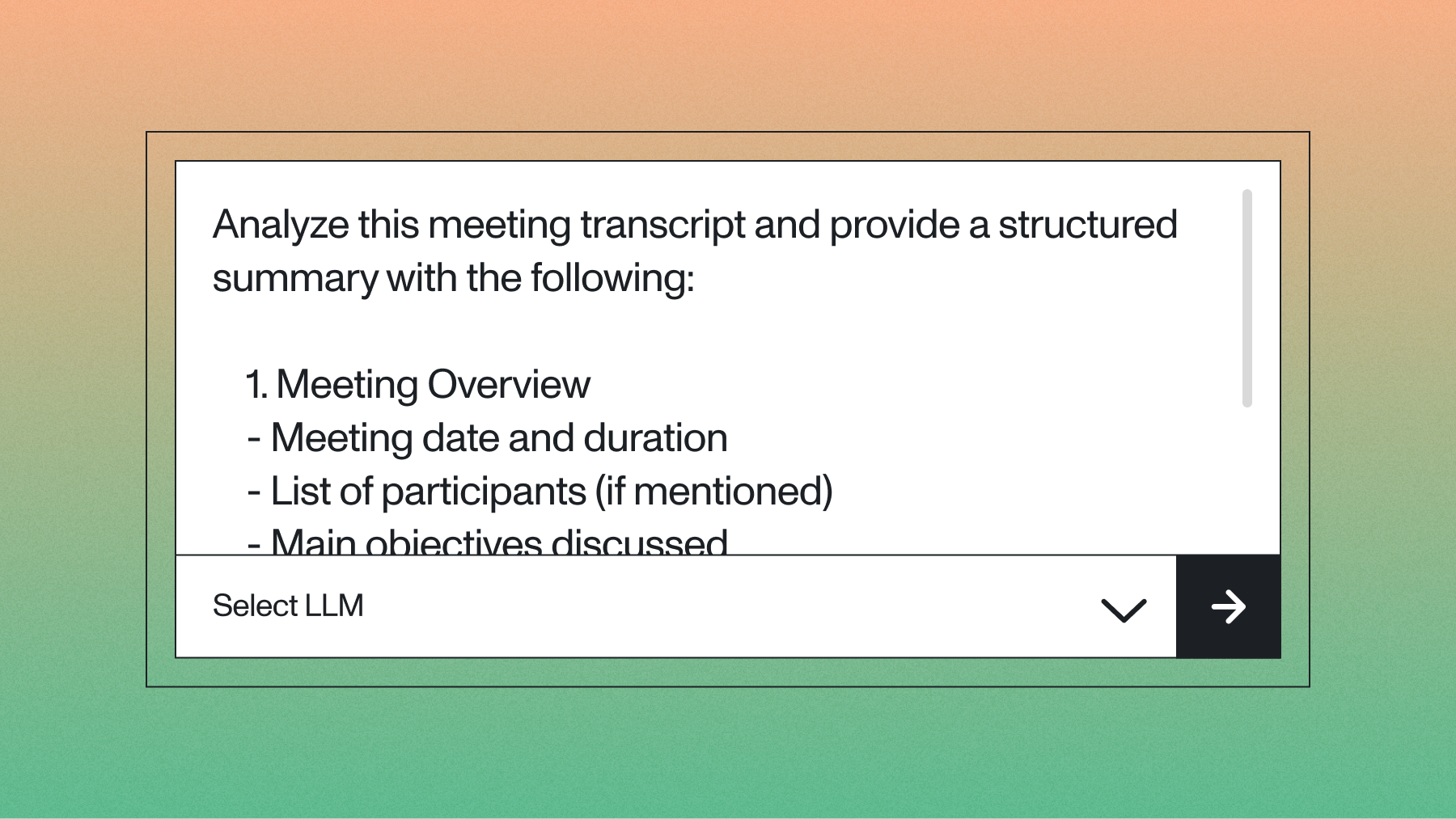

With the transcript ready, you can analyze it using AssemblyAI's LLM Gateway. Create a structured prompt that defines exactly what information to extract:

prompt = """

Analyze this meeting transcript and provide a structured summary with the following:

1. Meeting Overview

- Meeting date and duration

- List of participants (if mentioned)

- Main objectives discussed

1. Key Decisions

- Document all final decisions made

- Include any deadlines or timelines established

- Note any budgets or resources allocated

1. Action Items

- List each action item with:

* Assigned owner

* Due date (if specified)

* Dependencies or prerequisites

* Current status (if mentioned)

1. Discussion Topics

- Summarize main points for each topic

- Highlight any challenges or risks identified

- Note any unresolved questions requiring follow-up

1. Next Steps

- Upcoming milestones

- Scheduled follow-up meetings

- Required preparations for next discussion

ROLE: You are a professional meeting analyst focused on extracting actionable insights.

FORMAT: Present the information in clear sections with bullet points for easy scanning.

Keep descriptions concise but include specific details like names, dates, and numbers when mentioned.

If any of these elements are not discussed in the meeting, note their absence rather than making assumptions.

""".strip()

Now, send the transcript text and your prompt to the LLM Gateway. This requires a separate API call. Add the following code to the end of your main.py file to send the request and print the summary:

```python
# Prepare the request for the LLM Gateway
llm_gateway_payload = {
    "model": "claude-sonnet-4-5-20250929",  # Any model supported by the LLM Gateway
    "messages": [
        {
            "role": "user",
            "content": f"{prompt}\n\nTranscript:\n{transcript.text}",
        }
    ],
    "max_tokens": 2048,
    "temperature": 0.0,
}

try:
    # Send the request to the LLM Gateway
    response = requests.post(
        "https://llm-gateway.assemblyai.com/v1/chat/completions",
        headers={"authorization": aai.settings.api_key},
        json=llm_gateway_payload,
        timeout=120,  # Seconds; an arbitrary limit so a stalled request can't hang forever
    )
    response.raise_for_status()  # Raise an exception for 4xx/5xx status codes
    result = response.json()
    print(result["choices"][0]["message"]["content"])
except requests.exceptions.RequestException as e:
    print(f"Error calling LLM Gateway: {e}", file=sys.stderr)
    sys.exit(1)
```
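The print statement above assumes the response always contains result["choices"][0]["message"]["content"]. If the shape ever differs, that line raises a bare KeyError or IndexError, which the RequestException handler does not catch. The hypothetical `extract_summary` helper below surfaces a clearer error instead. It assumes only the response shape the tutorial already uses.

```python
from typing import Any


def extract_summary(result: dict[str, Any]) -> str:
    """Pull the assistant text out of a chat-completions style response.

    Expects result["choices"][0]["message"]["content"] and raises ValueError
    (instead of KeyError/IndexError/TypeError) when that shape is missing
    or the content is empty.
    """
    try:
        content = result["choices"][0]["message"]["content"]
    except (KeyError, IndexError, TypeError) as exc:
        raise ValueError(f"Unexpected LLM Gateway response: {result!r}") from exc
    if not isinstance(content, str) or not content.strip():
        raise ValueError("LLM Gateway returned an empty summary")
    return content.strip()
```

To use it, replace the print line with print(extract_summary(result)). Then widen the handler to except (requests.exceptions.RequestException, ValueError) as e: so that both failure modes exit cleanly.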

Step 3: Run the code

Run the script from your terminal with python main.py. It transcribes the meeting audio, sends the transcript and your prompt to the LLM Gateway, and prints a structured meeting summary to your console. For the sample file used above, the output looks like the following (LLM output can vary between runs, so yours may differ slightly):

Here's a structured summary of the meeting transcript:

1. Meeting Overview
- Date: February 18, 2021
- Participants mentioned: Eric Johnson, Sid, Lily, Mac, Christopher, Steve, Craig, Christy, Rob
- Main objectives: Engineering key review, discussing KPIs, metrics, and organizational changes

2. Key Decisions
- Break up the engineering key review into four department key reviews
- Implement a two-month rotation for department reviews
- Change the R&D wider MR Rate KPI to track percentage of total MRs that come from the community
- Measure S1/S2 SLO achievement based on open bugs rather than closed bugs

3. Action Items
- Lily: Work with Mac to transition to new community contribution KPI
- Mac: Provide an update on the Postgres replication issue in next week's infra key review
- Mac/Data team: Develop new measurement for average open bugs age
- Mac/Data team: Adjust metrics to measure percentage of open bugs within SLO
- Christopher: Continue monitoring narrow MR Rate and expect rebound in March

4. Discussion Topics

a) Department Key Reviews
- Proposal to split engineering review into development, quality, security, and UX
- Two-month rotation proposed to avoid adding too many meetings

b) R&D MR Rate Metrics
- Confusion about current R&D wider MR Rate calculation
- Decision to simplify and track percentage of MRs from community

c) Postgres Replication Issue
- Lag in data updates affecting February metrics
- Need for dedicated computational resources and potential database tuning

d) Defect Tracking and SLOs
- S1 defects at 80% SLO achievement, S2 at 60%
- Spike in mean time to close for S2 bugs noted

e) SUS (Satisfaction) Metric
- Smallest decline in Q4 compared to previous quarters
- Cautious optimism about trend, but continued monitoring needed

f) Narrow MR Rate
- Currently below target but higher than previous year
- Expectation to rebound in March after short February and power outages in Texas

5. Next Steps
- Implement new department key review structure
- Monitor effects of changes to KPI measurements
- Continue focus on improving security work prioritization
- Expect potential temporary jump in SLO achievement as backlog is cleared

Note: Specific due dates for action items were not mentioned in the transcript.
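Because the prompt asks for numbered sections, you can often split the model's answer back into parts. For example, you might post only the action items to a task tracker. The sketch below assumes each heading starts a line as "N. Title", as in the sample output. Headings wrapped in markdown (such as ## 1. Title) would need a different pattern. Numbered items inside a section would be mistaken for headings and also need a different pattern. The function name split_sections is hypothetical.

```python
import re

# A top-level heading like "1. Meeting Overview" at the start of a line.
# [ \t] is used instead of \s so the pattern never spans a line break.
HEADING = re.compile(r"^\d+\.[ \t]+(.+?)[ \t]*$", re.MULTILINE)


def split_sections(summary: str) -> dict[str, str]:
    """Split a numbered summary into {heading title: section body}.

    Text before the first heading (such as an introductory sentence) is dropped.
    """
    matches = list(HEADING.finditer(summary))
    sections: dict[str, str] = {}
    for i, match in enumerate(matches):
        body_start = match.end()
        # Each body runs until the next heading, or to the end of the text
        body_end = matches[i + 1].start() if i + 1 < len(matches) else len(summary)
        sections[match.group(1)] = summary[body_start:body_end].strip()
    return sections


example = """Here's a structured summary of the meeting transcript:

1. Meeting Overview
- Date: February 18, 2021

3. Action Items
- Lily: Work with Mac to transition to new community contribution KPI
"""
print(split_sections(example)["Action Items"])
```

For more reliable parsing, you could adjust the prompt to ask for a fixed heading format. That keeps the pattern simple.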

Available LLM models

The LLM Gateway supports a range of language models from leading providers. Here is a selection of currently available models; check our docs for the most up-to-date list:

- claude-sonnet-4-5-20250929 (Anthropic): Claude's best model for complex agents and coding.
- gpt-5 (OpenAI): OpenAI's best model for coding and agentic tasks across domains.
- gemini-2.5-pro (Google): Gemini's state-of-the-art thinking model, capable of reasoning over complex problems.
- claude-haiku-4-5-20251001 (Anthropic): Claude's fastest and most intelligent Haiku model, ideal for near-instant responsiveness.
- gpt-oss-120b (OpenAI): OpenAI's most powerful open-weight model.
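In this tutorial, every model is reached through the same /v1/chat/completions endpoint, so trying a different model mainly means changing the "model" field. The sketch below wraps the tutorial's payload in a hypothetical build_payload helper that first checks the ID against the list above. It assumes the model you choose accepts the other fields (max_tokens, temperature). Some models may restrict parameters such as temperature, so confirm in the docs.

```python
# Model IDs copied from the list above. Treat this as a snapshot: the
# supported set changes over time, so check the docs before relying on it.
KNOWN_MODELS = frozenset({
    "claude-sonnet-4-5-20250929",
    "gpt-5",
    "gemini-2.5-pro",
    "claude-haiku-4-5-20251001",
    "gpt-oss-120b",
})


def build_payload(
    model: str,
    content: str,
    max_tokens: int = 2048,
    temperature: float = 0.0,
    allowed_models: frozenset[str] = KNOWN_MODELS,
) -> dict:
    """Build a chat-completions request body in the same shape as the tutorial.

    Checking the model ID locally catches typos before a network round trip;
    pass your own allowed_models set if the list above is out of date.
    """
    if model not in allowed_models:
        raise ValueError(f"Unknown model {model!r}; check the docs for supported IDs")
    return {
        "model": model,
        "messages": [{"role": "user", "content": content}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
```

For example, build_payload("claude-haiku-4-5-20251001", content) could replace the hand-written dictionary when you want a faster response.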
