How to choose the best speech-to-text API for voice agents

Choose the right speech-to-text API for voice agents. Learn the latency, accuracy, and integration requirements that actually matter for real conversations.

Standard speech-to-text benchmarks don't predict voice agent performance in real conversations. As expert analysis confirms, standard metrics like Word Error Rate (WER) don't capture what's crucial for voice agents, such as correct punctuation and domain-specific accuracy. Generic accuracy scores and processing speeds also don't tell you how an API handles real-time interactions, and industry analysis confirms that a lower error rate doesn't always prevent severe misinformation.

We'll walk through the voice agent-specific evaluation criteria that actually matter for building responsive, reliable voice experiences. For a comprehensive introduction to the technology, explore our complete guide to AI voice agents.

What are speech-to-text APIs

Speech-to-text APIs convert spoken language into written text through AI models, enabling developers to build voice-enabled applications without extensive in-house development. These APIs reduce time-to-market from months to weeks while delivering enterprise-grade accuracy for production voice applications.

These APIs use neural networks trained on millions of hours of audio to handle different accents, speaking speeds, and background noise. Performance varies based on audio quality and specific vocabulary needs.

There are two primary types of speech-to-text APIs:

- Batch APIs: Process pre-recorded audio files and return complete transcripts after processing. Ideal for podcasts, video files, and recorded meetings.

- Streaming APIs: Process live audio in real-time, essential for voice commands, live captioning, and conversational AI agents.

Streaming APIs make decisions with limited context, while batch APIs can see the full context of a recording, which often leads to the highest possible accuracy. This difference also affects pricing and integration complexity.

Key features and capabilities to evaluate

Key features determine speech-to-text API performance for your specific use case. Focus on accuracy, latency, language support, and advanced processing capabilities rather than marketing claims.

Core transcription features

- Accuracy: How well does the model transcribe speech into text? This is the most fundamental requirement: a survey of builders found that 76% consider speech-to-text accuracy a non-negotiable requirement for voice agents. Look for benchmarks on your specific use case, because medical transcription accuracy differs vastly from casual conversation accuracy.

- Speed and Latency: How quickly does the API return a transcript? For real-time applications, low latency is non-negotiable. Batch processing speed affects user wait times and system throughput.

- Language Support: Does the API support the languages, dialects, and accents of your user base? Some APIs excel at American English but struggle with international accents.

Advanced processing capabilities

- Speaker Diarization: Can the model distinguish between multiple speakers and label who said what? Essential for meeting transcription and call analytics.

- Automatic Punctuation and Casing: Does the transcript include proper punctuation and capitalization for readability? This dramatically affects transcript usability.

- Number Formatting: How does the API handle spoken numbers? Consistent formatting matters for addresses, phone numbers, and financial data.

Customization and intelligence features

- Keyterms Prompting: Can you provide a list of domain-specific jargon, unique names, or product terms to improve their recognition accuracy? Critical for specialized industries.

- Entity Detection: Does the API automatically identify important information like dates, locations, or person names? This enables downstream processing without additional NLP steps.

- Sentiment Analysis: Can the system detect emotional tone in speech? This is valuable for customer service and sales applications, and adoption is growing: the emotional AI market is projected to reach $37.1 billion by 2026.

Common use cases and applications

Speech-to-text APIs power a growing ecosystem of voice-enabled applications across industries, and with the global market expected to reach $53.67 billion by 2030 according to new market analysis, their importance is rapidly accelerating. Understanding these use cases helps identify which features and performance characteristics matter most for your specific needs.

Contact center intelligence

Companies like CallSource and Ringostat use speech-to-text APIs to transform customer service operations. Every customer call becomes a data source for quality assurance, agent coaching, and customer sentiment analysis.

The business impact is measurable:

- Improved agent performance: Recent industry data shows that real-time insights can reduce call handling time by 35% and increase customer satisfaction by 30%.

- Higher customer satisfaction: Better call resolution through conversation insights

- Operational efficiency: Automated compliance monitoring eliminates manual call reviews

Contact center intelligence requires:

- High accuracy on phone-quality audio

- Speaker diarization to separate agent and customer voices

- Domain-specific terminology handling

- Real-time transcription for live agent assistance

Media transcription and captioning

Media platforms use speech-to-text for accessibility compliance and content discovery. Accurate transcripts improve SEO and make content accessible to hearing-impaired viewers.

Media applications demand support for multiple speakers, background music handling, and proper formatting for readability. The ability to generate time-coded transcripts that sync with video playback is essential.

AI meeting assistants

The explosion of remote work created demand for automated meeting documentation. Companies like Circleback AI use speech-to-text APIs to automatically transcribe virtual meetings, extract action items, and generate summaries.

ROI for meeting automation:

- Time savings: Reduces post-meeting admin work by 75%

- Better follow-through: Automated action item extraction improves task completion rates

- Searchable insights: Transform meetings into strategic knowledge bases

Meeting transcription requires excellent speaker diarization, handling of overlapping speech, and the ability to process various audio qualities from different participant setups. Integration with video conferencing platforms and calendar systems is crucial for seamless workflows.

Voice agents and conversational AI

Interactive voice response (IVR) systems and AI assistants rely on speech-to-text as their ears. The API must process speech in real-time, understand commands or questions, and feed that understanding to downstream AI systems for response generation.

Critical voice agent requirements:

- Ultra-low latency: Sub-300ms response times for natural conversation flow

- High accuracy: Precise capture of short utterances and commands

- Context awareness: Maintain conversation history throughout interactions

- Interruption handling: Process natural speech patterns and corrections

Healthcare documentation

Medical professionals spend hours on documentation, a burden so significant that economic projections suggest Voice AI could save the U.S. healthcare economy $150 billion annually by automating these tasks. Companies like PatientNotes.app use speech-to-text to transcribe doctor-patient conversations and clinical dictation, dramatically reducing administrative burden. The technology must handle medical terminology accurately while maintaining HIPAA compliance.

Healthcare applications require specialized medical vocabulary support, extreme accuracy on drug names and dosages, and strict security and compliance certifications. The cost of transcription errors in healthcare can be severe.

ROI and business outcomes from speech-to-text implementation

Companies implementing speech-to-text APIs see measurable business outcomes that justify investment costs. Organizations report operational improvements within 90 days of deployment, with ROI typically achieved in the first year.

Quantified business benefits include:

- 30-45% reduction in service costs, according to a McKinsey estimate

- 60% faster content production workflows

- 25% improvement in customer satisfaction scores

- 3x increase in data accessibility and searchability

Quantifying the return on investment

The ROI of high-quality speech-to-text APIs manifests differently across industries, but common benefits include reduced operational costs, improved customer experiences, and enhanced business intelligence.

For contact centers, accurate transcription enables better agent coaching and quality assurance. Companies like CallSource and Ringostat leverage these capabilities to identify performance gaps, improve script compliance, and ultimately increase conversion rates. The ability to analyze every customer interaction transforms call centers from cost centers into strategic assets.

Healthcare organizations see dramatic reductions in administrative burden. Medical professionals using solutions from companies like PatientNotes.app spend less time on documentation and more time with patients. This improved efficiency translates to better patient care and higher provider satisfaction.

Business transformation through Voice AI

Leading organizations across industries trust AssemblyAI for their speech intelligence needs. From media companies like Veed enhancing content accessibility to innovative startups like Circleback AI revolutionizing meeting productivity, businesses are discovering that accurate speech-to-text is more than a feature—it's a competitive advantage. A Gartner prediction reinforces this, forecasting that 40% of enterprise apps will integrate task-specific AI agents, a significant increase from less than 5% in 2025.

Measuring success beyond accuracy metrics

While Word Error Rate provides a technical baseline, business success depends on broader outcomes. Organizations report improvements in key performance indicators that directly impact revenue and growth:

- Customer Experience: Faster issue resolution, reduced hold times, and more personalized interactions lead to higher Net Promoter Scores and customer retention.

- Operational Efficiency: Automated transcription and analysis reduce manual work, allowing teams to focus on higher-value activities.

- Compliance and Risk Management: Complete conversation records support regulatory compliance and reduce legal exposure through accurate documentation.

- Business Intelligence: Voice data analysis reveals customer trends, product issues, and market opportunities that drive strategic decisions.

Companies implementing speech-to-text APIs consistently report that the technology pays for itself through efficiency gains alone, with additional value coming from improved customer experiences and new capabilities that weren't previously possible.

How to evaluate accuracy and performance

Choosing an API based on marketing claims alone leads to disappointment. Effective evaluation requires understanding key metrics and testing with your specific use case.

Understanding Word Error Rate (WER)

Word Error Rate remains the industry-standard metric for measuring transcription accuracy. WER calculates the percentage of words that need correction to match the reference transcript, accounting for substitutions, deletions, and insertions.

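A WER of 5% means roughly five word errors for every 100 words in the reference transcript. Because insertions count as errors, this is not quite the same as getting 95 out of 100 words correct. Context matters: a 5% error rate on medical terminology has different implications than a 5% error rate on casual conversation.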
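As a minimal sketch of the calculation described above, WER can be computed as a word-level edit distance divided by the number of words in the reference transcript. The function name and the simple lowercase-and-split normalization are assumptions made for illustration; production evaluations usually apply more careful text normalization (punctuation, numbers, casing) before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference."""
    # Assumption: lowercase + whitespace split is enough normalization for a sketch.
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] is the edit distance between the reference words seen so far
    # and the first j hypothesis words (classic dynamic-programming table,
    # kept one row at a time).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)
```

Because inserted words count as errors while the denominator is the reference length, WER can exceed 100% on very noisy output.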

Critical token accuracy

WER doesn't tell the whole story. What matters more is accuracy on the specific information critical to your business.

Critical token accuracy measures performance on high-value terms like product names, customer IDs, or industry terminology. Test potential APIs with audio containing your actual business vocabulary—an error on an email address or account number is a business problem.
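One simple way to approximate this metric is to check how many of your high-value terms appear verbatim in the transcript. The sketch below is an illustration under stated assumptions, not a standard definition: the function name is hypothetical, and it uses case-insensitive substring matching, which can over-count when one token is contained in another (for example "12" inside "123").

```python
def critical_token_accuracy(critical_tokens: list[str], hypothesis: str) -> float:
    """Share of critical tokens that appear verbatim in the transcript."""
    if not critical_tokens:
        raise ValueError("provide at least one critical token")
    # Collapse whitespace and lowercase so matching ignores casing and spacing.
    normalized = " ".join(hypothesis.lower().split())
    hits = sum(1 for token in critical_tokens if token.lower() in normalized)
    return hits / len(critical_tokens)


# Hypothetical sample: the email is misheard, the order ID is captured.
score = critical_token_accuracy(
    ["john.smith@company.com", "AB-1234"],
    "My email is johnsmith@company.calm and the order is ab-1234",
)
```

A transcript can have a low overall WER and still score poorly here, which is why both numbers are worth tracking.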

Real-world testing methodology

The only reliable way to evaluate APIs is through real-world testing with your audio. Here's an effective evaluation approach:

- Gather representative audio samples: Collect 10-20 examples of actual audio your system will process, including edge cases and challenging conditions.

- Create reference transcripts: Manually transcribe these samples, paying special attention to critical business terms.

- Test multiple APIs: Run your samples through your top 2-3 API choices using their free tiers or trials.

- Measure what matters: Calculate both overall WER and accuracy on your critical tokens.

- Evaluate the full experience: Consider integration complexity, documentation quality, and support responsiveness alongside accuracy.

Remember that benchmark scores on standard datasets don't predict performance on your specific use case. An API optimized for podcast transcription might struggle with customer service calls, despite impressive benchmark numbers.
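To compare providers across your sample set, one reasonable approach is to pool errors over all samples rather than averaging per-file scores, so that short clips don't dominate the result. The sketch below assumes you have already counted edit errors and critical-token hits per sample; `SampleResult`, `summarize`, and `rank_providers` are hypothetical names, and ranking by critical token accuracy first is a design choice, not a rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SampleResult:
    edit_errors: int      # substitutions + deletions + insertions for this sample
    reference_words: int  # words in the manual reference transcript
    critical_hits: int    # critical tokens transcribed correctly
    critical_total: int   # critical tokens present in the sample


def summarize(results: list[SampleResult]) -> dict[str, float]:
    """Pool counts across samples into corpus-level WER and critical token accuracy."""
    words = sum(r.reference_words for r in results)
    critical = sum(r.critical_total for r in results)
    if words == 0 or critical == 0:
        raise ValueError("need reference words and critical tokens to score")
    return {
        "wer": sum(r.edit_errors for r in results) / words,
        "critical_accuracy": sum(r.critical_hits for r in results) / critical,
    }


def rank_providers(results_by_provider: dict[str, list[SampleResult]]):
    """Sort providers by critical token accuracy (high first), then WER (low first)."""
    summaries = {name: summarize(rs) for name, rs in results_by_provider.items()}
    return sorted(
        summaries.items(),
        key=lambda item: (-item[1]["critical_accuracy"], item[1]["wer"]),
    )
```

With this ordering, a provider with a slightly higher WER can still rank first if it captures more of your business-critical tokens.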

What makes speech-to-text different for voice agents

Voice agent speech-to-text requires sub-300ms latency, intelligent endpointing, and real-time processing, capabilities that standard transcription APIs lack. Unlike batch transcription, where speed is a convenience, voice agents need near-instant responses to maintain conversational flow. Studies of human conversation show that the typical response time in dialogue is around 200ms.

The requirements extend beyond just speed. Voice agents must handle the messiness of natural conversation—interruptions, corrections, thinking pauses, and overlapping speech. A transcription API designed for recorded podcasts won't capture the dynamic nature of live interaction.

Key technical differences include:

- Real-time processing: Immediate transcription without buffering delays. The system must balance speed with accuracy, making decisions with limited future context.

- Intelligent endpointing: Understanding conversational pauses vs. completion. The system must distinguish between someone pausing to think and finishing their turn.

- Critical token accuracy: Perfect capture of business-critical information like emails and phone numbers. Errors on these tokens directly impact user experience.

- Immutable transcripts: No revision cycles that force agents to backtrack. Once words are spoken and processed, they shouldn't change.

The choice of API directly impacts whether your voice agent feels helpful and human or robotic and frustrating. Users judge voice agents within seconds—slow responses, misunderstood commands, or awkward interruptions immediately erode trust. This is a widespread issue, as a survey of builders found that 95% of respondents have been frustrated with voice agents at some point.

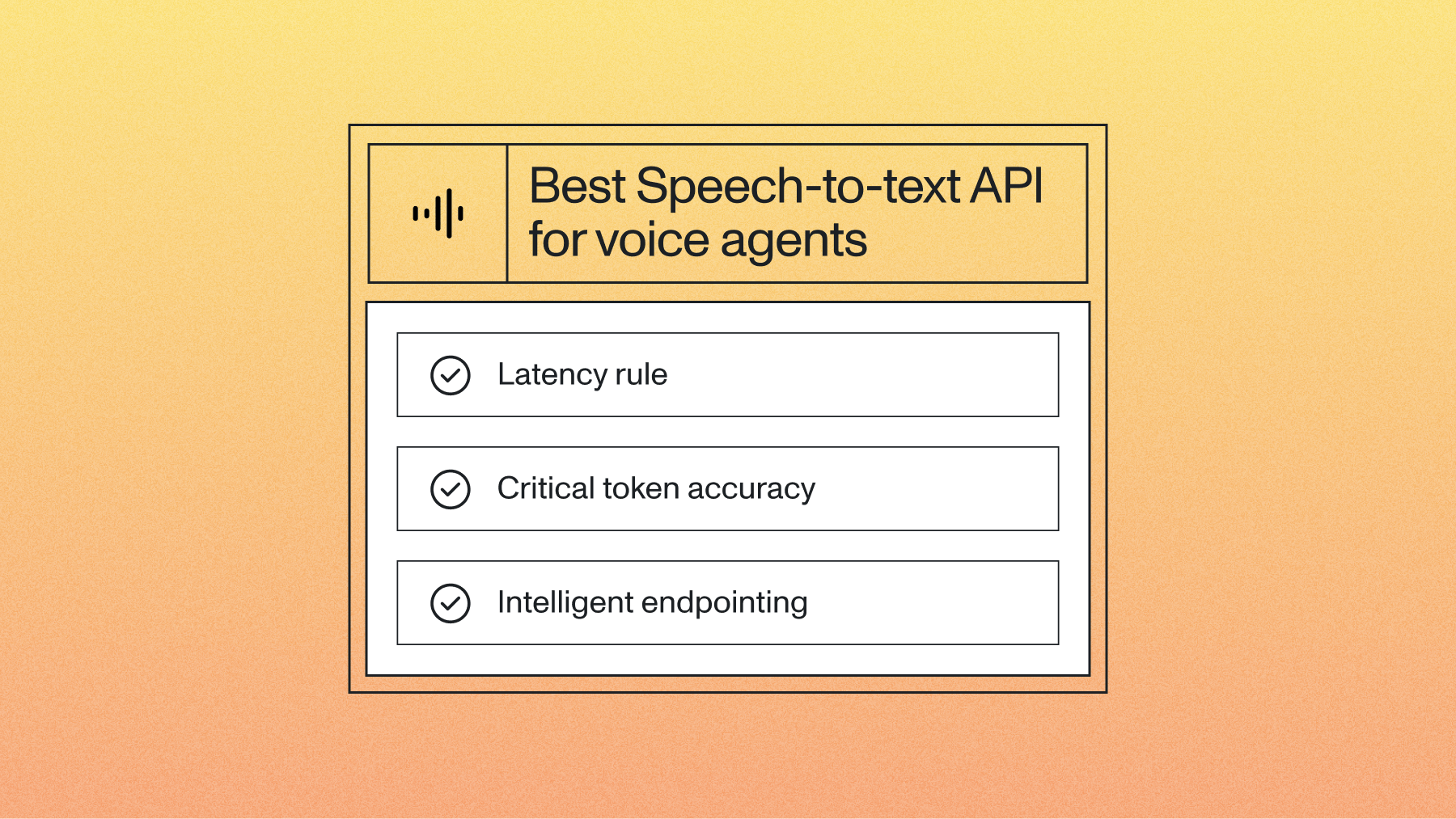

The voice agent speech-to-text core requirements

Voice agents have fundamentally different requirements than traditional transcription applications. Success depends on three non-negotiable technical foundations.

Latency rule: Demand sub-300ms response times

Humans typically respond in about 200ms in natural conversation, so anything over 300ms feels robotic and breaks the conversational flow. Research on conversational dynamics shows that faster response times directly correlate with feelings of enjoyment and social connection between speakers. This isn't just about processing speed; it's about end-to-end latency from speech input to actionable transcript.

The red flag here is APIs that only quote "processing time" without addressing end-to-end latency. Look for immutable transcripts that don't require revision cycles. When your speech-to-text API 'revises' transcripts after delivery, your voice agent has to backtrack and say 'actually, let me rephrase that.' For example, AssemblyAI's Universal-3-Pro streaming model provides immutable transcripts in ~300ms, eliminating these awkward moments entirely.
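To check end-to-end latency yourself rather than relying on a quoted processing time, you can log two timestamps per turn and look at percentiles instead of averages. This is a sketch under assumptions: `TurnTiming` and `latency_report` are hypothetical names, the 300ms default mirrors the budget discussed above, and where exactly you stamp "speech ended" depends on your audio pipeline.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TurnTiming:
    speech_ended_ms: float         # when the user stopped speaking
    transcript_received_ms: float  # when the final transcript reached your agent


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    if not values:
        raise ValueError("need at least one measurement")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def latency_report(turns: list[TurnTiming], budget_ms: float = 300.0) -> dict[str, float]:
    """Summarize end-to-end latency and how often it exceeds the budget."""
    latencies = [t.transcript_received_ms - t.speech_ended_ms for t in turns]
    over_budget = sum(1 for value in latencies if value > budget_ms)
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "over_budget_share": over_budget / len(latencies),
    }
```

The p95 figure matters more than the median here, since a handful of slow turns is often what users remember.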

Critical token accuracy: Test with your actual business data

Generic word error rates tell you nothing about voice agent performance. What matters is accuracy on the specific information your voice agent needs to capture and act upon.

Test what actually matters to your business: email addresses, phone numbers, product IDs, customer names. When your voice agent mishears 'john.smith@company.com' as 'johnsmith@company.calm,' you've lost a customer.

Demand high accuracy on these business-critical tokens in your specific industry context. Universal-3-Pro for streaming delivers state-of-the-art accuracy on entities like order numbers and IDs—a significant improvement when every mistake costs customer confidence. See the detailed performance benchmarks for complete accuracy analysis.

Intelligent endpointing: Move beyond basic silence detection

Basic Voice Activity Detection treats every pause like a conversation ending, but this is a flawed approach. According to conversational analysis, nearly a quarter of speech segments are self-continuations after a pause, not the end of a turn. Picture this: someone says 'My email is... john.smith@company.com' with natural hesitation, and your agent interrupts with 'How can I help you?' before they finish.

Look for endpointing that combines configurable silence thresholds with model confidence, going beyond basic VAD to reduce false turn-endings. Basic VAD fires on any pause regardless of context; a smarter system waits until the model is confident the utterance is complete before closing the turn.
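The decision logic described above can be sketched as a small function that combines a silence window with an end-of-turn confidence score. Everything here is an illustrative assumption rather than any provider's actual API: the names, the idea that the model exposes a confidence between 0 and 1, and the threshold values would all need to be adapted to the service you use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EndpointingConfig:
    # All values are hypothetical defaults for illustration only.
    min_silence_ms: int = 200          # never end a turn on a shorter pause
    max_silence_ms: int = 1500         # always end a turn after this much silence
    confidence_threshold: float = 0.7  # model confidence needed in between


def should_end_turn(
    silence_ms: int,
    end_of_turn_confidence: float,
    config: EndpointingConfig = EndpointingConfig(),
) -> bool:
    """Decide whether the speaker has finished, not just paused."""
    if silence_ms < config.min_silence_ms:
        return False  # too short to be anything but a breath or hesitation
    if silence_ms >= config.max_silence_ms:
        return True   # fallback so the agent never waits forever
    # Between the two bounds, defer to the model's view of the utterance.
    return end_of_turn_confidence >= config.confidence_threshold
```

With these defaults, a 600ms pause after "My email is..." with a low confidence score keeps the turn open, while the same pause after a complete sentence with high confidence closes it.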

Test this immediately with natural speech patterns. Have someone provide information with realistic hesitation, interruptions, and clarifications. Learn more about these common voice agent challenges and how modern solutions address them.

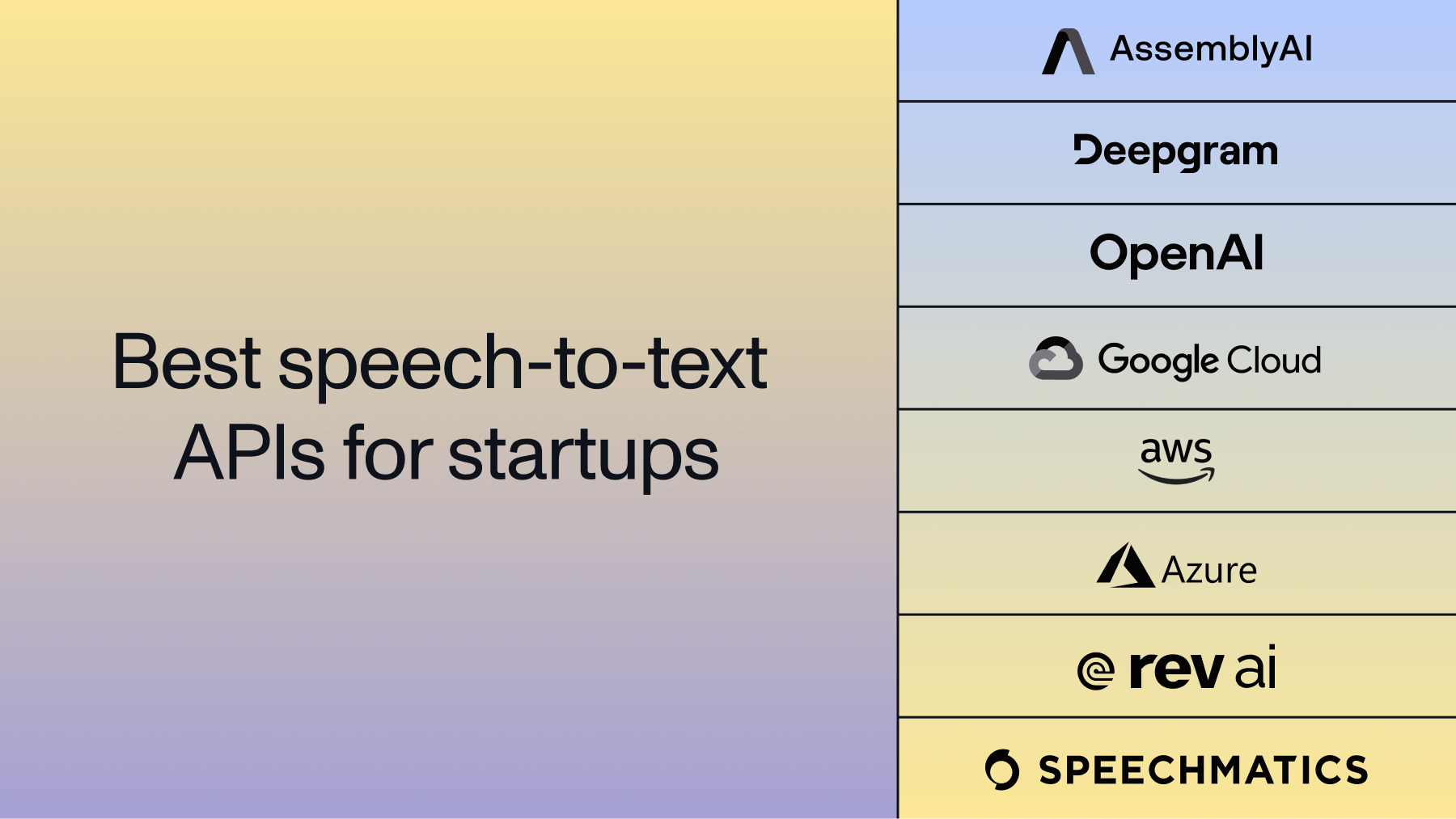

Top speech-to-text API providers comparison

The speech-to-text API landscape includes providers with different strengths, architectures, and ideal use cases. Understanding these differences helps you match capabilities to your specific requirements.

Voice agent-optimized providers

- AssemblyAI: Offers Universal-3-Pro for streaming, a purpose-built model with intelligent endpointing and state-of-the-art accuracy on critical tokens like emails and IDs. Designed specifically for real-time conversational applications.

- Deepgram: Speed-focused solution for some real-time applications.

General-purpose providers

- Google Cloud Speech-to-Text: Robust service with extensive language support and multiple model options. Requires configuration tuning for voice agent optimization.

- Microsoft Azure Speech Services: Comprehensive platform with strong enterprise integration. Best suited for organizations already invested in the Azure ecosystem.

- Amazon Transcribe: AWS-integrated service with solid accuracy and streaming capabilities. Natural choice for AWS-heavy infrastructures.

- OpenAI Whisper: Excellent accuracy for recorded audio with broad language support. Requires significant engineering for real-time streaming applications.

Integration and implementation considerations

Technical implementation determines project success more than underlying model quality. Three areas require careful evaluation: orchestration framework compatibility, API design quality, and scaling considerations.

Orchestration framework compatibility

Custom WebSocket implementations often cost significantly more in developer time than anticipated. In fact, a recent industry report found that 45% of teams building voice agents cite integration difficulty as a top challenge that extends timelines and inflates costs. The initial connection setup is straightforward, but handling connection drops, managing state, and implementing proper error recovery quickly becomes complex.
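To give a sense of what error recovery involves, here is a minimal sketch of reconnecting with exponential backoff and jitter. It is deliberately library-agnostic: `connect` is a hypothetical callable that opens your streaming session, and catching `ConnectionError` is an assumption, since the exception types raised on a dropped connection depend on the WebSocket client you use.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def backoff_delays(max_attempts: int, base_s: float = 0.5, cap_s: float = 8.0) -> list[float]:
    """Exponential backoff schedule: base, 2x, 4x, ... capped at cap_s."""
    return [min(cap_s, base_s * 2 ** attempt) for attempt in range(max_attempts)]


def connect_with_retry(
    connect: Callable[[], T],
    max_attempts: int = 5,
    sleep: Callable[[float], None] = time.sleep,
    jitter: Callable[[], float] = random.random,
) -> T:
    """Call connect() until it succeeds or max_attempts is exhausted."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt, delay in enumerate(backoff_delays(max_attempts), start=1):
        try:
            return connect()
        except ConnectionError as error:
            if attempt == max_attempts:
                raise ConnectionError(f"gave up after {max_attempts} attempts") from error
            # Scale the delay by a random factor in [0, 1) so many clients
            # reconnecting at once do not retry in lockstep.
            sleep(delay * jitter())
    raise AssertionError("unreachable")
```

A real integration also has to restore session state and decide what to do with audio captured while disconnected, which is where much of the hidden complexity lives.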

Pre-built integrations reduce development time from weeks to days. AssemblyAI provides step-by-step documentation for major orchestration frameworks like LiveKit Agents, Pipecat, and Vapi, offering battle-tested code that handles edge cases your team hasn't encountered yet.

Consider framework compatibility early in your selection process. If you're using Vapi for voice agent orchestration, choose a speech-to-text provider with native Vapi support.

API design quality: Evaluate the developer experience

The quality of the developer experience directly impacts your implementation timeline and long-term maintenance costs. Well-designed APIs make complex tasks simple, while poor APIs create ongoing frustration.

Green flags for good API design include:

- Comprehensive error handling with clear error messages

- Consistent response formats across endpoints

- Robust SDKs in multiple programming languages

- Clear connection state management for streaming

- Graceful degradation when network conditions change

Red flags that indicate poor developer experience:

- Sparse or outdated documentation

- Limited SDK support forcing raw API calls

- Unclear pricing for production loads

- Complex authentication mechanisms

- Inconsistent behavior across different endpoints

Can you establish a WebSocket connection, handle audio streaming, and process results with minimal code? The answer reveals whether you're dealing with a developer-focused API or an afterthought. For detailed technical guidance, review our streaming documentation.

Scaling considerations: Plan for success scenarios

Production deployments expose limitations that aren't apparent during prototyping. Understanding scaling constraints prevents painful migrations later.

Verify actual concurrent connection limits, not marketing claims. Some providers throttle connections aggressively once you exceed free tier limits, causing production failures during peak usage. Ask specific questions about concurrent WebSocket connections and what happens when you exceed limits.

Geographic distribution matters for latency. Ensure low latency for your user base locations, not just major US markets. A voice agent with 150ms latency in San Francisco but 800ms in Singapore will fail international expansion.

Cost scaling requires careful analysis. Session-based pricing (like AssemblyAI's per-hour streaming models) offers more predictable costs compared to complex per-minute models with hidden fees. For implementation best practices and scaling strategies, check our guide to getting started with real-time streaming transcription.

Pricing models and cost considerations

The price tag on an API is only one part of the total cost equation. Understanding different pricing models and hidden costs helps you budget accurately and avoid surprises at scale.

Common pricing models

Speech-to-text APIs typically use one of several pricing approaches:

- Per-minute/hour pricing: You pay for the amount of audio processed. Simple to understand and predict based on usage patterns.

- Per-request pricing: Charges per API call regardless of audio length. Can be cost-effective for short utterances but expensive for long recordings.

- Tiered pricing: Volume discounts at certain usage thresholds. Beneficial for high-volume applications but requires commitment.

- Subscription models: Fixed monthly cost for a certain usage allowance. Provides budget predictability but may include overage charges.

Most providers charge extra for advanced features. Speaker diarization, custom vocabulary, entity detection, and real-time streaming often come with additional fees that can significantly impact your total cost at scale.
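A quick way to compare these models is to run your expected workload through each one. The sketch below is a rough calculator under stated assumptions: every rate, fee, and allowance passed in is a made-up placeholder rather than any provider's actual price, and it ignores add-on fees for advanced features.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    requests_per_month: int
    avg_audio_seconds: float

    @property
    def minutes(self) -> float:
        return self.requests_per_month * self.avg_audio_seconds / 60


def per_minute_cost(workload: Workload, price_per_minute: float) -> float:
    """Pay for the amount of audio processed."""
    return workload.minutes * price_per_minute


def per_request_cost(workload: Workload, price_per_request: float) -> float:
    """Pay per API call regardless of audio length."""
    return workload.requests_per_month * price_per_request


def subscription_cost(
    workload: Workload,
    monthly_fee: float,
    included_minutes: float,
    overage_per_minute: float,
) -> float:
    """Fixed fee plus overage for minutes beyond the allowance."""
    overage = max(0.0, workload.minutes - included_minutes)
    return monthly_fee + overage * overage_per_minute


# Hypothetical voice agent workload: 10,000 short calls of 30 seconds each.
workload = Workload(requests_per_month=10_000, avg_audio_seconds=30)
```

Running the same workload with longer average audio shows how per-request pricing stays flat while per-minute pricing grows, which is the trade-off described in the list above.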

Hidden and indirect costs

Beyond direct API costs, consider the total cost of ownership:

- Integration and Development Time: A poorly documented or complex API can cost weeks of engineering effort. Developer time often exceeds API usage fees, especially in the early stages.

- Maintenance Overhead: How much ongoing work will be required to maintain the integration? Frequent API changes, poor reliability, or complex error handling create ongoing costs.

- Infrastructure Requirements: Some solutions require additional infrastructure for audio preprocessing, result storage, or connection management. These costs compound over time.

- The Cost of Inaccuracy: What happens when transcription errors occur? As recent research shows, accuracy failures directly correlate with user frustration, leading to consequences like a missed sale, compliance failure, or poor customer experience that costs far more than the API itself.

Evaluating total cost of ownership

When comparing providers, create a comprehensive cost model that combines direct API fees with the hidden and indirect costs described above.

Consider vendor stability and commitment to the space. A slightly more expensive provider that invests in continuous improvement and provides excellent support often delivers better value than the cheapest option. The cost of switching providers later far exceeds modest price differences.

Getting started with speech-to-text APIs

Moving from evaluation to implementation requires a structured approach. Here's how to successfully deploy speech-to-text APIs in your application.

Start with a focused proof of concept

Don't rely on generic demos or marketing materials. Create a proof of concept using your actual use case to validate both technical capabilities and business value.

Your proof of concept should:

- Use real audio from your application domain

- Test with your actual latency requirements

- Include your critical business vocabulary

- Measure accuracy on your specific metrics

- Evaluate the complete integration experience

Start small with one focused use case. Voice agents should begin with single conversation flows, while meeting transcription should start with one team's calls.

Prioritize based on constraints

Every project has constraints that should drive your technology choices:

- Timeline constraints: If you need to launch in 8 weeks, choose the solution with the best existing integrations and support, even if another option might be technically superior with more development time.

- Budget constraints: Consider total cost including development time, not just API pricing. A more expensive API with better documentation might be cheaper overall.

- Technical constraints: Your existing technology stack influences your options. If you're deeply invested in AWS, Amazon Transcribe might integrate more smoothly despite limitations.

- Compliance constraints: Healthcare applications need HIPAA compliance. Financial services require specific certifications. These requirements immediately narrow your options.

Our step-by-step voice agent tutorials can help you get started quickly with practical examples and best practices.

Implementation timeline expectations:

- Weeks 1-2: API evaluation and testing with real audio samples

- Weeks 3-4: Integration development and basic functionality testing

- Weeks 5-6: Production deployment with monitoring systems

- Weeks 7-8: Performance optimization and scaling preparation

Most organizations see initial results within 30 days, with full ROI realized within 6-12 months depending on use case complexity.

Plan for monitoring and optimization

Production deployment is the beginning, not the end. Successful applications continuously improve based on real usage data.

Essential monitoring includes:

- Accuracy metrics: Track WER and critical token accuracy over time

- Latency monitoring: Measure end-to-end response times, not just API latency

- Error rates: Monitor failed requests, timeouts, and retries

- User feedback: Collect qualitative feedback on transcription quality

- Cost tracking: Monitor usage patterns and cost per user or transaction

Build feedback loops into your application. When users correct transcriptions, capture those corrections to identify systematic errors. If certain audio conditions consistently cause problems, implement preprocessing or choose a different model.
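A feedback loop of this kind can start very simply: log each user correction as a pair and count which pairs keep recurring. The sketch below assumes corrections are captured as (transcribed, corrected) strings; the function name and the `min_count` threshold are hypothetical choices for illustration.

```python
from collections import Counter
from typing import Iterable


def systematic_errors(
    corrections: Iterable[tuple[str, str]],
    min_count: int = 3,
) -> list[tuple[tuple[str, str], int]]:
    """Return (transcribed, corrected) pairs seen at least min_count times."""
    counts = Counter(
        (heard.lower(), fixed.lower())
        for heard, fixed in corrections
        if heard.lower() != fixed.lower()  # ignore no-op corrections
    )
    # most_common() sorts by frequency, so the worst offenders come first.
    return [(pair, n) for pair, n in counts.most_common() if n >= min_count]
```

Pairs that surface repeatedly are good candidates for a keyterms list or for targeted testing with another model.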

Implementation checklist

Before going to production, verify these critical elements:

- ✅ Latency: End-to-end response time meets requirements

- ✅ Accuracy: Acceptable performance on business-critical tokens

- ✅ Reliability: Proper error handling and retry logic implemented

- ✅ Scalability: Tested at expected peak load

- ✅ Monitoring: Metrics and alerting in place

- ✅ Compliance: Security and regulatory requirements met

- ✅ Documentation: Integration documented for team knowledge transfer

The market continues evolving rapidly with improvements in accuracy, latency, and capabilities. Focus your evaluation on core requirements that won't change—the need for accurate, fast, and reliable transcription. Choose a provider committed to continuous improvement and you'll benefit from ongoing advances without changing your integration.

Ready to test speech-to-text for your specific requirements? Try our API for free and see how purpose-built models transform voice applications.

Frequently asked questions about speech-to-text APIs

What makes voice agent speech-to-text different from regular transcription?

Voice agents need sub-300ms real-time processing and intelligent endpointing for natural conversations, while regular transcription works offline without time pressure.

How do I test speech-to-text accuracy for my specific use case?

Test with real audio containing your business terminology and measure accuracy on critical terms like product names, IDs, and industry jargon rather than generic metrics.

What's the difference between batch and streaming speech-to-text APIs?

Batch APIs handle pre-recorded files for content like podcasts, while streaming APIs process live audio for real-time applications like voice agents and customer service.

Which speech-to-text providers offer the best integrations for developers?

Evaluate providers based on pre-built integrations for your use case, SDK quality, and documentation completeness.

How much do speech-to-text APIs typically cost?

Basic transcription costs fractions of a cent to several cents per minute, with advanced features like real-time streaming often costing extra.
