29 questions to ask when building AI voice agents

Don't launch an AI voice agent that fails in production. Use this checklist for speech-to-text, LLM, and orchestration questions to separate demos from real products.

AI voice agents look amazing in demos, but in production…sometimes, not so much.

Over 60% of AI agent implementations fail to meet performance expectations when deployed in real-world environments. The gap between controlled demos and actual customer interactions is massive, but it's not just about the technology itself. It’s about how it's implemented.

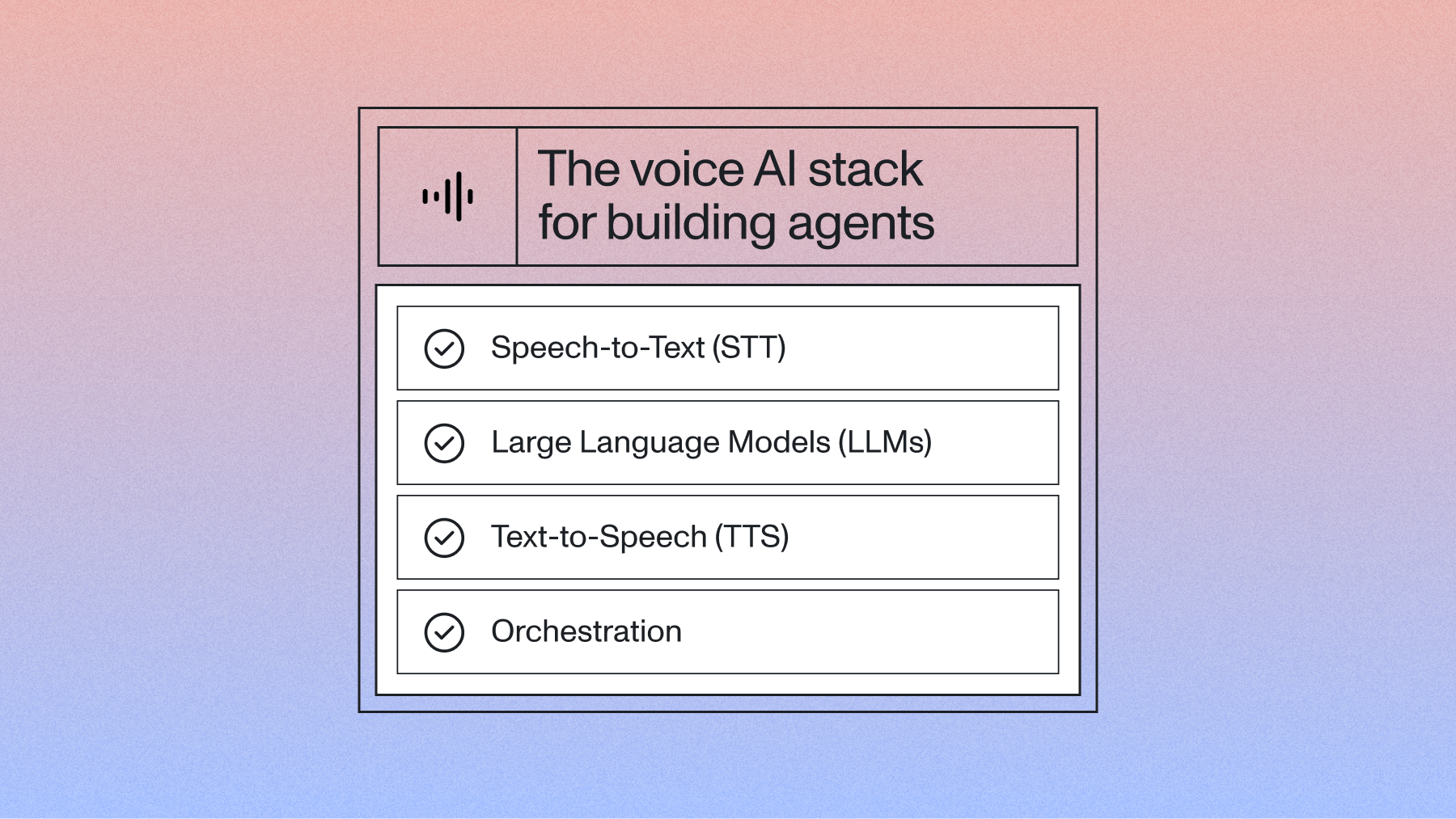

Building voice agents isn't about picking the shiniest new LLM or the trendiest orchestration platform. It's about making smart technical decisions across every layer of the stack:

- Streaming speech recognition that works in noisy environments

- Language models that don't hallucinate critical information

- Voice synthesis that sounds natural

- Orchestration that ties it all together without introducing conversation-killing latency

What separates voice agents that actually work from those that frustrate users is asking the right questions before you build (not after you deploy).

We've compiled must-answer technical questions across six categories that development teams should solve before launching a voice agent.

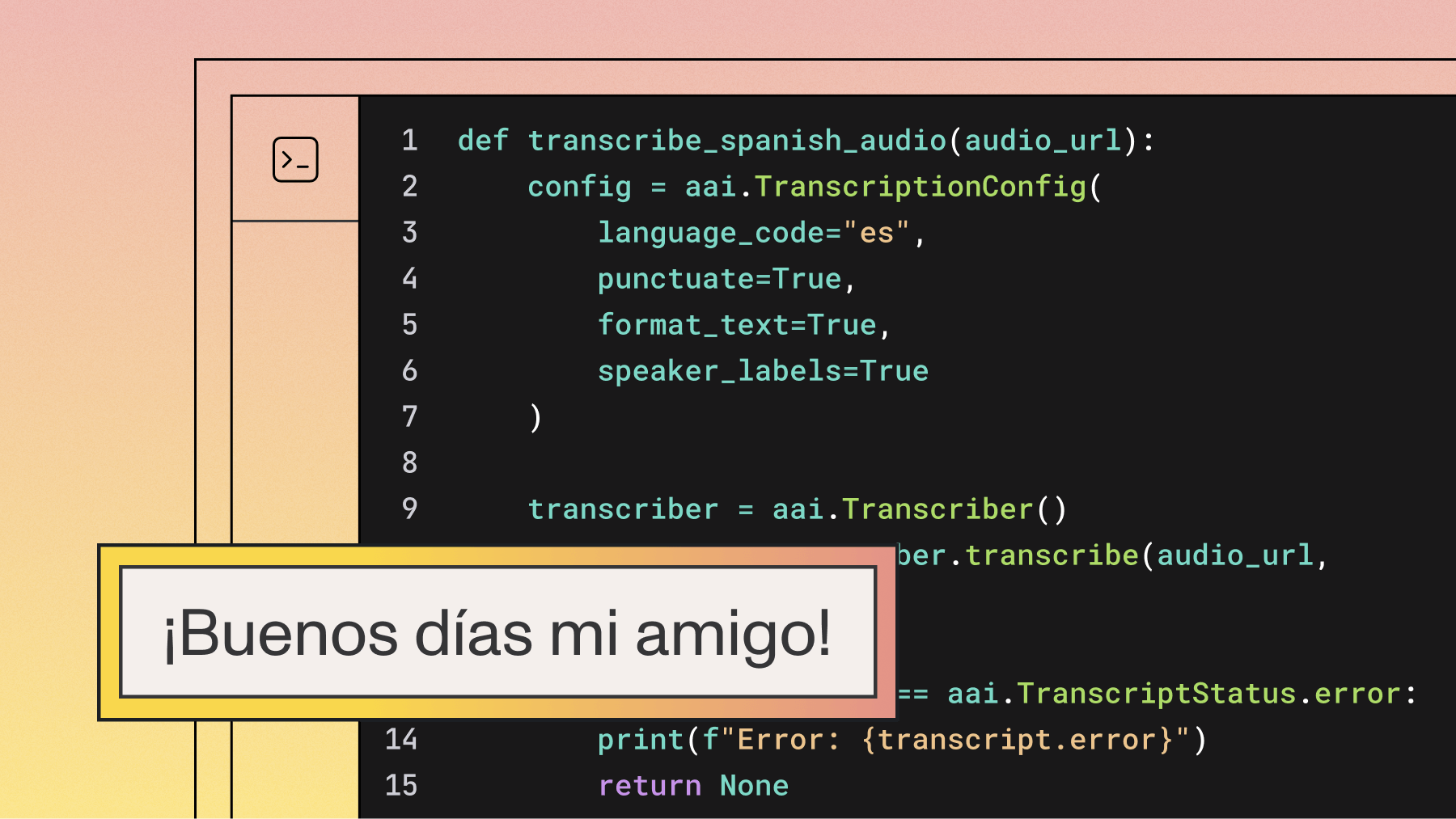

Speech-to-text foundation

Speech recognition forms the foundation of every voice interaction. Get it wrong, and nothing else matters.

Q: What streaming speech-to-text model are you using?

Not all real-time speech recognition models are created equal. Look beyond advertised word error rates and test with audio that actually matches your use case. Many teams find their chosen speech-to-text model performs dramatically worse outside perfectly clean test conditions.
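To compare models on your own recordings, you can compute word error rate yourself instead of relying on published figures. The sketch below is a minimal illustration that assumes you already have a reference transcript and a model transcript as plain strings. The function name `word_error_rate` is hypothetical, and real evaluations usually normalize punctuation and numbers more carefully than this does.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # prev[j] is the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)
```

Running this over a set of recordings from your actual environment (calls from cars, speakerphones, and so on) gives a number you can compare directly across candidate models.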

Q: How does your speech-to-text model handle noisy environments?

Background noise kills voice agent performance. Your users aren't calling from sound booths. They're in cars, coffee shops, and noisy offices. Test your streaming speech-to-text model with varying noise levels and types to confirm that it stays usable in challenging acoustic environments. You can also consider a noise-cancellation tool such as Krisp.

Q: What is your streaming speech-to-text latency in real-world conditions?

Your real-time speech-to-text component needs to balance accuracy with speed. Look for models that provide streaming capabilities with partial results to reduce perceived latency.

Q: How well does it handle accented speech?

Many speech-to-text models perform worse on non-native speakers and regional accents. This creates accessibility issues and frustrates users. Test your chosen model across different accents relevant to your user base—don't assume performance will be consistent.

Q: How accurately does your speech-to-text model handle domain-specific terminology?

Technical terms, product names, and industry jargon often trip up general speech-to-text models. If your voice agent needs to recognize specific vocabulary, evaluate models that allow custom vocabulary boosting or fine-tuning capabilities.

Q: Does your speech-to-text model provide confidence scores?

Smart voice agents need fallback strategies when they're unsure. Speech-to-text models that provide word-level confidence scores let you build more intelligent handling for low-confidence inputs—like asking for clarification rather than proceeding with incorrect information.
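As a rough sketch of that fallback logic, the snippet below decides whether to proceed or ask for clarification based on word-level confidence. The `Word` structure, the `plan_response` function, and the 0.6 threshold are all assumptions for illustration. Your speech-to-text provider's response format and a sensible threshold will differ.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Word:
    text: str
    confidence: float  # assumed to be in the range 0.0-1.0


def plan_response(words: List[Word], threshold: float = 0.6) -> Tuple[str, List[str]]:
    """Return ("clarify", uncertain_words) or ("proceed", [])."""
    uncertain = [w.text for w in words if w.confidence < threshold]
    if uncertain:
        # At least one word is below the threshold: ask the user to confirm it.
        return ("clarify", uncertain)
    return ("proceed", [])
```

For example, if the word "fifty" in "transfer fifty dollars" comes back with low confidence, the agent can confirm the amount before acting on it.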

LLM integration

The large language model interprets user intent, generates responses, and manages conversation flow. Your LLM selection and integration determines how intelligent your agent actually feels in practice.

Q: What LLMs are you using?

The LLM landscape keeps adding newer models, each with different trade-offs between capability, cost, and speed. It's tempting to choose the latest flagship model, but many voice applications perform better with specialized models optimized for dialogue. Choose the model that works best for your use case.

Q: What context window limitations affect your agent's conversational memory?

Context windows determine how much conversation history your LLM can "remember." Limited windows force you to summarize or discard earlier exchanges, potentially losing important context. Voice conversations typically generate 125-150 tokens per minute of dialogue, so calculate whether your chosen model's window can handle your expected conversation length. Consider implementing memory management strategies, or even a memory management tool, that prioritize keeping the most relevant information within the available context.
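The calculation can be sketched in a few lines. The example below estimates how many minutes of dialogue fit in a window, then shows one simple memory strategy (keep the most recent turns that fit). The function names are hypothetical, the whitespace-based token count is only a crude stand-in for your model's real tokenizer, and the 150 tokens-per-minute default is the upper end of the range quoted above.

```python
from typing import Callable, List


def minutes_until_full(context_window_tokens: int,
                       system_prompt_tokens: int,
                       reserved_for_reply: int,
                       tokens_per_minute: int = 150) -> float:
    """Estimate how many minutes of dialogue fit before history must be trimmed."""
    available = context_window_tokens - system_prompt_tokens - reserved_for_reply
    if available <= 0:
        return 0.0
    return available / tokens_per_minute


def trim_history(turns: List[str],
                 budget_tokens: int,
                 count_tokens: Callable[[str], int] = lambda t: len(t.split())) -> List[str]:
    """Keep the most recent turns whose combined token count fits the budget."""
    kept: List[str] = []
    used = 0
    for turn in reversed(turns):
        cost = count_tokens(turn)
        if used + cost > budget_tokens:
            break
        kept.append(turn)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

With an 8,000-token window, a 1,500-token system prompt, and 500 tokens reserved for the reply, `minutes_until_full(8000, 1500, 500)` gives 40 minutes. Dropping the oldest turns is the simplest policy; summarizing them instead preserves more context at the cost of an extra model call.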

Q: How are you balancing response quality with latency requirements?

Creating natural-sounding AI voice agents that customers are happy to interact with requires careful attention to the trade-offs between latency and accuracy. Latency is introduced at numerous steps in the agent workflow. Pay particular attention to the retrieval-augmented generation (RAG) step, and to the trade-off between model size, accuracy, and latency in the LLM and text-to-speech models you choose. The choice between VAD and a separate turn detection model also affects latency.

Q: What tool-calling capabilities does your LLM support for external integrations?

Voice agents typically need to access external systems like databases, APIs, or knowledge bases. Different LLMs handle this integration in different ways, from function calling to structured output formats. Think about how easily your chosen model connects with your existing systems and whether it can generate properly formatted requests consistently.

Q: How are you evaluating and mitigating hallucinations in production conversations?

LLM hallucinations can ruin voice agents, especially for customer service or information retrieval use cases. Add guardrails that validate critical information before presenting it to users. Consider using retrieval-augmented generation (RAG) to ground responses in verified data sources rather than relying solely on the model's parameters, and use an evaluation tool such as Coval.
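One very simple guardrail, shown here only as an illustration, is to check that every number in a drafted response also appears in the retrieved source passages before the agent speaks it. The function name `unsupported_numbers` is hypothetical, and exact string matching is a deliberately crude assumption: it will miss reformatted values ("250" vs. "250.00") and says nothing about non-numeric claims.

```python
import re
from typing import List

NUMBER = re.compile(r"\d+(?:\.\d+)?")


def unsupported_numbers(response: str, sources: List[str]) -> List[str]:
    """Return numbers in the response that do not appear in any source passage."""
    source_numbers = set(NUMBER.findall(" ".join(sources)))
    return [n for n in NUMBER.findall(response) if n not in source_numbers]
```

If the returned list is non-empty, the agent can regenerate the answer, fall back to a safer template, or escalate rather than state an unverified figure.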

Text-to-speech considerations

Your agent’s voice shapes how users perceive your entire system. Text-to-speech quality has advanced, but many of the finer details still separate natural-sounding agents from obviously robotic ones.

Q: What text-to-speech models are you using?

Modern text-to-speech options range from highly efficient but basic voices to neural models that capture human-like nuance. Evaluate voice selection: does it match your brand identity and user expectations? Teams sometimes discover that technical specs don't predict user reaction. Some technically "perfect" voices feel uncanny while less advanced ones might sound more approachable.

Q: Does your text-to-speech model speaking rate match human conversational patterns?

AI agents typically speak too fast. Data from Canonical shows they average about 15% faster than human conversation, which can make them harder to understand and increase cognitive load. The ideal speech rate varies by context. Explanations should be slower than confirmations. Consider implementing dynamic rate adjustment based on content complexity, and test comprehension with your target users.

Q: How does your text-to-speech model handle prosody, emotions, and emphasis?

Flat, monotone delivery makes even perfect content feel robotic. Modern text-to-speech systems offer markup languages such as SSML (Speech Synthesis Markup Language) to control emphasis, pauses, pitch, and other speech characteristics. The challenge is knowing when (and how) to apply these controls. Blindly adding emphasis tags can make things worse. Some organizations develop prosody rules based on content type, others use LLMs to generate appropriate SSML markup dynamically, and some offer accent localizations.
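A content-type prosody rule can be as small as the sketch below, which wraps text in SSML with a slower rate for explanations than for confirmations and an optional trailing pause. The `to_ssml` function and the `RATE_BY_CONTENT` mapping are assumptions for illustration. `speak`, `prosody`, and `break` are standard SSML elements, but support for specific attributes varies by text-to-speech provider, so check yours before relying on them.

```python
from xml.sax.saxutils import escape

# Hypothetical mapping from content type to an SSML prosody rate.
RATE_BY_CONTENT = {"explanation": "slow", "confirmation": "medium"}


def to_ssml(text: str, content_type: str = "confirmation", pause_ms: int = 0) -> str:
    """Wrap text in SSML with a rate chosen by content type and an optional pause."""
    rate = RATE_BY_CONTENT.get(content_type, "medium")
    pause = f'<break time="{pause_ms}ms"/>' if pause_ms > 0 else ""
    # escape() protects characters such as & and < that would break the markup.
    return f'<speak><prosody rate="{rate}">{escape(text)}</prosody>{pause}</speak>'
```

Keeping the rules in one mapping makes it easy to tune rates after comprehension testing without touching the rest of the pipeline.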

Q: What fallback strategies exist for text-to-speech failures or instabilities?

Text-to-speech engines occasionally fail or experience quality degradation. Smart implementations include fallback options:

- Alternative text-to-speech providers

- Pre-generated audio for common responses

- Degradation to text for digital channels

Without these fallbacks, a single text-to-speech issue can make your entire agent unusable. Monitor text-to-speech performance in production and implement automatic switching when quality thresholds aren't met.
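The fallback order listed above can be sketched as a single function: try each provider in turn, then pre-generated audio, then degrade to text. Everything here is hypothetical. Each provider is modeled as a plain callable that takes text and returns audio bytes, which is an assumption rather than any vendor's actual API.

```python
from typing import Callable, Dict, List, Optional, Tuple, Union

Synthesizer = Callable[[str], bytes]


def synthesize_with_fallback(
    text: str,
    providers: List[Synthesizer],
    cached_audio: Optional[Dict[str, bytes]] = None,
) -> Tuple[str, Union[bytes, str]]:
    """Return ("audio", bytes) if any option succeeds, else ("text", text)."""
    for synthesize in providers:
        try:
            audio = synthesize(text)
            if audio:  # treat empty output as a failure
                return ("audio", audio)
        except Exception:
            continue  # move on to the next provider
    if cached_audio and text in cached_audio:
        return ("audio", cached_audio[text])
    # Last resort: hand back text for channels that can display it.
    return ("text", text)
```

In production you would also log which branch was taken, since a rising fallback rate is itself a useful quality signal.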

Turn-taking and conversation flow

Natural conversation all comes down to turn-taking. Even the most natural-sounding voice still needs to manage when to speak, when to listen, and how to respond to interruptions.

Q: What turn detection approach are you using?

Turn detection, also referred to as intelligent endpointing, determines when a user has finished speaking and it's the agent's turn to respond:

- Simple Voice Activity Detection (VAD) works by detecting silence but fails with thinking pauses or background noise.

- Text pattern-based approaches wait for grammatically complete thoughts, which works better but adds latency.

- Audio classifier models can detect speech endpoints based on acoustic cues.

- LLM-integrated approaches use both text patterns and conversational context.
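To make the first option concrete, here is a minimal sketch of silence-based detection over per-frame audio energy. The function name, the energy threshold, and the frame count are all assumptions. Production VAD typically uses a trained model rather than a raw energy threshold.

```python
from typing import Optional, Sequence


def end_of_turn_frame(frame_energies: Sequence[float],
                      energy_threshold: float = 0.01,
                      silence_frames: int = 25) -> Optional[int]:
    """Return the frame index where trailing silence after speech is long
    enough to count as end of turn, or None if that never happens."""
    heard_speech = False
    silent_run = 0
    for i, energy in enumerate(frame_energies):
        if energy >= energy_threshold:
            heard_speech = True
            silent_run = 0  # any speech resets the silence counter
        elif heard_speech:
            silent_run += 1
            if silent_run >= silence_frames:
                return i
    return None
```

The weakness described above is visible in the parameters: a short `silence_frames` cuts users off during thinking pauses, while background noise above `energy_threshold` keeps resetting the counter so the turn never ends.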

AssemblyAI also built a first-of-its-kind turn detection model that uses both audio and text to predict end of turn with its new streaming model.

Q: How does your agent handle interruptions?

Users frequently interrupt AI agents. Sometimes, that’s to correct misunderstandings, and other times it’s out of impatience. Basic systems simply restart their response when interrupted, but that creates a frustrating loop. More advanced implementations can pause mid-response, process the interruption, and either resume or change course based on the new input.

Q: What endpointing strategy are you using?

Endpointing decides when to stop listening and start processing. Acoustic endpointing uses silence duration, which is simple but error-prone. Semantic endpointing waits for complete thoughts, which improves accuracy but increases latency. Hybrid approaches start with acoustic detection but apply semantic validation before finalizing.
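A hybrid strategy might look like the sketch below: a short silence opens the decision, a semantic check on the partial transcript confirms it, and a longer silence acts as a hard timeout. The trailing-word heuristic in `looks_complete`, the word list, and both silence thresholds are hypothetical placeholders. A real system would likely use a trained model for the semantic check.

```python
# Hypothetical list of words that suggest the speaker is mid-thought.
INCOMPLETE_ENDINGS = ("and", "but", "or", "so", "because", "um", "uh", "the", "a", "to", "my")


def looks_complete(partial_transcript: str) -> bool:
    """Crude semantic check: the utterance does not end on a dangling word."""
    words = partial_transcript.lower().rstrip(".?! ").split()
    return bool(words) and words[-1] not in INCOMPLETE_ENDINGS


def should_end_turn(silence_ms: int,
                    partial_transcript: str,
                    min_silence_ms: int = 300,
                    max_silence_ms: int = 1500) -> bool:
    """Acoustic gate first, semantic validation second, hard timeout last."""
    if silence_ms < min_silence_ms:
        return False  # not enough silence to consider ending the turn
    if silence_ms >= max_silence_ms:
        return True   # user has been quiet long enough regardless of wording
    return looks_complete(partial_transcript)
```

The two thresholds let you trade responsiveness against the risk of cutting users off: complete-sounding utterances end quickly, while dangling ones get extra time.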

Q: Have you explored using a specialized turn model?

Purpose-built turn models like Pipecat’s Smart Turn predict when a speaker has completed their turn and can outperform general-purpose approaches. These models learn subtle cues from conversation data and can dramatically reduce both false endpoints (cutting users off) and missed endpoints (awkward silences). Consider whether your use case justifies a specialized model or if you need to build custom detection for domain-specific conversation patterns.

Q: What mechanisms do you have for natural conversational tempo?

Human conversations have natural rhythm. We speed up and slow down, use "backchannel" acknowledgments like "mm-hmm," and adjust our pacing based on complexity. Basic voice agents maintain the same mechanical tempo regardless of content, and that’s what makes them feel artificial. Advanced systems adjust response speed, incorporate brief acknowledgments during longer user turns, and vary pause lengths based on conversational context.

Orchestration architecture

Orchestration connects real-time speech recognition, large language models, and voice synthesis into a working system. Your orchestration platform determines what's possible with your voice agent.

Q: Which orchestration platform are you using?

The orchestration landscape includes several options depending on your needs. Consider whether you need visual design tools for non-technical team members, API-first architecture for developer flexibility, or open-source foundations for complete control. Some developers also prefer the DIY approach and build their agent without the use of a platform.

Q: How are you handling complex real-time workflows?

Voice conversations don’t follow simple linear paths. Users ask follow-up questions, change topics mid-conversation, or request information the agent doesn't immediately have. Your orchestration needs to handle these complex flows while maintaining context and managing parallel processes like database lookups.

Q: What monitoring and analytics capabilities do you have for production calls?

At minimum, you need transcripts, completion rates, and escalation tracking. More advanced monitoring includes sentiment analysis, topic clustering, and automated quality scoring.

Q: How are you managing context across multi-turn conversations?

Users hate repeating information they've already provided. Your orchestration layer needs to track entities mentioned earlier (names, dates, account numbers) and understand references to previous exchanges. Some platforms handle this automatically, while others require explicit state management.
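When a platform leaves state management to you, explicit tracking can start as a small slot store like the sketch below. The `ConversationState` class and slot names are hypothetical. Extracting the entities from transcripts in the first place is out of scope here.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ConversationState:
    required_slots: List[str]
    slots: Dict[str, str] = field(default_factory=dict)

    def remember(self, name: str, value: str) -> None:
        """Store an entity the user has already provided."""
        self.slots[name] = value

    def missing(self) -> List[str]:
        """Slots the agent still needs to ask for, in their original order."""
        return [s for s in self.required_slots if s not in self.slots]
```

Before asking a question, the agent checks `missing()` so it only requests information the user has not already given.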

Infrastructure considerations

Your technical foundation determines whether your voice agent performs reliably in production or collapses under real-world conditions.

Q: What cloud infrastructure are you using for deployment?

Your chosen infrastructure impacts reliability, cost, and geographic performance. Evaluate compute options optimized for AI workloads rather than general-purpose VMs.

Q: How are you handling telephony integration?

Twilio, Vonage, Amazon Connect, and open-source alternatives provide different price points and capabilities. Match your telephony stack to your expected call volume and quality requirements.

Q: What are your reliability and redundancy strategies?

Voice agents need redundancy at every level: from multi-region deployment to fallback models if primary AI services fail. Design for graceful degradation rather than complete outages.

Q: How are you addressing security and compliance requirements?

Voice conversations sometimes contain sensitive information. Add appropriate encryption, data retention policies, and compliance controls based on your industry requirements.

Q: What observability tools are you using?

Comprehensive monitoring should include technical metrics (latency, error rates) and conversation quality indicators. Implement real-time alerting for critical failures that impact user experience.
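As an illustration of the technical side, the sketch below computes a nearest-rank percentile over response latencies and flags a window of calls that breaches a latency budget or error-rate ceiling. The function names and the default thresholds (1,000 ms at p95, 2% errors) are assumptions, not recommendations. Set them from your own baseline.

```python
import math
from typing import List, Sequence


def percentile(values: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile of a non-empty sequence."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def alert_reasons(latencies_ms: Sequence[float],
                  errors: int,
                  total_calls: int,
                  p95_budget_ms: float = 1000,
                  max_error_rate: float = 0.02) -> List[str]:
    """Return the list of breached thresholds; an empty list means no alert."""
    reasons = []
    if latencies_ms and percentile(latencies_ms, 95) > p95_budget_ms:
        reasons.append("p95 latency over budget")
    if total_calls > 0 and errors / total_calls > max_error_rate:
        reasons.append("error rate over threshold")
    return reasons
```

Tracking a high percentile rather than the average matters for voice: a handful of multi-second responses ruin conversations even when the mean looks healthy.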

From checklist to production-ready agents

AI voice technology keeps changing, but the fundamentals stay the same: understand what people are saying, process it intelligently, and respond naturally, all within latency constraints that maintain conversation flow.

AssemblyAI's streaming speech recognition API lets you build reliable voice agents with industry-leading accuracy and sub-second latency. Try it for yourself for free.
