This guide compares the top real-time transcription APIs and services available in 2026, evaluating each platform's accuracy, latency, pricing, and key features to help you choose the right solution for your Voice AI application. We'll cover everything from technical implementation details to specific use cases, giving you the practical insights needed to integrate real-time speech-to-text into your product.

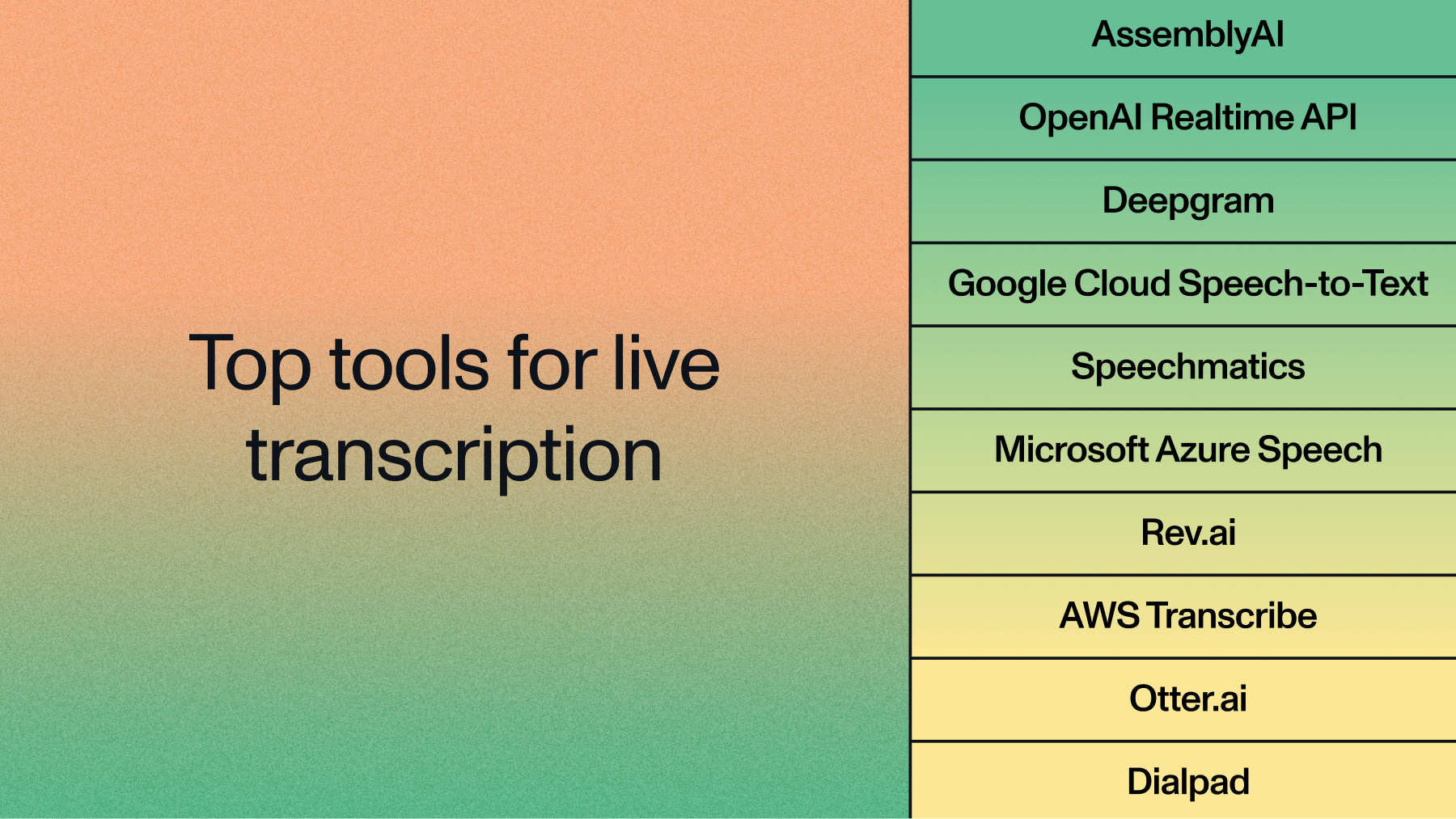

Real-time transcription tools at a glance

Real-time transcription converts live audio streams into text instantly as people speak. This technology processes audio in small chunks and returns text results with minimal delay, typically under one second.

| Tool | Accuracy | Latency | Languages | Key Features | Pricing | Best For |
| --- | --- | --- | --- | --- | --- | --- |
| AssemblyAI | High | ~300ms | 6 | Speaker diarization, word timestamps, unlimited streams | $0.15/hour | Production Voice AI apps |
| OpenAI Realtime API | High | 300-500ms | 99 | GPT integration, function calling | $0.06/minute | Conversational AI |
| Deepgram | Good | 250ms | 36 | Nova-2 models, custom models | $0.0125/minute | High-volume transcription |
| Google Cloud Speech-to-Text | Good | 300ms | 125+ | GCP integration, auto punctuation | $0.024/minute | Google ecosystem apps |
| Speechmatics | Good | 400ms | 50 | Custom dictionary, translation | Custom pricing | Enterprise deployments |
| Microsoft Azure Speech | Fair | 300-400ms | 140+ | Azure integration, custom speech | $1/hour | Microsoft-centric orgs |
| Rev.ai | Fair | 500ms | 36 | Human review option | $0.035/minute | Hybrid accuracy needs |
| AWS Transcribe | Fair | 300-500ms | 31 | AWS integration, medical vocab | $0.024/minute | AWS infrastructure |
| Otter.ai | Good | 1-2s | English only | Meeting summaries, collaboration | $16.99/month | Team meetings |
| Dialpad | Fair | 500ms-1s | 5 | Built-in telephony, coaching | $95/user/month | Contact centers |
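
The table mixes per-minute and per-hour pricing, which makes the usage-based options hard to compare directly. The sketch below converts the listed prices to a common per-hour figure. It only restates the numbers from the table; the function and constant names are illustrative. Subscription and custom-priced tools (Speechmatics, Otter.ai, Dialpad) are left out because they are not billed per unit of audio, and the OpenAI figure excludes the additional GPT model usage costs.

```python
# Usage-based list prices copied from the comparison table above.
# Each entry is (amount in USD, billing unit).
PRICES = {
    "AssemblyAI": (0.15, "hour"),
    "OpenAI Realtime API": (0.06, "minute"),
    "Deepgram": (0.0125, "minute"),
    "Google Cloud Speech-to-Text": (0.024, "minute"),
    "Microsoft Azure Speech": (1.00, "hour"),
    "Rev.ai": (0.035, "minute"),
    "AWS Transcribe": (0.024, "minute"),
}

MINUTES_PER_HOUR = 60


def price_per_hour(amount: float, unit: str) -> float:
    """Convert a per-minute or per-hour price to USD per hour of audio."""
    if unit == "hour":
        return round(amount, 4)
    if unit == "minute":
        return round(amount * MINUTES_PER_HOUR, 4)
    raise ValueError(f"unknown billing unit: {unit}")


def hourly_prices() -> dict[str, float]:
    """Return each tool's price per hour of audio, cheapest first."""
    hourly = {name: price_per_hour(*price) for name, price in PRICES.items()}
    return dict(sorted(hourly.items(), key=lambda item: item[1]))


if __name__ == "__main__":
    for name, usd in hourly_prices().items():
        print(f"{name:30s} ${usd:.2f}/hour")
```

List prices are only a starting point: volume discounts, model tiers, and add-on features can change the effective rate.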

What is real-time transcription?

Real-time transcription is the process of converting spoken audio into written text as it's being spoken. Unlike batch transcription that processes complete audio files, real-time systems process audio in small chunks and return partial results that update as more context becomes available.

The technology relies on streaming protocols like WebSocket connections to maintain persistent communication between your application and the transcription service. Audio data flows continuously to the server while transcription results stream back, creating a live text feed that appears almost simultaneously with speech.

Key technical components include:

- Streaming architecture: WebSocket connections enable continuous data flow without repeated HTTP requests

- Audio chunking: Raw audio splits into small segments for immediate processing

- Partial transcripts: Preliminary results appear quickly and refine as the AI model gains more context

- Endpointing detection: The system identifies when speakers pause or finish speaking

Real-time transcription typically achieves latencies between 200 and 500 milliseconds. Accuracy often runs slightly lower than in batch processing because the model has less context to work with, but modern AI models have narrowed this gap significantly.
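
To make endpointing detection concrete, here is a deliberately simplified sketch that flags an endpoint once a run of quiet audio frames follows speech. It assumes 16-bit little-endian mono PCM frames. The class name, the amplitude threshold, and the frame count are illustrative assumptions, and production services may well use more sophisticated, model-based detection.

```python
import math
import struct


def frame_rms(frame: bytes) -> float:
    """Root-mean-square amplitude of a frame of 16-bit little-endian mono PCM."""
    count = len(frame) // 2
    if count == 0:
        return 0.0
    samples = struct.unpack(f"<{count}h", frame[: count * 2])
    return math.sqrt(sum(s * s for s in samples) / count)


class EnergyEndpointer:
    """Signals an endpoint after enough consecutive quiet frames follow speech."""

    def __init__(self, silence_threshold: float = 500.0, silence_frames: int = 5):
        # Both defaults are assumptions; tune them for your microphone and frame size.
        self.silence_threshold = silence_threshold
        self.silence_frames = silence_frames  # e.g. 5 frames of 100 ms = 500 ms
        self._quiet_run = 0
        self._heard_speech = False

    def push(self, frame: bytes) -> bool:
        """Feed one frame; return True when the speaker appears to have finished."""
        if frame_rms(frame) >= self.silence_threshold:
            self._heard_speech = True
            self._quiet_run = 0
            return False
        if not self._heard_speech:
            return False  # leading silence is not an endpoint
        self._quiet_run += 1
        if self._quiet_run >= self.silence_frames:
            # Reset so the next utterance is detected independently.
            self._heard_speech = False
            self._quiet_run = 0
            return True
        return False
```

A longer silence window reduces false endpoints during natural pauses but delays the moment an utterance is considered complete.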

Key features to look for in real-time transcription software

Choosing the right real-time transcription solution requires evaluating several critical capabilities that directly impact your application's performance and user experience.

Low latency: Sub-second processing times determine whether your transcription feels truly "real-time" to users. Look for services offering consistent latencies under 500ms, with some achieving sub-200ms for premium experiences.

High accuracy: Word error rate (WER), the fraction of words a service gets wrong relative to a reference transcript, is the standard measure of transcription accuracy, and leading services achieve a low WER on clear audio. Performance varies significantly across accents, background noise levels, and technical vocabulary, so test with audio from your actual use case.

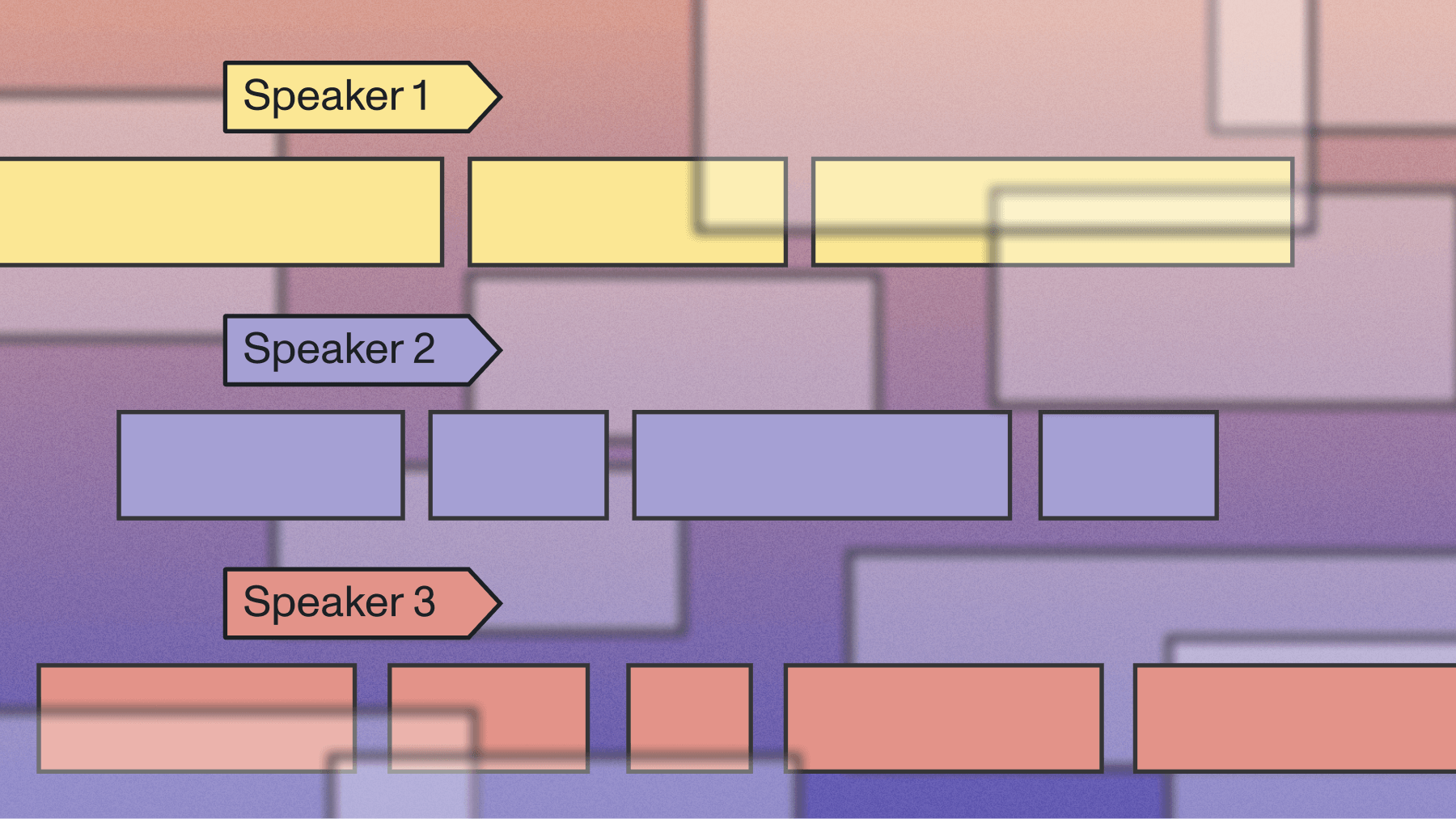

Speaker diarization: Identifying and labeling different speakers transforms a wall of text into a readable conversation. Advanced systems can handle overlapping speech and maintain speaker labels even when participants rejoin after disconnecting.

Language support: While English dominates most offerings, global applications need broader coverage. Consider not just the number of languages but also dialect support and code-switching capabilities for multilingual conversations.

Scalability and reliability: Production applications need consistent performance whether handling one stream or thousands. Check for explicit concurrency limits, uptime guarantees, and how the service handles traffic spikes.

Advanced features separate professional-grade solutions from basic offerings:

- Custom vocabulary: Add industry-specific terms, product names, and acronyms

- Confidence scores: Understand transcription certainty for each word

- Punctuation and formatting: Automatic capitalization, punctuation, and number formatting

- Word-level timestamps: Precise timing for each word enables subtitle generation
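
When comparing services on your own audio, accuracy is usually scored as word error rate: the number of word substitutions, deletions, and insertions needed to turn the service's output into a reference transcript, divided by the number of reference words. The sketch below computes it with a standard word-level edit distance. It lowercases both inputs but does not strip punctuation or normalize numbers, which a real evaluation would likely need to handle.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # previous[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


if __name__ == "__main__":
    print(word_error_rate("turn on the lights", "turn off the light"))
```

Because insertions count as errors, WER can exceed 1.0 when the hypothesis is much longer than the reference.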

Common use cases for real-time transcription

Real-time transcription powers diverse applications across industries, each with unique requirements for accuracy, latency, and features.

Live meeting transcription: Virtual meetings on Zoom, Teams, and Google Meet benefit from instant transcription for note-taking and accessibility. Participants can follow along in real-time, search past discussions, and generate automated summaries.

Broadcast captioning: Television networks, streaming platforms, and live event organizers use real-time transcription for closed captions. These applications demand ultra-low latency to synchronize with video feeds and meet accessibility regulations.

Contact center analytics: Real-time transcription enables immediate agent coaching, compliance monitoring, and sentiment analysis during customer calls. Supervisors can monitor conversations, receive alerts for specific keywords, and intervene when necessary.

Accessibility services: Real-time captions help deaf and hard-of-hearing individuals participate in conversations, lectures, and events. Educational institutions increasingly rely on automated transcription to meet accessibility requirements while managing costs.

Voice assistants and conversational AI: Chatbots and virtual assistants need instant transcription to understand user requests and respond naturally. The transcription feeds into natural language processing pipelines that determine intent and generate appropriate responses.

Compliance and documentation: Financial services, healthcare, and legal organizations transcribe conversations for regulatory compliance and record-keeping. These use cases prioritize accuracy and security over pure speed, often requiring specialized vocabulary support.

Top 10 real-time transcription tools

1. AssemblyAI

AssemblyAI's real-time transcription API delivers industry-leading accuracy through its Universal-Streaming model. The streaming API maintains ~300ms latency while processing audio through WebSocket connections, making it ideal for production Voice AI applications requiring both speed and precision.

The platform excels at developer experience with comprehensive SDKs for Python, JavaScript, Ruby, and other major languages. Features like automatic speaker diarization, word-level timestamps, and confidence scores come standard without additional configuration.

AssemblyAI's infrastructure handles millions of hours monthly with high uptime guarantees, ensuring reliability for mission-critical applications. The service supports 6 languages (English, Spanish, French, German, Italian, and Portuguese) for real-time streaming, with broader language support available for asynchronous transcription.

Pricing: Pay-as-you-go starts at $0.15 per hour with volume discounts available. No upfront commitments or contracts required, with unlimited concurrent streams included at every tier.

2. OpenAI Realtime API

OpenAI's Realtime API combines Whisper's transcription capabilities with GPT-4's language understanding for sophisticated voice applications. The service enables function calling and tool use directly from voice input, allowing developers to build conversational AI that can take actions based on spoken commands.

Latency typically ranges from 300 to 500ms; what you get in exchange is access to OpenAI's broader AI ecosystem. The API supports 99 languages and includes automatic language detection, making it suitable for global applications.

The integration with GPT models allows for immediate processing of transcribed text, enabling applications that can understand context and respond intelligently to voice commands.

Pricing: $0.06 per minute for audio input, with additional costs for GPT model usage.

3. Deepgram

Deepgram's Nova-2 models focus on speed and cost-efficiency for high-volume transcription needs. The platform achieves 250ms average latency with good accuracy on standard audio, positioning itself as the budget-friendly option for applications prioritizing throughput over maximum accuracy.

Custom model training allows organizations to improve accuracy for specific domains or acoustic environments. Deepgram also offers on-premises deployment for organizations with strict data residency requirements.

The platform includes features like keyword detection, topic modeling, and sentiment analysis built into the transcription pipeline. Their streaming API supports multiple audio formats and provides detailed confidence scores for each transcribed word.

Pricing: $0.0125 per minute for streaming transcription, with volume discounts starting at high usage levels.

4. Google Cloud Speech-to-Text

Google Cloud Speech-to-Text's streaming recognition API integrates seamlessly with the broader Google Cloud Platform ecosystem. Supporting over 125 languages and variants, it's particularly strong for international applications requiring broad language coverage.

The service includes automatic punctuation, profanity filtering, and speaker diarization as built-in features. Integration with other GCP services like Translation API and Natural Language API enables sophisticated multilingual applications.

Google's platform offers both standard and enhanced models, with enhanced models providing better accuracy for challenging audio conditions. The service includes automatic language detection and can handle code-switching between languages within the same audio stream.

Pricing: $0.024 per minute for standard models, with enhanced models at higher pricing tiers.

5. Speechmatics

Speechmatics offers real-time transcription in 50 languages with a focus on accuracy across accents and dialects. Their self-supervised learning approach aims to reduce bias and improve performance on underrepresented languages.

The platform includes custom dictionary support and real-time translation capabilities. Enterprise features include on-premises deployment and dedicated infrastructure options for organizations with specific security requirements.

Speechmatics provides detailed analytics on transcription performance, including confidence scores, speaker identification, and audio quality metrics. Their API supports both streaming and batch processing modes.

Pricing: Custom pricing based on volume and deployment model.

6. Microsoft Azure Speech Services

Azure Speech Services provides tight integration with Microsoft's ecosystem, including Teams, Office, and Dynamics. The service supports 140+ languages and includes features like custom speech models and pronunciation assessment.

Real-time transcription combines with Azure Cognitive Services for sentiment analysis, translation, and speaker identification. The platform's strength lies in enterprise scenarios already invested in Microsoft infrastructure.

Azure also offers standard and neural voice models for speech synthesis (text-to-speech), with neural models producing more natural-sounding speech. Custom neural voices can be trained for specific use cases or brand voices, but these are separate from the transcription service.

Pricing: $1 per hour for standard transcription, with custom neural voice models available at higher tiers.

7. Rev.ai

Rev.ai brings human transcription expertise to automated systems, offering both pure AI and human-reviewed options. Their streaming API achieves good accuracy with 500ms latency, with the option to route difficult audio to human transcribers.

The hybrid approach works well for applications needing guaranteed accuracy on challenging audio. Rev.ai also provides post-processing services like summarization and topic extraction.

The platform includes features like custom vocabulary, speaker identification, and sentiment analysis. Their API provides detailed metadata including confidence scores, word-level timestamps, and speaker labels.

Pricing: $0.035 per minute for automated transcription, with human transcription available at premium pricing.

8. AWS Transcribe

Amazon Transcribe Streaming integrates naturally with AWS infrastructure, making it the default choice for applications already running on AWS. The service includes specialized vocabularies for medical and legal domains.

Features like automatic content redaction and speaker identification come standard. The service scales automatically with AWS infrastructure, though accuracy typically varies based on audio quality and use case.

AWS Transcribe supports both real-time streaming and batch processing, with the streaming API providing partial results that update as more audio is processed. The service also includes built-in profanity filtering.

Pricing: $0.024 per minute for standard streaming, with medical transcription at higher pricing.

9. Otter.ai

Otter.ai focuses specifically on meeting transcription with features like automated summaries, action item extraction, and collaborative editing. While latency runs higher at 1-2 seconds, the platform excels at post-meeting workflows.

The service integrates with Zoom, Google Meet, and Microsoft Teams for automatic recording and transcription. Otter's strength lies in its consumer-friendly interface and collaboration features rather than raw API capabilities.

Beyond summaries and action items, the platform offers keyword highlighting. Users can edit transcripts collaboratively and share meeting notes with team members.

Pricing: $16.99 per user per month for the Pro plan, with monthly minute allowances.

10. Dialpad

Dialpad embeds real-time transcription within its business phone system, focusing on contact center use cases. The platform includes real-time coaching, sentiment analysis, and automated call summaries.

While not available as a standalone API, Dialpad's integrated approach simplifies deployment for organizations needing both telephony and transcription. The platform handles call recording, transcription, and analytics in one solution.

The service provides real-time agent assistance with suggested responses, compliance monitoring, and automatic call scoring. Integration with CRM systems enables automatic logging of call transcripts and outcomes.

Pricing: $95 per user per month for AI-powered plans including transcription.

Getting started with real-time transcription

Implementing real-time transcription typically requires WebSocket streaming for the lowest latency. While some providers offer alternative streaming methods, WebSocket connections maintain persistent bidirectional communication, which delivers the best performance for real-time applications.

Setting up your connection:

- Generate API keys from your provider's dashboard

- Configure authentication headers or connection parameters

- Establish a secure WebSocket connection with proper error handling

- Implement automatic reconnection logic for network interruptions

Audio configuration matters significantly for transcription quality:

- Use a 16kHz sample rate for an optimal balance of quality and bandwidth

- Choose PCM or Opus encoding based on your bandwidth constraints

- Send audio chunks every 100-250ms for smooth streaming

- Include voice activity detection to reduce unnecessary processing
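
The chunk sizes implied by these settings are simple arithmetic. As a rough sketch, assuming 16-bit mono PCM at 16kHz (the helper names are illustrative), a 100ms chunk is 16,000 samples/s × 2 bytes × 0.1s = 3,200 bytes:

```python
from typing import Iterator

SAMPLE_RATE_HZ = 16_000  # recommended sample rate from the list above
BYTES_PER_SAMPLE = 2     # 16-bit PCM
CHANNELS = 1             # mono is an assumption; adjust for your input


def chunk_size_bytes(chunk_ms: int) -> int:
    """Number of PCM bytes that hold chunk_ms milliseconds of audio."""
    return SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * CHANNELS * chunk_ms // 1000


def iter_chunks(pcm: bytes, chunk_ms: int = 100) -> Iterator[bytes]:
    """Split raw PCM into fixed-duration chunks; the last one may be shorter."""
    size = chunk_size_bytes(chunk_ms)
    for start in range(0, len(pcm), size):
        yield pcm[start:start + size]


if __name__ == "__main__":
    print(chunk_size_bytes(100))  # bytes in a 100 ms chunk
    print(chunk_size_bytes(250))  # bytes in a 250 ms chunk
```

Smaller chunks reduce the delay before audio reaches the server at the cost of more messages per second; larger chunks do the opposite.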

Your application needs robust response handling to manage the continuous stream of transcription results. Partial transcripts arrive frequently and update as the AI model processes more context. Store both partial and final transcripts, using the final versions for permanent records while displaying partials for immediate feedback.
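
One way to structure this is a small buffer that keeps finalized text separately from the latest in-flight partial. The message shape used here, a dict with `type` and `text` keys, is hypothetical; each provider defines its own result format, so the parsing would need to be adapted.

```python
from dataclasses import dataclass, field


@dataclass
class TranscriptBuffer:
    """Keeps finalized segments and the most recent partial separately."""

    finals: list[str] = field(default_factory=list)
    partial: str = ""

    def handle(self, message: dict) -> None:
        """Apply one result message. The {"type", "text"} shape is hypothetical."""
        text = message.get("text", "").strip()
        kind = message.get("type")
        if kind == "final":
            if text:
                self.finals.append(text)
            self.partial = ""  # the final supersedes the in-flight partial
        elif kind == "partial":
            self.partial = text  # each partial replaces the previous one

    def display_text(self) -> str:
        """Text for live display: finalized segments plus the current partial."""
        parts = self.finals + ([self.partial] if self.partial else [])
        return " ".join(parts)

    def permanent_record(self) -> str:
        """Text for storage: finalized segments only."""
        return " ".join(self.finals)
```

Keeping the two views separate means the interface can update on every partial without unstable text leaking into stored records.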

Production deployments require careful attention to error handling and retry logic. Network interruptions, rate limits, and service outages can disrupt streams. Implement exponential backoff for reconnection attempts and maintain audio buffers to prevent data loss during brief disconnections.
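
A minimal sketch of exponential backoff for reconnection follows. The `connect` callable stands in for whatever function opens your provider's stream, and the base delay, cap, attempt count, and jitter are assumed values rather than provider recommendations.

```python
import random
import time
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")


def backoff_delays(base: float = 0.5, factor: float = 2.0,
                   cap: float = 30.0, attempts: int = 6) -> Iterator[float]:
    """Yield delays base, base*factor, base*factor**2, ... capped at cap seconds."""
    delay = base
    for _ in range(attempts):
        yield min(delay, cap)
        delay *= factor


def connect_with_backoff(connect: Callable[[], T], delays: Iterable[float],
                         sleep: Callable[[float], None] = time.sleep,
                         jitter: float = 0.1) -> T:
    """Call connect(), retrying after each delay; re-raise once delays run out."""
    schedule = list(delays)
    for attempt in range(len(schedule) + 1):
        try:
            return connect()
        except ConnectionError:
            if attempt == len(schedule):
                raise  # out of retries: surface the last error to the caller
            delay = schedule[attempt]
            # Random jitter keeps many clients from reconnecting in lockstep.
            sleep(delay + random.uniform(0, jitter * delay))
    raise RuntimeError("unreachable")
```

A call such as `connect_with_backoff(open_stream, backoff_delays())`, where `open_stream` is your own connection function, would retry up to six times before giving up. Audio captured while disconnected still needs to be buffered separately and replayed once the stream is back.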

Frequently asked questions

How accurate is real-time transcription compared to batch processing?

Real-time transcription typically achieves slightly lower accuracy than batch processing due to limited context. While batch processing can analyze entire audio files for context, real-time systems must transcribe with only preceding audio, though modern AI models have significantly narrowed this gap.

What's the typical latency for real-time transcription APIs?

Most real-time transcription APIs deliver latencies between 200 and 500 milliseconds, measured from when audio is sent to when the transcription returns. Premium services achieve sub-200ms latency, while budget options may see delays of 500ms to 2 seconds.

How many concurrent streams can I run with real-time transcription?

Concurrent stream limits vary dramatically between providers: some offer unlimited concurrent streams, while others cap the number of simultaneous connections. Check each provider's specific rate limits and consider load balancing across multiple API keys for high-volume applications.

What audio formats are supported for real-time transcription?

Most services support PCM (raw audio) and Opus compression for streaming, typically requiring 16kHz sample rate and 16-bit depth. Some providers accept 8kHz for telephony or 48kHz for broadcast quality, but 16kHz remains the standard for optimal accuracy and efficiency.

How does real-time transcription handle background noise and multiple speakers?

Modern AI models use noise suppression and speaker diarization to handle challenging audio conditions. Performance degrades with excessive background noise, but techniques like beamforming microphones and acoustic echo cancellation can significantly improve results in noisy environments.
