Speech-to-Text

The industry's best products need the industry's best models. Build on speech-to-text models that lead the way in accuracy and quality, accessible through a simple API.

Speech-to-text quality that speaks for itself.

Our Universal-3 Pro model sets the standard for accuracy and performance on the details that define speech-to-text quality.

Welcome to another edition of Traveler TV. Today we're at the Arthur Ravenel Jr. Bridge, located here. It opened in 2005 and is currently the longest cable-stayed bridge in the Western Hemisphere. The design features two diamond-shaped towers that span the Cooper River and connect downtown Charleston with Mount Pleasant. The bicycle or pedestrian paths provide unparalleled views of the harbor and is the perfect spot to catch a sunrise or sunset. To walk or bike the bridge, you can park on either the downtown side here or on the Mount Pleasant side in Memorial Waterfront Park. To learn more about the Arthur Ravenel Jr. Bridge and other fun things to do in Charleston, South Carolina. Visit our website at travelerofcharleston.com or download our free mobile app, Exploring Charleston SC.

Saudi Arabia, Fiji, Tonga, Hong Kong and Bahrain. All these countries are using Australian medical coding. For example, if you want to go to New Zealand, if you know Australian medical coding, you can go New Zealand easily as a medical coder or clinical coder. So for example, if you want to go Singapore, but in Singapore they will use both ICD-10-CM and CPT and as well as Australian medical coding. So now we can see how can I search job in Australia.

The flyovers by U.S. warplanes off North Korea's east coast over the weekend were an agreed move between South Korea and the United States. Two B-1B Lancer bombers from Andersen Air Force Base in Guam and six F-15C air superiority fighter jets from Okinawa's Kadena Air Base flew in international airspace off North Korea's east coast for approximately 3 hours from Saturday night until early Sunday morning.

Powering the world’s most trusted Voice AI products

Your product experience is only as good as the foundation that powers it. Make sure you build on the best.

The accuracy and quality that Voice AI apps require

Our speech-to-text models redefine what quality means for Voice AI by delivering transcripts that are consistently trustworthy and built for real-world performance.

- Capture meaning, not just sound, with contextual prompting across every conversation

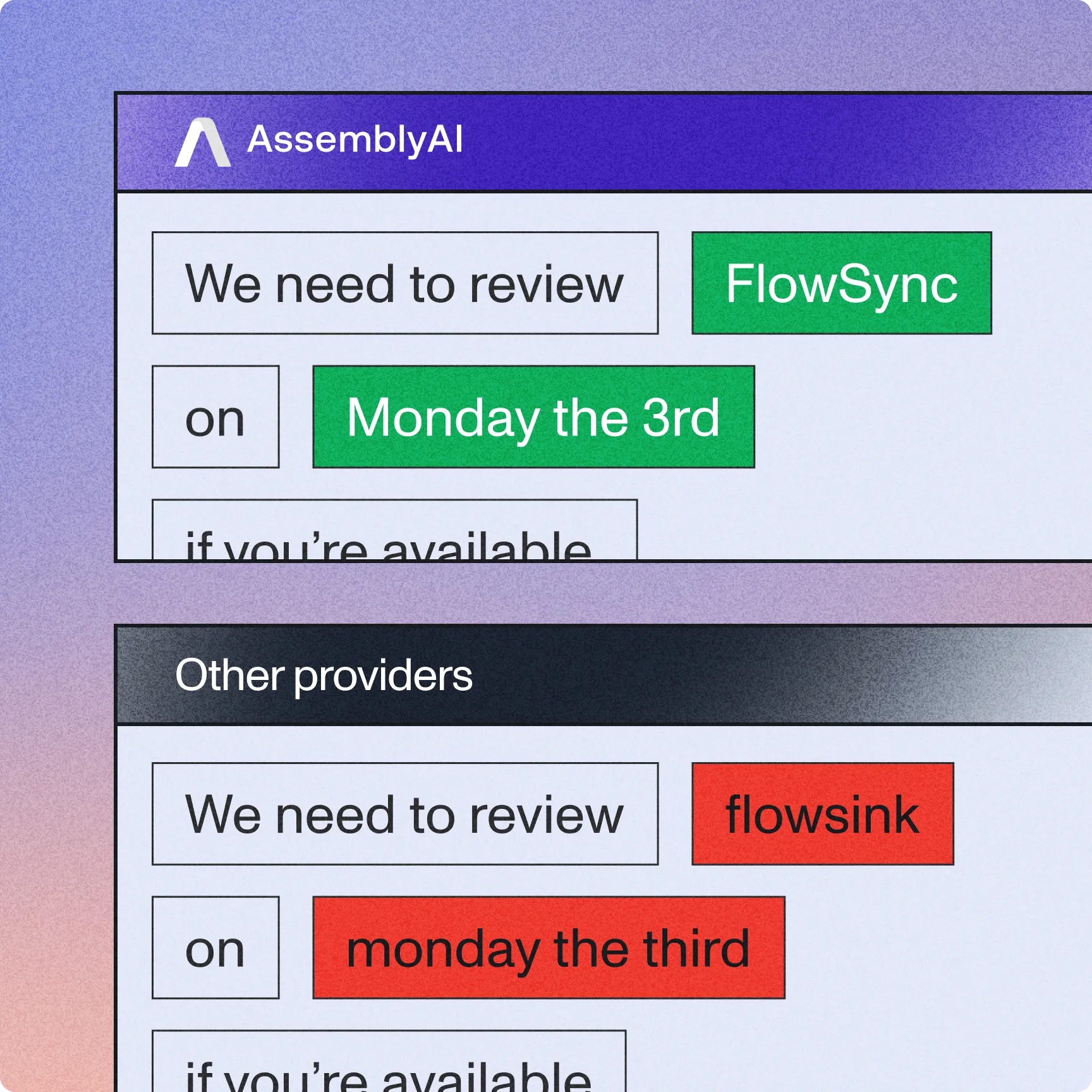

- 57% better recognition of key terms like names, codes, and medical terms

- 64% reduction in speaker counting errors compared to competitors

- 1500+ word context-aware prompting for domain expertise compared to other leading speech-to-text models

Don't just transcribe speech, understand it

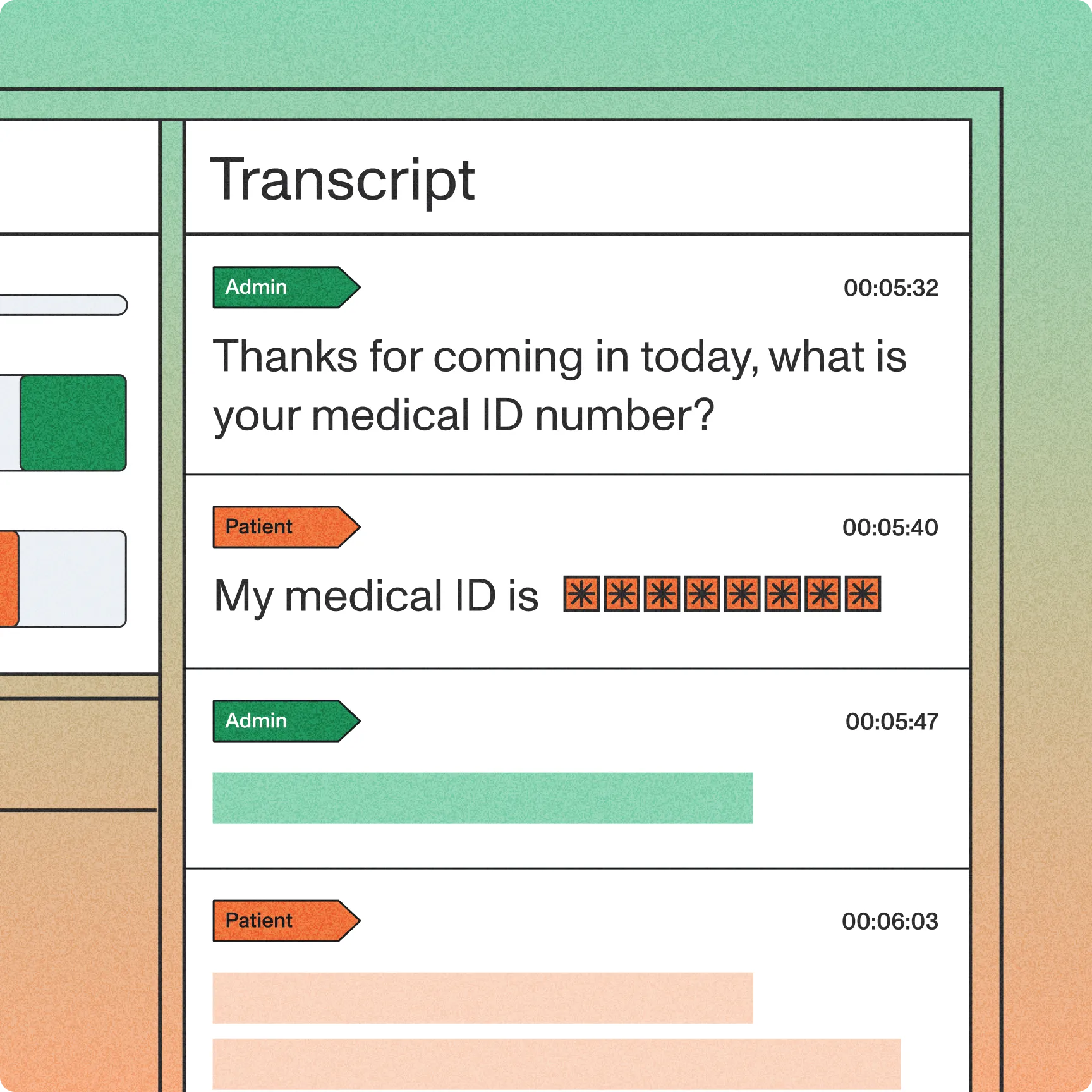

Analyze dialog flow and speaker relationships to capture who is speaking, what they mean, and why it matters.

- Track dialogue and speaker context across turns for natural comprehension, labeling speakers by name or role

- Resolve ambiguity automatically by distinguishing between similar terms and acronyms

- Capture non-speech audio events, tone, corrections, and implied meaning to preserve intent

- Ensure reliable performance in overlapping, multi-speaker, and noisy environments

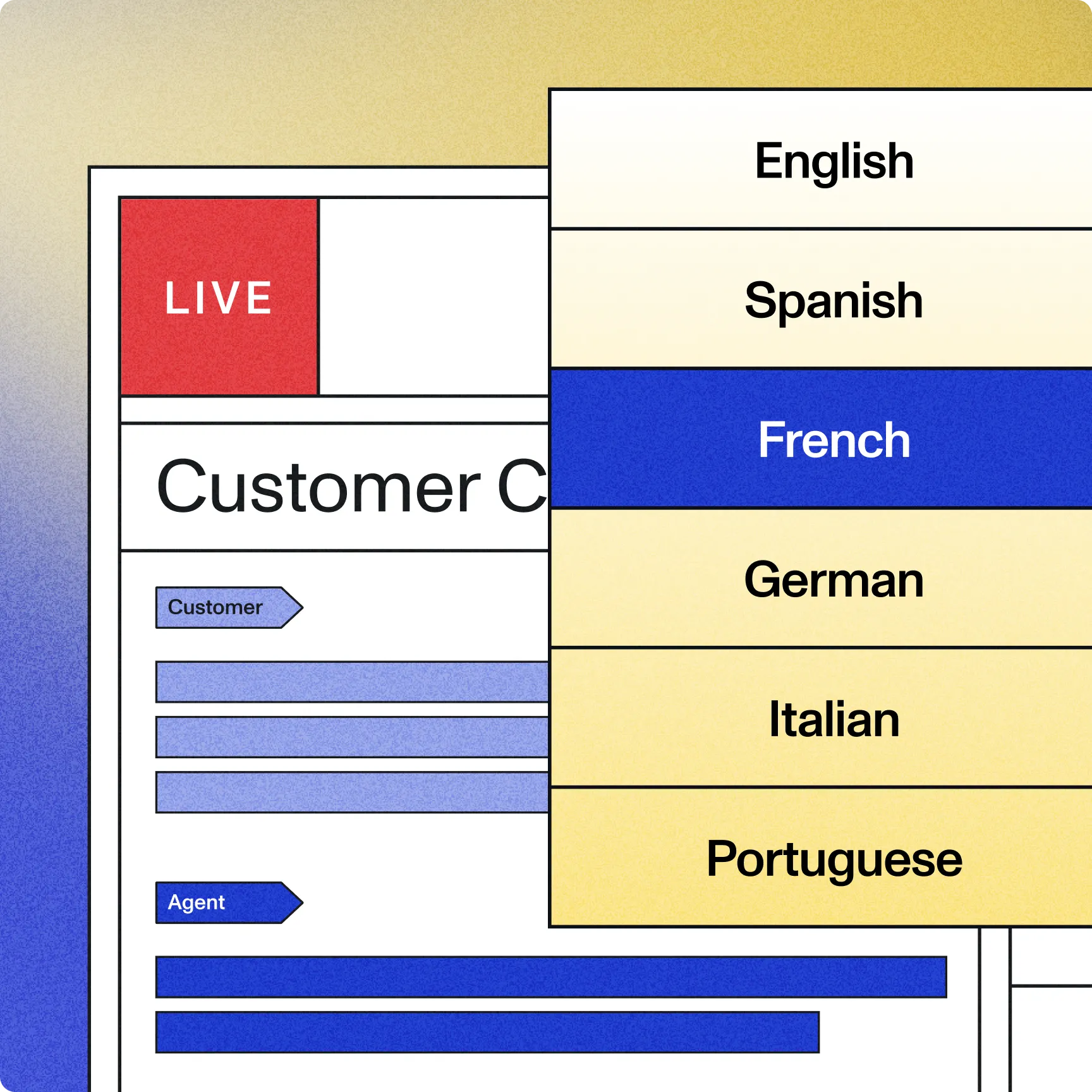

Reliable performance across the globe

We speak your customers' language, so you can serve a global customer base.

- 99-language support with automatic detection and code-switching between English and other languages

- Adapt to regional accents, dialects, and cultural expressions

- Language-aware formatting for global date, number, and punctuation standards

- Handle medical, legal, and technical terms easily with specialized vocab and use-case specific prompting

A single platform for all things Voice AI

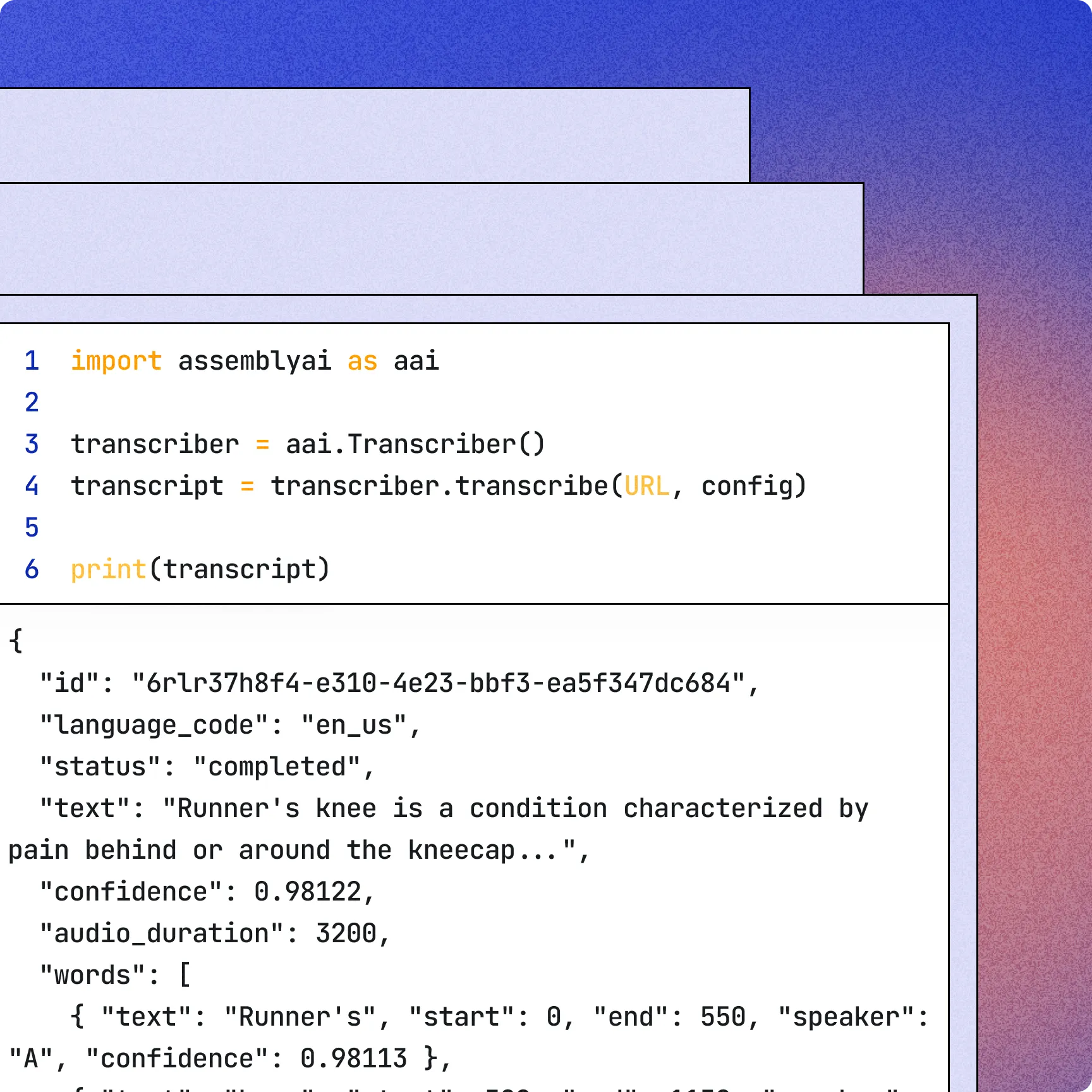

Innovate, ship, and scale faster than ever, all on a developer-first API.

- Unified speech-to-intelligence pipeline through LLM Gateway

- Seamlessly integrate with leading LLMs, including OpenAI, Anthropic, and Google

- Reliable, enterprise-grade infrastructure with zero rate limits

- No-contract, usage-based pricing that's built to scale with you

Capturing speech is where it starts. Creating outcomes is where it counts.

Learn why today’s most innovative companies choose us.

Reduction in customer complaints and support tickets

Conversion rate for their Conversational Intelligence product

Jiminny scored 15% higher customer win rates after implementing AssemblyAI.

Assembly is instrumental in our transcription process, providing crucial input for our LLM API to process further. It's become an integral part of our workflow.

Designed for voice experiences that feel more intuitive and responsive

International Language Support

Transcribe 99+ languages and counting, including Global English (English and all of its accents).

Speaker Diarization

Detect multiple speakers in an audio file and what each speaker said. Receive a list of utterances, where each utterance corresponds to an uninterrupted segment of speech from a single speaker.
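To make the idea of an utterance list concrete, here is a minimal sketch that groups speaker-tagged words into uninterrupted per-speaker segments. The `Word` shape and the `group_utterances` helper are assumptions made for illustration only; they are not the API's actual response schema.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass
class Word:
    text: str
    speaker: str  # hypothetical speaker label, e.g. "A" or "B"


def group_utterances(words):
    """Merge consecutive words from the same speaker into one utterance."""
    utterances = []
    for speaker, run in groupby(words, key=lambda w: w.speaker):
        utterances.append({"speaker": speaker, "text": " ".join(w.text for w in run)})
    return utterances


# Illustrative input: a short exchange between two speakers.
words = [
    Word("Hi", "A"), Word("there.", "A"),
    Word("Hello!", "B"),
    Word("How", "A"), Word("are", "A"), Word("you?", "A"),
]
for u in group_utterances(words):
    print(f"Speaker {u['speaker']}: {u['text']}")
```

Each change of speaker starts a new utterance, so the same speaker can appear several times in the list.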

Automatic Language Detection

Identify the dominant language spoken in an audio file and use it during the transcription.

Word Timings

Receive a response that also includes an array with information about each word, including its start and end timings.
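One common use for word timings is building captions. The sketch below turns a word array into a single caption cue with an SRT-style time range. The field names (`text`, `start`, `end`) and the millisecond units are assumptions for this example, so check the response format before relying on them.

```python
def format_timestamp(ms):
    """Convert milliseconds to an HH:MM:SS,mmm caption timestamp."""
    hours, rem = divmod(ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    seconds, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d},{millis:03d}"


def caption_line(words):
    """Build one caption cue spanning the first word's start to the last word's end."""
    start = format_timestamp(words[0]["start"])
    end = format_timestamp(words[-1]["end"])
    text = " ".join(w["text"] for w in words)
    return f"{start} --> {end}\n{text}"


# Hypothetical word array; field names and millisecond units are assumptions.
words = [
    {"text": "Welcome", "start": 250, "end": 650},
    {"text": "to", "start": 650, "end": 780},
    {"text": "Traveler", "start": 780, "end": 1240},
    {"text": "TV.", "start": 1240, "end": 1710},
]
print(caption_line(words))
```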

Auto Punctuation and Casing

Automatically add punctuation and casing, including capitalization of proper nouns, to the transcription text.

Fewer correction loops and smoother conversations

| Model | Overall | Alphanumerics | Proper Nouns |
|---|---|---|---|
| AssemblyAI Universal | 94.1% | 4.00% | 13.87% |
| Deepgram Nova-3 | 92.1% | 4.97% | 21.14% |

AssemblyAI's accuracy is better than any other tools in the market (and we have tried them all).

Frequently Asked Questions

A speech-to-text API is a developer interface that turns audio into text. Your app sends an audio file or live stream to an endpoint and receives a transcript, often with word timestamps, speaker labels, and confidence scores. Many providers support both batch and real-time transcription for integrating captions, notes, or analytics into products.

AssemblyAI’s Universal model leads our published benchmarks with a 93.3% Word Accuracy Rate and delivers near‑human accuracy, even on noisy or challenging audio.
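Word accuracy is commonly reported as one minus the word error rate (WER), where WER counts word substitutions, deletions, and insertions against a reference transcript. Assuming that definition applies here, the sketch below shows how such a score could be computed for a single sentence. The lowercasing and whitespace tokenization are simplifications chosen for this example, not the benchmark's actual normalization.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


reference = "it is currently the longest cable-stayed bridge in the western hemisphere"
hypothesis = "it is currently the longest cable stay bridge in the western hemisphere"
wer = word_error_rate(reference, hypothesis)
print(f"WER: {wer:.1%}, word accuracy: {1 - wer:.1%}")
```

Here the hypothesis splits one reference word into two, which counts as one substitution plus one insertion against an 11-word reference.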

Yes. For pre-recorded audio, AssemblyAI detects and transcribes code-switching, with best results for English+Spanish or English+German. Configure via language_codes (max two; one must be “en”) or enable language_detection with code_switching and an optional confidence threshold.
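The two configuration paths described above can be sketched as a small helper that builds request options and enforces the stated limits. The flat dictionary layout and the `confidence_threshold` key are assumptions for illustration; only `language_codes`, `language_detection`, and `code_switching` are named on this page, and the real request schema may nest or name them differently.

```python
def code_switching_options(language_codes=None, confidence_threshold=None):
    """Return request options for one of the two code-switching setups."""
    if language_codes is not None:
        # Explicit language pair: at most two codes, one of which must be "en".
        if len(language_codes) > 2:
            raise ValueError("language_codes accepts at most two languages")
        if "en" not in language_codes:
            raise ValueError('one of the language_codes must be "en"')
        return {"language_codes": list(language_codes)}

    # Otherwise rely on automatic detection with code-switching enabled.
    options = {"language_detection": True, "code_switching": True}
    if confidence_threshold is not None:
        # Hypothetical key name; the page only mentions "an optional confidence threshold".
        options["confidence_threshold"] = confidence_threshold
    return options


print(code_switching_options(["en", "es"]))
print(code_switching_options(confidence_threshold=0.5))
```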

Yes. AssemblyAI offers real-time Streaming Speech-to-Text via a secure WebSocket API, returning partial and final transcripts within a few hundred milliseconds. It’s optimized for ultra-low latency (~300 ms P50) and supports use cases like live captioning and voice agents.

Sign up and get your API key in the Dashboard. Install an SDK (e.g., JavaScript). Initialize the client with your key, then call transcribe with your audio. For streaming/voice agents, set ASSEMBLYAI_API_KEY in your environment. Create keys in the Dashboard’s API Keys section.

Pay‑as‑you‑go: $0.15/hr for Universal (pre‑recorded and streaming); $0.27/hr for Slam‑1 (pre‑recorded). Billed per second. Optional Speech Understanding features are priced separately (e.g., Speaker Identification $0.02/hr).
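For a rough sense of how per-second billing adds up, here is a small estimate based on the hourly rates quoted above. The `estimate_cost` helper is hypothetical, and how fractional cents are rounded on an actual invoice is not stated here, so the result is left unrounded.

```python
from decimal import Decimal

# Hourly rates in USD, taken from the pricing answer above.
HOURLY_RATES = {
    "universal": Decimal("0.15"),
    "slam-1": Decimal("0.27"),
}
SPEAKER_IDENTIFICATION_RATE = Decimal("0.02")


def estimate_cost(model, audio_seconds, speaker_identification=False):
    """Estimate the cost in USD for a given audio duration, billed per second."""
    rate = HOURLY_RATES[model]
    if speaker_identification:
        rate += SPEAKER_IDENTIFICATION_RATE
    return rate * audio_seconds / Decimal(3600)


# 90 minutes of pre-recorded audio on Universal.
print(f"${estimate_cost('universal', 90 * 60):.3f}")  # prints $0.225
```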

Yes. AssemblyAI integrates with LLMs via LLM Gateway—a single API to OpenAI (GPT), Anthropic (Claude), Google (Gemini), and more. You can route transcripts directly to these models, switch providers without code changes, and use chat completions, multi-turn, tool calling, and agentic workflows, with unified management and billing.

Unlock the value of voice data

Build what’s next on the platform powering thousands of the industry’s leading Voice AI apps.